PyTorch __init__, forward and __call__: a summary

1. Introduction

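When building networks in PyTorch, we run into a situation similar to the one in TensorFlow (see my earlier post "A summary of TensorFlow __init__, build and call"): the three methods __init__, forward and __call__ are constantly used together. So what exactly is the difference between them?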

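1) __init__ is mainly used for setup: it creates the layers (submodules) the network needs, which also creates, initializes and registers their parameters. For example, the convolution layers and their settings (channels, kernel size, stride, padding) go here. It must call the parent constructor (super().__init__()) before any layer is assigned. This matches how __init__ is used in TF.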

2) forward defines the forward pass: the order in which the layers created in __init__ are applied to the input.

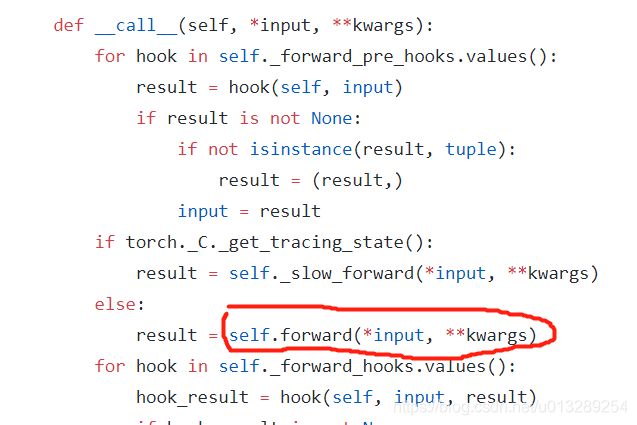

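3) __call__ appears to do the same thing as forward, so it is sometimes overridden in place of forward when building a network. Where is the real difference? nn.Module already implements __call__, and that implementation calls forward: when a built network is invoked as model(x), nn.Module.__call__ runs any registered forward pre-hooks, calls forward, and then runs any registered forward hooks. In other words, __call__ is a wrapper around forward, which is why the two look alike. See the implementation in nn.Module: https://github.com/pytorch/pytorch/blob/master/torch/nn/modules/module.py. This also means that overriding __call__ in a subclass replaces that wrapper: model(x) still runs the layers, but hooks registered on the module are skipped and model.forward is left unimplemented. The usual pattern is therefore to define forward and call the model as model(x).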
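A minimal sketch of that call chain, using a hypothetical `Tiny` module that is not part of the original post: `__init__` runs once when the object is constructed (and registers the parameters of the `nn.Linear` layer it creates), `model(x)` goes through `nn.Module.__call__`, which calls `forward` and then any hook added with `register_forward_hook`, while calling `model.forward(x)` directly skips the hook.

```python
import torch
import torch.nn as nn

calls = []  # records the order in which things run


class Tiny(nn.Module):  # hypothetical example module
    def __init__(self):
        super().__init__()  # must run before any submodule is assigned
        calls.append("__init__")
        self.fc = nn.Linear(4, 2)  # registered as a submodule, so its parameters are tracked

    def forward(self, x):
        calls.append("forward")
        return self.fc(x)


model = Tiny()
# A forward hook only fires when the module is invoked through nn.Module.__call__
model.register_forward_hook(lambda module, inputs, output: calls.append("hook"))

x = torch.randn(3, 4)
y1 = model(x)          # nn.Module.__call__ -> forward -> hook
y2 = model.forward(x)  # forward only; the hook is skipped

print(calls)                          # ['__init__', 'forward', 'hook', 'forward']
print(len(list(model.parameters())))  # 2 (weight and bias of fc)
```

The `calls` list exists only to make the execution order visible; normal code just calls `model(x)`.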

2. Code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self, in_channels, mid_channels, out_channels):
        super(Net, self).__init__()  # must run before any layer is assigned
        # Layers are created once here; their parameters are registered with the module
        self.conv0 = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels, mid_channels, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            torch.nn.LeakyReLU())
        self.conv1 = torch.nn.Sequential(
            torch.nn.Conv2d(mid_channels, out_channels * 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))

    def forward(self, x):
        # Invoked by nn.Module.__call__ when the network is called as net(x)
        x = self.conv0(x)
        x = self.conv1(x)
        return x
```
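A quick usage check for the `forward` version, repeating the class so the snippet runs on its own. The sizes (`in_channels=3`, `mid_channels=16`, `out_channels=8`, a 2 x 3 x 32 x 32 input batch) are illustrative values chosen here, not from the original post. Since both convolutions use a 3x3 kernel with stride 1 and padding 1, height and width are preserved and the output has `out_channels * 2` channels. A forward hook registered on `net` fires because the call goes through `nn.Module.__call__`.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self, in_channels, mid_channels, out_channels):
        super(Net, self).__init__()
        self.conv0 = nn.Sequential(
            nn.Conv2d(in_channels, mid_channels, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.LeakyReLU())
        self.conv1 = nn.Sequential(
            nn.Conv2d(mid_channels, out_channels * 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))

    def forward(self, x):
        x = self.conv0(x)
        x = self.conv1(x)
        return x


net = Net(in_channels=3, mid_channels=16, out_channels=8)
x = torch.randn(2, 3, 32, 32)  # illustrative batch: N=2, C=3, H=W=32
out = net(x)  # nn.Module.__call__ dispatches to forward
print(out.shape)  # torch.Size([2, 16, 32, 32])

# The hook on net fires because net(x) goes through nn.Module.__call__
seen = []
handle = net.register_forward_hook(lambda m, inp, outp: seen.append(tuple(outp.shape)))
net(x)
handle.remove()
print(seen)  # [(2, 16, 32, 32)]
```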

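```python
# Alternative: the same network with __call__ overridden instead of forward.
# net(x) still runs the layers, but it bypasses nn.Module.__call__, so forward
# hooks registered on Net do not fire, and Net.forward is left unimplemented.
class Net(nn.Module):
    def __init__(self, in_channels, mid_channels, out_channels):
        super(Net, self).__init__()
        self.conv0 = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels, mid_channels, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            torch.nn.LeakyReLU())
        self.conv1 = torch.nn.Sequential(
            torch.nn.Conv2d(mid_channels, out_channels * 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))

    def __call__(self, x):
        x = self.conv0(x)
        x = self.conv1(x)
        return x
```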
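For contrast, a sketch of the `__call__` version, renamed here to the hypothetical `NetCall` so the snippet is self-contained. The layers still run when it is called, but a forward hook registered on the module never fires and `forward` raises `NotImplementedError`, because the subclass's `__call__` replaces the `nn.Module.__call__` that would otherwise run the hooks and dispatch to `forward`. Input sizes are again illustrative.

```python
import torch
import torch.nn as nn


class NetCall(nn.Module):  # hypothetical name; same layers as the __call__ version above
    def __init__(self, in_channels, mid_channels, out_channels):
        super().__init__()
        self.conv0 = nn.Sequential(
            nn.Conv2d(in_channels, mid_channels, kernel_size=3, stride=1, padding=1),
            nn.LeakyReLU())
        self.conv1 = nn.Sequential(
            nn.Conv2d(mid_channels, out_channels * 2, kernel_size=3, stride=1, padding=1))

    def __call__(self, x):  # overrides nn.Module.__call__; no forward is defined
        x = self.conv0(x)
        x = self.conv1(x)
        return x


net = NetCall(3, 16, 8)
x = torch.randn(2, 3, 32, 32)

seen = []
net.register_forward_hook(lambda m, inp, outp: seen.append("hook"))
out = net(x)  # runs NetCall.__call__ directly
print(out.shape)  # torch.Size([2, 16, 32, 32])
print(seen)       # [] : the hook registered on net never fired

# nn.Module's default forward raises NotImplementedError when a subclass does not define one
forward_missing = False
try:
    net.forward(x)
except NotImplementedError:
    forward_missing = True
print(forward_missing)  # True
```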