AlexNet

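I. Introduction to AlexNet

AlexNet is the first deep convolutional neural network in the true sense, and it won the ImageNet competition in 2012. Compared with LeNet, AlexNet is deeper, has more parameters, and is more widely applicable. To improve performance, it introduced ReLU, Dropout, and LRN, and it used two GPUs to speed up training.

II. AlexNet network structure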

![]()

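The figure above is the network structure given in the authors' paper. Compared with LeNet, there are several changes:

(1) The input changes from a single channel to three channels (LeNet can also be adapted to three channels);

(2) Two GPUs are used to speed up training;

(3) ReLU is used as the activation function;

(4) Dropout is used to prevent overfitting;

(5) Max pooling (maxpooling) replaces average pooling (avgpooling);

(6) An LRN (local response normalization) layer is added;

(7) Data augmentation is used to enlarge the training set;

(8) Three more convolutional layers are added.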
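Item (6) is probably the least familiar of these changes, and the model code later in this post leaves LRN out. As a rough illustration only, the sketch below applies the normalization described in the AlexNet paper to the activations at a single spatial position, in plain Python. The function name `lrn` and the sample activations are made up for this example; the defaults (n=5, k=2, alpha=1e-4, beta=0.75) are the values reported in the paper.

```python
def lrn(channels, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Local response normalization across channels at one spatial position.

    Each activation is divided by a term built from the squared activations
    of up to n neighbouring channels (the window is clipped at the edges).
    """
    total = len(channels)
    out = []
    for i, a in enumerate(channels):
        lo = max(0, i - n // 2)
        hi = min(total - 1, i + n // 2)
        sq_sum = sum(channels[j] ** 2 for j in range(lo, hi + 1))
        out.append(a / (k + alpha * sq_sum) ** beta)
    return out


# hypothetical activations of six channels at the same (x, y) position
activations = [1.0, 50.0, 2.0, 0.5, 3.0, 0.0]
normalised = lrn(activations)
for before, after in zip(activations, normalised):
    print(f"{before:6.2f} -> {after:.4f}")
```

Channels that sit next to a very large activation are damped slightly more than isolated ones, which is the "competition between neighbouring channels" effect the layer is meant to provide.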

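The output size of each layer can be computed from its input size, kernel size, stride, and padding.

The layer-by-layer size changes are listed in reference [1].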
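The formula itself did not survive in this copy of the post. A common form of the convolution/pooling arithmetic is floor((W - F + 2P) / S) + 1, where W is the input size, F the kernel size, P the padding and S the stride. The sketch below assumes that form (it may not be the exact expression the author showed) and applies it to the kernel, stride, and padding values used in the model code of this post; `out_size` and the layer names are illustrative.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for the feature extractor, in order
layers = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]

size = 224  # input images are 224 x 224
sizes = []
for name, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name}: {size} x {size}")

# with 128 channels after the last pooling layer, the flattened vector has:
flat_features = 128 * sizes[-1] * sizes[-1]
print("flattened features:", flat_features)
```

The last value is the input width of the first fully connected layer, `128 * 6 * 6`.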

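III. Implementing the model

The theory is not covered in detail here; we go straight to how the code is put together.

Building the network:

The network is built following the figure above, which also compares it with LeNet.

To shorten training time, the number of channels in every convolutional layer is halved.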
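To get a feel for what halving the channels saves, the sketch below counts weights and biases arithmetically. It assumes the full-width network uses 96/256/384/384/256 convolution channels and 4096-unit fully connected layers with ordinary (ungrouped) convolutions, and that the halved version also halves the fully connected width to 2048, as the model code in this post does. The helper names are hypothetical.

```python
def conv_params(c_in, c_out, kernel):
    """Weights plus biases of a 2-D convolution with a square kernel."""
    return c_in * c_out * kernel * kernel + c_out


def fc_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def alexnet_params(width=1.0, num_classes=1000):
    """Parameter count for a single-tower AlexNet scaled by `width` (an assumption)."""
    c = [int(x * width) for x in (96, 256, 384, 384, 256)]
    fc = int(4096 * width)
    total = conv_params(3, c[0], 11)
    total += conv_params(c[0], c[1], 5)
    total += conv_params(c[1], c[2], 3)
    total += conv_params(c[2], c[3], 3)
    total += conv_params(c[3], c[4], 3)
    total += fc_params(c[4] * 6 * 6, fc)   # 6 x 6 feature map after the last pooling
    total += fc_params(fc, fc)
    total += fc_params(fc, num_classes)
    return total


full = alexnet_params(1.0)
halved = alexnet_params(0.5)
print(f"full width:   {full:,} parameters")
print(f"halved width: {halved:,} parameters")
print(f"ratio: {full / halved:.2f}x")
```

Under these assumptions the halved network has roughly a quarter of the parameters, because most weights sit in layers whose input and output widths are both halved.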

    import torch.nn as nn
    import torch


    class AlexNet(nn.Module):
        def __init__(self, num_classes=1000, init_weights=False):
            super(AlexNet, self).__init__()
            # convolutional feature extractor (channel counts halved)
            self.features = nn.Sequential(
                nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),  # input[3, 224, 224] output[48, 55, 55]
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),                  # output[48, 27, 27]
                nn.Conv2d(48, 128, kernel_size=5, padding=2),           # output[128, 27, 27]
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 13, 13]
                nn.Conv2d(128, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
                nn.ReLU(inplace=True),
                nn.Conv2d(192, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
                nn.ReLU(inplace=True),
                nn.Conv2d(192, 128, kernel_size=3, padding=1),          # output[128, 13, 13]
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 6, 6]
            )
            # fully connected classifier with Dropout before the first two Linear layers
            self.classifier = nn.Sequential(
                nn.Dropout(p=0.5),
                nn.Linear(128 * 6 * 6, 2048),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),
                nn.Linear(2048, 2048),
                nn.ReLU(inplace=True),
                nn.Linear(2048, num_classes),
            )
            if init_weights:
                self._initialize_weights()

        def forward(self, x):
            x = self.features(x)
            x = torch.flatten(x, start_dim=1)  # keep the batch dimension, flatten the rest
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)

Print the network structure.

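    net = AlexNet(num_classes=10)  # AlexNet is a class, so it has to be instantiated first
    X = torch.randn(1, 3, 224, 224)
    for layer in [*net.features, nn.Flatten(), *net.classifier]:
        X = layer(X)
        print(layer.__class__.__name__, 'output shape:\t', X.shape)

The output is shown below. It appears to have been recorded from an `nn.Sequential` variant of the same network with 10 output classes, in which each Dropout follows its ReLU; with the class defined above, the Dropout lines come before the first two Linear lines instead, and the Linear and ReLU shapes are the same.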

    Conv2d output shape: torch.Size([1, 48, 55, 55])
    ReLU output shape: torch.Size([1, 48, 55, 55])
    MaxPool2d output shape: torch.Size([1, 48, 27, 27])
    Conv2d output shape: torch.Size([1, 128, 27, 27])
    ReLU output shape: torch.Size([1, 128, 27, 27])
    MaxPool2d output shape: torch.Size([1, 128, 13, 13])
    Conv2d output shape: torch.Size([1, 192, 13, 13])
    ReLU output shape: torch.Size([1, 192, 13, 13])
    Conv2d output shape: torch.Size([1, 192, 13, 13])
    ReLU output shape: torch.Size([1, 192, 13, 13])
    Conv2d output shape: torch.Size([1, 128, 13, 13])
    ReLU output shape: torch.Size([1, 128, 13, 13])
    MaxPool2d output shape: torch.Size([1, 128, 6, 6])
    Flatten output shape: torch.Size([1, 4608])
    Linear output shape: torch.Size([1, 2048])
    ReLU output shape: torch.Size([1, 2048])
    Dropout output shape: torch.Size([1, 2048])
    Linear output shape: torch.Size([1, 2048])
    ReLU output shape: torch.Size([1, 2048])
    Dropout output shape: torch.Size([1, 2048])
    Linear output shape: torch.Size([1, 10])

Dataset visualization: load the dataset and display 4 images.

    import os
    import sys
    import json
    import torch
    import torch.nn as nn
    from torchvision import transforms, datasets, utils
    import matplotlib.pyplot as plt
    import numpy as np
    import torch.optim as optim
    from tqdm import tqdm
    from model import AlexNet

    # font settings from the original script; only needed when plot labels contain Chinese text
    plt.rcParams['font.sans-serif'] = ['SimHei']
    plt.rcParams['axes.unicode_minus'] = False


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("using {} device.".format(device))

        data_transform = {
            "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                         transforms.RandomHorizontalFlip(),
                                         transforms.ToTensor(),
                                         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
            "val": transforms.Compose([transforms.Resize((224, 224)),  # cannot 224, must (224, 224)
                                       transforms.ToTensor(),
                                       transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

        data_root = os.path.abspath(os.path.join(os.getcwd(), "../"))  # get data root path
        image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
        assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
        train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                             transform=data_transform["train"])
        train_num = len(train_dataset)

        # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
        flower_list = train_dataset.class_to_idx
        cla_dict = dict((val, key) for key, val in flower_list.items())  # invert the mapping: {0: 'daisy', ...}
        # write dict into json file
        json_str = json.dumps(cla_dict, indent=4)
        with open('class_indices.json', 'w') as json_file:
            json_file.write(json_str)

        batch_size = 64
        nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
        print('Using {} dataloader workers every process'.format(nw))

        train_loader = torch.utils.data.DataLoader(train_dataset,
                                                   batch_size=batch_size, shuffle=True,
                                                   num_workers=0)  # 0 = load data in the main process

        validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                                transform=data_transform["val"])
        val_num = len(validate_dataset)
        # for visualization, the validate_loader batch_size is set to 4 so the images fit in one figure
        validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                      batch_size=4, shuffle=False,
                                                      num_workers=0)

        print("using {} images for training, {} images for validation.".format(train_num,
                                                                               val_num))

        test_data_iter = iter(validate_loader)
        test_image, test_label = next(test_data_iter)  # iterator.next() is not available in recent PyTorch

        def imshow(img):
            img = img / 2 + 0.5  # unnormalize
            npimg = img.numpy()
            plt.imshow(np.transpose(npimg, (1, 2, 0)))  # [C, H, W] -> [H, W, C]
            plt.show()

        print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))
        imshow(utils.make_grid(test_image))


    if __name__ == '__main__':
        main()

Running the script prints the class names of four validation images and displays them side by side in one figure.
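The `img / 2 + 0.5` step in `imshow` undoes `transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))`: normalization maps pixel values from [0, 1] to [-1, 1] via (x - mean) / std, and the display code maps them back. A minimal plain-Python sketch of that round trip, with made-up pixel values and illustrative function names:

```python
def normalize(x, mean=0.5, std=0.5):
    """Per-channel normalization as applied to a pixel value in [0, 1]."""
    return (x - mean) / std


def unnormalize(y):
    """Inverse used before plotting: y * std + mean with mean = std = 0.5."""
    return y / 2 + 0.5


pixels = [0.0, 0.25, 0.5, 1.0]             # hypothetical pixel intensities after ToTensor()
normalized = [normalize(p) for p in pixels]
restored = [unnormalize(v) for v in normalized]

print("normalized:", normalized)
print("restored:  ", restored)
```

If a different mean or std were used in the transform, the inverse in `imshow` would have to change accordingly.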

Complete training script:

    import os
    import sys
    import json
    import torch
    import torch.nn as nn
    from torchvision import transforms, datasets, utils
    import matplotlib.pyplot as plt
    import numpy as np
    import torch.optim as optim
    from tqdm import tqdm
    from model import AlexNet

    # font settings from the original script; only needed when plot labels contain Chinese text
    plt.rcParams['font.sans-serif'] = ['SimHei']
    plt.rcParams['axes.unicode_minus'] = False


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("using {} device.".format(device))

        data_transform = {
            "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                         transforms.RandomHorizontalFlip(),
                                         transforms.ToTensor(),
                                         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
            "val": transforms.Compose([transforms.Resize((224, 224)),  # cannot 224, must (224, 224)
                                       transforms.ToTensor(),
                                       transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

        data_root = os.path.abspath(os.path.join(os.getcwd(), "../"))  # get data root path
        image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
        assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
        train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                             transform=data_transform["train"])
        train_num = len(train_dataset)

        # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
        flower_list = train_dataset.class_to_idx
        cla_dict = dict((val, key) for key, val in flower_list.items())  # invert the mapping: {0: 'daisy', ...}
        # write dict into json file
        json_str = json.dumps(cla_dict, indent=4)
        with open('class_indices.json', 'w') as json_file:
            json_file.write(json_str)

        batch_size = 64
        nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
        print('Using {} dataloader workers every process'.format(nw))

        train_loader = torch.utils.data.DataLoader(train_dataset,
                                                   batch_size=batch_size, shuffle=True,
                                                   num_workers=0)  # 0 = load data in the main process

        validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                                transform=data_transform["val"])
        val_num = len(validate_dataset)
        validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                      batch_size=4, shuffle=False,
                                                      num_workers=0)

        print("using {} images for training, {} images for validation.".format(train_num,
                                                                               val_num))

        # # For visualization, set the validate_loader batch_size to 4 so the images fit in one figure.
        # test_data_iter = iter(validate_loader)
        # test_image, test_label = next(test_data_iter)
        # def imshow(img):
        #     img = img / 2 + 0.5  # unnormalize
        #     npimg = img.numpy()
        #     plt.imshow(np.transpose(npimg, (1, 2, 0)))
        #     plt.show()
        # print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))
        # imshow(utils.make_grid(test_image))

        net = AlexNet(num_classes=5, init_weights=True)
        net.to(device)
        # print(net)
        loss_function = nn.CrossEntropyLoss()
        # pata = list(net.parameters())
        optimizer = optim.Adam(net.parameters(), lr=0.0002)

        epochs = 50
        save_path = './AlexNet.pth'
        best_acc = 0.0
        train_steps = len(train_loader)
        val_acc = []     # validation accuracy per epoch
        train_loss = []  # mean training loss per epoch
        for epoch in range(epochs):
            # train
            net.train()  # enable Dropout
            running_loss = 0.0
            train_bar = tqdm(train_loader, file=sys.stdout)
            for step, data in enumerate(train_bar):
                images, labels = data
                optimizer.zero_grad()
                outputs = net(images.to(device))
                loss = loss_function(outputs, labels.to(device))
                loss.backward()
                optimizer.step()

                # print statistics
                running_loss += loss.item()
                train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                         epochs,
                                                                         loss)

            # validate
            net.eval()  # disable Dropout
            acc = 0.0  # accumulate accurate number / epoch
            with torch.no_grad():
                val_bar = tqdm(validate_loader, file=sys.stdout)
                for val_data in val_bar:
                    val_images, val_labels = val_data
                    outputs = net(val_images.to(device))
                    predict_y = torch.max(outputs, dim=1)[1]  # index of the largest logit
                    acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

            val_accurate = acc / val_num
            val_acc.append(val_accurate)
            train_loss.append(running_loss / train_steps)
            print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
                  (epoch + 1, running_loss / train_steps, val_accurate))

            # keep only the weights with the best validation accuracy so far
            if val_accurate > best_acc:
                best_acc = val_accurate
                torch.save(net.state_dict(), save_path)

        print('best score is :{:.2f}'.format(best_acc))
        print('Finished Training')
        plt.plot(val_acc, '-*', label='validation accuracy')
        plt.plot(train_loss, '-o', label='training loss')
        plt.legend()
        plt.show()


    if __name__ == '__main__':
        main()
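The validation step in the training script picks the index of the largest logit for each image (`torch.max(outputs, dim=1)[1]`), counts how many indices equal the labels (`torch.eq(...).sum()`), and divides the running count by the number of validation images. The plain-Python sketch below mirrors that bookkeeping on two tiny made-up batches; the helper names and the numbers are purely illustrative.

```python
def argmax(row):
    """Index of the largest value in a list of logits."""
    return max(range(len(row)), key=row.__getitem__)


def count_correct(logits, labels):
    """Number of samples in a batch whose predicted class equals the label."""
    return sum(1 for row, label in zip(logits, labels) if argmax(row) == label)


# two hypothetical validation batches: (logits per image, true labels)
batches = [
    ([[2.0, 0.1, -1.0], [0.3, 0.2, 1.5]], [0, 2]),
    ([[0.0, 1.0, 0.5], [1.2, 0.7, 0.1]], [1, 1]),
]

correct = 0
total = 0
for logits, labels in batches:
    correct += count_correct(logits, labels)
    total += len(labels)

accuracy = correct / total
print(f"{correct} of {total} correct, accuracy = {accuracy:.2f}")
```

Accumulating the count and dividing once at the end keeps the result exact even when the last batch is smaller than the others.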

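With epochs=50, the output is:

    best score is :0.83

Judging from the curves, accuracy tends to rise as the number of epochs increases, although the validation accuracy fluctuates.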

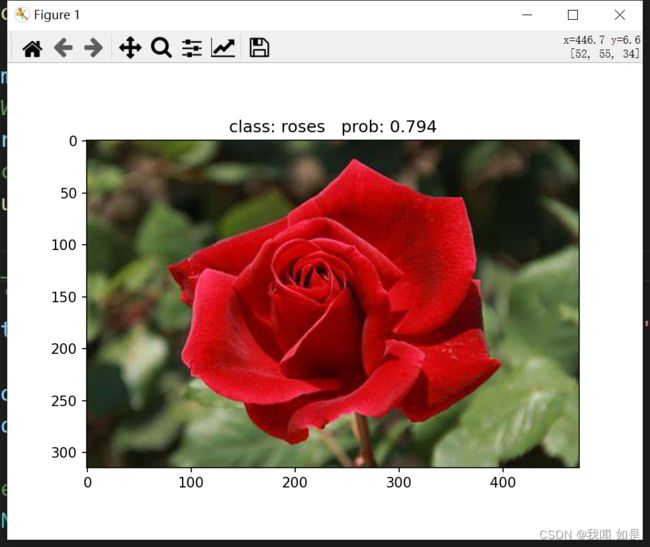

Prediction script:

    import os
    import json
    import torch
    from PIL import Image
    from torchvision import transforms
    import matplotlib.pyplot as plt
    from model import AlexNet


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

        # same preprocessing as the validation transform used in training
        data_transform = transforms.Compose(
            [transforms.Resize((224, 224)),
             transforms.ToTensor(),
             transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

        # load image
        img_path = "./rose.jpg"
        assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
        img = Image.open(img_path)
        plt.imshow(img)
        # [C, H, W]
        img = data_transform(img)
        # expand batch dimension -> [N, C, H, W]
        img = torch.unsqueeze(img, dim=0)

        # read class_indict
        json_path = './class_indices.json'
        assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)
        with open(json_path, "r") as f:
            class_indict = json.load(f)

        # create model
        model = AlexNet(num_classes=5).to(device)

        # load model weights (map_location lets GPU-trained weights load on a CPU-only machine)
        weights_path = "./AlexNet.pth"
        assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
        model.load_state_dict(torch.load(weights_path, map_location=device))

        model.eval()
        with torch.no_grad():
            # predict class
            output = torch.squeeze(model(img.to(device))).cpu()
            predict = torch.softmax(output, dim=0)
            predict_cla = torch.argmax(predict).numpy()

        print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],
                                                   predict[predict_cla].numpy())
        plt.title(print_res)
        for i in range(len(predict)):
            print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                                    predict[i].numpy()))
        plt.show()


    if __name__ == '__main__':
        main()
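One detail of the prediction script that is easy to miss is the `str(...)` around the class index. The training script writes an index-to-name dictionary to `class_indices.json`, and JSON object keys are always strings, so the integer keys come back as `"0"`, `"1"`, and so on. A small standard-library sketch of that round trip, using the five class names from this post:

```python
import json

# mapping as produced by ImageFolder.class_to_idx for the flower data set
class_to_idx = {"daisy": 0, "dandelion": 1, "roses": 2, "sunflowers": 3, "tulips": 4}

# invert it so that a predicted index can be turned back into a name
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

# simulate writing to class_indices.json and reading it back
restored = json.loads(json.dumps(idx_to_class, indent=4))

predicted_index = 2                       # hypothetical model prediction
label = restored[str(predicted_index)]    # keys are strings after the round trip
print(sorted(restored.keys()), "->", label)
```

Looking up `restored[2]` directly would raise a `KeyError`, which is why the script converts the index to a string first.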

Results:

    class: daisy prob: 0.000752
    class: dandelion prob: 0.000157
    class: roses prob: 0.794
    class: sunflowers prob: 0.000145
    class: tulips prob: 0.205

Prediction on a picture of a bunch of roses:

    class: daisy prob: 0.000572
    class: dandelion prob: 3.1e-05
    class: roses prob: 0.942
    class: sunflowers prob: 5.18e-05
    class: tulips prob: 0.0576

Test on some plastic roses:

    class: daisy prob: 0.00329
    class: dandelion prob: 0.000144
    class: roses prob: 0.91
    class: sunflowers prob: 0.000294
    class: tulips prob: 0.0866

Overall the predictions are fairly successful and meet the requirement: all three rose images are classified as roses, with tulips as the only notable runner-up.
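The probabilities printed by the prediction script come from applying softmax to the five raw network outputs (logits). As a minimal sketch, the plain-Python version below shows the computation; the logit values are invented for illustration and are not taken from the trained model.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)                         # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["daisy", "dandelion", "roses", "sunflowers", "tulips"]
logits = [-1.0, -2.5, 6.0, -2.6, 4.6]       # made-up scores for one image

probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)

for name, p in zip(classes, probs):
    print("class: {:10} prob: {:.3}".format(name, p))
print("predicted:", classes[best])
```

Because softmax is monotonic, the predicted class is the same whether the argmax is taken over the logits or over the probabilities; the probabilities are only needed for reporting confidence.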

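References:

[1] From LeNet to GoogLeNet: a layer-by-layer look at the evolution of convolutional neural networks

[2] A brief evaluation of VGG, AlexNet, LeNet, ResNet and other networks, with notes on using them

[3] Download link for the original paper: imagenet.pdf (toronto.edu)

[4] Reference video: AlexNet program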