Batch normalization

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="relu"))

    model.add(keras.layers.BatchNormalization())

model.add(keras.layers.Dense(10, activation="softmax"))
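The layer stack above puts a `BatchNormalization` layer after every hidden `Dense` layer. To illustrate roughly what such a layer computes, here is a minimal pure-Python sketch. It is not the Keras implementation, the helper names `batch_norm_train` and `batch_norm_infer` are hypothetical, and it assumes the Keras defaults `momentum=0.99` and `epsilon=1e-3`. In training mode each feature is normalized with the mini-batch mean and variance, then scaled by the learned `gamma` and shifted by the learned `beta`; the moving averages updated along the way replace the batch statistics at inference time.

```python
import math


def batch_norm_train(batch, gamma, beta, moving_mean, moving_var,
                     momentum=0.99, epsilon=1e-3):
    """Hypothetical helper: one training-mode batch-norm step.

    batch: list of rows, each row a list of feature values.
    Returns (outputs, new_moving_mean, new_moving_var).
    """
    n = len(batch)
    num_features = len(batch[0])
    # Per-feature mean and (population) variance over the mini-batch
    mean = [sum(row[j] for row in batch) / n for j in range(num_features)]
    var = [sum((row[j] - mean[j]) ** 2 for row in batch) / n
           for j in range(num_features)]
    # Normalize, then apply the learned scale (gamma) and shift (beta)
    outputs = [[gamma[j] * (row[j] - mean[j]) / math.sqrt(var[j] + epsilon) + beta[j]
                for j in range(num_features)]
               for row in batch]
    # Exponential moving averages, used instead of batch stats at inference
    new_mean = [momentum * m + (1 - momentum) * b for m, b in zip(moving_mean, mean)]
    new_var = [momentum * v + (1 - momentum) * b for v, b in zip(moving_var, var)]
    return outputs, new_mean, new_var


def batch_norm_infer(x, gamma, beta, moving_mean, moving_var, epsilon=1e-3):
    """Hypothetical helper: inference-mode batch norm for a single row."""
    return [g * (xi - m) / math.sqrt(v + epsilon) + b
            for xi, g, b, m, v in zip(x, gamma, beta, moving_mean, moving_var)]


batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
out, mm, mv = batch_norm_train(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0],
                               moving_mean=[0.0, 0.0], moving_var=[1.0, 1.0])
print(mm, mv)  # moving stats nudged 1% toward the batch stats
```

Each output column has (near) zero mean and (near) unit variance, whatever the original scale of the feature; the small `epsilon` keeps the division safe when a feature is constant within a batch.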

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:

    print(module.__name__, module.__version__)

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

print(np.max(x_train), np.min(x_train))

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(

x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_valid_scaled = scaler.transform(

x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_test_scaled = scaler.transform(

x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
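Because the images are reshaped to a single column (`reshape(-1, 1)`) before scaling, `StandardScaler` treats every pixel of every image as one feature: it learns a single mean and a single standard deviation from the training set and reuses them for the validation and test sets. The pure-Python sketch below reproduces that computation on toy data; the helper names `fit_global_scaler` and `transform` are hypothetical, and the population standard deviation (ddof=0) matches what `StandardScaler` uses.

```python
import math


def fit_global_scaler(images):
    """Hypothetical helper: one mean/std over all pixels of all images."""
    pixels = [p for img in images for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    # Population standard deviation, as StandardScaler computes it
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))
    return mean, std


def transform(images, mean, std):
    """Apply the training-set statistics to any split."""
    return [[[(p - mean) / std for p in row] for row in img] for img in images]


# Two tiny 2x2 "images" standing in for the 28x28 training set
train = [[[0, 255], [0, 255]], [[0, 255], [255, 0]]]
mean, std = fit_global_scaler(train)
print(mean, std)  # 127.5 127.5

train_scaled = transform(train, mean, std)
valid_scaled = transform([[[255, 255], [0, 0]]], mean, std)  # reuses train stats
```

Fitting only on the training split and calling `transform` on the others is what keeps validation and test statistics from leaking into preprocessing.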

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="relu"))

    model.add(keras.layers.BatchNormalization())

model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",

optimizer = "sgd",

metrics = ["accuracy"])
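`sparse_categorical_crossentropy` expects integer class labels (0 to 9 here) rather than one-hot vectors. For one sample, the loss is the negative log of the probability the softmax assigns to the true class, averaged over the batch. A minimal sketch (the function name mirrors the Keras loss string but is our own; Keras also clips probabilities, which is omitted here):

```python
import math


def sparse_categorical_crossentropy(y_true, y_prob):
    """Sketch: mean of -log(p[true class]) over a batch.

    y_true: integer labels; y_prob: per-sample lists of softmax probabilities.
    """
    losses = [-math.log(probs[label]) for label, probs in zip(y_true, y_prob)]
    return sum(losses) / len(losses)


uniform = [[0.1] * 10, [0.1] * 10]
print(sparse_categorical_crossentropy([3, 7], uniform))  # ~2.302585 (ln 10)

confident = [[0.9 if k == 3 else 0.1 / 9 for k in range(10)]]
print(sparse_categorical_crossentropy([3], confident))   # ~0.105361 (-ln 0.9)
```

A model that guessed uniformly over 10 classes would sit at ln(10) ≈ 2.30, which is a useful reference point for the loss values at the very start of the training logs.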

print(model.layers,model.summary())

logdir = "keras实战"

logdir = os.path.join(logdir,"dnn-bn-callbacks")

if not os.path.exists(logdir):

    os.makedirs(logdir)  # unlike os.mkdir, also creates the parent "keras实战" directory

output_model_file = os.path.join(logdir,"fashion_mnist_model.h5")

callbacks = [

keras.callbacks.TensorBoard(logdir),

keras.callbacks.ModelCheckpoint(output_model_file,

save_best_only = True),

]
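With `save_best_only=True` and the default `monitor="val_loss"`, `ModelCheckpoint` overwrites `fashion_mnist_model.h5` only in epochs where the validation loss improves on the best value seen so far. The loop below is a plain-Python sketch of that rule (not the Keras code), fed with the `val_loss` values from the batch-normalization training log:

```python
def epochs_saved(val_losses):
    """Sketch of the save_best_only rule: save when val_loss hits a new minimum."""
    best = float("inf")
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:  # improvement -> checkpoint file is overwritten
            best = loss
            saved.append(epoch)
    return saved, best


# val_loss per epoch, copied from the batch-normalization training log
bn_val_loss = [0.7038, 0.6182, 0.5418, 0.4731, 0.4800,
               0.4307, 0.4193, 0.4084, 0.4001, 0.3950]
print(epochs_saved(bn_val_loss))  # ([1, 2, 3, 4, 6, 7, 8, 9, 10], 0.395)
```

Epoch 5 is skipped because its `val_loss` (0.4800) is worse than epoch 4's (0.4731), so the file on disk at the end always holds the best model seen, not simply the last one.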

history = model.fit(x_train_scaled, y_train, epochs=10,

validation_data=(x_valid_scaled, y_valid),

callbacks = callbacks)

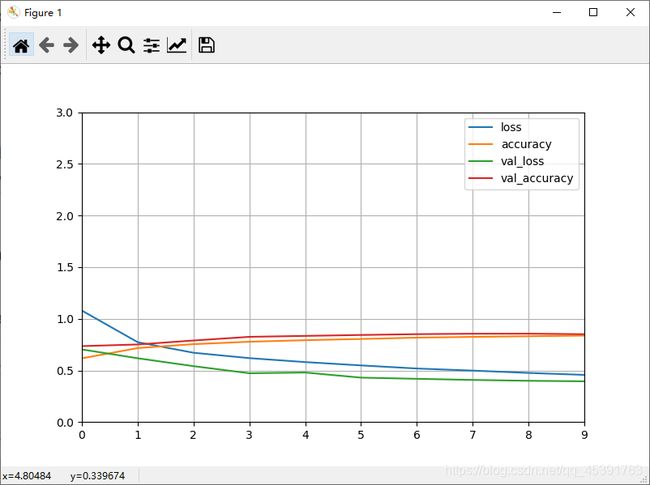

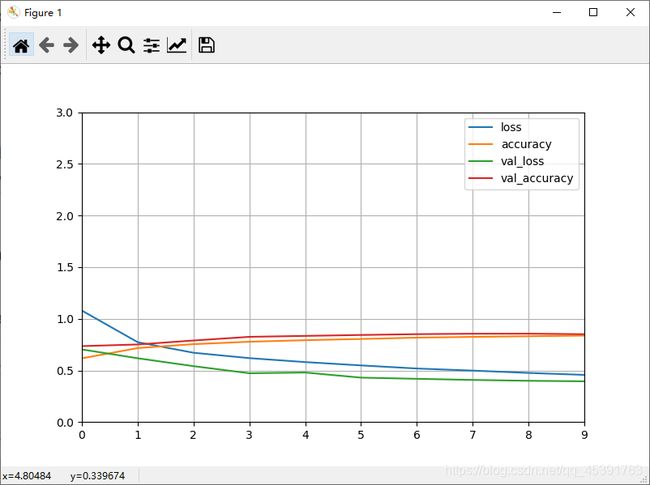

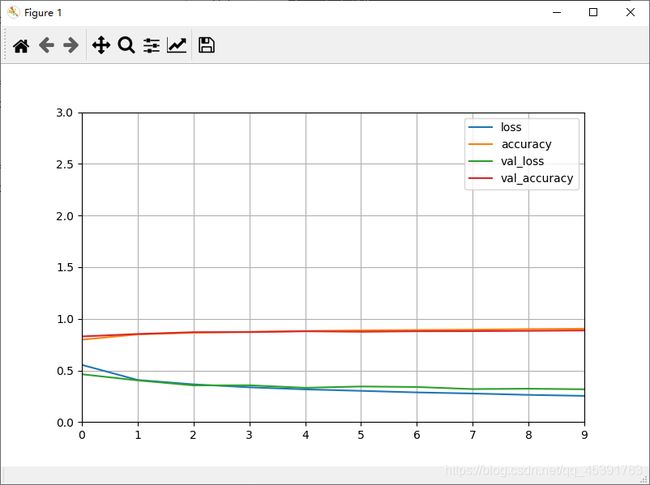

def plot_learning_curves(history):

    pd.DataFrame(history.history).plot(figsize=(8, 5))

    plt.grid(True)

    plt.gca().set_ylim(0, 3)

    plt.show()

plot_learning_curves(history)

model.evaluate(x_test_scaled,y_test)

Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| flatten (Flatten) | (None, 784) | 0 |
| dense (Dense) | (None, 100) | 78500 |
| batch_normalization (BatchNormalization) | (None, 100) | 400 |
| dense_1 (Dense) | (None, 100) | 10100 |
| batch_normalization_1 (BatchNormalization) | (None, 100) | 400 |
| dense_2 (Dense) | (None, 100) | 10100 |
| batch_normalization_2 (BatchNormalization) | (None, 100) | 400 |
| dense_3 (Dense) | (None, 100) | 10100 |
| batch_normalization_3 (BatchNormalization) | (None, 100) | 400 |
| dense_4 (Dense) | (None, 100) | 10100 |
| batch_normalization_4 (BatchNormalization) | (None, 100) | 400 |
| dense_5 (Dense) | (None, 100) | 10100 |
| batch_normalization_5 (BatchNormalization) | (None, 100) | 400 |
| dense_6 (Dense) | (None, 100) | 10100 |
| batch_normalization_6 (BatchNormalization) | (None, 100) | 400 |
| dense_7 (Dense) | (None, 100) | 10100 |
| batch_normalization_7 (BatchNormalization) | (None, 100) | 400 |
| dense_8 (Dense) | (None, 100) | 10100 |
| batch_normalization_8 (BatchNormalization) | (None, 100) | 400 |
| dense_9 (Dense) | (None, 100) | 10100 |
| batch_normalization_9 (BatchNormalization) | (None, 100) | 400 |
| dense_10 (Dense) | (None, 100) | 10100 |
| batch_normalization_10 (BatchNormalization) | (None, 100) | 400 |
| dense_11 (Dense) | (None, 100) | 10100 |
| batch_normalization_11 (BatchNormalization) | (None, 100) | 400 |
| dense_12 (Dense) | (None, 100) | 10100 |
| batch_normalization_12 (BatchNormalization) | (None, 100) | 400 |
| dense_13 (Dense) | (None, 100) | 10100 |
| batch_normalization_13 (BatchNormalization) | (None, 100) | 400 |
| dense_14 (Dense) | (None, 100) | 10100 |
| batch_normalization_14 (BatchNormalization) | (None, 100) | 400 |
| dense_15 (Dense) | (None, 100) | 10100 |
| batch_normalization_15 (BatchNormalization) | (None, 100) | 400 |
| dense_16 (Dense) | (None, 100) | 10100 |
| batch_normalization_16 (BatchNormalization) | (None, 100) | 400 |
| dense_17 (Dense) | (None, 100) | 10100 |
| batch_normalization_17 (BatchNormalization) | (None, 100) | 400 |
| dense_18 (Dense) | (None, 100) | 10100 |
| batch_normalization_18 (BatchNormalization) | (None, 100) | 400 |
| dense_19 (Dense) | (None, 100) | 10100 |
| batch_normalization_19 (BatchNormalization) | (None, 100) | 400 |
| dense_20 (Dense) | (None, 10) | 1010 |

Total params: 279,410

Trainable params: 275,410

Non-trainable params: 4,000

[<tensorflow.python.keras.layers.core.Flatten object at 0x0000019A7EF6EC08>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A00080548>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A7EEF6748>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A001A46C8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A03B8CAC8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A001A4F88>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67A23E48>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A03B8CFC8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67A2D048>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67A91148>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67AAA1C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67A91BC8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67B31308>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67BB0E48>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67BB0D88>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67C1FB48>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67C9F188>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67C15488>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67C9F788>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67D04588>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67D04788>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67D9EE88>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67DA0D48>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67E16F48>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67E80048>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67E92BC8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67E986C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67F126C8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67F16D88>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A67F93108>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67F9B3C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A68011588>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A67F9B1C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A68092408>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A680242C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A6810D188>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A68099F48>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A68186CC8>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A6811FF08>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A68204F08>, <tensorflow.python.keras.layers.normalization_v2.BatchNormalization object at 0x0000019A68197E48>, <tensorflow.python.keras.layers.core.Dense object at 0x0000019A68281C08>] None

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

2019-12-08 11:31:42.159307: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

2019-12-08 11:31:42.426357: I tensorflow/core/profiler/lib/profiler_session.cc:184] Profiler session started.

2019-12-08 11:31:42.431835: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cupti64_100.dll'; dlerror: cupti64_100.dll not found

2019-12-08 11:31:42.438507: W tensorflow/core/profiler/lib/profiler_session.cc:192] Encountered error while starting profiler: Unavailable: CUPTI error: CUPTI could not be loaded or symbol could not be found.

32/55000 [..............................] - ETA: 6:00:15 - loss: 3.1655 - accuracy: 0.1250

2019-12-08 11:31:44.460596: I tensorflow/core/platform/default/device_tracer.cc:588] Collecting 0 kernel records, 0 memcpy records.

2019-12-08 11:31:44.464763: E tensorflow/core/platform/default/device_tracer.cc:70] CUPTI error: CUPTI could not be loaded or symbol could not be found.

WARNING:tensorflow:Method (on_train_batch_end) is slow compared to the batch update (1.013791). Check your callbacks.

55000/55000 [==============================] - 51s 923us/sample - loss: 1.0800 - accuracy: 0.6173 - val_loss: 0.7038 - val_accuracy: 0.7358

Epoch 2/10

55000/55000 [==============================] - 35s 636us/sample - loss: 0.7738 - accuracy: 0.7169 - val_loss: 0.6182 - val_accuracy: 0.7516

Epoch 3/10

55000/55000 [==============================] - 35s 636us/sample - loss: 0.6716 - accuracy: 0.7547 - val_loss: 0.5418 - val_accuracy: 0.7902

Epoch 4/10

55000/55000 [==============================] - 35s 633us/sample - loss: 0.6195 - accuracy: 0.7783 - val_loss: 0.4731 - val_accuracy: 0.8256

Epoch 5/10

55000/55000 [==============================] - 35s 634us/sample - loss: 0.5812 - accuracy: 0.7932 - val_loss: 0.4800 - val_accuracy: 0.8348

Epoch 6/10

55000/55000 [==============================] - 35s 635us/sample - loss: 0.5490 - accuracy: 0.8048 - val_loss: 0.4307 - val_accuracy: 0.8436

Epoch 7/10

55000/55000 [==============================] - 35s 641us/sample - loss: 0.5189 - accuracy: 0.8179 - val_loss: 0.4193 - val_accuracy: 0.8516

Epoch 8/10

55000/55000 [==============================] - 35s 632us/sample - loss: 0.4989 - accuracy: 0.8252 - val_loss: 0.4084 - val_accuracy: 0.8558

Epoch 9/10

55000/55000 [==============================] - 35s 631us/sample - loss: 0.4761 - accuracy: 0.8315 - val_loss: 0.4001 - val_accuracy: 0.8562

Epoch 10/10

55000/55000 [==============================] - 35s 629us/sample - loss: 0.4573 - accuracy: 0.8377 - val_loss: 0.3950 - val_accuracy: 0.8510
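The parameter counts in the model summary of this run can be checked by hand. A `Dense` layer has `inputs × units` weights plus `units` biases, and each `BatchNormalization` layer keeps four vectors per feature: `gamma` and `beta`, which are trained, plus the moving mean and moving variance, which are not. That is where the 4,000 non-trainable parameters come from. A short arithmetic check (helper names are our own):

```python
def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


def bn_params(features):
    """(trainable, non-trainable): gamma/beta vs. moving mean/variance."""
    return 2 * features, 2 * features


# 784 -> 100, then 19 more 100 -> 100 hidden layers, then 100 -> 10
dense_total = dense_params(784, 100) + 19 * dense_params(100, 100) + dense_params(100, 10)
bn_trainable, bn_frozen = bn_params(100)

total = dense_total + 20 * (bn_trainable + bn_frozen)
trainable = dense_total + 20 * bn_trainable
print(dense_total)                       # 271410 (same stack without BatchNormalization)
print(total, trainable, 20 * bn_frozen)  # 279410 275410 4000
```

The 271,410 figure is also the total for the SELU models that follow, since they use the same `Dense` stack without normalization layers (`AlphaDropout` adds no parameters).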

Activation function: SELU

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="selu"))

model.add(keras.layers.Dense(10, activation="softmax"))
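SELU (scaled exponential linear unit) returns `scale * x` for positive inputs and `scale * alpha * (exp(x) - 1)` otherwise, with fixed constants alpha ≈ 1.6733 and scale ≈ 1.0507. These constants are chosen so that, under suitable conditions (standardized inputs, appropriately initialized weights, plain dense layers), activations tend to stay close to zero mean and unit variance from layer to layer, which is why this model drops `BatchNormalization`. A pure-Python sketch of the function itself (the function name is our own):

```python
import math

# Fixed SELU constants (rounded to double precision)
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805


def selu(x):
    """scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return SCALE * x
    # expm1 computes exp(x) - 1 accurately for x near 0
    return SCALE * ALPHA * math.expm1(x)


print(selu(1.0))    # 1.0507009873554805
print(selu(0.0))    # 0.0
print(selu(-20.0))  # close to -SCALE * ALPHA, about -1.7581
```

Unlike ReLU, negative inputs give negative outputs that saturate at `-scale * alpha`, so the mean of the activations can be pulled back toward zero.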

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:

    print(module.__name__, module.__version__)

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

print(np.max(x_train), np.min(x_train))

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(

x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_valid_scaled = scaler.transform(

x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_test_scaled = scaler.transform(

x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="selu"))

model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",

optimizer = "sgd",

metrics = ["accuracy"])

print(model.layers,model.summary())

logdir = "keras实战"

logdir = os.path.join(logdir,"dnn-selu-callbacks")

if not os.path.exists(logdir):

    os.makedirs(logdir)  # unlike os.mkdir, also creates the parent "keras实战" directory

output_model_file = os.path.join(logdir,"fashion_mnist_model.h5")

callbacks = [

keras.callbacks.TensorBoard(logdir),

keras.callbacks.ModelCheckpoint(output_model_file,

save_best_only = True),

]

history = model.fit(x_train_scaled, y_train, epochs=10,

validation_data=(x_valid_scaled, y_valid),

callbacks = callbacks)

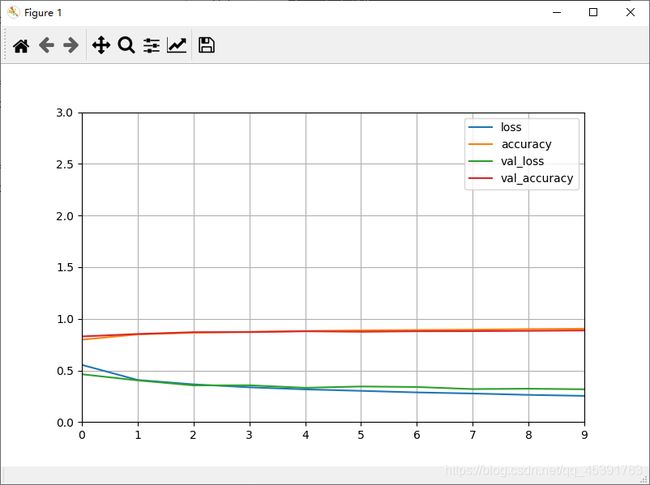

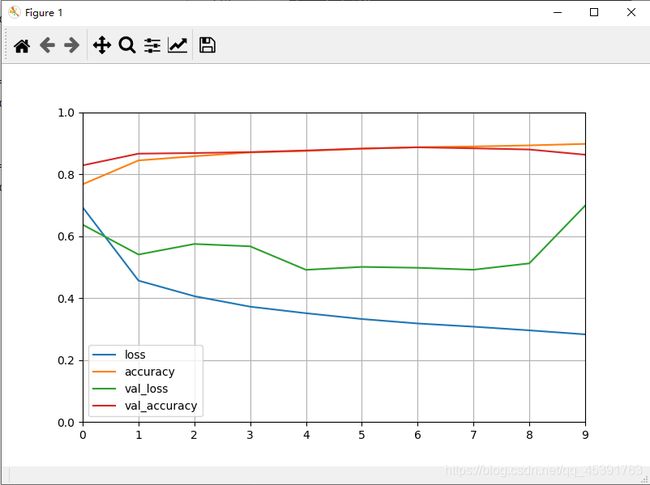

def plot_learning_curves(history):

    pd.DataFrame(history.history).plot(figsize=(8, 5))

    plt.grid(True)

    plt.gca().set_ylim(0, 3)

    plt.show()

plot_learning_curves(history)

model.evaluate(x_test_scaled,y_test)

Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| flatten (Flatten) | (None, 784) | 0 |
| dense (Dense) | (None, 100) | 78500 |
| dense_1 (Dense) | (None, 100) | 10100 |
| dense_2 (Dense) | (None, 100) | 10100 |
| dense_3 (Dense) | (None, 100) | 10100 |
| dense_4 (Dense) | (None, 100) | 10100 |
| dense_5 (Dense) | (None, 100) | 10100 |
| dense_6 (Dense) | (None, 100) | 10100 |
| dense_7 (Dense) | (None, 100) | 10100 |
| dense_8 (Dense) | (None, 100) | 10100 |
| dense_9 (Dense) | (None, 100) | 10100 |
| dense_10 (Dense) | (None, 100) | 10100 |
| dense_11 (Dense) | (None, 100) | 10100 |
| dense_12 (Dense) | (None, 100) | 10100 |
| dense_13 (Dense) | (None, 100) | 10100 |
| dense_14 (Dense) | (None, 100) | 10100 |
| dense_15 (Dense) | (None, 100) | 10100 |
| dense_16 (Dense) | (None, 100) | 10100 |
| dense_17 (Dense) | (None, 100) | 10100 |
| dense_18 (Dense) | (None, 100) | 10100 |
| dense_19 (Dense) | (None, 100) | 10100 |
| dense_20 (Dense) | (None, 10) | 1010 |

Total params: 271,410

Trainable params: 271,410

Non-trainable params: 0

[<tensorflow.python.keras.layers.core.Flatten object at 0x0000026BA1BF1D08>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1C93608>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1EEEFC8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1EEECC8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1C939C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1F04C88>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA5776F08>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA577D108>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA1F0B408>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA57C8688>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA57CFDC8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BA57EF708>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BB64B8E48>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BB64D29C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC3450688>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC3450608>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC347D688>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC34CA088>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC34CAB88>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026BC34E88C8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026E07C6FEC8>, <tensorflow.python.keras.layers.core.Dense object at 0x0000026E07C53E48>] None

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

2019-12-08 11:41:44.181888: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

2019-12-08 11:41:44.426800: I tensorflow/core/profiler/lib/profiler_session.cc:184] Profiler session started.

2019-12-08 11:41:44.430989: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cupti64_100.dll'; dlerror: cupti64_100.dll not found

2019-12-08 11:41:44.437310: W tensorflow/core/profiler/lib/profiler_session.cc:192] Encountered error while starting profiler: Unavailable: CUPTI error: CUPTI could not be loaded or symbol could not be found.

32/55000 [..............................] - ETA: 1:55:02 - loss: 3.2050 - accuracy: 0.0938

2019-12-08 11:41:45.149939: I tensorflow/core/platform/default/device_tracer.cc:588] Collecting 0 kernel records, 0 memcpy records.

2019-12-08 11:41:45.154289: E tensorflow/core/platform/default/device_tracer.cc:70] CUPTI error: CUPTI could not be loaded or symbol could not be found.

WARNING:tensorflow:Method (on_train_batch_end) is slow compared to the batch update (0.365009). Check your callbacks.

55000/55000 [==============================] - 17s 305us/sample - loss: 0.5542 - accuracy: 0.7983 - val_loss: 0.4633 - val_accuracy: 0.8294

Epoch 2/10

55000/55000 [==============================] - 12s 218us/sample - loss: 0.4080 - accuracy: 0.8491 - val_loss: 0.4041 - val_accuracy: 0.8532

Epoch 3/10

55000/55000 [==============================] - 12s 211us/sample - loss: 0.3656 - accuracy: 0.8655 - val_loss: 0.3553 - val_accuracy: 0.8708

Epoch 4/10

55000/55000 [==============================] - 12s 216us/sample - loss: 0.3371 - accuracy: 0.8741 - val_loss: 0.3566 - val_accuracy: 0.8714

Epoch 5/10

55000/55000 [==============================] - 12s 212us/sample - loss: 0.3170 - accuracy: 0.8820 - val_loss: 0.3324 - val_accuracy: 0.8794

Epoch 6/10

55000/55000 [==============================] - 11s 206us/sample - loss: 0.3028 - accuracy: 0.8880 - val_loss: 0.3450 - val_accuracy: 0.8748

Epoch 7/10

55000/55000 [==============================] - 12s 217us/sample - loss: 0.2876 - accuracy: 0.8918 - val_loss: 0.3400 - val_accuracy: 0.8798

Epoch 8/10

55000/55000 [==============================] - 12s 212us/sample - loss: 0.2771 - accuracy: 0.8949 - val_loss: 0.3199 - val_accuracy: 0.8806

Epoch 9/10

55000/55000 [==============================] - 12s 214us/sample - loss: 0.2637 - accuracy: 0.9004 - val_loss: 0.3236 - val_accuracy: 0.8836

Epoch 10/10

55000/55000 [==============================] - 12s 214us/sample - loss: 0.2541 - accuracy: 0.9044 - val_loss: 0.3178 - val_accuracy: 0.8872

Dropout

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="selu"))

# a single AlphaDropout layer, placed after the last hidden Dense layer
model.add(keras.layers.AlphaDropout(rate=0.5))

model.add(keras.layers.Dense(10, activation="softmax"))
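`AlphaDropout` is the dropout variant intended for SELU networks. Instead of zeroing dropped units, it sets them to the SELU saturation value `-scale * alpha` and then applies an affine correction `a * x + b`, so that inputs with zero mean and unit variance keep those statistics. Like ordinary dropout, it is only active during training. The sketch below follows that recipe in pure Python; the helper name `alpha_dropout` and the seeded `random.Random` are illustrative choices, not the Keras API.

```python
import random

ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805
ALPHA_P = -SCALE * ALPHA  # value assigned to dropped units


def alpha_dropout(inputs, rate, rng, training=True):
    """Hypothetical helper: alpha dropout on a flat list of activations."""
    if not training or rate == 0:
        return list(inputs)  # identity at inference time
    # Affine correction restoring zero mean / unit variance for N(0, 1) inputs
    a = ((1 - rate) * (1 + rate * ALPHA_P ** 2)) ** -0.5
    b = -a * ALPHA_P * rate
    out = []
    for x in inputs:
        kept = rng.random() >= rate  # keep with probability 1 - rate
        out.append(a * (x if kept else ALPHA_P) + b)
    return out


rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
ys = alpha_dropout(xs, rate=0.5, rng=rng)
mean = sum(ys) / len(ys)
var = sum((y - mean) ** 2 for y in ys) / len(ys)
print(round(mean, 3), round(var, 3))  # approximately 0 and 1
```

Plain dropout (zeroing plus rescaling by `1 / (1 - rate)`) would shift the variance of SELU activations, which is why the SELU model here pairs with `AlphaDropout` instead.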

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:

    print(module.__name__, module.__version__)

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

print(np.max(x_train), np.min(x_train))

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(

x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_valid_scaled = scaler.transform(

x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

x_test_scaled = scaler.transform(

x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

for _ in range(20):

    model.add(keras.layers.Dense(100, activation="selu"))

# a single AlphaDropout layer, placed after the last hidden Dense layer
model.add(keras.layers.AlphaDropout(rate=0.5))

model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",

optimizer = "sgd",

metrics = ["accuracy"])

print(model.layers,model.summary())

logdir = "keras实战"

logdir = os.path.join(logdir,"dnn-selu-dropout-callbacks")

if not os.path.exists(logdir):

    os.makedirs(logdir)  # unlike os.mkdir, also creates the parent "keras实战" directory

output_model_file = os.path.join(logdir,"fashion_mnist_model.h5")

callbacks = [

keras.callbacks.TensorBoard(logdir),

keras.callbacks.ModelCheckpoint(output_model_file,

save_best_only = True),

]

history = model.fit(x_train_scaled, y_train, epochs=10,

validation_data=(x_valid_scaled, y_valid),

callbacks = callbacks)

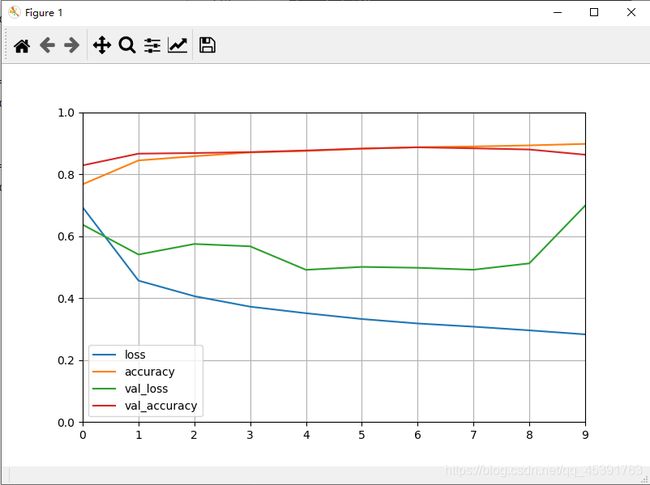

def plot_learning_curves(history):

    pd.DataFrame(history.history).plot(figsize=(8, 5))

    plt.grid(True)

    plt.gca().set_ylim(0, 1)

    plt.show()

plot_learning_curves(history)

model.evaluate(x_test_scaled,y_test)

Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| flatten (Flatten) | (None, 784) | 0 |
| dense (Dense) | (None, 100) | 78500 |
| dense_1 (Dense) | (None, 100) | 10100 |
| dense_2 (Dense) | (None, 100) | 10100 |
| dense_3 (Dense) | (None, 100) | 10100 |
| dense_4 (Dense) | (None, 100) | 10100 |
| dense_5 (Dense) | (None, 100) | 10100 |
| dense_6 (Dense) | (None, 100) | 10100 |
| dense_7 (Dense) | (None, 100) | 10100 |
| dense_8 (Dense) | (None, 100) | 10100 |
| dense_9 (Dense) | (None, 100) | 10100 |
| dense_10 (Dense) | (None, 100) | 10100 |
| dense_11 (Dense) | (None, 100) | 10100 |
| dense_12 (Dense) | (None, 100) | 10100 |
| dense_13 (Dense) | (None, 100) | 10100 |
| dense_14 (Dense) | (None, 100) | 10100 |
| dense_15 (Dense) | (None, 100) | 10100 |
| dense_16 (Dense) | (None, 100) | 10100 |
| dense_17 (Dense) | (None, 100) | 10100 |
| dense_18 (Dense) | (None, 100) | 10100 |
| dense_19 (Dense) | (None, 100) | 10100 |
| alpha_dropout (AlphaDropout) | (None, 100) | 0 |
| dense_20 (Dense) | (None, 10) | 1010 |

Total params: 271,410

Trainable params: 271,410

Non-trainable params: 0

[<tensorflow.python.keras.layers.core.Flatten object at 0x000001DD4FF6EE08>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50012708>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD5013DFC8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD4928D3C8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50158F48>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD4FF90B48>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50DB5DC8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50DEC888>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD4FFB2B48>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50DEC9C8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50E1A7C8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50E1AD48>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50E378C8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD53B304C8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD53B30788>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD50E51808>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD53B5DCC8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD5A395FC8>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD5A3BA388>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD5A3D7088>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD5A3BAA48>, <tensorflow.python.keras.layers.noise.AlphaDropout object at 0x000001DD71819108>, <tensorflow.python.keras.layers.core.Dense object at 0x000001DD718A5EC8>] None

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

2019-12-08 11:46:21.230274: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

2019-12-08 11:46:21.462637: I tensorflow/core/profiler/lib/profiler_session.cc:184] Profiler session started.

2019-12-08 11:46:21.466558: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cupti64_100.dll'; dlerror: cupti64_100.dll not found

2019-12-08 11:46:21.474198: W tensorflow/core/profiler/lib/profiler_session.cc:192] Encountered error while starting profiler: Unavailable: CUPTI error: CUPTI could not be loaded or symbol could not be found.

32/55000 [..............................] - ETA: 2:02:50 - loss: 3.5272 - accuracy: 0.0938

2019-12-08 11:46:22.211412: I tensorflow/core/platform/default/device_tracer.cc:588] Collecting 0 kernel records, 0 memcpy records.

2019-12-08 11:46:22.216862: E tensorflow/core/platform/default/device_tracer.cc:70] CUPTI error: CUPTI could not be loaded or symbol could not be found.

WARNING:tensorflow:Method (on_train_batch_end) is slow compared to the batch update (0.378985). Check your callbacks.

55000/55000 [==============================] - 18s 323us/sample - loss: 0.6932 - accuracy: 0.7676 - val_loss: 0.6371 - val_accuracy: 0.8286

Epoch 2/10

55000/55000 [==============================] - 12s 217us/sample - loss: 0.4566 - accuracy: 0.8445 - val_loss: 0.5409 - val_accuracy: 0.8664

Epoch 3/10

55000/55000 [==============================] - 12s 218us/sample - loss: 0.4064 - accuracy: 0.8581 - val_loss: 0.5749 - val_accuracy: 0.8684

Epoch 4/10

55000/55000 [==============================] - 12s 213us/sample - loss: 0.3725 - accuracy: 0.8702 - val_loss: 0.5673 - val_accuracy: 0.8714

Epoch 5/10

55000/55000 [==============================] - 12s 212us/sample - loss: 0.3515 - accuracy: 0.8751 - val_loss: 0.4915 - val_accuracy: 0.8766

Epoch 6/10

55000/55000 [==============================] - 11s 209us/sample - loss: 0.3327 - accuracy: 0.8823 - val_loss: 0.5009 - val_accuracy: 0.8828

Epoch 7/10

55000/55000 [==============================] - 12s 210us/sample - loss: 0.3184 - accuracy: 0.8873 - val_loss: 0.4981 - val_accuracy: 0.8868

Epoch 8/10

55000/55000 [==============================] - 11s 209us/sample - loss: 0.3080 - accuracy: 0.8896 - val_loss: 0.4918 - val_accuracy: 0.8836

Epoch 9/10

55000/55000 [==============================] - 12s 209us/sample - loss: 0.2961 - accuracy: 0.8931 - val_loss: 0.5123 - val_accuracy: 0.8798

Epoch 10/10

55000/55000 [==============================] - 12s 216us/sample - loss: 0.2832 - accuracy: 0.8979 - val_loss: 0.6987 - val_accuracy: 0.8630
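To read the three experiments side by side, the per-epoch validation metrics can be collected from the three training logs and compared in a few lines of Python. This is only a reading aid (the helper `best_epoch` is our own). With `save_best_only=True`, the checkpoint kept on disk corresponds to the epoch with the lowest `val_loss`, which for the dropout run is not the last epoch.

```python
# (val_loss, val_accuracy) per epoch, copied from the three training logs
runs = {
    "relu + BatchNormalization": (
        [0.7038, 0.6182, 0.5418, 0.4731, 0.4800, 0.4307, 0.4193, 0.4084, 0.4001, 0.3950],
        [0.7358, 0.7516, 0.7902, 0.8256, 0.8348, 0.8436, 0.8516, 0.8558, 0.8562, 0.8510],
    ),
    "selu": (
        [0.4633, 0.4041, 0.3553, 0.3566, 0.3324, 0.3450, 0.3400, 0.3199, 0.3236, 0.3178],
        [0.8294, 0.8532, 0.8708, 0.8714, 0.8794, 0.8748, 0.8798, 0.8806, 0.8836, 0.8872],
    ),
    "selu + AlphaDropout": (
        [0.6371, 0.5409, 0.5749, 0.5673, 0.4915, 0.5009, 0.4981, 0.4918, 0.5123, 0.6987],
        [0.8286, 0.8664, 0.8684, 0.8714, 0.8766, 0.8828, 0.8868, 0.8836, 0.8798, 0.8630],
    ),
}


def best_epoch(val_loss):
    """1-based epoch with the lowest validation loss (what the checkpoint keeps)."""
    return min(range(len(val_loss)), key=val_loss.__getitem__) + 1


for name, (val_loss, val_acc) in runs.items():
    epoch = best_epoch(val_loss)
    print(f"{name}: final val_accuracy={val_acc[-1]:.4f}, "
          f"lowest val_loss={val_loss[epoch - 1]:.4f} at epoch {epoch}")
# relu + BatchNormalization: final val_accuracy=0.8510, lowest val_loss=0.3950 at epoch 10
# selu: final val_accuracy=0.8872, lowest val_loss=0.3178 at epoch 10
# selu + AlphaDropout: final val_accuracy=0.8630, lowest val_loss=0.4915 at epoch 5
```

These are single 10-epoch runs, so small differences between them should not be over-interpreted.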
