Notes on Training a Bilinear CNN Model with PyTorch

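Note: one of my projects needed machine learning, and I am a Python beginner. Following my teacher's recommendation and some material I found online, I eventually got it working.

References:

Preprocessing the Caltech-UCSD Birds 200 (CUB) dataset

Processing the CUB200_2011 dataset with PyTorch

Python code for splitting the CUB200-2011 fine-grained bird dataset into training and test sets

Training a Bilinear CNN model on the CUB200-2011 fine-grained dataset with PyTorch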

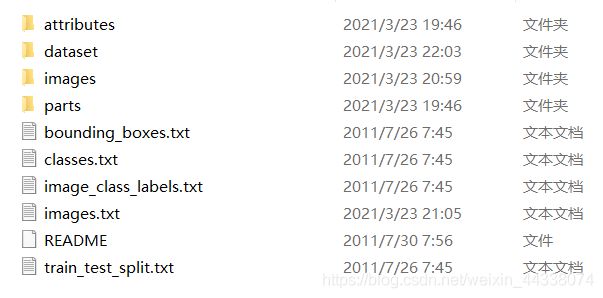

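Dataset

I first ran this model on the public Caltech-UCSD Birds 200 bird dataset. My own dataset was later organized in the same format as CUB-200.

A few of the files deserve an explanation. Every file is plain text with one record per line and fields separated by spaces:

bounding_boxes.txt : the bounding box of the object in each image, in the form: image_id x y width height

classes.txt : the ID and name of each class, in the form: class_id class_name

image_class_labels.txt : the class ID of each image, in the form: image_id class_id

images.txt : the relative path of each image, in the form: image_id image_name

train_test_split.txt : the training/test split of the dataset, in the form: image_id is_training_image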
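Since these files all share the image_id column, they can be joined into one record per image. The following is only a minimal sketch, assuming whitespace-separated columns as in the CUB_200_2011 release; the helper names `parse_id_value` and `build_records` and the sample rows are made up for illustration.

```python
def parse_id_value(lines):
    """Parse 'id value' lines into a dict keyed by integer id."""
    table = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        key, value = line.split(maxsplit=1)
        table[int(key)] = value
    return table


def build_records(images_lines, labels_lines, split_lines):
    """Join images.txt, image_class_labels.txt and train_test_split.txt on image_id."""
    paths = parse_id_value(images_lines)
    labels = parse_id_value(labels_lines)
    is_train = parse_id_value(split_lines)
    return {
        image_id: {
            "path": path,
            "class_id": int(labels[image_id]),
            "is_train": is_train[image_id] == "1",
        }
        for image_id, path in paths.items()
    }


# Hypothetical rows in the same layout as the real files
sample_images = ["1 001.Some_Class/img_a.jpg", "2 001.Some_Class/img_b.jpg"]
sample_labels = ["1 1", "2 1"]
sample_split = ["1 0", "2 1"]

records = build_records(sample_images, sample_labels, sample_split)
print(records[2])
```

With the real dataset, each argument could simply be an open file object, for example `open('images.txt')`, because the helpers only iterate over lines.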

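Dataset preprocessing

Unifying the image format

Purpose: resize every image to 224*224 and store the result in a new directory.

Note: not every image in the dataset is a color image. A grayscale image is read as a 2-D array with no channel dimension.

Workaround: use numpy to create an array of the target size with 3 channels, and assign the grayscale values to every channel. This adds the channel dimension while keeping the image identical to the original grayscale one.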
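The channel replication step can be tried in isolation on a tiny array. This is a minimal sketch; the function name `to_three_channels` is hypothetical, and `np.stack` is used here as a compact equivalent of filling the channels one by one.

```python
import numpy as np


def to_three_channels(img):
    """Return an H x W x 3 array; a 2-D grayscale input is copied into every channel."""
    if img.ndim == 2:
        return np.stack([img] * 3, axis=-1)
    return img


gray = np.arange(6, dtype=np.uint8).reshape(2, 3)  # stand-in for a grayscale image
rgb = to_three_channels(gray)
print(rgb.shape)  # (2, 3, 3)
```

Keeping the dtype as uint8 matters when the array is later written back to an image file.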

# -*- coding: utf-8 -*-
from os import listdir, makedirs
from os.path import join

import numpy as np
from imageio import imread, imwrite
from PIL import Image  # scipy.misc.imresize was removed in SciPy 1.3, so Pillow does the resizing

IN = 'images'            # source directory
OUT = 'images.resized'   # output directory
CHANNEL = 3              # number of channels
SIZE_2D = (224, 224)     # target height and width
SIZE_3D = (224, 224, CHANNEL)

makedirs(OUT, exist_ok=True)  # create the output directory

for cls in sorted(listdir(IN)):  # subdirectories, one per class
    in_d = join(IN, cls)
    out_d = join(OUT, cls)       # matching output subdirectory
    makedirs(out_d, exist_ok=True)
    for fname in listdir(in_d):
        img = imread(join(in_d, fname))                         # read the image
        img = np.asarray(Image.fromarray(img).resize(SIZE_2D))  # resize it
        if img.shape != SIZE_3D:                                # grayscale image
            tmp = np.zeros(SIZE_3D, dtype=np.uint8)
            for c in range(CHANNEL):
                tmp[:, :, c] = img                              # copy into every channel
            img = tmp
        imwrite(join(out_d, fname), img)                        # save the image

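Splitting the dataset into training and test sets

The original dataset is split into a training set and a test set according to train_test_split.txt and images.txt.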

# *_*coding: utf-8 *_*
"""
Read images.txt to get the relative path (class_folder/file_name) of each image.
Read train_test_split.txt to get the train/test flag of each image: 1 = training, 0 = test.
"""
import os
import shutil
import time

import config

time_start = time.time()

# file paths
path_images = config.path + 'images.txt'
path_split = config.path + 'train_test_split.txt'
train_save_path = config.path + 'dataset/train/'
test_save_path = config.path + 'dataset/test/'

# read images.txt: each line is "image_id relative_path"
with open(path_images, 'r') as f:
    images = [line.split() for line in f if line.strip()]

# read train_test_split.txt: each line is "image_id is_training_image"
with open(path_split, 'r') as f:
    split = [line.split() for line in f if line.strip()]

# split: both files list the images in the same order
for (_, rel_path), (_, is_train) in zip(images, split):
    class_dir, file_name = rel_path.split('/')
    save_root = train_save_path if int(is_train) == 1 else test_save_path
    os.makedirs(save_root + class_dir, exist_ok=True)  # create the class folder if it is missing
    shutil.copy(config.path + 'images/' + rel_path,
                save_root + class_dir + '/' + file_name)
    print('%s done!' % file_name)

time_end = time.time()
print('CUB200 train/test split finished, took %s s!' % (time_end - time_start))
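Before copying thousands of files, it can help to tally the split flags and check that the totals look right. A minimal sketch follows; the function name `count_split` and the sample lines are made up for illustration.

```python
from collections import Counter


def count_split(split_lines):
    """Count training (flag 1) and test (flag 0) entries in train_test_split.txt lines."""
    counts = Counter()
    for line in split_lines:
        fields = line.split()
        if len(fields) != 2:
            continue  # skip blank or malformed lines
        counts["train" if fields[1] == "1" else "test"] += 1
    return counts


sample = ["1 0", "2 1", "3 0", "4 1", "5 1"]  # hypothetical lines
print(count_split(sample))
```

Run on the full train_test_split.txt of CUB_200_2011, this should report 5994 training images and 5794 test images.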

config file

# *_*coding: utf-8 *_*
path = '/media/lm/C3F680DFF08EB695/细粒度数据集/birds/CUB200/CUB_200_2011/'
ROOT_TRAIN = path + 'images/train/'
ROOT_TEST = path + 'images/test/'
BATCH_SIZE = 16

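Model training

Folder structure

Judging from the imports in main.py, the project directory holds config.py, BCNN_fc.py, BCNN_all.py, data_load.py and main.py, plus a model folder.

Note: the model folder stores the trained models.

Detailed code

config file

# *_*coding: utf-8 *_*
import os

CUB_PATH = '/media/lm/C3F680DFF08EB695/细粒度数据集/birds/CUB200/CUB_200_2011/dataset'
PROJECT_ROOT = os.getcwd()
PATH = {
    'cub200_train': CUB_PATH + '/train/',
    'cub200_test': CUB_PATH + '/test/',
    'model': os.path.join(PROJECT_ROOT, 'model/')
}

BASE_LEARNING_RATE = 0.05
EPOCHS = 100
BATCH_SIZE = 8
WEIGHT_DECAY = 0.00001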

Bilinear CNN model: BCNN_fc

# *_*coding: utf-8 *_*
import torch
import torch.nn as nn
import torchvision


class BCNN_fc(nn.Module):
    def __init__(self):
        super(BCNN_fc, self).__init__()
        # convolution and pooling layers of VGG16
        self.features = torchvision.models.vgg16(pretrained=True).features
        self.features = nn.Sequential(*list(self.features.children())[:-1])  # drop the last pooling layer
        # linear classification layer
        self.fc = nn.Linear(512*512, 200)
        # freeze all preceding layers
        for param in self.features.parameters():
            param.requires_grad = False  # the original spelling "requres_grad" froze nothing
        # initialize the fc layer
        nn.init.kaiming_normal_(self.fc.weight.data)
        if self.fc.bias is not None:
            nn.init.constant_(self.fc.bias.data, val=0)

    def forward(self, x):
        N = x.size()[0]
        assert x.size() == (N, 3, 448, 448)
        x = self.features(x)
        assert x.size() == (N, 512, 28, 28)
        x = x.view(N, 512, 28*28)
        x = torch.bmm(x, torch.transpose(x, 1, 2)) / (28*28)  # bilinear pooling
        assert x.size() == (N, 512, 512)
        x = x.view(N, 512*512)
        x = torch.sqrt(x + 1e-5)
        x = torch.nn.functional.normalize(x)
        x = self.fc(x)
        assert x.size() == (N, 200)
        return x

Bilinear CNN model: BCNN_all

# *_*coding: utf-8 *_*
import torch
import torch.nn as nn
import torchvision


class BCNN_all(nn.Module):
    def __init__(self):
        super(BCNN_all, self).__init__()
        # convolution and pooling layers of VGG16 (pretrained=False: no ImageNet weights are loaded here)
        self.features = torchvision.models.vgg16(pretrained=False).features
        self.features = torch.nn.Sequential(*list(self.features.children())[:-1])  # drop the last pooling layer
        # fully connected layer
        self.fc = torch.nn.Linear(512*512, 200)

    def forward(self, x):
        N = x.size()[0]
        assert x.size() == (N, 3, 448, 448)
        x = self.features(x)
        assert x.size() == (N, 512, 28, 28)
        x = x.view(N, 512, 28*28)
        x = torch.bmm(x, torch.transpose(x, 1, 2)) / (28*28)  # bilinear pooling
        assert x.size() == (N, 512, 512)
        x = x.view(N, 512*512)
        x = torch.sqrt(x + 1e-5)
        x = torch.nn.functional.normalize(x)
        x = self.fc(x)
        assert x.size() == (N, 200)
        return x
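Both networks share the same pooling step in forward: the feature map is flattened over spatial positions, multiplied by its own transpose, averaged, square-rooted, and L2-normalized. The NumPy sketch below mirrors that step for a single image on a small array, so the shapes are easy to inspect. The function name `bilinear_pool` and the tiny 4-channel input are assumptions made for illustration only.

```python
import numpy as np


def bilinear_pool(features, eps=1e-5):
    """features: (C, H, W) non-negative activations -> L2-normalized vector of length C*C."""
    c, h, w = features.shape
    x = features.reshape(c, h * w)         # flatten the spatial positions
    gram = x @ x.T / (h * w)               # averaged outer product, shape (C, C)
    vec = np.sqrt(gram.reshape(-1) + eps)  # element-wise square root
    return vec / np.linalg.norm(vec)       # L2 normalization


rng = np.random.default_rng(0)
feats = rng.random((4, 3, 3))  # small stand-in for the (512, 28, 28) VGG16 output
pooled = bilinear_pool(feats)
print(pooled.shape)  # (16,)
```

With the real 512-channel feature map the pooled vector has 512*512 = 262144 entries, which is why the fc layer takes 512*512 inputs.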

data_load: dataset loading

# *_*coding: utf-8 *_*
import torch
import torchvision
import config


def train_data_process():
    train_transforms = torchvision.transforms.Compose([
        torchvision.transforms.Resize(size=448),
        torchvision.transforms.RandomHorizontalFlip(),
        torchvision.transforms.RandomCrop(size=448),
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),
                                         std=(0.229, 0.224, 0.225))
    ])
    train_data = torchvision.datasets.ImageFolder(root=config.PATH['cub200_train'],
                                                  transform=train_transforms)
    train_loader = torch.utils.data.DataLoader(train_data,
                                               batch_size=config.BATCH_SIZE,
                                               shuffle=True,
                                               num_workers=8,
                                               pin_memory=True)
    return train_loader


def test_data_process():
    test_transforms = torchvision.transforms.Compose([
        torchvision.transforms.Resize(size=448),
        torchvision.transforms.CenterCrop(size=448),  # deterministic crop, so test accuracy is repeatable
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),
                                         std=(0.229, 0.224, 0.225))
    ])
    test_data = torchvision.datasets.ImageFolder(root=config.PATH['cub200_test'],
                                                 transform=test_transforms)
    test_loader = torch.utils.data.DataLoader(test_data,
                                              batch_size=config.BATCH_SIZE,
                                              shuffle=False,  # order does not matter for evaluation
                                              num_workers=8,
                                              pin_memory=True)
    return test_loader


if __name__ == '__main__':
    train_data_process()
    test_data_process()

main.py: trains the model and measures accuracy on the test data

# *_*coding: utf-8 *_*
import os
import argparse

import torch
import torch.nn as nn

import config
from BCNN_fc import BCNN_fc
from BCNN_all import BCNN_all
from data_load import train_data_process, test_data_process

# use the GPU when one is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# main program
if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='network_select')
    parser.add_argument('--net_select',
                        dest='net_select',
                        default='BCNN_all',
                        help='select which net to train/test.')
    args = parser.parse_args()

    # load the data (inside the main guard, so DataLoader worker processes do not repeat it)
    train_loader = train_data_process()
    test_loader = test_data_process()

    if args.net_select == 'BCNN_fc':
        net = BCNN_fc().to(device)
    else:
        net = BCNN_all().to(device)

    # loss
    criterion = nn.CrossEntropyLoss().to(device)
    # Note: only the fc layer is optimized, for both networks.
    # Pass net.parameters() instead to fine-tune every layer of BCNN_all.
    optimizer = torch.optim.SGD(net.fc.parameters(),
                                lr=config.BASE_LEARNING_RATE,
                                momentum=0.9,
                                weight_decay=config.WEIGHT_DECAY)
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer,
                                                           mode='max',
                                                           factor=0.1,
                                                           patience=3,
                                                           threshold=1e-4)
    os.makedirs(config.PATH['model'], exist_ok=True)  # make sure the model folder exists

    # train the model
    print('Start Training ==>')
    total_step = len(train_loader)
    best_acc = 0.0
    best_epoch = None
    for epoch in range(config.EPOCHS):
        net.train()  # switch back to training mode after the previous evaluation
        epoch_loss = []
        num_correct = 0
        num_total = 0
        for i, (images, labels) in enumerate(train_loader):
            # move the batch to the selected device
            images = images.to(device)
            labels = labels.to(device)
            # zero the gradients
            optimizer.zero_grad()
            # forward pass
            outputs = net(images)
            loss = criterion(outputs, labels)
            epoch_loss.append(loss.item())
            # prediction
            _, prediction = torch.max(outputs.data, 1)
            num_total += labels.size(0)
            num_correct += torch.sum(prediction == labels).item()
            # backward pass
            loss.backward()
            optimizer.step()
            if (i + 1) % 10 == 0:
                print('Epoch [{}/{}], Step [{}/{}], Training Loss: {:.4f}'.format(
                    epoch + 1, config.EPOCHS, i + 1, total_step, loss.item()))
        train_Acc = 100 * num_correct / num_total
        print('Epoch:%d Training Loss:%.03f Acc: %.03f' % (epoch + 1, sum(epoch_loss) / len(epoch_loss), train_Acc))

        # evaluate on the test set
        print('Waiting for Test ==>')
        net.eval()
        with torch.no_grad():
            num_correct = 0
            num_total = 0
            for images, labels in test_loader:
                images = images.to(device)
                labels = labels.to(device)
                outputs = net(images)
                _, prediction = torch.max(outputs.data, 1)
                num_total += labels.size(0)
                num_correct += torch.sum(prediction == labels).item()
        test_Acc = 100 * num_correct / num_total
        print('Test accuracy at epoch %d: %.03f' % (epoch + 1, test_Acc))
        scheduler.step(test_Acc)  # mode='max': lower the learning rate when test accuracy stops improving
        if test_Acc > best_acc:
            best_acc, best_epoch = test_Acc, epoch + 1

        # save the model
        torch.save(net.state_dict(), config.PATH['model'] + 'vgg16_epoch_%d.pth' % (epoch + 1))
    print('Best test accuracy: %.03f at epoch %s' % (best_acc, best_epoch))
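Because one checkpoint named vgg16_epoch_N.pth is written per epoch, the model folder fills up quickly. The sketch below picks the file with the highest epoch number from a list of names. The helper name `latest_checkpoint` and the sample names are hypothetical; only the file name pattern comes from the training script.

```python
import re

CKPT_PATTERN = re.compile(r"vgg16_epoch_(\d+)\.pth$")


def latest_checkpoint(names):
    """Return the checkpoint name with the highest epoch number, or None if there is none."""
    best_name = None
    best_epoch = -1
    for name in names:
        match = CKPT_PATTERN.search(name)
        if match and int(match.group(1)) > best_epoch:
            best_epoch = int(match.group(1))
            best_name = name
    return best_name


names = ["vgg16_epoch_2.pth", "vgg16_epoch_10.pth", "notes.txt"]  # hypothetical folder listing
latest = latest_checkpoint(names)
print(latest)  # vgg16_epoch_10.pth
```

In practice the names could come from `os.listdir(config.PATH['model'])`. Comparing the epoch as an integer avoids the string-sorting trap where epoch 2 would rank above epoch 10.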

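The actual hyperparameter tuning is something you have to work through gradually on your own.

Finally, here is an article on understanding the Epoch, Iteration, and Batchsize parameters:

Understanding Epoch, Iteration, and Batchsize in Neural Networks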
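As a quick worked example of how these three quantities relate, the sketch below uses the values from the training config (BATCH_SIZE = 8, EPOCHS = 100) and the 5994 training images of CUB-200-2011. The function name `iterations_per_epoch` is made up for illustration.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every sample once (the last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


NUM_TRAIN = 5994  # training images in CUB-200-2011
BATCH_SIZE = 8
EPOCHS = 100

steps = iterations_per_epoch(NUM_TRAIN, BATCH_SIZE)
print(steps, steps * EPOCHS)  # 750 75000
```

So one epoch corresponds to 750 iterations here, and the full run performs 75000 weight updates.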