【阅读笔记】多任务学习之MMoE(含代码实现)

本文作为自己阅读论文后的总结和思考,不涉及论文翻译和模型解读,适合大家阅读完论文后交流想法。

MMoE

-

- 一. 全文总结

- 二. 研究方法

- 三. 结论

- 四. 创新点

- 五. 思考

- 六. 参考文献

- 七. Pytorch实现⭐

一. 全文总结

提出了一种基于**多门混合专家(MMoE)**结构的多任务学习方法,验证了模型的有效性和可训练性。

二. 研究方法

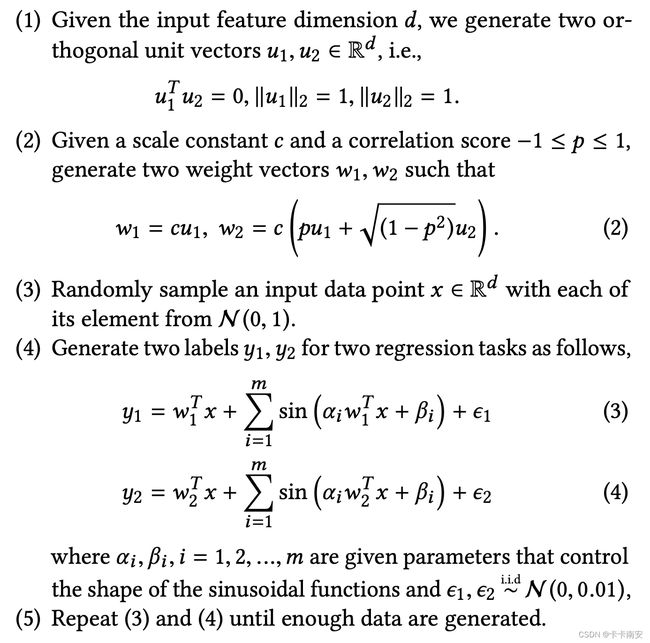

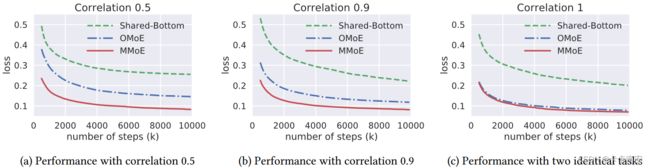

构造了可以人为控制相关性的合成数据集,比较了Share-Bottom、OMoE、MMoE在不同相关系数任务下的训练精度。最后,对真实的基准数据和具有数亿用户和项目的大规模生产推荐系统进行了实验,验证了MMoE在现实环境中的效率和有效性。

下图为三种模型在不同相关性任务中的表现:

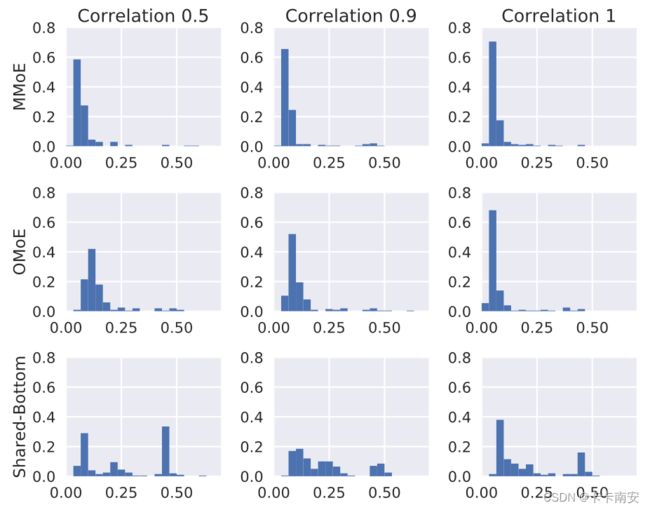

下图为不同模型在不同相关性任务中,重复实验200次最低loss的分布情况:

三. 结论

- MMoE明确地学习从数据中建模任务关系,可以更好地处理任务不太相关的场景。

- 与基线方法相比,MMoE 更容易训练。

- MMoE 在很大程度上保留了计算优势(有更好的计算效率),因为门控网络通常是轻量级的,并且专家网络在所有任务中共享。

四. 创新点

- 提出了一种新颖的多门专家混合模型MMoE,该模型明确地对任务关系进行建模。通过调制和门控网络,MMoE自动调整建模共享信息和建模任务特定信息之间的参数化。

- 对合成数据进行控制实验,报告了任务相关性如何影响多任务学习中的训练动态以及 MMoE 如何提高模型表达能力和可训练性。

- 对真实的基准数据和具有数亿用户和项目的大规模生产推荐系统进行了实验,实验验证了MMoE在现实环境中的效率和有效性。

五. 思考

- MMoE在任务相关性低时较其他模型有更好的效果,但是可能会”跷跷板“的情况:一个task的效果提升,会伴随着另一个task的效果降低。

- 门控网络一般由线性变换+softmax组成,这部分计算量非常小,几乎可以忽略,但有人实验表明门控网络多叠加几层会有更好的效果。

- 多门结构在解决由任务差异引起的冲突引起的不良局部最小值方面有效。

六. 参考文献

- 大厂技术实现 | 多目标优化及应用(含代码实现)

- 我要打十个:多任务学习模型MMoE解读

- 多目标学习(Multi-task Learning)-网络设计和损失函数优化

- 收藏|浅谈多任务学习(Multi-task Learning)

七. Pytorch实现⭐

class Expert(nn.Module):

def __init__(self,input_dim,output_dim): #input_dim代表输入维度,output_dim代表输出维度

super(Expert, self).__init__()

p=0

expert_hidden_layers = [64,32]

self.expert_layer = nn.Sequential(

nn.Linear(input_dim, expert_hidden_layers[0]),

nn.ReLU(),

nn.Dropout(p),

nn.Linear(expert_hidden_layers[0], expert_hidden_layers[1]),

nn.ReLU(),

nn.Dropout(p),

nn.Linear(expert_hidden_layers[1],output_dim),

nn.ReLU(),

nn.Dropout(p)

)

def forward(self, x):

out = self.expert_layer(x)

return out

class Expert_Gate(nn.Module):

def __init__(self,feature_dim,expert_dim,n_expert,n_task,use_gate=True): #feature_dim:输入数据的维数 expert_dim:每个神经元输出的维数 n_expert:专家数量 n_task:任务数(gate数) use_gate:是否使用门控,如果不使用则各个专家取平均

super(Expert_Gate, self).__init__()

self.n_task = n_task

self.use_gate = use_gate

'''专家网络'''

for i in range(n_expert):

setattr(self, "expert_layer"+str(i+1), Expert(feature_dim,expert_dim))

self.expert_layers = [getattr(self,"expert_layer"+str(i+1)) for i in range(n_expert)]#为每个expert创建一个DNN

'''门控网络'''

for i in range(n_task):

setattr(self, "gate_layer"+str(i+1), nn.Sequential(nn.Linear(feature_dim, n_expert),

nn.Softmax(dim=1)))

self.gate_layers = [getattr(self,"gate_layer"+str(i+1)) for i in range(n_task)]#为每个gate创建一个lr+softmax

def forward(self, x):

if self.use_gate:

# 构建多个专家网络

E_net = [expert(x) for expert in self.expert_layers]

E_net = torch.cat(([e[:,np.newaxis,:] for e in E_net]),dim = 1) # 维度 (bs,n_expert,expert_dim)

# 构建多个门网络

gate_net = [gate(x) for gate in self.gate_layers] # 维度 n_task个(bs,n_expert)

# towers计算:对应的门网络乘上所有的专家网络

towers = []

for i in range(self.n_task):

g = gate_net[i].unsqueeze(2) # 维度(bs,n_expert,1)

tower = torch.matmul(E_net.transpose(1,2),g)# 维度 (bs,expert_dim,1)

towers.append(tower.transpose(1,2).squeeze(1)) # 维度(bs,expert_dim)

else:

E_net = [expert(x) for expert in self.expert_layers]

towers = sum(E_net)/len(E_net)

return towers

class MMoE(nn.Module):

#feature_dim:输入数据的维数 expert_dim:每个神经元输出的维数 n_expert:专家数量 n_task:任务数(gate数)

def __init__(self,feature_dim,expert_dim,n_expert,n_task,use_gate=True):

super(MMoE, self).__init__()

self.use_gate = use_gate

self.Expert_Gate = Expert_Gate(feature_dim=feature_dim,expert_dim=expert_dim,n_expert=n_expert,n_task=n_task,use_gate=use_gate)

'''Tower1'''

p1 = 0

hidden_layer1 = [64,32] #[64,32]

self.tower1 = nn.Sequential(

nn.Linear(expert_dim, hidden_layer1[0]),

nn.ReLU(),

nn.Dropout(p1),

nn.Linear(hidden_layer1[0], hidden_layer1[1]),

nn.ReLU(),

nn.Dropout(p1),

nn.Linear(hidden_layer1[1], 1))

'''Tower2'''

p2 = 0

hidden_layer2 = [64,32]

self.tower2 = nn.Sequential(

nn.Linear(expert_dim, hidden_layer2[0]),

nn.ReLU(),

nn.Dropout(p2),

nn.Linear(hidden_layer2[0], hidden_layer2[1]),

nn.ReLU(),

nn.Dropout(p2),

nn.Linear(hidden_layer2[1], 1))

def forward(self, x):

towers = self.Expert_Gate(x)

if self.use_gate:

out1 = self.tower1(towers[0])

out2 = self.tower2(towers[1])

else:

out1 = self.tower1(towers)

out2 = self.tower2(towers)

return out1,out2

Model = MMoE(feature_dim=112,expert_dim=32,n_expert=4,n_task=2,use_gate=True)

nParams = sum([p.nelement() for p in Model.parameters()])

print('* number of parameters: %d' % nParams)

输入数据格式为(batchsize,feature_dim),输出为(batchsize,2)。

在原文中作者构造了可以控制任务相关性的人工数据集,我搜遍全网都没找到人工数据集的创建方式,于是自己写了一个分享给大家:MMoE论文中Synthetic Data生成代码(控制多任务学习中任务之间的相关性)