Deep Learning (4): License Plate Recognition with a Deep Convolutional Network

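Objective: recognize license plates with a deep convolutional neural network trained with the CTC loss function. A recognition model is trained on a license plate dataset, its performance is validated, and the model is then applied to new images.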

I. Principles

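- Understand how deep convolutional neural networks are built and the basic principles behind them

- Become familiar with the usual training workflow of object recognition algorithms

- Understand the basic principle of the CTC loss function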

CTC stands for Connectionist Temporal Classification. The method mainly addresses the alignment problem, where the labels and the outputs of a neural network are not aligned with each other. Reference blog: "CTC loss原理详解大全" (@you_123's blog, CSDN).
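To make the alignment problem concrete: a CTC network emits one symbol per time step, including a special blank, and many frame-level paths map to the same label. The sketch below is a plain-Python illustration of the standard CTC collapse rule (merge consecutive repeats, then drop blanks). The function name `ctc_collapse` and the `-` blank symbol are chosen here for illustration only and do not appear in the original code.

```python
def ctc_collapse(path, blank="-"):
    """Map a frame-level CTC path to its label sequence."""
    out = []
    prev = None
    for symbol in path:
        # Keep a symbol only when it differs from the previous frame
        # and is not the blank
        if symbol != prev and symbol != blank:
            out.append(symbol)
        prev = symbol
    return "".join(out)


# Different alignments of the same 3-character label over 8 frames
print(ctc_collapse("AA-B--CC"))  # ABC
print(ctc_collapse("-A-BB-C-"))  # ABC
# A blank between repeats keeps a genuine double letter
print(ctc_collapse("A-AB"))      # AAB
```

The CTC loss sums the probability of every path that collapses to the target label, which is why no per-frame alignment has to be annotated.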

II. Data Exploration

1. Install libraries

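- scikit-image is an image processing package built on scipy. It handles images as numpy arrays, much as MATLAB handles them as matrices. Because scikit-image depends on numpy, numpy and scipy must be installed, along with matplotlib for displaying images.

- OpenCV-Python is the Python binding for OpenCV, which is implemented in C++. OpenCV-Python uses Numpy, a highly optimized library for array operations with MATLAB-style syntax. All OpenCV array structures are converted to Numpy arrays, which also makes integration with other Numpy-based libraries (such as SciPy and Matplotlib) easier.

- torchvision is PyTorch's vision library. It serves the PyTorch deep learning framework and is mainly used to build computer vision models. Reference: "torchvision详细介绍" (Fighting_1997's blog, CSDN).

- torchsummary shows the input and output shapes of a model, giving a clearer view of its structure.

- torchviz visualizes the model.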
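Because both scikit-image and OpenCV return images as NumPy arrays in (height, width, channels) order while PyTorch convolution layers expect channels first, the axis layout is worth checking early. The sketch below uses a synthetic array as a stand-in for a loaded image (an assumption, so that no file is needed) and applies the same `transpose((2,1,0))` that the dataset class in this post uses.

```python
import numpy as np

# Synthetic stand-in for an image returned by skimage.io.imread:
# 40 pixels high, 160 pixels wide, 3 color channels
image = np.zeros((40, 160, 3), dtype=np.uint8)
print(image.shape)  # (40, 160, 3)

# Reverse the axis order, as the dataset class in this post does
chw = image.transpose((2, 1, 0))
print(chw.shape)  # (3, 160, 40)

# Add a leading batch axis before feeding a single image to a model
batch = chw[np.newaxis, ...]
print(batch.shape)  # (1, 3, 160, 40)
```

Note that this transpose yields (channels, width, height), so the plate's long side ends up on the first spatial axis.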

    # Install the packages; restart the kernel once installation finishes
    !pip3 install scikit-image
    !pip3 install opencv-python
    !pip3 install torchvision
    !pip3 install torchsummary
    !pip3 install torchviz

2. Import libraries

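- tqdm is a fast, extensible progress bar module. Reference: "tqdm 简介及正确的打开方式" (、Edgar's blog, CSDN).

- PyTorch packages the optimization methods commonly used in deep learning in torch.optim. Its design is flexible and can easily be extended with custom optimization methods.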

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.utils.data import Dataset, DataLoader
    from tqdm import tqdm
    import numpy as np
    import cv2
    import matplotlib.pyplot as plt
    import os
    from skimage import io
    %matplotlib inline

3. Prepare the data

# Prepare the data: download it from OSS and unzip it into the current directory

import oss2

access_key_id = os.getenv('OSS_TEST_ACCESS_KEY_ID', 'LTAI4G1MuHTUeNrKdQEPnbph')

access_key_secret = os.getenv('OSS_TEST_ACCESS_KEY_SECRET', 'm1ILSoVqcPUxFFDqer4tKDxDkoP1ji')

bucket_name = os.getenv('OSS_TEST_BUCKET', 'mldemo')

endpoint = os.getenv('OSS_TEST_ENDPOINT', 'https://oss-cn-shanghai.aliyuncs.com')

# Create the Bucket object; all Object-related APIs are accessed through it

bucket = oss2.Bucket(oss2.Auth(access_key_id, access_key_secret), endpoint, bucket_name)

# Download to a local file

bucket.get_object_to_file('data/c12/number_plate_data.zip', 'number_plate_data.zip')

!unzip -q -o number_plate_data.zip

!rm -rf __MACOSX

# Training set path

data_dir_train = 'gen_res'

# Validation set path

data_dir_val = 'gen_res_val'

4. Visualization

Randomly pick 18 images from the training set and display them:

    import os
    import numpy as np
    import matplotlib.pyplot as plt
    import random

    # Randomly choose 18 images (random.choices samples with replacement)
    train_folder = data_dir_train+"/15/"
    images = random.choices(os.listdir(train_folder), k=18)
    fig = plt.figure(figsize=(20, 10))
    # 6 columns
    columns = 6
    # 3 rows
    rows = 3
    # Display the images one by one
    for x, i in enumerate(images):
        path = os.path.join(train_folder,i)
        img = plt.imread(path)
        fig.add_subplot(rows, columns, x+1)
        plt.imshow(img)
    plt.show()

III. Model Training

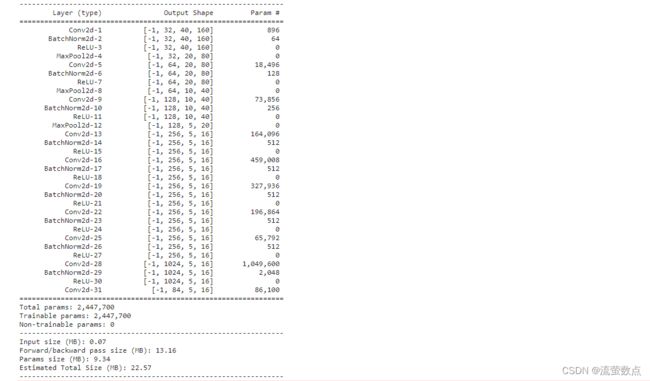

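1. Build the deep convolutional network

- Module is the model-building class provided by the nn module and the base class of all neural network modules; we subclass it to define the model we want.

- forward defines the model's forward computation, that is, how the model output is computed from the input x.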
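Before reading the class that follows, it can help to trace the tensor shapes by hand. The sketch below applies the usual output-size formula floor((n + 2p - k) / s) + 1 to the layer settings used in the model, assuming the (3, 160, 40) input layout produced by the dataset class in this post. The helper name `conv_out` is hypothetical and only serves this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# Spatial size assumed from the dataset class in this post: 160 x 40
h, w = 160, 40

# Three blocks of 3x3 conv (padding 1, size preserved) + 2x2 max pooling
for _ in range(3):
    h, w = conv_out(h, 3, padding=1), conv_out(w, 3, padding=1)
    h, w = conv_out(h, 2, stride=2), conv_out(w, 2, stride=2)
print(h, w)  # 20 5

# The (1, 5) convolution without padding collapses the second axis to 1
h, w = conv_out(h, 1), conv_out(w, 5)
print(h, w)  # 20 1

# Four parallel branches of 256 channels each are concatenated
channels = 4 * 256
print(channels, h, w)  # 1024 20 1
```

Under these assumptions the network ends with 20 positions along the first spatial axis, which serve as the time steps for the CTC loss.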

    class Model(nn.Module):
        def __init__(self):
            super().__init__()
            # Three conv + batch norm + ReLU + max-pool blocks
            self.conv0 = nn.Conv2d(3, 32, 3, stride=1, padding=1)
            self.bn0 = nn.BatchNorm2d(32)
            self.relu0 = nn.ReLU()
            self.pool0 = nn.MaxPool2d(2, stride=2)
            self.conv1 = nn.Conv2d(32, 64, 3, stride=1, padding=1)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu1 = nn.ReLU()
            self.pool1 = nn.MaxPool2d(2, stride=2)
            self.conv2 = nn.Conv2d(64, 128, 3, stride=1, padding=1)
            self.bn2 = nn.BatchNorm2d(128)
            self.relu2 = nn.ReLU()
            self.pool2 = nn.MaxPool2d(2, stride=2)
            self.conv2d_1 = nn.Conv2d(128, 256, (1,5), stride=1)
            self.batch_normalization_1 = nn.BatchNorm2d(256)
            self.activation_1 = nn.ReLU()
            # --------------------
            # Four parallel branches with different kernel heights
            self.conv2d_2 = nn.Conv2d(256, 256, (7,1), stride=1, padding=(3,0))
            self.batch_normalization_2 = nn.BatchNorm2d(256)
            self.activation_2 = nn.ReLU()
            self.conv2d_3 = nn.Conv2d(256, 256, (5,1), stride=1, padding=(2,0))
            self.batch_normalization_3 = nn.BatchNorm2d(256)
            self.activation_3 = nn.ReLU()
            self.conv2d_4 = nn.Conv2d(256, 256, (3,1), stride=1, padding=(1,0))
            self.batch_normalization_4 = nn.BatchNorm2d(256)
            self.activation_4 = nn.ReLU()
            self.conv2d_5 = nn.Conv2d(256, 256, (1,1), stride=1)
            self.batch_normalization_5 = nn.BatchNorm2d(256)
            self.activation_5 = nn.ReLU()
            # -----------------
            # 1x1 convolutions: fuse the branches, then classify into 84 labels
            self.conv_1024_11 = nn.Conv2d(1024, 1024, 1, stride=1)
            self.batch_normalization_6 = nn.BatchNorm2d(1024)
            self.activation_6 = nn.ReLU()
            self.conv_class_11 = nn.Conv2d(1024, 84, 1, stride=1)

        def forward(self, x):
            x = self.pool0(self.relu0(self.bn0(self.conv0(x))))
            x = self.pool1(self.relu1(self.bn1(self.conv1(x))))
            x = self.pool2(self.relu2(self.bn2(self.conv2(x))))
            x = self.activation_1(self.batch_normalization_1(self.conv2d_1(x)))
            x2 = self.activation_2(self.batch_normalization_2(self.conv2d_2(x)))
            x3 = self.activation_3(self.batch_normalization_3(self.conv2d_3(x)))
            x4 = self.activation_4(self.batch_normalization_4(self.conv2d_4(x)))
            x5 = self.activation_5(self.batch_normalization_5(self.conv2d_5(x)))
            # Concatenate the four branches along the channel axis (4 x 256 = 1024)
            x = torch.cat([x2, x3, x4, x5], dim=1)
            x = self.activation_6(self.batch_normalization_6(self.conv_1024_11(x)))
            x = self.conv_class_11(x)
            # Per-position class probabilities over the 84 labels
            x = torch.softmax(x, dim=1)
            return x

2. Define the dataset

    # Label vocabulary for recognition; the last entry "" is the CTC blank
    vocabulary = ["京", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙", "皖", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂",
                  "琼", "川", "贵", "云", "藏", "陕", "甘", "青", "宁", "新", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9", "A",
                  "B", "C", "D", "E", "F", "G", "H", "J", "K", "L", "M", "N", "P", "Q", "R", "S", "T", "U", "V", "W", "X",
                  "Y", "Z","港","学","使","警","澳","挂","军","北","南","广","沈","兰","成","济","海","民","航","空", ""]

- Python's magic method __getitem__ lets an object be indexed, which also makes it iterable, so the object can be traversed with for...in...
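As a minimal sketch of that behavior, the hypothetical `Squares` class below (not part of the original code) defines only `__len__` and `__getitem__`, yet a `for` loop can still walk over it: Python calls `__getitem__` with 0, 1, 2, ... until an `IndexError` is raised.

```python
class Squares:
    """Hypothetical container exposing the first n square numbers."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        # Raising IndexError is what tells a for loop to stop
        if not 0 <= idx < self.n:
            raise IndexError(idx)
        return idx * idx


squares = Squares(4)
print(squares[2])            # 4
print([s for s in squares])  # [0, 1, 4, 9]
```

A dataset class that indexes into a Python list inside `__getitem__` gets the terminating `IndexError` from the list itself.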

    # Dataset class that reads the license plate images
    class CarnumberDataset(Dataset):
        def __init__(self, data_dir):
            # Collect every file from each subdirectory of data_dir
            files = []
            for d in os.listdir(data_dir):
                for d2 in os.listdir(os.path.join(data_dir, d)):
                    files.append(os.path.join(data_dir, d, d2))
            self.files = files

        def __len__(self):
            return len(self.files)

        def __getitem__(self, idx):
            img_name = self.files[idx]
            image = io.imread(img_name)
            # Resize to 160 wide x 40 high, then reorder (H, W, C) -> (C, W, H)
            image = cv2.resize(image, (160,40)).transpose((2,1,0))
            # The file name (without extension) is the plate text
            text = os.path.basename(img_name).split('.')[0]
            # np.long was removed from recent NumPy releases; use np.int64
            carnumber = np.zeros((len(text)), dtype=np.int64)
            for i, char in enumerate(text):
                carnumber[i] = vocabulary.index(char)
            return image, carnumber

3. Load the data

    dataset_train = CarnumberDataset(data_dir_train)
    # Build the training data loader: samples are shuffled, batch size is 4
    dataloader_train = DataLoader(dataset_train, batch_size=4, shuffle=True, num_workers=0, drop_last=True)

    dataset_val = CarnumberDataset(data_dir_val)
    # Build the validation data loader: samples are shuffled, batch size is 4
    dataloader_val = DataLoader(dataset_val, batch_size=4, shuffle=True, num_workers=0, drop_last=True)

4. Define utility functions

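    # Merge consecutive repeated labels in a CTC output sequence
    def remove_connective_duplicate(src):
        last = None
        out = []
        for c in src.tolist():
            if last is None or last != c:
                last = c
                out.append(c)
        return np.array(out)

    # Decode label indices into text with the vocabulary
    # (the blank label maps to "" and therefore disappears)
    def decode(output):
        return ''.join(list(map(lambda i: vocabulary[i], output)))

    # Decode a (classes, time steps) probability matrix into the recognized text:
    # take the most likely label at each time step, then merge repeats
    def decode_prob(prob):
        return decode(remove_connective_duplicate(prob.argmax(axis=0)))

5. Train the model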

#定义模型结构

model = Model()

#选择Adam作为学习率优化器

optimizer = optim.Adam(model.parameters(), lr=0.0001)

#选择CTC损失

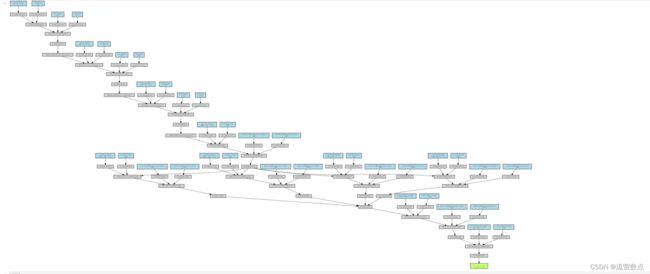

criterion = nn.CTCLoss(blank=len(vocabulary)-1)- 调用make_dot()函数构造图对象 ,可视化模型。参考pytorch 网络结构可视化方法汇总(三种实现方法详解)_LoveMIss-Y的博客-CSDN博客_pytorch网络结构可视化

#输出模型结构

from torchviz import make_dot

from torchsummary import summary

summary(model,(3, 40, 160))

x = torch.zeros(1, 3, 40, 160, dtype=torch.float, requires_grad=False)

out = model(x)

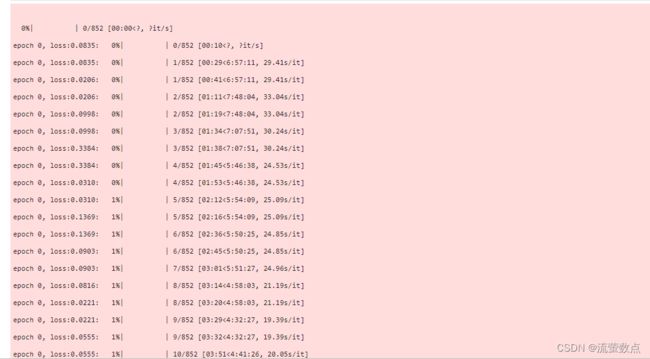

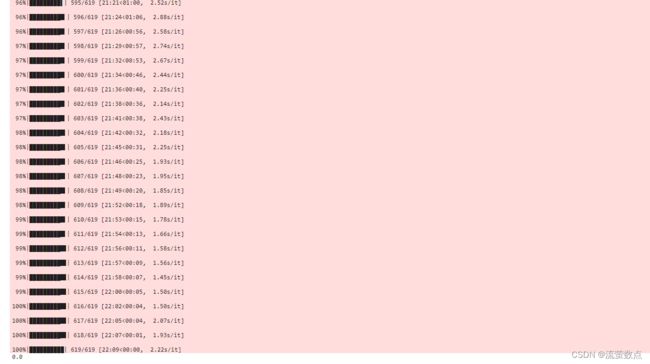

make_dot(out, params=dict(model.named_parameters()), show_attrs=True, show_saved=True)#模型训练

    model = model.train()
    # Training loss history
    loss_history = []
    for epoch in range(10):
        pbar = tqdm(dataloader_train)
        for batch_image, batch_carnumber in pbar:
            batch_image = batch_image.float()
            # Drop the trailing singleton axis: (N, C, T, 1) -> (N, C, T)
            batch_output = model(batch_image).squeeze()
            # CTCLoss takes log-probabilities shaped (T, N, C), the targets,
            # and the input and target lengths of every sample
            loss = criterion(batch_output.permute(2,0,1).log(),
                             batch_carnumber,
                             torch.tensor([batch_output.shape[2]]*batch_output.shape[0]),
                             torch.tensor([batch_carnumber.shape[1]]*batch_carnumber.shape[0]))
            pbar.set_description(f'epoch {epoch}, loss:{loss.item():.4f}')
            loss_history.append(loss.item())
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
    # Save the model
    torch.save(model, 'model-lastest.pth')

6. Validate the model

    # Switch the model to evaluation mode
    model = model.eval()
    # Count exact matches to compute the accuracy
    correct = 0
    for sample in tqdm(dataset_val):
        sample_image = sample[0]
        sample_carnumber = sample[1]
        output = decode_prob(model(torch.tensor(sample_image[np.newaxis,...]).float())[0].squeeze().detach().numpy())
        truth = decode(sample_carnumber)
        if output == truth:
            correct += 1
    # Print the accuracy
    print(correct/len(dataset_val))

IV. Model Application

1. Load the model

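    # Load the model
    # (on PyTorch 2.6 and later, torch.load defaults to weights_only=True, so loading
    # a fully pickled model like this one requires passing weights_only=False)
    model = torch.load('model-lastest.pth',map_location="cpu")
    # Make sure every convolution layer has padding_mode set
    for m in model.modules():
        if 'Conv' in str(type(m)):
            setattr(m, 'padding_mode', 'zeros')

2. Define how the model is used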

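- Python's built-in eval() turns a string into a valid expression, evaluates it, and returns the result.

- Its signature is eval(expression, globals=None, locals=None). The eval(file) function defined in this section is a separate helper for running the model on an image file; it shadows the built-in of the same name.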
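Python's built-in `eval` and a user-defined function named `eval` can coexist, but the user-defined one takes over the name within the module. A small sketch of that behavior follows; the helper body here is a hypothetical stand-in and does not run any model.

```python
import builtins


def eval(file):
    """Hypothetical stand-in for a helper that happens to be named eval."""
    return f"recognize({file})"


# In this module the name eval now refers to the helper
print(eval("plate.jpg"))  # recognize(plate.jpg)

# The built-in is still reachable through the builtins module
print(builtins.eval("1 + 2"))  # 3
```

Choosing a different name, such as `predict`, would avoid the shadowing altogether; the name is kept in this post to match the calls that follow.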

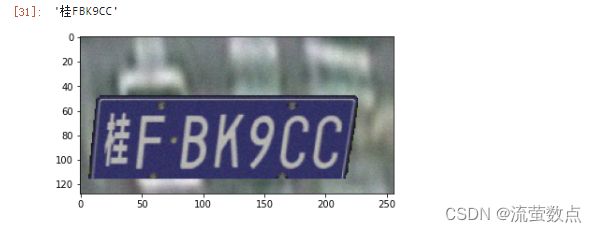

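    # Define how the model is applied to a single image file
    def eval(file):
        image = io.imread(file)
        # Display the input image
        plt.imshow(image)
        # Same preprocessing as in the dataset class
        image = cv2.resize(image, (160,40)).transpose((2,1,0))
        output = model(torch.tensor(image[np.newaxis,...]).float())[0].squeeze().detach().numpy()
        return decode_prob(output)

    eval('gen_res_val/29/宁C0B5GP.jpg')

    eval('gen_res_val/21/琼DXQ66B.jpg')

    eval('gen_res_val/20/桂FBK9CC.jpg')

This experiment models license plate data with a deep convolutional network: a recognition model is trained on the raw images and then validated and applied on test license plates.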