(17) Counting a model's parameters (params) and floating-point operations (FLOPs) in PyTorch

Method 1. Using summary (from torchsummary)

The working test.py is as follows:

    import torch
    from torchsummary import summary
    from nets.yolo4 import YoloBody

    if __name__ == "__main__":
        # Use device to choose whether the network runs on the GPU or the CPU
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        model = YoloBody(3, 80, backbone="mobilenetv2", phi=0).to(device)
        summary(model, input_size=(3, 416, 416))

This prints the network structure and the parameter count, including the number of trainable parameters.
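As a cross-check on the totals that summary reports, the parameter counts can also be computed directly from the model's parameters. The sketch below is a minimal illustration: `count_parameters` and the toy model are hypothetical names introduced here, not part of torchsummary or of the YoloBody code.

```python
from typing import Tuple

import torch.nn as nn


def count_parameters(model: nn.Module) -> Tuple[int, int]:
    """Return (total, trainable) parameter counts for a model."""
    total = sum(p.numel() for p in model.parameters())
    trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
    return total, trainable


# Hypothetical toy model, used only for illustration (not YoloBody).
toy = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3),  # 3*8*3*3 weights + 8 biases = 224
    nn.ReLU(),                       # no parameters
    nn.Conv2d(8, 4, kernel_size=1),  # 8*4*1*1 weights + 4 biases = 36
)

# Freeze the second convolution so the total and trainable counts differ.
for p in toy[2].parameters():
    p.requires_grad = False

total, trainable = count_parameters(toy)
print(total, trainable)  # 260 224
```

The same function can be called on any `nn.Module`, so it should give numbers comparable to the "Total params" and "Trainable params" lines of the summary output.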

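Method 2. Using torchstat

Problem encountered: RuntimeError: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same

Analysis: the input is on the CPU while the model was moved to the GPU; the two must be on the same device.

Fix: keep both on the CPU.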
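The device mismatch described above is a general PyTorch issue, not specific to torchstat. A minimal sketch of one way to avoid it in your own code is to move the input to whatever device holds the model's weights before the forward pass. The helper `run_on_model_device` and the small convolution standing in for YoloBody are assumptions made for illustration.

```python
import torch
import torch.nn as nn


def run_on_model_device(model: nn.Module, x: torch.Tensor) -> torch.Tensor:
    """Move x to the device that holds the model's weights, then run a forward pass."""
    # Assumes the model has at least one parameter.
    device = next(model.parameters()).device
    with torch.no_grad():
        return model(x.to(device))


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
toy = nn.Conv2d(3, 8, kernel_size=3).to(device)  # hypothetical stand-in for YoloBody
x = torch.randn(1, 3, 32, 32)                    # tensors are created on the CPU by default
out = run_on_model_device(toy, x)
print(out.shape, out.device)
```

With a tool that builds its own input on the CPU, this helper does not apply, and leaving the model on the CPU (as done here) is the simpler fix.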

The working test.py is as follows:

import torch

from torchsummary import summary

from torchstat import stat

from nets.yolo4 import YoloBody

if __name__ == "__main__":

# 需要使用device来指定网络在GPU还是CPU运行

# device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# model = YoloBody(3,80,backbone="mobilenetv2",phi=0).to(device)

model = YoloBody(3, 80, backbone="mobilenetv2", phi=0)

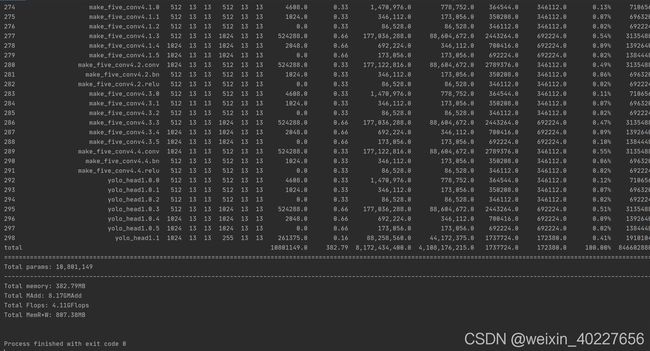

stat(model, (3, 416, 416))运行结果如下:

References:

Viewing a network model's parameter count (params), FLOPs, and more in PyTorch

RuntimeError: Input type (torch.cuda.FloatTensor) and weight type (torch.FloatTensor) should be the

Method 3. Using thop

Reference: Computing the FLOPs of your own model in PyTorch | the thop.profile() method

First attempt at test.py:

    import torch
    from thop import profile
    from nets.yolo4 import YoloBody

    if __name__ == "__main__":
        # Device selection is disabled here so that the model stays on the CPU
        # device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        # model = YoloBody(3,80,backbone="mobilenetv2",phi=0).to(device)
        model = YoloBody(3, 80, backbone="mobilenetv2", phi=0)
        # input = torch.randn(1, 3, 416, 416)
        input = [1, 3, 416, 416]  # bug: a list of dimensions, not a tensor
        flops, params = profile(model, inputs=(input, ))
        print(flops)
        print(params)

Error: TypeError: conv2d(): argument 'input' (position 1) must be Tensor, not list

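Fix: change input so that it is a tensor of the required shape rather than a list of dimensions.

The working test.py is as follows: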

    import torch
    from thop import profile
    from nets.yolo4 import YoloBody

    if __name__ == "__main__":
        # Device selection is disabled here so that the model stays on the CPU
        # device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        # model = YoloBody(3,80,backbone="mobilenetv2",phi=0).to(device)
        model = YoloBody(3, 80, backbone="mobilenetv2", phi=0)
        # A random tensor with batch size 1, 3 channels, and 416x416 resolution
        input = torch.randn(1, 3, 416, 416)
        flops, params = profile(model, inputs=(input, ))
        print(flops)
        print(params)
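To get a feel for what these numbers mean, the cost of a single convolution can be worked out by hand. The sketch below uses the standard formulas for a square-kernel, ungrouped 2D convolution; the layer configuration is hypothetical and the function names are introduced here for illustration. As far as I know, thop counts multiply-accumulate operations (MACs), so the value printed as flops above may be roughly half of a FLOPs figure that counts multiplies and adds separately. Treat that as an assumption to check against your installed version.

```python
def conv2d_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_params(c_in: int, c_out: int, kernel: int, bias: bool = True) -> int:
    """Parameter count of a square-kernel Conv2d with groups=1."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def conv2d_macs(c_in: int, c_out: int, kernel: int, h_out: int, w_out: int) -> int:
    """Multiply-accumulate count: one kernel-sized dot product per output element."""
    return c_in * kernel * kernel * c_out * h_out * w_out


# Hypothetical first layer: 3 -> 32 channels, 3x3 kernel, stride 2, padding 1, no bias,
# applied to a 416x416 input as in the examples above.
h_out = conv2d_output_size(416, kernel=3, stride=2, padding=1)  # 208
params = conv2d_params(3, 32, kernel=3, bias=False)             # 864
macs = conv2d_macs(3, 32, kernel=3, h_out=h_out, w_out=h_out)   # 37380096

print(h_out, params, macs, 2 * macs)  # 208 864 37380096 74760192
```

Summing these per-layer values over a whole network is essentially what the profiling tools automate.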