1. Preparation: first calibrate the camera intrinsics

%YAML:1.0
---
model_type: KANNALA_BRANDT
camera_name: camera
scaling_ratio: 1
image_width: 640
image_height: 480
projection_parameters:
   k2: -0.02059949
   k3: -0.00272535
   k4: -0.00411149
   k5: 0.00134326
   mu: 338.79350848
   mv: 338.75216467
   u0: 334.20126771
   v0: 234.73275102
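To make the meaning of these parameters concrete, here is a minimal sketch of how a KANNALA_BRANDT (equidistant fisheye) model maps a 3D point in the camera frame to a pixel. It assumes the usual polynomial form r(θ) = θ + k2·θ³ + k3·θ⁵ + k4·θ⁷ + k5·θ⁹. The names `KannalaBrandtParams`, `Pixel`, `projectKannalaBrandt` and `exampleParams` are hypothetical helpers written for this illustration and are not part of camodocal.

```cpp
#include <cmath>

// Hypothetical container for the values under projection_parameters.
struct KannalaBrandtParams {
    double k2, k3, k4, k5;  // polynomial distortion coefficients
    double mu, mv;          // focal lengths in pixels
    double u0, v0;          // principal point in pixels
};

struct Pixel {
    double u;
    double v;
};

// Project a 3D point (x, y, z) in the camera frame to pixel coordinates.
// Assumption: r(theta) = theta + k2*theta^3 + k3*theta^5 + k4*theta^7 + k5*theta^9.
inline Pixel projectKannalaBrandt(const KannalaBrandtParams& p,
                                  double x, double y, double z) {
    // Angle between the incoming ray and the optical axis.
    const double theta = std::atan2(std::sqrt(x * x + y * y), z);
    // Direction of the ray around the optical axis.
    const double phi = std::atan2(y, x);
    // Evaluate the odd polynomial in Horner form.
    const double t2 = theta * theta;
    const double r =
        theta * (1.0 + t2 * (p.k2 + t2 * (p.k3 + t2 * (p.k4 + t2 * p.k5))));
    return {p.mu * r * std::cos(phi) + p.u0,
            p.mv * r * std::sin(phi) + p.v0};
}

// The calibration values listed above, copied verbatim.
inline KannalaBrandtParams exampleParams() {
    return {-0.02059949, -0.00272535, -0.00411149, 0.00134326,
            338.79350848, 338.75216467, 334.20126771, 234.73275102};
}
```

With these values, a point on the optical axis such as (0, 0, 1) has θ = 0 and therefore lands on the principal point (u0, v0).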

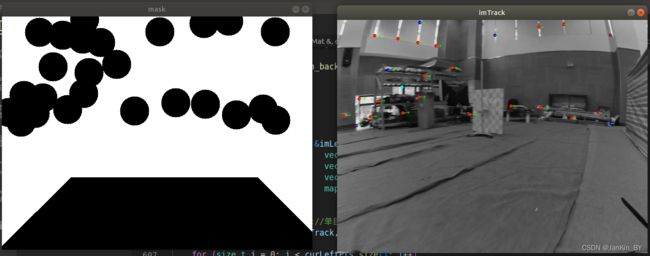

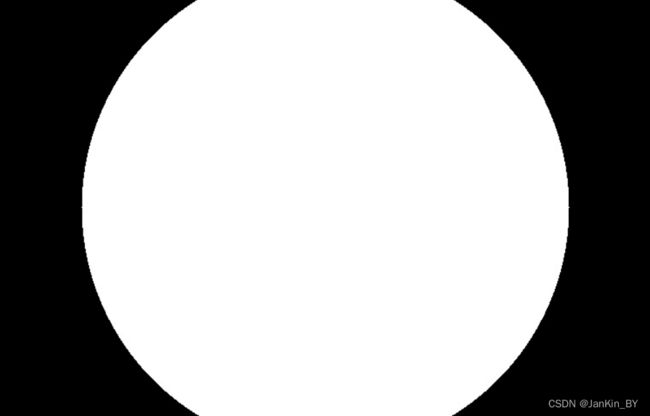

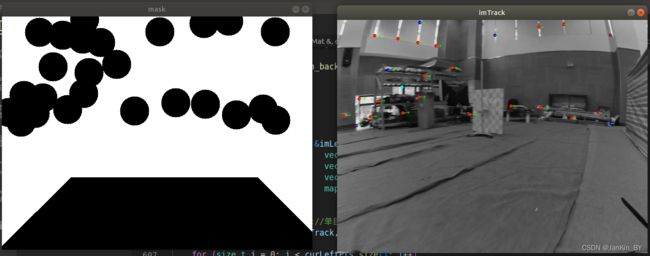

2. Add a mask

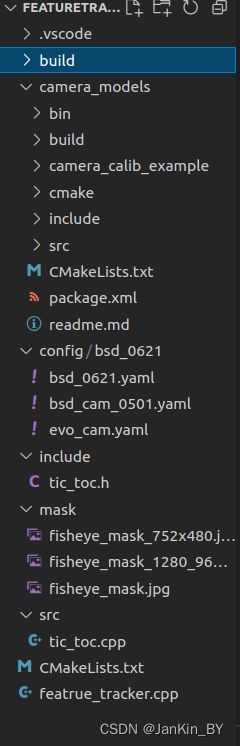

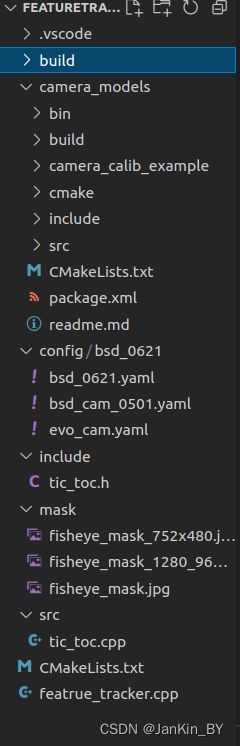
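The notes do not say how the mask was produced. As one possible approach, the sketch below builds a circular mask (255 inside the circle, 0 outside) the same size as the calibrated image and writes it as a binary PGM file. The circular shape, the radius, and the function names `makeCircularMask` and `writePgm` are assumptions made for this illustration. Adjust them to match the visible image area of your lens.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Build a row-major 8-bit mask: 255 inside the circle, 0 outside.
// (cx, cy) is the circle center in pixels; radius is a value you choose.
inline std::vector<unsigned char> makeCircularMask(int width, int height,
                                                   double cx, double cy,
                                                   double radius) {
    std::vector<unsigned char> mask(
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height), 0);
    const double r2 = radius * radius;
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            const double du = u - cx;
            const double dv = v - cy;
            if (du * du + dv * dv <= r2) {
                mask[static_cast<std::size_t>(v) * width + u] = 255;
            }
        }
    }
    return mask;
}

// Write the mask as a binary PGM (P5) image, readable by most image tools.
inline bool writePgm(const std::string& path,
                     const std::vector<unsigned char>& mask,
                     int width, int height) {
    std::ofstream out(path, std::ios::binary);
    if (!out) {
        return false;
    }
    out << "P5\n" << width << " " << height << "\n255\n";
    out.write(reinterpret_cast<const char*>(mask.data()),
              static_cast<std::streamsize>(mask.size()));
    return static_cast<bool>(out);
}
```

For the 640x480 image above, a call such as `makeCircularMask(640, 480, 334.2, 234.7, 230.0)` centers the circle on the calibrated principal point; the radius of 230 pixels is only a placeholder.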

3. Download the camera model files from https://github.com/hengli/camodocal

camera_models

Functions

#include

#include

#include

#include

#include

#include

#include

#include