KNN算法解决鸢尾花分类案例

KNN算法解决鸢尾花分类案例

本文分别通过KNN底层算法实现和sklearn中的KNeighbors Classifier(K近邻分类模拟)和对3中不同的鸢尾花的分类。

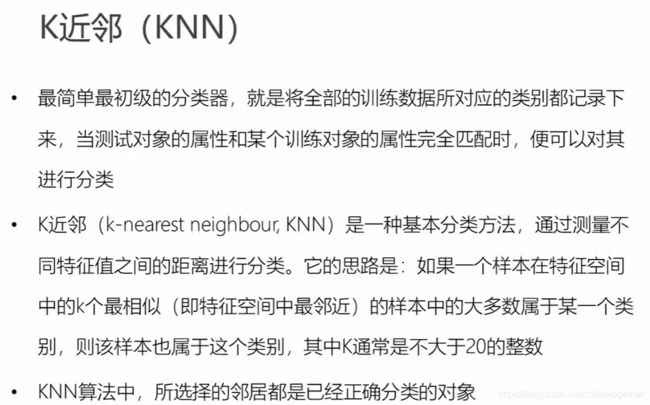

一、K近邻(KNN)算法介绍

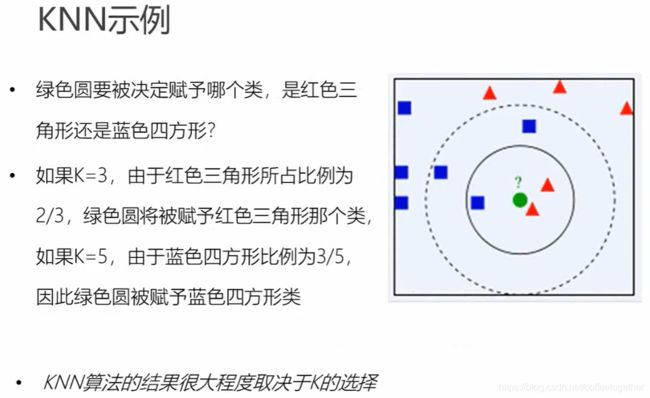

二、KNN举例说明

三、KNN举例计算

四、KNN算法实现

五、利用KNN算法实现鸢尾花分类案例

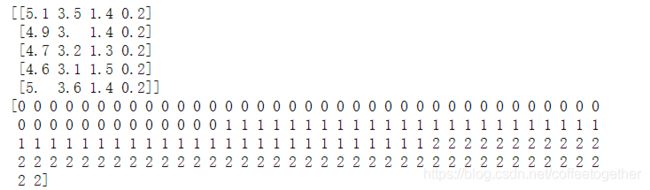

案例背景说明:数据为sklearn自带的,数据集共有150条,其中数据

data代表着鸢尾花的4个特征(花萼长度,花萼宽度,花瓣长度,花瓣宽度)。target表示鸢尾花的三种不同类型(setosa,versicolor,virginica)

通过KNN算法,将数据集按比例随机生成分成70%的训练集和30%测试集。

最后通过对比预测的结果与实际结果,并计算出预测准确率。

方案一:通过KNN底层算法实现

1.导入必要库

# 导入库

import numpy as np

import pandas as pd

# 导入sklearn的数据集

from sklearn.datasets import load_iris

# 切分数据集和训练集

from sklearn.model_selection import train_test_split

# 计算分类预测准确率

from sklearn.metrics import accuracy_score

2.查看鸢尾花的数据

iris = load_iris()

# 查看x的前5行数据

print(iris.data[:5])

# 查看结果y的前5行数据

print(iris.target)

3.对数据进行预处理

x = iris.data

y = iris.target.reshape(-1,1)

print(x.shape,y.shape)

4.将测试集与训练集分离

# 换分训练集和测试集

x_train,x_test,y_train,y_test = train_test_split(x,y,test_size=0.3,random_state=35,stratify = y)

print(x_train.shape,y_train.shape)

print(x_test.shape,y_test.shape)

5.核心算法实现

# 距离函数定义

# 曼哈顿距离

def l1_distance(a,b):

return np.sum(np.abs(a-b),axis=1)

def l2_distance(a,b):

return np.sqrt(np.sum((a-b)**2,axis=1))

# 分类器实现

class kNN(object):

# 定义初始化方法,初始化kNN需要的超参数

def __init__(self,n_neighbors = 1,dist_func = l1_distance):

self.n_neighbors = n_neighbors

self.dist_func = dist_func

# 训练模型方法

def fit(self,x,y):

# 将x,y传进来即可

self.x_train = x

self.y_train = y

# 模型预测方法

def predict(self,x):

# 初始化预测分类数组

y_pred = np.zeros((x.shape[0],1),dtype = self.y_train.dtype)

# 遍历输入的x数据点

for i,x_test in enumerate(x):

# x_test跟所有的训练数据计算距离

distances = self.dist_func(self.x_train,x_test)

# 得到的距离按照由近到远排序

nn_index = np.argsort(distances)

# 选取最近的k个点,保存其类别

nn_y = self.y_train[nn_index[:self.n_neighbors]].ravel()

# 统计类别中频率最高的那个,赋给y_pred[i]

y_pred[i] = np.argmax(np.bincount(nn_y))

return y_pred

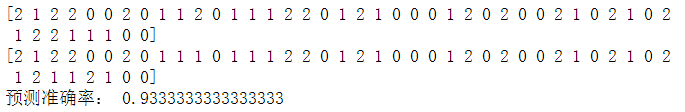

6.测试数据

# 定义一个knn实例

knn = kNN(n_neighbors = 3)

# 训练模型

knn.fit(x_train,y_train)

# 传入测试数据,做预测

y_pred = knn.predict(x_test)

print(y_test.ravel())

print(y_pred.ravel())

# 求准确率

accuracy = accuracy_score(y_test,y_pred)

print('预测准确率:',accuracy)

7.通过改变不同的k值和距离函数类型,得到更好的方案

# 定义一个knn实例

knn = kNN()

# 训练模型

knn.fit(x_train,y_train)

# 创建一个列表保存不同的准确率

result_list = []

for p in [1,2]:

knn.dist_func = l1_distance if p == 1 else l2_distance

# 考虑不同的k值

for k in range(1,10,2):

knn.n_neighbors = k

# 传入测试数据,做预测

y_pred = knn.predict(x_test)

# 求出预测准确率

accuracy = accuracy_score(y_test,y_pred)

result_list.append([k,'l1_distance' if p ==1 else 'l2_distance',accuracy])

df = pd.DataFrame(result_list,columns = ['k','距离函数','预测准确率'])

df

方案二:通过sklearn中的KNeighbors Classifier实现

1.导入必要库

from __future__ import print_function

# 导入数据集

from sklearn import datasets

from sklearn.model_selection import train_test_split

# 导入K近邻算法库

from sklearn.neighbors import KNeighborsClassifier

2.导入数据

iris = datasets.load_iris()

iris_X = iris.data

iris_y = iris.target

3.查看数据

# 查看data数的前5行

print(iris_X[:5])

# 查看分类结果

print(iris_y)

# 查看data和target的数据结构

print(iris_X.shape)

print(iris_y.shape)

2.分离数据(70%训练集和30%测试集)

# 将数据分成训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(

iris_X, iris_y, test_size=0.3) # 将数据集分开,测试集的数据量设置为30%,训练集为70%

3.创建KNN回归模型进行预测

# 分类

knn = KNeighborsClassifier()

knn.fit(X_train, y_train)

# 预测结果

print(knn.predict(X_test))

# 实际结果

print(y_test)

4.计算预测准确率

# 计算分类预测准确率

from sklearn.metrics import accuracy_score

# 求准确率

accuracy = accuracy_score(y_test,knn.predict(X_test))

print('预测准确率:',accuracy)