[Paper Translation] Learning from Few Samples: A Survey

Paper link: https://arxiv.org/abs/2007.15484

Abstract

Deep neural networks have been able to outperform humans in some cases, such as image recognition and image classification. However, with the emergence of various novel categories, continuously extending the learning capability of such networks from limited samples still remains a challenge. Techniques like meta-learning and/or few-shot learning have shown promising results: they can learn or generalize to a novel category/task based on prior knowledge. In this paper, we study the existing few-shot meta-learning techniques in the computer vision domain based on their methods and evaluation metrics. We provide a taxonomy for the techniques and categorize them as data-augmentation, embedding, optimization and semantics based learning for few-shot, one-shot and zero-shot settings. We then describe the seminal work done in each category and discuss their approaches to solving the predicament of learning from few samples. Lastly, we provide a comparison of these techniques on the commonly used benchmark datasets, Omniglot and MiniImagenet, along with a discussion of future directions for improving the performance of these techniques towards the final goal of outperforming humans.

1 Introduction

Artificial intelligence (AI) based systems are becoming a huge part of human life, both personal and professional. We are surrounded by AI-based machines and applications that are intended to make our lives easier, for example automatic mail filtering (spam detection), shopping-website recommendations, and social networking on smartphones [1], [2], [3], [4]. This impressive progress has been made possible by the breakthrough success of machine learning and deep learning models [5], which occupy a large part of the AI domain. Deep learning models are built from multiple layers of perceptrons whose parameters are trained with gradient-based optimization techniques. Two of the most common application areas of deep learning are computer vision (CV), where the goal is to teach machines to see and perceive things as humans do, and natural language processing (NLP) and natural language understanding (NLU), where the goal is to analyze and comprehend large amounts of natural language data. Deep learning models have achieved tremendous success in image recognition [6], [7], [8], speech recognition [9], [10], [11], [12], [13], natural language processing and understanding [14], [15], [16], [17], [18], video analytics [19], [20], [21], [22], [23], and cyber security [24], [25], [26], [27], [28], [29], [30]. The most common approach to machine and deep learning is supervised learning, where a large number of data samples for a particular application are collected together with their labels to form a dataset. This dataset is split into three parts: training, validation and testing. During the training phase, the model is fitted to the labelled training data using backpropagation and gradient-based optimization, while the validation set is used to tune hyperparameters and monitor generalization; the result is a learned hypothesis. During the testing phase, the testing data is fed to the model, and based on the learned hypothesis the model predicts the output class of each test sample.

The ability to handle large amounts of data, thanks to the power of modern computational systems [31], [32], has been exceptional. Together with advances in algorithms and models, this has allowed deep learning to match humans and, in some cases, outperform them. AlphaGo [33], an AI-based agent trained without any human guidance, was able to defeat the world champion of Go, an ancient board game considered to be 10 times more complicated than chess [34]. In DOTA, a complex and strategic multiplayer game, an AI agent was able to defeat human players [35]. For image recognition and classification, models like ResNet [6] and Inception [36], [37], [38] achieved better performance than humans on the popular ImageNet dataset, which consists of over 14 million images in over 1000 classes [39].

One of the ultimate goals of AI is to match or outperform humans in any given task. To achieve this goal, it is imperative to minimize the dependency on large, balanced, labelled datasets. Current models achieve successful results on tasks with tremendous amounts of labelled data; however, on the large variety of other tasks where labelled data is scarce (only a few samples), their performance drops significantly. It is unrealistic to expect a large balanced dataset for every task, because, given the nature of the many categories involved, it is nearly impossible to keep up with producing labelled data. Furthermore, generating labelled datasets requires resources such as time and human effort, and can be financially expensive. Humans, on the other hand, can quickly learn a new class or classes: given a photo of a strange animal, a person can easily identify that animal in a photo containing a variety of animals. Another advantage of humans over machines is the ability to learn new concepts or classes on the fly, whereas machines have to go through an expensive offline process of training and retraining the entire model to learn new classes, provided that labelled data is available. Researchers and developers are motivated to bridge this gap between humans and machines. As a potential solution to this problem, there has been an ever-increasing amount of work in meta-learning [40], [41], [42], [43], [44], [45], [46], [47], [48], [49], [50], few-shot learning [51], [52], [53], [54], low-shot learning [55], [56], [57], [58], and zero-shot learning [59], [60], [61], [62], [63], [64], [65], where the goal is to make the model generalize better to novel tasks consisting of few labelled samples.

1.1 What is Few-shot Learning and Meta-Learning

In few-shot, low-shot, or n-shot learning (where n is generally between 1 and 5), the basic idea is to train the model on a large number of data samples from multiple categories; during testing, the model is given novel categories (also referred to as the novel set) with only n labelled samples per category, and the number of categories is generally limited to five. In meta-learning, the goal is to generalize, or learn, the learning process itself: the model is trained on a set of training tasks, and a classifier function derived from this training is then applied to the novel set. The objective is to find the hyperparameters and model weights with which the model can easily adapt to novel tasks without over-fitting to any one of them. In meta-learning, two optimizations run simultaneously: one (the learner) learns the new task, and the other (the meta-learner) trains the learner. Recently, few-shot learning and meta-learning techniques have garnered interest and become a hot research topic, with a flurry of recent papers [66].
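
To make the n-shot test setting concrete, here is a minimal sketch that samples one N-way K-shot task (a support set plus a query set) from a labelled pool. The function name `sample_episode`, its parameters, and the re-indexing of class labels to 0..N-1 within each task are illustrative assumptions, not details taken from the survey.

```python
import random
from collections import defaultdict

def sample_episode(dataset, n_way=5, k_shot=1, n_query=1, rng=None):
    """Sample one N-way K-shot episode from a list of (sample, label) pairs.

    Returns (support, query), each a list of (sample, episode_label) pairs,
    where episode labels are re-indexed to 0..n_way-1.
    """
    rng = rng or random.Random()
    by_class = defaultdict(list)
    for x, y in dataset:
        by_class[y].append(x)
    # Keep only classes with enough samples for both the support and query sets.
    eligible = sorted(c for c, xs in by_class.items() if len(xs) >= k_shot + n_query)
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query samples")
    support, query = [], []
    for episode_label, c in enumerate(rng.sample(eligible, n_way)):
        xs = rng.sample(by_class[c], k_shot + n_query)  # disjoint support/query draws
        support += [(x, episode_label) for x in xs[:k_shot]]
        query += [(x, episode_label) for x in xs[k_shot:]]
    return support, query

# 10 classes with 6 samples each; draw a 5-way 1-shot episode with 2 queries per class.
data = [(f"img{c}_{i}", c) for c in range(10) for i in range(6)]
support, query = sample_episode(data, n_way=5, k_shot=1, n_query=2, rng=random.Random(0))
```

Drawing support and query samples in one `rng.sample` call guarantees the two sets never share a sample, which is what makes the query set a fair test of what was learned from the support set.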

Early work in meta-learning was done by Yoshua and Samy Bengio [67], and in few-shot learning by Fei-Fei Li [68]. Metric learning is one of the older techniques; its objective is to learn an embedding space in which images of the same category form a close cluster, whereas images from different categories lie far apart. Another popular approach is data augmentation, which generates more samples from the few samples available. Currently, semantics-based approaches are being extensively researched; in these, classification is based solely on the name of the category and its attributes. These semantics-based approaches are motivated by zero-shot learning applications.

1.2 Transfer Learning and Self-Supervised Learning

The overall objective of transfer learning is to learn knowledge or experience from a set of tasks and transfer it to a task in a similar domain [95]. The source task used to train the model has many labelled samples, whereas the target task has comparatively little labelled data, which is not enough to train the model from scratch and make it converge on that task; adapting the pretrained model to the target task is called fine-tuning. The performance of transfer learning depends on how related the two tasks are. In transfer learning, the classification layers are typically trained for the new task while the weights of the earlier layers of the model are kept frozen [96]. For every new task to which we transfer, the learning rate and the number of layers to freeze must be chosen manually. In contrast, meta-learning techniques can adapt to a new task rapidly and automatically.
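
The freeze-and-fine-tune recipe can be sketched in a few lines. This is a toy under stated assumptions: `frozen_backbone` is a hypothetical hand-written function standing in for pretrained layers (it is never updated), and only a logistic-regression head is trained on the target task with plain stochastic gradient descent; the learning rate and epoch count are arbitrary.

```python
import math

def frozen_backbone(x):
    # Hypothetical stand-in for pretrained layers; never updated below.
    return [x[0] + x[1], x[0] - x[1]]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(data, lr=0.5, epochs=200):
    """Fit only a logistic-regression head on top of frozen features."""
    feats = [(frozen_backbone(x), y) for x, y in data]  # features computed once
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for f, y in feats:
            g = sigmoid(w[0] * f[0] + w[1] * f[1] + b) - y  # dLoss/dz for log loss
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict_head(w, b, x):
    f = frozen_backbone(x)
    return int(w[0] * f[0] + w[1] * f[1] + b > 0)

# A tiny binary target task with only four labelled samples.
target = [((1.0, 0.0), 1), ((0.0, 1.0), 0), ((2.0, 0.5), 1), ((0.5, 2.0), 0)]
w, b = train_head(target)
```

Because the backbone features are computed once and reused, only the head's three parameters are learned; choosing how much of the network to freeze is exactly the manual decision the paragraph mentions.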

Self-supervised learning research has gained a lot of popularity recently [97], [98], [99]. Training a self-supervised learning (SSL) model involves two steps: first, the model is trained on a predefined pretext task using a large corpus of unlabelled data samples; second, the learned model parameters are used to train or fine-tune the model for the main downstream task. The idea behind meta-learning and few-shot learning techniques is quite similar: use prior knowledge to recognize, or fine-tune to, a novel task. Studies have shown that self-supervised learning can be combined with few-shot learning to boost the performance of the model on novel categories [100], [101].
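
As an illustration of the first SSL step, the sketch below turns unlabelled images into a labelled pretext dataset. It uses rotation prediction (Gidaris et al., 2018) purely as an example pretext task; the survey does not prescribe it, and the network that would learn to predict the rotation label is omitted. Images are nested lists of pixel values to keep the sketch dependency-free.

```python
def rotate90(img):
    """Rotate a 2-D list (rows of pixels) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def rotation_pretext(unlabelled):
    """Build a labelled pretext dataset from unlabelled images.

    Each image yields four (rotated image, label) pairs, where the label
    k in {0, 1, 2, 3} means "rotated by k * 90 degrees clockwise".
    """
    pairs = []
    for img in unlabelled:
        rotated = img
        for k in range(4):
            pairs.append((rotated, k))
            rotated = rotate90(rotated)
    return pairs

pretext = rotation_pretext([[[1, 2], [3, 4]]])
```

The labels come for free from the transformation itself, which is what lets the pretext task use a large unlabelled corpus before fine-tuning on the downstream task.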

1.3 Taxonomy and Organization

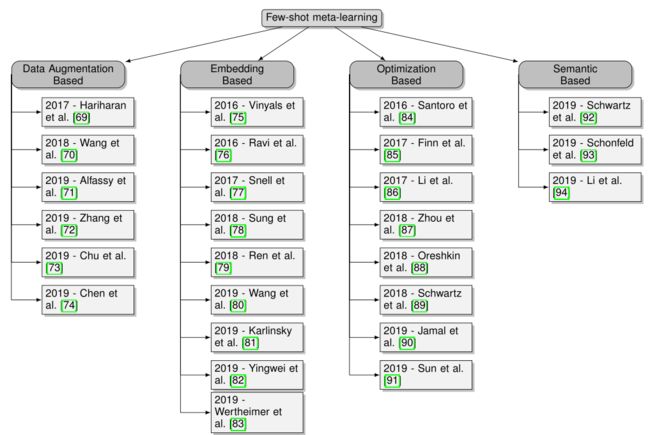

The main goal of meta-learning, few-shot, low-shot, one-shot and zero-shot learning techniques is to make a deep learning model generalize better to novel categories from a handful of samples, through iterative training based on prior knowledge or experience. Prior knowledge is the knowledge acquired by training on a labelled dataset with a large number of samples; the model then uses this knowledge to recognize novel tasks from only a limited number of samples. Therefore, in this paper we combine all these techniques under the umbrella of few-shot meta-learning. As there is no predefined taxonomy for these techniques, we classify them into four main categories: data-augmentation based, embedding (metric-learning) based, meta-optimization based, and semantics based (as illustrated in Figure 1). Data-augmentation based techniques are quite popular; the idea is to expand the prior knowledge by augmenting the few available samples to generate more diverse samples for training. In embedding-based techniques, data samples are transformed into a lower-dimensional embedding space and then classified based on the distances between these embeddings. In optimization-based techniques, a meta-optimizer is used to better generalize the model during the initial training, so that it can make better predictions on novel tasks. Semantics-based techniques use the semantics of the data, along with the prior knowledge, for the model to either learn or optimize for novel categories. Table 1 lists the commonly used symbols in the equations and algorithms throughout the rest of the paper, along with their meanings. Our contributions in this paper are summarized as follows:

• We analyze the seminal work in few-shot meta-learning (based on research published from 2016 to 2020), provide a taxonomy that categorizes the techniques into four categories (data augmentation based, embedding based, optimization based, and semantics based), and summarize the work done in each of the proposed categories.

• We compare the performance of the techniques in each category on two commonly used benchmark datasets, Omniglot and MiniImagenet, and discuss the limitations and possible future directions towards solving the problem of few-shot meta-learning.

Fig. 1: Taxonomy of few-shot meta-learning techniques. We classify these techniques into four categories: data augmentation, embedding, optimization and semantics based. Data augmentation based techniques augment the limited data samples to generate more samples and enrich the training experience. Embedding based techniques transform the data into a low-dimensional space and then cluster it into different groups using a specific distance function. Optimization based techniques use a meta-optimizer that learns/generalizes from the overall learning process. Semantics based techniques use the semantic information of the data samples, along with the samples themselves, to better generalize to and predict the novel categories.

The remainder of the paper is structured as follows. In subsection 2.1, we discuss data augmentation based techniques, in which the training data is augmented to generate more samples for training the neural network model. In subsection 2.2, we discuss techniques whose general approach is to convert high-dimensional data into a lower-dimensional embedding and then use various distance or metric functions to compare the embeddings of the initial training tasks with those of the novel tasks. In subsection 2.3, we discuss techniques that use a meta-optimizer, which learns from the training set and generalizes well to novel tasks. In subsection 2.4, we discuss techniques that extract semantic information from the training set to generalize to novel tasks. In section 3, we compare the performance of the various techniques discussed in the paper on two benchmark datasets: Omniglot and miniImagenet.

2 FEW-SHOT META LEARNING

In this section, we describe the seminal work in data augmentation, embedding, optimization and semantics based learning approaches in the few-shot meta-learning domain, as highlighted in Figure 1.

2.1 Data Augmentation Based Techniques

Data-augmentation based techniques are quite popular in the supervised learning domain. Traditional techniques like scaling, cropping and rotating (clockwise and anti-clockwise) are used to expand the size of the training dataset, with the goal of making the model generalize better and avoid overfitting. In the meta-learning space, the idea is to expand the prior knowledge by augmenting the few available samples and generating more diverse samples to train the model.
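
The traditional augmentations listed above can be sketched on images represented as nested lists of pixel values (an assumption made to avoid any imaging library). Each variant would be added to the training set with the label of the original image; the crop window and scale factor below are arbitrary choices.

```python
def rotate_cw(img):
    """Rotate a 2-D list of pixels 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def rotate_ccw(img):
    """Rotate a 2-D list of pixels 90 degrees anti-clockwise."""
    return [list(row) for row in zip(*img)][::-1]

def crop(img, top, left, height, width):
    """Cut out a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]

def scale_up(img, factor):
    """Integer up-scaling by nearest-neighbour pixel replication."""
    return [[v for v in row for _ in range(factor)] for row in img for _ in range(factor)]

def augment(img):
    """Return the original image plus four classic augmented variants."""
    return [img, rotate_cw(img), rotate_ccw(img), crop(img, 0, 0, 2, 2), scale_up(img, 2)]

img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
variants = augment(img)
```

One labelled image becomes five training samples here, which illustrates how augmentation enlarges a small dataset without collecting new labels.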

2.1.1 LaSO: Label-Set Operations networks

The work by Alfassy et al. [71] describes a technique for handling samples with more than one label in few-shot classification settings. Their novel idea is to combine the multiple labels of samples in the feature space. Each synthesized feature vector corresponds to the label set obtained by applying a particular set operation to the label sets of the input data samples. Using their method, feature vectors are generated whose labels are the intersection, union, or set difference of the labels present in two different input samples.

Alfassy et al.在[71]中所做的工作描述了一种处理具有多个标签的样本的技术,用于小样本分类设置。他们的新想法是在特征空间中组合多个样本标签。生成的特征向量将由标签组成,这些标签在各自数据样本标签对的标签集上经历了一组特定的操作。使用他们的方法,数据样本由两个不同输入数据样本中的标签生成的交集、并集或集差组成。

As illustrated in Figure 2, the inputs to the system are two different images, x and y, with their respective sets of multiple labels L(x) and L(y). Their representations in the feature space F are denoted F_x and F_y; an Inception model [36] is used as the backbone B to generate this feature space. The concatenated feature vectors F_x and F_y are passed as input to three sub-modules of the network, M_int, M_uni and M_sub, which synthesize feature vectors in the same space. The original feature vectors, along with the generated outputs of the three sub-modules, are passed to the classifier. Even though a set of pre-defined labels is used, the model can also generalize to labels that are not present in this set, which it is forced to learn implicitly, only by observing L(x) and L(y). Code: https://github.com/leokarlin/LaSO [71]
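
A minimal sketch of the label side of LaSO: for two input samples, it computes the label sets that the outputs of M_int, M_uni and M_sub are meant to carry. The three sub-modules themselves are neural networks in [71] and are not implemented here. The dictionary keys and the multi-hot encoding over a fixed vocabulary are illustrative assumptions, chosen because a multi-label classifier is typically trained against such 0/1 vectors.

```python
def laso_targets(labels_x, labels_y):
    """Label sets that the synthesized feature vectors are trained to carry."""
    lx, ly = set(labels_x), set(labels_y)
    return {
        "M_int": lx & ly,  # intersection
        "M_uni": lx | ly,  # union
        "M_sub": lx - ly,  # set difference L(x) \ L(y)
    }

def multi_hot(label_set, vocabulary):
    """Encode a label set as a 0/1 vector over a fixed label vocabulary."""
    return [1 if label in label_set else 0 for label in vocabulary]

vocab = ["person", "dog", "car", "tree"]
targets = laso_targets({"person", "dog"}, {"dog", "car"})
```

For example, `multi_hot(targets["M_sub"], vocab)` encodes the labels of x that y lacks, which is the supervision the subtraction sub-module's output would be checked against.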

Fig. 2: The inputs to the system are two different images, x and y, with their respective sets of multiple labels L(x) and L(y). Their representations in the feature space F are denoted F_x and F_y. The original feature vectors, along with the generated outputs of the three sub-modules, are passed to the classifier. Even though a set of pre-defined labels is used, the model can also generalize to labels that are not present in this set, which it is forced to learn implicitly, only by observing L(x) and L(y). Image Source: [71]

2.1.2 Recognition by Shrinking and Hallucinating Features

In the work by Hariharan and Girshick [69], the authors propose a low-shot learning benchmark derived from the ImageNet-1k dataset [102]. In the initial training step of the benchmark, the learner is trained on D_train; during the few-shot training step, the model should be able to generalize from the feature space learned on D_train to correctly predict the novel classes in D_test. The benchmark provides a sanity check (by comparing accuracy) of the model's ability to learn during training on D_train and testing on D_test. Code: https://github.com/facebookresearch/low-shot-shrink-hallucinate [69]

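They showed that hallucinating additional feature vectors for D_train lets the model train on a larger number of examples, which improves its ability to generalize to novel classes. The hallucination is done by a generator function G, which consists of three fully connected (MLP) layers. To help the model learn representations that generalize better to novel classes, the authors also introduce a new loss function, the squared gradient magnitude (SGM) loss, which is applied while learning the feature representation on the base classes. For a linear softmax classifier with weights W on top of features φ(x), the loss is given by:

$$\mathcal{L}^{SGM}_{D}(W) = \frac{1}{|D|}\sum_{(x,y)\in D} \alpha\big(W,\phi(x),y\big)\,\lVert\phi(x)\rVert^{2},\qquad \alpha\big(W,\phi(x),y\big) = \sum_{k}\big(p_k(W,\phi(x)) - \delta_{yk}\big)^{2}$$

where p_k(W, φ(x)) is the softmax probability of class k and δ_yk is 1 if k = y and 0 otherwise. Each summand is the squared norm of the gradient of the cross-entropy loss with respect to W for that sample.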
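
A minimal sketch of the SGM loss of [69], assuming a bias-free linear softmax classifier with one weight vector per class applied to given feature vectors φ(x): for each sample, α = Σ_k (p_k − δ_yk)² is multiplied by ‖φ(x)‖², and the result is averaged over the dataset. The feature extractor and the generator G are not implemented; the function and variable names are illustrative.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sgm_loss(W, dataset):
    """Squared gradient magnitude loss for a bias-free linear softmax classifier.

    W: one weight vector per class; dataset: (phi_x, y) pairs, where phi_x is
    a feature vector and y an integer class index.
    """
    total = 0.0
    for phi, y in dataset:
        p = softmax([sum(w_i * f_i for w_i, f_i in zip(w, phi)) for w in W])
        alpha = sum((p_k - (1.0 if k == y else 0.0)) ** 2 for k, p_k in enumerate(p))
        total += alpha * sum(f * f for f in phi)  # alpha * ||phi(x)||^2
    return total / len(dataset)

W = [[0.0, 0.0], [0.0, 0.0]]
loss = sgm_loss(W, [([1.0, 1.0], 0)])
```

With all-zero weights the two classes get probability 0.5 each, so α = 0.5 and ‖φ(x)‖² = 2, giving a loss of 1.0. A classifier that is already confident on a sample contributes almost nothing, so the penalty concentrates on samples whose gradients would still be large.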

2.1.3 Learning via Saliency-guided Hallucination

The work by Zhang et al. [72] is based on a data hallucination technique in which a saliency network [103], [104] is used to separate the foreground and background information of an image. A two-stream network then generates hallucinated data points in the feature space from this foreground and background information. Their model takes advantage of the generated saliency maps to improve few-shot performance.

Their model consists of three modules: a saliency network; a network that encodes and mixes the foreground and background information (FEMN); and a similarity network. The saliency network generates saliency maps for the samples of the support set S. The FEMN combines the foreground and background information. The similarity network determines whether the query image matches the samples from the support set. Data hallucination is performed by summing foreground and background information. Code: https://github.com/HongguangZhang/SalNet-cvpr19-master [72]
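
A rough sketch of saliency-guided hallucination, under simplifying assumptions: features are flat lists, the foreground and background parts are obtained by element-wise masking with a saliency map in [0, 1] (in [72] this is done by a learned two-stream FEMN), and the foreground of each support sample is paired with the background of every other sample of the same class. The pairing scheme and function names are illustrative, not the authors' exact procedure.

```python
def split_by_saliency(feature, saliency):
    """Split a feature into foreground/background parts using a saliency map in [0, 1]."""
    fg = [f * s for f, s in zip(feature, saliency)]
    bg = [f * (1.0 - s) for f, s in zip(feature, saliency)]
    return fg, bg

def hallucinate(support):
    """Sum each foreground with every other background from the same class.

    support: list of (feature, saliency) pairs belonging to one class.
    """
    parts = [split_by_saliency(f, s) for f, s in support]
    out = []
    for i, (fg, _) in enumerate(parts):
        for j, (_, bg) in enumerate(parts):
            if i != j:
                out.append([a + b for a, b in zip(fg, bg)])  # foreground + background
    return out

support = [([1.0, 2.0, 3.0], [1.0, 0.0, 0.5]), ([4.0, 5.0, 6.0], [0.0, 1.0, 0.5])]
hallucinated = hallucinate(support)
```

With m support samples of a class, this yields m(m − 1) extra data points, which is where the extra training signal in the few-shot regime comes from.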

2.1.4 Low-Shot Learning from Imaginary Data

The work by Wang et al. [70] is based on hallucinating additional D_train samples that help the classifier learn or generalize to novel tasks. Instead of using hallucination to generate more diverse data as in [69], [72], their goal is to generate hallucinated data related to the samples in D_train. In their approach, they introduce a hallucinator alongside the meta-learner to learn and generalize to novel tasks. The objective of the hallucinator model is to map the hallucinated data to the original samples in D_train. After being trained on the base D_train, the hallucinator produces an extended version of D_train. Code: https://github.com/facebookresearch/low-shot-shrink-hallucinate [70]
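
The data flow of hallucination-based augmentation can be sketched as follows. The `hallucinator` here is a hypothetical fixed affine mix of a seed example and Gaussian noise, standing in for the learned generator G(x, z) that [70] trains jointly with the meta-learner. Each hallucinated example inherits the label of its seed, and the returned list is the extended D_train. The mixing weights and noise distribution are assumptions.

```python
import random

def hallucinator(seed, noise, weights):
    """Hypothetical stand-in for a learned generator G(x, z): a fixed affine mix."""
    a, b = weights
    return [a * x + b * z for x, z in zip(seed, noise)]

def augment_training_set(train, n_gen, weights=(1.0, 0.1), rng=None):
    """Extend D_train with n_gen hallucinated examples per real example.

    Each hallucinated example keeps the label of the seed it was generated from.
    """
    rng = rng or random.Random(0)
    extended = list(train)
    for x, y in train:
        for _ in range(n_gen):
            z = [rng.gauss(0.0, 1.0) for _ in x]
            extended.append((hallucinator(x, z, weights), y))
    return extended

train = [([1.0, 2.0], "cat"), ([0.0, 1.0], "dog")]
extended = augment_training_set(train, n_gen=3)
```

In the actual method the generator's parameters are updated by the classification loss, so the hallucinated samples are shaped to be useful for the classifier rather than merely noisy copies, as they are in this fixed stand-in.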

2.1.5 A Maximum-Entropy Patch Sampler

The work by Chu et al. [73] is based on a “learned” form of data augmentation: they search over sequences of patches sampled from the image and perform classification using the features extracted from those patches. They claim that their method, with its positive and negative sampling rules and an improved reward function (based on maximum entropy reinforcement learning [73]), improves performance in the n-shot learning paradigm.

Their model consists of five modules:

• Feature extractor: a CNN-based model that, at each time step, extracts feature embeddings from the patches generated by the maximum entropy sampler.

• State encoder: aggregates the features produced by the feature extractor; for this, they use an RNN-based GRU [105] model.

• Maximum entropy sampler: generates patches from the input data sample; its design is inspired by Soft Q-Learning [106].

• Action context encoder: takes into account all the global information generated by the maximum entropy sampler together with the feature extractor module.

• Classifier: classifies the input data sample to produce its predicted label.
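
The core of a maximum-entropy sampler can be sketched with the Boltzmann policy used in soft Q-learning [106], π(a|s) ∝ exp(Q(s, a)/α), where the temperature α trades off exploiting high-value patches against keeping the sampling distribution high-entropy. In this sketch the Q-values are hard-coded numbers for hypothetical candidate patches; in [73] they would come from learned networks conditioned on the encoded state.

```python
import math
import random

def soft_policy(q_values, alpha):
    """Boltzmann policy pi(a|s) proportional to exp(Q(s, a) / alpha)."""
    m = max(q / alpha for q in q_values)  # subtract the max for numerical stability
    exps = [math.exp(q / alpha - m) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p_i * math.log(p_i) for p_i in p if p_i > 0)

def sample_patch(q_values, alpha, rng):
    """Pick the index of the next patch to look at."""
    p = soft_policy(q_values, alpha)
    return rng.choices(range(len(p)), weights=p, k=1)[0]

rng = random.Random(0)
q = [1.0, 2.0, 3.0]  # hypothetical Q-values for three candidate patches
patch = sample_patch(q, alpha=0.5, rng=rng)
```

A large α makes the policy nearly uniform (high entropy, more exploration of patches), while a small α makes it nearly greedy on the highest Q-value.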

2.1.6 Image Deformation Meta-Networks

The work by Chen et al. [74] generates additional training samples by combining a meta-learner module with an image deformation module. These modules are trained end-to-end, and the resulting model significantly outperformed the then state-of-the-art techniques. The meta-learning module learns from a group of k-way, n-shot tasks to classify samples from D_train, and is then evaluated on samples from D_test. The deformation module adaptively fuses images from the support set S to generate synthesized deformed images, which are then mapped through an embedding sub-module to the feature space learned from D_train to perform one-shot classification. Code: https://github.com/tankche1/IDeMe-Net
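
A minimal sketch of patch-wise image fusion, assuming (as we understand IDeMe-Net [74]) that the deformation sub-network predicts one weight per cell of a 3 × 3 grid and linearly combines a probe image with a second (gallery) image cell by cell. Here the weights are given rather than predicted, images are nested lists of equal size, and the function name is hypothetical.

```python
def deform(probe, gallery, weights, grid=3):
    """Fuse two equally sized images patch-wise: w * probe + (1 - w) * gallery.

    weights: grid * grid values in [0, 1], one per patch, in row-major order.
    """
    h, w = len(probe), len(probe[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Index of the grid cell that contains pixel (i, j).
            k = (i * grid // h) * grid + (j * grid // w)
            out[i][j] = weights[k] * probe[i][j] + (1 - weights[k]) * gallery[i][j]
    return out

probe = [[1.0] * 3 for _ in range(3)]
gallery = [[0.0] * 3 for _ in range(3)]
fused = deform(probe, gallery, [1, 0, 1, 0, 1, 0, 1, 0, 1])
```

The deformed image keeps the probe's label, so each weight vector yields one more labelled training sample; learning the weights end-to-end is what lets the meta-learner decide which patches are safe to borrow.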

2.2 Embedding-Based Techniques

In this subsection, we discuss model-based techniques, where the goal is to find the best possible hypothesis in the hypothesis space, one that generalizes well to a variety of tasks. In embedding-based techniques, also known as metric-based techniques, the data is transformed into a lower-dimensional representation and then clustered and compared using a specific distance/metric function.

2.2.1 Relation Network

The work by Sung et al. [78] proposes a flexible, simple yet effective network called the Relation Network (RN) for n-shot learning settings. RN is based on episodic training [75], where each episode is a randomly selected task from D_train with k labelled samples from each class.

The relation network works in two steps. First, the embedding module transforms the samples from D_train and the query set into a low-dimensional embedding space. Second, a relation module compares these embeddings and determines whether the query image matches any of the output categories. The embedding module consists of four convolutional blocks, each with 64 convolutional filters of kernel size 3 × 3, followed by batch normalization and a ReLU activation; the first two blocks also apply max-pooling with a 2 × 2 window. The relation module is a learned comparison module that outputs a relation score between 0 and 1 for each query-class pair; it is trained with a mean squared error (MSE) loss that regresses the score to 1 for matching pairs and to 0 otherwise. Code: https://github.com/floodsung/LearningToCompare_FSL
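
The training objective of the relation module can be sketched independently of the networks: given a matrix of relation scores (one row per query, one column per support class), the loss is the MSE against one-hot targets, and prediction picks the class with the highest score. The scores below are made-up numbers standing in for the output of the learned relation module; the function names are illustrative.

```python
def relation_targets(query_labels, class_labels):
    """One-hot targets: 1 where the query's class matches the support class, else 0."""
    return [[1.0 if q == c else 0.0 for c in class_labels] for q in query_labels]

def relation_mse(scores, query_labels, class_labels):
    """Mean squared error between relation scores (in [0, 1]) and one-hot targets."""
    targets = relation_targets(query_labels, class_labels)
    errs = [(s - t) ** 2 for row_s, row_t in zip(scores, targets) for s, t in zip(row_s, row_t)]
    return sum(errs) / len(errs)

def predict_classes(scores, class_labels):
    """Assign each query to the support class with the highest relation score."""
    return [class_labels[max(range(len(row)), key=row.__getitem__)] for row in scores]

classes = ["a", "b", "c"]
scores = [[0.9, 0.1, 0.2], [0.3, 0.8, 0.1]]  # hypothetical relation-module outputs
loss = relation_mse(scores, ["a", "b"], classes)
```

Treating the score as a regression target rather than a softmax probability is the design choice the paragraph describes: each query-class pair is judged on its own, so scores in a row need not sum to 1.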

2.2.2 Prototypical Network

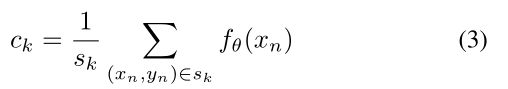

The work by Snell et al. [77] describes a novel network, state-of-the-art at the time, called the Prototypical Network, which targets few-shot and zero-shot applications. The network computes an M-dimensional prototype representation of each class using an embedding function $f_\theta: \mathbb{R}^D \to \mathbb{R}^M$, where θ denotes the learnable parameters. The prototype $c_k \in \mathbb{R}^M$ of class k is the mean vector of the embedded support points belonging to that class:

$$c_k = \frac{1}{|S_k|}\sum_{(x_i, y_i)\in S_k} f_\theta(x_i)$$

where S_k is the set of support samples of class k and |S_k| is the number of samples in class k.

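The authors primarily use the squared Euclidean distance $d: \mathbb{R}^M \times \mathbb{R}^M \to [0, +\infty)$ in their prototypical network. For a query point x, the prototypical network produces a distribution over all classes by applying a softmax [107] to the negative distances between the query embedding and the prototypes in the embedding space:

$$p_\theta(y = k \mid x) = \frac{\exp\big(-d(f_\theta(x), c_k)\big)}{\sum_{k'}\exp\big(-d(f_\theta(x), c_{k'})\big)}$$

In the zero-shot setting, the prototypes are instead computed from the embedded class meta-data v_k. Code: https://github.com/jakesnell/prototypical-networks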
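
A minimal sketch of the few-shot prototypical classifier: prototypes are per-class means of the support embeddings, and a query is classified by a softmax over negative squared Euclidean distances to them. The embeddings f_θ(x) are assumed to be precomputed (the sketch uses raw 2-D vectors in their place), and the zero-shot variant based on v_k is not shown.

```python
import math

def sq_euclidean(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def prototypes(support):
    """c_k = mean of the embedded support points of class k.

    support: list of (embedding, label) pairs.
    """
    sums, counts = {}, {}
    for z, y in support:
        acc = sums.setdefault(y, [0.0] * len(z))
        for i, v in enumerate(z):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

def classify(query, protos):
    """Softmax over negative squared Euclidean distances to the prototypes."""
    labels = list(protos)
    logits = [-sq_euclidean(query, protos[k]) for k in labels]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {k: e / total for k, e in zip(labels, exps)}

support = [([0.0, 0.0], "a"), ([2.0, 0.0], "a"), ([10.0, 10.0], "b")]
protos = prototypes(support)
probs = classify([1.0, 0.5], protos)
```

Because the only learned component is the embedding, adding a new class at test time only requires averaging its few support embeddings; no classifier weights need to be retrained.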

Fig. 3: The prototypical network for few-shot and zero-shot applications, where c_k are the class prototypes in the few-shot setting and v_k is the class meta-data in the zero-shot setting. The embedding of a query point x̂ is classified by a softmax over its negative squared Euclidean distances to the class prototypes. Image Source: [77]

2.2.3 Learning with localization in realistic settings 现实环境下的定位学习

Wertheimer et al. [83] propose an incremental improvement on top of prototypical networks [77] to target realistic open-world images, which involve thousands of different classes with subtle variations. They target three problems: heavy class imbalance (heavy-tailed class distributions), cluttered images and fine-grained recognition. Their work builds on prototypical networks and significantly increases performance without adding much complexity to the overall network. To tackle class imbalance, they propose a new training approach based on leave-one-out cross validation. To tackle clutter, they use a learner architecture that can efficiently localize objects before classifying them. To tackle the fine-grained problem and differentiate subtleties within an object, they use bilinear pooling [108], [109] to increase the representation power of the learner model. Using the combination of these three improvements, Wertheimer et al. were able to double the accuracy of prototypical networks on the meta-iNat benchmark.

Wertheimer等人[83]在原型网络[77]的基础上提出了一种增量改进,以处理现实开放世界中的图像,这些图像涉及数千个具有细微差异的类别。他们针对三个问题:严重的类别不平衡(长尾分布)、杂乱场景以及细粒度识别。他们的工作基于原型网络,在几乎不增加网络复杂性的情况下显著提高了性能。为解决类别不平衡问题,他们提出了一种基于留一法(leave-one-out)交叉验证的新训练方法。为解决杂乱问题,他们使用了一种能够在分类之前有效定位目标的学习器结构。为解决细粒度问题并区分目标中的细微差别,他们使用双线性池化[108],[109]来增强学习器模型的表示能力。结合这三种改进技术,Wertheimer等人在meta-iNat基准上将原型网络的精度提高了一倍。

Cross Validation using Leave-one-Out Approach: For a prototypical network to recognise novel rare classes, it needs to be trained on a relatively large number of images from the common classes as well as on the few reference images from the rare classes. To achieve this, either the batch size has to be increased or the number of reference categories has to be reduced during training. Increasing the batch size is not always an option due to computational limits, whereas reducing the number of reference images can make the model learn poorly and distort the center (prototype) of each class. To overcome this, they use leave-one-out cross validation.

使用Leave-one-Out方法进行交叉验证:为了让原型网络能够识别新的稀有类别,需要同时在属于常见类别的较多图像和属于稀有类别的少数参考图像上进行训练。为此,需要在训练期间增加批大小或减少参考类别的数量。由于计算限制,增加批大小并不总是可行,而减少参考图像的数量可能导致模型学习效果不佳,并使每个类别的中心(原型)发生偏移。为了克服这一点,他们使用了基于leave-one-out的交叉验证。

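Let $C$ be the set of classes, $s_k$ the number of samples in class $k$, and $v_{n,k}$ the feature vector of the $n$-th sample in class $k$. Each sample is classified against prototypes built from all the other samples, i.e., the prototype of its own class leaves the sample out. The prototypes are generated in the following manner:

$$c_k = \frac{1}{s_k}\sum_{n=1}^{s_k} v_{n,k}, \qquad c_k^{(-n)} = \frac{s_k c_k - v_{n,k}}{s_k - 1}$$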

设C为全部类别的集合,$s_k$为类别k中的样本数,$v_{n,k}$是第k个类别中第n个样本的特征向量。原型按留一法的方式生成:每个样本所属类别的原型由该类别中其余样本计算得到。
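A sketch of the leave-one-out prototypes, assuming the formulation described above: a sample's own class prototype is computed from the remaining samples of that class, while the other classes use all of their samples. The function name and the `None` convention for a class left without samples are illustrative assumptions.

```python
def leave_one_out_prototypes(features, labels, index, num_classes):
    """Prototypes used when classifying sample `index`.

    The sample is excluded from its own class mean; every other class
    uses all of its samples. A class left with no samples gets None.
    """
    dim = len(features[0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    counts = [0] * num_classes
    for i, (v, y) in enumerate(zip(features, labels)):
        if i == index:
            continue  # leave the query sample out
        counts[y] += 1
        for d in range(dim):
            sums[y][d] += v[d]
    return [
        [s / counts[k] for s in sums[k]] if counts[k] else None
        for k in range(num_classes)
    ]


features = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0], [12.0, 10.0]]
labels = [0, 0, 1, 1]
# Every sample in the batch acts as a query against all the others.
for i in range(len(features)):
    print(i, leave_one_out_prototypes(features, labels, i, num_classes=2))
```

Because every sample in a batch serves as a query, no extra reference images are needed, which is the motivation given above.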

Effective Localization: Distinguishing the relevant object in a cluttered image is highly difficult when the model is trained on only a few images and their labels. To address this issue, the authors propose to localize the object in both the reference image and the query image, which makes classification significantly better. They use two approaches to isolate the objects: unsupervised localization, where a category-agnostic localizer is learned on Dtrain; and few-shot localization, where the images from Dtrain are used to generate bounding boxes on Dtest.

有效定位:当仅使用少量图像及其标签进行训练时,从杂乱的图像中区分出相关目标非常困难。为了解决这个问题,作者提出在参考图像和查询图像中定位目标,这可以显著改善分类效果。他们使用了两种方法来分离目标:无监督定位,即在Dtrain上学习一个类别无关的定位器;小样本定位,即利用Dtrain中的图像在Dtest上生成边界框。

Both localization techniques follow the same procedure: a localizer sub-module classifies every location of the final 10 × 10 feature map of the model as ‘foreground’ or ‘background’. A softmax function is applied to these predictions, and an L2 distance is used on the resulting embeddings. Both localization procedures are trained end-to-end. The localizer is trained and used only to support classification.

两种定位技术的步骤相同:定位器子模块将模型最后一层10×10特征图中的每个位置分类为“前景”或“背景”。对这些预测应用softmax函数,并在得到的嵌入上使用L2距离。两种定位过程都以端到端方式训练。定位器的训练和使用仅服务于分类目的。

Bilinear pooling: Techniques like Fisher vectors [110] and others [111], [112], [113], [114] are used to enrich the feature space F and increase the fine-grained classification power of models. These techniques are applied to the fully-connected or classifier layers of a model, which increases the number of model parameters and its complexity. The bilinear pooling approach [109] used by Wertheimer et al. takes two feature maps, computes the outer product of their feature vectors at each pixel location (capturing their cross-covariance), and then average-pools the result over all locations. They claim that doing so adds no extra parameters to the network. Code: https://github.com/daviswer/fewshotlocal

双线性池化:Fisher向量[110]以及其他方法[111]、[112]、[113]、[114]被用于丰富特征空间F,并提高模型的细粒度分类能力。这些技术应用于模型的全连接层或分类器层,从而增加了模型参数及其复杂性。Wertheimer等人使用的双线性池化方法[109]以两个特征图为输入,在每个像素位置计算二者特征向量的外积(刻画它们之间的交叉协方差),然后在所有位置上进行平均池化。他们声称这样做不会向网络添加额外的参数。代码:https://github.com/daviswer/fewshotlocal
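The parameter-free bilinear pooling described above (outer product of two feature maps at each location, followed by average pooling) can be sketched as follows. The maps are given as lists of per-location feature vectors. Where the two maps come from inside the actual model is not specified here, so the inputs are hypothetical.

```python
def bilinear_pool(map_a, map_b):
    """Average over locations of the outer product a_l b_l^T.

    map_a and map_b are lists with one feature vector per spatial location
    (same number of locations). The result is a da x db matrix, and no
    learnable parameters are involved.
    """
    assert len(map_a) == len(map_b), "maps must cover the same locations"
    n = len(map_a)
    da, db = len(map_a[0]), len(map_b[0])
    pooled = [[0.0] * db for _ in range(da)]
    for a, b in zip(map_a, map_b):
        for i in range(da):
            for j in range(db):
                pooled[i][j] += a[i] * b[j] / n
    return pooled


# Two locations with 2-d features in each map.
print(bilinear_pool([[1.0, 0.0], [0.0, 1.0]], [[2.0, 3.0], [4.0, 5.0]]))
# [[1.0, 1.5], [2.0, 2.5]]
```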

2.2.4 Learning for Semi-Supervised Classification

In this work, Ren et al. [79] propose a novel semi-supervised extension of prototypical networks for scenarios in which unlabelled data samples are available in addition to the Dtrain samples, whose labels are used to generate prototypes. As shown in Figure 4, they consider two scenarios: one where the unlabelled samples belong to the same set of classes $c$ as the labelled samples; and another where the unlabelled samples belong to a different set of classes, called distractor classes. In the semi-supervised few-shot learning approach, Dtrain consists of a tuple (S, R), where (S) is the set of labelled samples and (R) is the set of unlabelled samples. (S) plays the role of the support set used in prototypical networks and consists of various images and their respective labels.

在这项工作中,Ren等人[79]提出了一种新颖的原型网络半监督扩展,用于处理除带标签的Dtrain样本(其标签用于生成原型)之外还存在未标记样本的场景。如图4所示,他们考虑了两种场景:一种是未标记样本与标记样本属于同一组类别c;另一种是未标记样本属于另一组类别,称为干扰类。在基于半监督的小样本学习方法中,Dtrain由元组(S, R)组成,其中(S)是标记样本集,(R)是未标记样本集。(S)相当于原型网络中使用的支持集,由各种图像及其各自的标签组成。

Fig. 4: Figure explaining the semi-supervised few-shot learning setup. (S) and (R) are the sets of labelled and unlabelled data samples respectively, and the goal is to use these samples to generalize well on the query set (Q). The green plus sign marks unlabelled images that belong to the classes in the training set, whereas the red minus sign marks distractor images that do not belong to any class in the training set. Source: [79] 说明半监督小样本学习设置的图。(S)和(R)分别是带标签和未带标签的数据样本集,目标是利用这些样本在查询集(Q)上实现良好的泛化。绿色加号表示属于训练集中类别的未标记图像,红色减号表示不属于训练集中任何类别的干扰图像。资料来源:[79]

The original prototypical network discussed in [77] can generate prototypes $c_k$ from the labelled set (S), but it cannot use the unlabelled set (R) to produce refined prototypes $\hat{c}_k$. The authors provide various techniques that start from the prototypes of the labelled set (S) and refine them using the unlabelled set (R). Once the refined prototypes $\hat{c}_k$ are generated, the model is trained with the same loss function as the vanilla prototypical network (refer Equation 3), with $\hat{c}_k$ in place of $c_k$. Each query is then classified based on the distance function and its proximity to the refined prototypes $\hat{c}_k$, using the average negative log probability (refer Figure 6). The refined prototypes, which take the samples from (R) into account, lead to more accurate classification.

[77]中讨论的原始原型网络能够由标记集(S)生成原型$c_k$,但无法利用未标记集(R)生成细化原型$\hat{c}_k$。作者提供了多种技术,从标记集(S)的原型出发,利用未标记集(R)对其进行细化。生成细化原型$\hat{c}_k$后,使用与普通原型网络相同的损失函数(参考等式3)训练模型,只是用$\hat{c}_k$代替$c_k$。之后,根据距离函数以及查询与细化原型$\hat{c}_k$的接近程度,使用平均负对数概率对每个查询进行分类(见图6)。考虑了(R)中样本的细化原型能够带来更准确的分类。

Fig. 6: Figure indicating the prototype clustering before and after refinement. Image Source: [79] 表示细化前后原型聚类的图。图片来源:[79]

Technique using soft k-means: In this approach, each prototype is treated as a cluster center, and the refinement process adjusts the cluster locations to better fit both the labelled and the unlabelled samples. First, the prototypes $c_k$ are computed from the labelled samples. Each unlabelled sample is then partially (softly) assigned to every cluster based on its distance to $c_k$. Finally, the refined prototypes $\hat{c}_k$ are obtained by incorporating the softly assigned unlabelled samples. This approach is used when the unlabelled images belong to one of the classes in the labelled set.

使用soft k-means的技术:在这种方法中,每个原型被视为一个聚类中心,细化过程调整聚类位置,以更好地拟合标记样本和未标记样本。首先,由标记样本计算原型$c_k$;然后,根据每个未标记样本到$c_k$的距离,将其部分地(软)分配给各个簇;最后,结合这些软分配的未标记样本得到细化原型$\hat{c}_k$。这种方法适用于未标记图像属于标记集中某个类别的情况。
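One soft k-means refinement step, as described above, can be sketched like this. Labelled samples count fully toward their own class, and each unlabelled sample contributes to every prototype in proportion to a softmax over negative squared distances. This is a sketch under the assumption that all points are already embedded, and it performs a single refinement step.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def soft_assign(point, centers):
    """Softmax over negative squared distances to each center."""
    logits = [-sq_dist(point, c) for c in centers]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def refine_prototypes(labelled, labels, unlabelled, prototypes):
    """One soft k-means step producing the refined prototypes c_hat_k."""
    k, dim = len(prototypes), len(prototypes[0])
    sums = [[0.0] * dim for _ in range(k)]
    weights = [0.0] * k
    for x, y in zip(labelled, labels):  # hard assignment for labelled data
        weights[y] += 1.0
        for d in range(dim):
            sums[y][d] += x[d]
    for x in unlabelled:  # soft assignment for unlabelled data
        for c, z in enumerate(soft_assign(x, prototypes)):
            weights[c] += z
            for d in range(dim):
                sums[c][d] += z * x[d]
    return [[s / weights[c] for s in sums[c]] for c in range(k)]


labelled = [[0.0, 0.0], [10.0, 0.0]]
labels = [0, 1]
prototypes = [[0.0, 0.0], [10.0, 0.0]]  # c_k computed from the labelled set
print(refine_prototypes(labelled, labels, [[2.0, 0.0]], prototypes))
```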

Technique using soft k-means with a distractor class: Plain soft k-means does not handle distractor classes, i.e., unlabelled samples in (R) that do not belong to any of the labelled classes. Such distractor images are harmful to the k-means approach because every unlabelled sample is assigned to the $c_k$ clusters and therefore pulls the refined prototypes $\hat{c}_k$ away from the true class centers. To overcome this, the authors add an additional cluster that captures the distractors and prevents them from polluting the $c_k$ clusters.

使用soft k-means和干扰类的技术:普通的soft k-means方法无法处理干扰类,即(R)中不属于任何标记类别的未标记样本。这些干扰图像对k-means方法是有害的,因为每个未标记样本都会被分配到$c_k$簇中,从而把细化原型$\hat{c}_k$拉离真实的类别中心。为了克服这个问题,作者建议添加一个额外的簇来捕获干扰样本,避免它们污染$c_k$簇。
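The extra distractor cluster can be sketched as an additional center at the origin with a wider radius, so that points far from every class prototype are absorbed by it. The origin-centred distractor cluster follows our reading of Ren et al. [79]. The fixed radius of 3.0 and the log-radius penalty used here are illustrative assumptions, since the distractor parameters are not given above.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign_with_distractor(point, prototypes, distractor_radius=3.0):
    """Soft assignment over the class clusters plus one distractor cluster.

    Class clusters have radius 1; the distractor cluster sits at the origin
    with a larger (hypothetical, fixed) radius. The last entry of the
    returned list is the probability of being a distractor.
    """
    centers = prototypes + [[0.0] * len(point)]
    radii = [1.0] * len(prototypes) + [distractor_radius]
    logits = [-sq_dist(point, c) / r ** 2 - math.log(r) for c, r in zip(centers, radii)]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


prototypes = [[5.0, 5.0], [-5.0, 5.0]]
print(assign_with_distractor([5.0, 5.0], prototypes))   # mostly class 0
print(assign_with_distractor([0.0, -1.0], prototypes))  # mostly distractor
```

When the prototypes are refined, only the class entries of these assignments would be used, so mass absorbed by the distractor cluster does not move the class prototypes.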

Technique using soft k-means and masking: The soft k-means with a distractor class technique works well when all distractor samples belong to a single class. This is too simplistic: in the real world, distractors are unlikely to come from just one class. To overcome this, the authors instead treat unlabelled examples that fall outside a certain region around each $c_k$ cluster as distractors for that cluster. This is achieved using a masking procedure. The high-level goal is to mask samples that are far from a prototype more strongly than those that are close to it. Code: https://github.com/xinzheli1217/learning-to-self-train

使用soft k-means和掩蔽的技术:带干扰类的soft k-means技术在所有干扰样本都属于同一个类别时效果较好。但这种假设过于简单,在现实世界中,干扰样本几乎不可能只来自一个类别。为了克服这一点,作者将落在各个$c_k$簇一定区域之外的未标记样本视为该簇的干扰样本。这是通过掩蔽过程实现的。高层次的目标是对远离原型的样本施加更强的掩蔽,对靠近原型的样本施加较弱的掩蔽。代码:https://github.com/xinzheli1217/learning-to-self-train
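The masking idea can be sketched as a refinement step in which each unlabelled sample's soft assignment is multiplied by a soft mask that shrinks as its normalised distance to the prototype grows. Following our reading of Ren et al. [79], distances are normalised per cluster and the mask is a sigmoid. The threshold `beta` and slope `gamma` are fixed hypothetical values here, not learned ones.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def masked_refine(labelled, labels, unlabelled, prototypes, beta=1.0, gamma=5.0):
    """Soft k-means refinement where each unlabelled contribution is masked.

    beta (distance threshold) and gamma (mask slope) are fixed hypothetical
    values in this sketch.
    """
    k, dim = len(prototypes), len(prototypes[0])
    dists = [[sq_dist(x, c) for c in prototypes] for x in unlabelled]
    # Normalise each cluster's distances by their mean over the unlabelled set.
    means = [sum(row[c] for row in dists) / len(dists) + 1e-12 for c in range(k)]
    sums = [[0.0] * dim for _ in range(k)]
    weights = [0.0] * k
    for x, y in zip(labelled, labels):  # labelled samples: full weight
        weights[y] += 1.0
        for d in range(dim):
            sums[y][d] += x[d]
    for x, row in zip(unlabelled, dists):
        best = min(row)
        exps = [math.exp(best - dist) for dist in row]
        total = sum(exps)
        for c in range(k):
            z = exps[c] / total  # soft assignment to cluster c
            # mask = sigmoid(-gamma * (normalised distance - beta)):
            # close to 1 near c_k, close to 0 far away from it.
            exponent = min(gamma * (row[c] / means[c] - beta), 700.0)
            mask = 1.0 / (1.0 + math.exp(exponent))
            weights[c] += z * mask
            for d in range(dim):
                sums[c][d] += z * mask * x[d]
    return [[s / weights[c] for s in sums[c]] for c in range(k)]


labelled = [[0.0, 0.0], [10.0, 0.0]]
labels = [0, 1]
prototypes = [[0.0, 0.0], [10.0, 0.0]]
unlabelled = [[1.0, 0.0], [0.0, 30.0]]  # the second point is a far-away distractor
print(masked_refine(labelled, labels, unlabelled, prototypes))
```

Without the mask, the far-away point would pull the class-0 prototype to a height of 10; with it, the prototype stays close to the labelled sample and the nearby unlabelled one.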

2.2.5 Transferable Prototypical Networks

The work by Pan et al. [82] remodels the vanilla prototypical network into a Transferable Prototypical Network (TPN), which targets the scenario of unlabelled target data by jointly bridging the domain gap. The classifiers are constructed from unlabelled target data together with labelled source data. The TPN classifiers first learn from the source data and then directly predict pseudo labels for the unlabelled target data. This yields separate prototypical-network classifiers, such as source-only and target-only ones. TPN is trained to reduce the discrepancy at both the sample level and the class level while predicting the correct sample class. Matching the prototypes generated for each class across domains reduces the class-level discrepancy. Enforcing consistent score distributions over classes for each sample across domains reduces the sample-level discrepancy.

Pan等人[82]的工作将普通原型网络改造为可迁移原型网络(TPN),该网络通过联合弥合域间差异来处理目标数据无标签的场景。分类器由无标签的目标数据以及带标签的源数据共同构建。TPN分类器首先从源数据中学习,然后直接预测无标签目标数据的伪标签。由此得到多个基于原型网络的分类器,例如仅源分类器和仅目标分类器。TPN的训练旨在预测正确样本类别的同时,减小样本级别和类别级别的差异。匹配各个域中为每个类别生成的原型可以减小类别级别的差异;约束每个样本在不同域中的类别得分分布保持一致,则可以减小样本级别的差异。

As illustrated in Figure 5, the TPN model first assigns a ‘pseudo’ label to each target sample by matching it to the nearest prototype computed from the source data samples. Prototypes are then generated from source-only, target-only and source-target samples. Through general-purpose adaptation, the prototypes of each class computed from the different domains are pushed close to each other in the embedding space. At the same time, TPN aligns the score distributions that the prototypes of the different domains produce for each sample; this alignment is the task-specific adaptation. The entire TPN network is trained end-to-end by minimizing the classification loss on the source data together with the general-purpose and task-specific adaptation terms.

如图5所示,TPN模型首先为每个目标样本分配一个“伪”标签,方法是将其与源数据样本计算出的最近原型进行匹配。然后,分别基于仅源、仅目标和源-目标样本生成原型。通过通用自适应,各个域中为同一类别生成的原型在嵌入空间中被拉近。同时,TPN对齐不同域的原型对每个样本产生的得分分布,这一对齐即特定任务的自适应。整个TPN网络通过最小化源数据上的分类损失以及通用和特定任务的自适应项进行端到端训练。

Fig. 5: The difference between TPN and previous unsupervised domain adaptation models such as MMD [115] and the domain discriminator [116]. Compared to these techniques, the Transferable Prototypical Network (TPN) targets the scenario of unlabelled data samples by jointly bridging the domain gap, and its classifiers are constructed with unlabelled target data and labelled source data. Image Source: [82] TPN与MMD[115]和域鉴别器[116]等以往无监督域自适应模型之间的差异。与这些技术相比,可迁移原型网络(Transferable Prototypical Network,TPN)通过联合弥合域间差异来处理未标记数据样本的场景,其分类器由未标记的目标数据和标记的源数据构建。图片来源:[82]
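The pseudo-labelling and prototype-matching steps described above can be sketched as follows. Target samples receive the label of the nearest source prototype. Source-only, target-only and source-target prototypes are then computed per class, and their pairwise squared distances are summed as a class-level discrepancy. This is a simplified sketch: the plain squared distance stands in for TPN's actual adaptation terms, and the function names are hypothetical.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors):
    """Coordinate-wise mean, or None for an empty list."""
    if not vectors:
        return None
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def class_prototypes(points, labels, num_classes):
    return [mean([x for x, y in zip(points, labels) if y == k]) for k in range(num_classes)]


def tpn_pseudo_labels_and_discrepancy(source, source_labels, target, num_classes):
    src = class_prototypes(source, source_labels, num_classes)
    # Pseudo label = index of the nearest source prototype.
    pseudo = [
        min((k for k in range(num_classes) if src[k] is not None),
            key=lambda k: sq_dist(x, src[k]))
        for x in target
    ]
    tgt = class_prototypes(target, pseudo, num_classes)
    joint = class_prototypes(source + target, source_labels + pseudo, num_classes)
    discrepancy = 0.0
    for s, t, j in zip(src, tgt, joint):
        if s is None or t is None:
            continue  # class missing in one of the domains
        discrepancy += sq_dist(s, t) + sq_dist(s, j) + sq_dist(t, j)
    return pseudo, discrepancy


source = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
source_labels = [0, 0, 1, 1]
target = [[1.0, 0.0], [6.0, 5.0]]  # shifted target-domain samples
print(tpn_pseudo_labels_and_discrepancy(source, source_labels, target, num_classes=2))
```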

2.2.6 Matching Network 匹配网络

The work by Vinyals et al. [75] is inspired by metric learning techniques [117], [118], [119], [120], memory networks [121], [122], pointer networks [123] and augmented neural networks [124]. The novelty of matching networks is two-fold: first, a novel training method tailored for one-shot learning applications; second, a novel network called the matching network, which is based on the attention mechanism [125], [126]. Code: https://github.com/AntreasAntoniou/MatchingNetworks

Vinyals等人[75]的工作受到度量学习技术[117]、[118]、[119]、[120]、记忆网络[121]、[122]、指针网络[123]和增强神经网络[124]的启发。匹配网络的新颖性有两个方面:第一,提出了一种专为单样本学习应用设计的新颖训练方法;第二,提出了一种基于注意力机制[125],[126]的新型网络,称为匹配网络。代码:https://github.com/AntreasAntoniou/MatchingNetworks

In matching networks, a fully differentiable attention mechanism is used to read from and/or write to an external memory, which stores information or knowledge pertinent to the task at hand. The essence of matching networks is that, without any modification to the network, they can generate labels for the data samples in Dtest. The matching network maps a support set $S = \{(x_i, y_i)\}_{i=1}^{k}$ of data samples and their labels to a classifier $c_S(x')$, which defines a probability distribution over the possible output categories $y'$ given an input $x'$ from Dtest. When $S$ is mapped to $c_S(x')$, the probability is given by:

$$P(y' \mid x', S) = \sum_{i=1}^{k} a(x', x_i)\, y_i$$

在匹配网络中,使用完全可微的注意力机制对外部存储器进行读取和/或写入。外部存储器存储与当前任务相关的重要信息或知识。匹配网络的本质是,在不修改网络的情况下,它可以为Dtest中的数据样本生成标签。匹配网络将由数据样本及其标签组成的支持集 $S = \{(x_i, y_i)\}_{i=1}^{k}$ 映射到分类器 $c_S(x')$,该分类器在给定来自Dtest的输入 $x'$ 时,定义可能输出类别 $y'$ 上的概率分布。当S映射到 $c_S(x')$ 时,概率由注意力机制a对支持集标签加权求和得到。


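where $a$ is the attention mechanism, typically a softmax over the cosine similarities between the embedded $x'$ and each $x_i$. When a new support set $S'$ from Dtest is provided to the one-shot learning model, the output class is therefore predicted as:

$$\hat{y} = \arg\max_{y'} P(y' \mid x', S')$$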

其中,a是注意力机制,通常取嵌入后的 $x'$ 与各个 $x_i$ 之间余弦相似度的softmax。因此,当从Dtest向单样本学习模型提供一个新的支持集 $S'$ 时,输出类别 $\hat{y}$ 取使 $P(y' \mid x', S')$ 最大的类别。
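The attention-based prediction of matching networks can be sketched as below. The sketch assumes the embedding functions are the identity (no full-context embeddings) and takes $a$ to be a softmax over cosine similarities; both are simplifying assumptions for illustration.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def matching_predict(query, support, support_labels, num_classes):
    """P(y|x, S) = sum_i a(x, x_i) y_i with a = softmax of cosine similarities.

    Because each y_i is one-hot, adding the attention weight of x_i to the
    entry of its class is the same as summing a(x, x_i) * y_i.
    """
    sims = [cosine(query, x) for x in support]
    m = max(sims)
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    probs = [0.0] * num_classes
    for e, y in zip(exps, support_labels):
        probs[y] += e / total
    prediction = max(range(num_classes), key=lambda k: probs[k])
    return probs, prediction


support = [[1.0, 0.0], [0.0, 1.0]]
probs, pred = matching_predict([0.9, 0.1], support, [0, 1], num_classes=2)
print(probs, pred)  # pred is 0
```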

2.2.7 Task dependent adaptive metric learning 任务相关自适应度量学习

The work by Oreshkin et al. [88] focuses on a novel metric scaling technique that improves the performance of few-shot applications. Metric learning tries to learn a suitable distance function or similarity measure (e.g., cosine or Euclidean), as in the prototypical network of Snell et al. [77] and the matching network of Vinyals et al. [75] discussed above; the approach is loosely based on the work of Perez et al. [127], [128], [129]. They claim that much of the performance difference between few-shot techniques can be attributed to how the metric is scaled inside the softmax. They therefore propose a learnable scaling parameter that lets the model learn the best scaling for its metric. They also propose task conditioning, in which the embeddings are produced by a function that varies with the task instead of by a single general embedding function. Code: https://github.com/ElementAI/TADAM

Oreshkin等人[88]的工作集中于一种新的度量缩放技术,该技术提高了小样本应用的性能。度量学习试图学习一个合适的距离函数或相似性度量(例如余弦或欧几里得距离),如上文Snell等人[77]的原型网络和Vinyals等人[75]的匹配网络所示,该方法大致基于Perez等人在[127]、[128]、[129]中的工作。他们声称,小样本技术之间的性能差异在很大程度上取决于softmax中度量的缩放方式,因此提出了一个可学习的缩放参数,使模型能够为其度量学到最佳的缩放。他们还提出使用任务条件化,即嵌入不是由一个通用的嵌入函数生成,而是由随任务变化的函数生成。代码:https://github.com/ElementAI/TADAM
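The effect of a metric scaling parameter can be illustrated with a softmax over negatively scaled distances, $p(y = k \mid x) \propto \exp(-\alpha\, d(f(x), c_k))$. In this sketch $\alpha$ is a fixed number passed in by hand, whereas in the described approach it is learned. Task conditioning is not shown.

```python
import math


def scaled_softmax(distances, alpha):
    """Class probabilities from distances, with the metric scaled by alpha."""
    logits = [-alpha * d for d in distances]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


distances = [1.0, 2.0]  # distances from one query to two class prototypes
for alpha in (0.1, 1.0, 10.0):
    print(alpha, scaled_softmax(distances, alpha))
# A small alpha gives nearly uniform probabilities; a large alpha approaches
# a hard nearest-prototype decision.
```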

2.2.8 Representative-based metric learning

The work by Karlinsky et al. [81] introduces an effective approach for few-shot object classification and detection based on Distance Metric Learning (DML). Training is end-to-end: the network jointly learns the network parameters, the embedding space, the feature space and the representative vectors. In their work, every class is represented by a mixture model with multiple modes, and the center of each mode is called a representative vector. Each class therefore has its own set of representative vectors. Code: https://github.com/jshtok/RepMet

Karlinsky等人在[81]中介绍了一种基于距离度量学习(DML)的小样本目标分类和检测的有效方法。他们以端到端的方式进行训练,网络同时学习网络参数、嵌入空间、特征空间和代表向量。在他们的工作中,每个类别都由一个具有多个模式的混合模型表示,每个模式的中心称为代表向量,因此每个类别都有各自的一组代表向量。代码:https://github.com/jshtok/RepMet
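Class scoring with several representatives per class can be sketched as follows. The sketch assumes, following our reading of RepMet, that each class score is the best Gaussian-kernel match over that class's representative vectors. The value of `sigma` and the toy vectors are hypothetical, and the learned embedding and detection head are not shown.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def class_scores(embedding, representatives, sigma=1.0):
    """representatives[i] lists the mode centres of class i.

    Each class is scored by its closest mode:
    max_j exp(-d(embedding, R_ij)^2 / (2 sigma^2)).
    """
    return [
        max(math.exp(-sq_dist(embedding, r) / (2 * sigma ** 2)) for r in modes)
        for modes in representatives
    ]


# Class 0 is bimodal; its single mean would be [5, 5], i.e. class 1's mode.
representatives = [[[0.0, 0.0], [10.0, 10.0]], [[5.0, 5.0]]]
print(class_scores([10.0, 10.0], representatives))  # class 0 wins clearly
```

The example shows why several modes help: a single mean prototype for class 0 would land on class 1's representative and misclassify the query.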

2.2.9 Task-Aware Feature Embedding

The work by Wang et al. [80] focuses on constructing feature embeddings that are tailored to a particular task. To achieve this, they use a novel model called TAFE-Net (Task-Aware Feature Embedding Network), which has two modules or sub-networks: a meta-learner, followed by a prediction network. Depending on the task, the meta-learner module learns and produces features for that task, and the prediction network adapts to the new task based on the features generated by the meta-learner.

Wang等人[80]的工作重点是为特定任务构建特征嵌入。为此,他们使用了一种称为TAFE-Net(Task-Aware Feature Embedding Network,任务感知特征嵌入网络)的新模型,该模型有两个模块或子网络:元学习器,以及其后的预测网络。根据任务的不同,元学习器模块学习并生成该任务的特征,预测网络则根据元学习器生成的特征适应新任务。

As illustrated in Figure 7, TAFE-Net generates the TAFEs (task-aware feature embeddings) from generic image features. This is achieved through the meta-learner module, which generates the weights of the different layers of the classification (prediction) module. These generated weights are factorized into a task-specific low-dimensional embedding and high-dimensional weights that are shared across all tasks, which reduces the overall complexity. The same classifier module is used regardless of the intended task, and its inputs are the TAFEs generated by the meta-learner module. Code: https://github.com/ucbdrive/tafe-net

如图7所示,TAFE-Net从通用图像特征生成TAFE(任务感知特征嵌入)。这是通过元学习器模块实现的,该模块生成分类(预测)模块中不同层的权重。这些生成的权重被分解为特定于任务的低维嵌入和在所有任务之间共享的高维权重,从而降低了总体复杂性。无论目标任务是什么,所使用的分类器模块都是相同的,其输入是由元学习器模块生成的TAFE。代码:https://github.com/ucbdrive/tafe-net

Fig. 7: The design of the TAFE-Net. TAFE-Net has two modules: a meta-learner and a prediction network. Depending on the task, the meta-learner module learns and produces features for that task, and the prediction network adapts to the new task based on the features generated by the meta-learner. Image Source: [80] TAFE-Net的设计。TAFE-Net有两个模块:元学习器和预测网络。根据任务的不同,元学习器模块学习并生成该任务的特征,预测网络根据元学习器生成的特征适应新任务。图片来源:[80]
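The weight-factorization idea can be sketched as a layer whose shared weight matrix is modulated by a low-dimensional, task-specific vector produced by a meta-learner. Here the factorization is taken to be a per-output-channel scaling, $W_{task} = \mathrm{diag}(t)\,W_{shared}$, and the meta-learner is a hypothetical linear map from a task descriptor. Both are illustrative assumptions rather than TAFE-Net's exact design.

```python
def meta_learner(task_descriptor, projection):
    """Hypothetical meta-learner: a linear map from a task descriptor to t."""
    return [sum(p * d for p, d in zip(row, task_descriptor)) for row in projection]


def task_aware_layer(x, shared_weight, task_vector):
    """y = t * (W_shared x), i.e. W_task = diag(t) W_shared.

    Only len(task_vector) numbers are task-specific; the full matrix
    shared_weight is reused by every task.
    """
    return [
        t * sum(w * xi for w, xi in zip(row, x))
        for t, row in zip(task_vector, shared_weight)
    ]


shared_weight = [[1.0, 0.0], [0.0, 1.0]]
projection = [[1.0, 0.0], [0.0, 1.0]]
x = [2.0, 3.0]
for descriptor in ([1.0, 0.0], [0.0, 1.0]):  # two hypothetical tasks
    t = meta_learner(descriptor, projection)
    print(descriptor, task_aware_layer(x, shared_weight, t))
```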

2.3 Optimization-Based Techniques 基于优化的技术

The techniques discussed in this section use a meta-optimizer that can generalize better to novel tasks. External memory networks, Long Short-Term Memory (LSTM) [130] and other recurrent neural networks (RNNs), and holistic gradient-descent optimizers are among the techniques used as meta-optimizers during the initial training phase. The goal of the meta-optimizer is to learn from various tasks and generalize to novel tasks.

本节讨论的技术使用一种能够更好地泛化到新任务的元优化器。外部记忆网络、长短期记忆(LSTM)[130]等递归神经网络(RNN)以及整体梯度下降优化器等,都是在初始训练阶段用作元优化器的技术。元优化器的目标是从各种任务中学习并泛化到新任务。

2.3.1 LSTM-based Meta Learner

The work by Ravi and Larochelle [76] uses a Long Short-Term Memory (LSTM) network as a meta-learner that learns an optimization algorithm, which is then used to train another learner model (a classifier) in the few-shot regime. Training of most deep neural networks relies on some standard gradient descent algorithm to optimize the network for a specific task:

$$\theta_t = \theta_{t-1} - \alpha_t \nabla_{\theta_{t-1}} \mathcal{L}_t$$

Ravi和Larochelle在[76]中的工作使用长短期记忆(LSTM)网络作为元学习器,学习一种优化算法,用于在小样本场景下训练另一个学习器模型(分类器)。大多数深度神经网络的训练都基于某种标准的梯度下降算法,针对特定任务优化网络。

where, at the $t$-th iteration, $\theta_t$ are the neural network parameters, $\alpha_t$ is the learning rate, $\mathcal{L}_t$ is the loss and $\nabla_{\theta_{t-1}} \mathcal{L}_t$ is the gradient of the loss.

其中,在第$t$次迭代时,$\theta_t$是神经网络参数,$\alpha_t$是学习率,$\mathcal{L}_t$是损失,$\nabla_{\theta_{t-1}} \mathcal{L}_t$是损失的梯度。

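The meta-learner LSTM can learn and adapt its own rules for training the learner. The cell state of the LSTM is set to the learner parameters $\theta_t$, and the candidate cell state is set to the negative gradient of the loss, $-\nabla_{\theta_{t-1}} \mathcal{L}_t$. The input gate $n_t$ determines how valuable the gradient information is (it plays the role of the learning rate), and the forget gate $f_t$ determines how much of the previous parameters is kept. The meta-learner learns the parameters of $n_t$ and $f_t$ so that they take the best possible values during training:

$$\theta_t = f_t \odot \theta_{t-1} + n_t \odot \left(-\nabla_{\theta_{t-1}} \mathcal{L}_t\right)$$

$$n_t = \sigma\left(W_n \cdot \left[\nabla_{\theta_{t-1}} \mathcal{L}_t, \mathcal{L}_t, \theta_{t-1}, n_{t-1}\right] + b_n\right)$$

$$f_t = \sigma\left(W_f \cdot \left[\nabla_{\theta_{t-1}} \mathcal{L}_t, \mathcal{L}_t, \theta_{t-1}, f_{t-1}\right] + b_f\right)$$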

元学习器LSTM可以学习并调整其训练学习器的规则。LSTM的单元状态被设置为学习器参数$\theta_t$,候选单元状态被设置为损失的负梯度$-\nabla_{\theta_{t-1}} \mathcal{L}_t$。输入门$n_t$决定梯度信息的价值(相当于学习率),遗忘门$f_t$决定保留多少先前的参数。元学习器学习$n_t$和$f_t$的参数,使它们在训练期间取得最佳值。

where $W$ denotes the weights and $b$ the biases of the meta-learner. With the learned $n_t$ and $f_t$, the learner can be trained quickly without diverging. Code: https://github.com/markdtw/meta-learning-lstm-pytorch

其中W表示权重,b表示元学习器的偏置。基于学到的$n_t$和$f_t$,学习器可以快速训练而不发散。代码:https://github.com/markdtw/meta-learning-lstm-pytorch
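The relation between the plain gradient step and the meta-learner LSTM update can be sketched per coordinate; Ravi and Larochelle apply the LSTM coordinate-wise with shared weights. The gate weights and biases below are hypothetical hand-picked numbers standing in for meta-learned ones. With zero weights, a large forget bias ($f_t \approx 1$) and an input gate of 0.1, the update reduces to SGD with a learning rate of 0.1.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def sgd_update(theta, grad, lr):
    """theta_t = theta_{t-1} - lr * grad."""
    return [t - lr * g for t, g in zip(theta, grad)]


def meta_lstm_update(theta, grad, loss, prev_n, prev_f, w_n, b_n, w_f, b_f):
    """theta_t = f_t * theta_{t-1} + n_t * (-grad), applied coordinate-wise.

    Each gate sees [grad, loss, theta_{t-1}, previous gate value] for its
    coordinate; w_n/w_f (length 4) and b_n/b_f are shared across coordinates.
    """
    new_theta, n_all, f_all = [], [], []
    for th, g, pn, pf in zip(theta, grad, prev_n, prev_f):
        n = sigmoid(w_n[0] * g + w_n[1] * loss + w_n[2] * th + w_n[3] * pn + b_n)
        f = sigmoid(w_f[0] * g + w_f[1] * loss + w_f[2] * th + w_f[3] * pf + b_f)
        new_theta.append(f * th - n * g)
        n_all.append(n)
        f_all.append(f)
    return new_theta, n_all, f_all


theta = [2.0, -4.0]
grad = theta[:]  # gradient of the toy loss 0.5 * ||theta||^2
loss = 0.5 * sum(t * t for t in theta)
zeros = [0.0] * 4
lstm_theta, n, f = meta_lstm_update(
    theta, grad, loss, prev_n=[0.0, 0.0], prev_f=[0.0, 0.0],
    w_n=zeros, b_n=math.log(0.1 / 0.9),  # input gate = 0.1
    w_f=zeros, b_f=20.0,                  # forget gate close to 1
)
print(sgd_update(theta, grad, 0.1), lstm_theta)
```

Meta-learning the gate parameters lets the learning rate ($n_t$) and the parameter decay ($f_t$) depend on the current gradient, loss and parameters, instead of being fixed as in SGD.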

2.3.2 Memory Augmented Networks based Learning 基于记忆增强网络的学习

The work by Santoro et al. [84] uses a Neural Turing Machine (NTM) [131], which they consider a fully differentiable implementation of a Memory Augmented Neural Network (MANN). They use an LSTM as the controller, which communicates with the external memory through read and write heads. The external memory stores vector representations that can be rapidly written to and read from, so information is exchanged quickly between the model and the memory. This makes the NTM a good option for meta-learning and n-shot prediction. The NTM learns how to strategically place vector representations of the data in memory so that they can later be used to make predictions for the data samples in Dtest. Code: https://github.com/vineetjain96/one-shot-mann

Santoro等人在[84]中使用了神经图灵机(NTM)[131],他们认为这是记忆增强神经网络(MANN)的一种完全可微的实现。他们使用LSTM作为控制器,通过读写头与外部存储器通信。由于外部存储器存储的向量表示可以被快速写入和读出,模型与外部存储器之间的信息交换速度很快,因此NTM非常适合元学习和n-shot预测应用。NTM学会如何策略性地在存储器中放置数据的向量表示,以便稍后用于对Dtest中的数据样本进行预测。代码:https://github.com/vineetjain96/one-shot-mann
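Content-based reading from the external memory can be sketched as below. A key emitted by the controller is compared with every memory row by cosine similarity, and the read vector is the softmax-weighted sum of the rows. Only the read head is shown; the write mechanism and the LSTM controller are omitted, and the memory contents are toy values.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def memory_read(key, memory):
    """Read weights = softmax of cosine similarity; read = weighted sum of rows."""
    sims = [cosine(key, row) for row in memory]
    m = max(sims)
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(memory[0])
    read = [sum(w * row[d] for w, row in zip(weights, memory)) for d in range(dim)]
    return weights, read


memory = [[1.0, 0.0], [0.0, 1.0]]  # two stored vectors
weights, read = memory_read([1.0, 0.0], memory)
print(weights, read)
```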

2.3.3 Model Agnostic based Meta Learning 基于模型不可知的元学习

The work by Finn et al. [85] proposes to train the weights of a given neural network so that the network can adapt to novel tasks from just a few samples. Their approach achieved state-of-the-art performance on novel tasks with quick fine-tuning. Their model also produced good results in reinforcement learning, where it enabled quick fine-tuning of gradient-based policies. The mechanism quickly tunes the weights of a model so that it can generalize and adapt to novel tasks. The idea behind the approach is that some internal representations are more transferable than others: for example, a CNN can learn features that are broadly applicable to a variety of tasks rather than to a single intended task. The authors exploit this fact and show that, with gradient-based fine-tuning, the model can rapidly learn novel tasks without overfitting. Algorithm 1 depicts the overall learning process of the MAML approach. Code: https://github.com/cbfinn/maml

Finn等人[85]的工作提出了一种训练给定神经网络权重的方法,使网络仅需少量样本即可适应新任务。他们的方法在新任务上以快速微调取得了当时最先进的性能。他们的模型在强化学习领域也取得了良好的效果,实现了基于梯度的策略的快速微调。该机制能够快速调整模型的权重,使其能够泛化并快速适应新任务。该方法背后的想法是,某些内部表示比其他表示更容易迁移:例如,CNN可以学到广泛适用于多种任务、而不局限于某一特定任务的特征。作者利用这一事实,表明通过基于梯度的微调,模型可以快速学习新任务而不会过拟合。算法1描述了MAML方法的整体学习过程。代码:https://github.com/cbfinn/maml
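The two-level MAML update can be sketched on a toy family of 1-D regression tasks $y = a x$ with model $\hat{y} = w x$, where the inner step and its derivative are available in closed form. This is an illustrative sketch only. The task family and learning rates are simplifying assumptions, and so is the reuse of the same inputs for the inner and outer losses; MAML normally uses separate support and query samples.

```python
def task_loss_grad(w, a, xs):
    """MSE of y_hat = w * x against y = a * x, and its derivative in w."""
    n = len(xs)
    loss = sum((w * x - a * x) ** 2 for x in xs) / n
    grad = sum(2 * (w * x - a * x) * x for x in xs) / n
    return loss, grad


def adapt(w, a, xs, inner_lr):
    """Inner loop: one gradient step on task a."""
    _, g = task_loss_grad(w, a, xs)
    return w - inner_lr * g


def maml_meta_gradient(w, tasks, xs, inner_lr):
    """Outer gradient, differentiating through the inner step.

    For this quadratic loss d(adapted)/dw = 1 - inner_lr * 2 * mean(x^2).
    """
    s = sum(x * x for x in xs) / len(xs)
    total = 0.0
    for a in tasks:
        w_adapted = adapt(w, a, xs, inner_lr)
        _, g_outer = task_loss_grad(w_adapted, a, xs)
        total += g_outer * (1 - inner_lr * 2 * s)
    return total / len(tasks)


tasks, xs = [1.0, 3.0], [1.0, 2.0]
w = 0.0
for _ in range(50):
    w -= 0.5 * maml_meta_gradient(w, tasks, xs, inner_lr=0.1)
print(w)                                # close to 2.0
print(adapt(w, 3.0, xs, inner_lr=0.1))  # one inner step moves it toward 3.0
```

The meta-learned initialization sits between the tasks, so that a single inner gradient step moves substantially toward whichever task is presented.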

2.3.4 Task-Agnostic Meta-Learning

The work by Jamal et al. [90] proposes a task-agnostic meta-learning approach. A meta-learner trained on a range of tasks can become biased towards some of them, especially when the novel tasks differ from the training tasks. Over-performing on a few tasks can then prevent the meta-learner from learning the novel tasks well. To overcome this problem, the authors use an unbiased meta-learner that learns better on novel tasks. They provide two variants of the task-agnostic meta-learner (TAML): Entropy-Maximization/Reduction (EMR-TAML) and Inequality Minimization (IM-TAML). In EMR-TAML, the initial model is encouraged to make a random guess with equal probability over the predicted labels, so that its predictions are not biased towards any task and it avoids overfitting. This corresponds to maximizing the entropy of the predictions of the initial model with parameters $\theta$, so that the initial model has a large entropy over the predicted labels. The entropy is given in Equation 10:

$$H_{\mathcal{T}_i}(f_\theta) = -\mathbb{E}_{x_{i,j} \sim P_{\mathcal{T}_i}(x)} \sum_{n=1}^{N} \hat{y}_{i,j,n} \log \hat{y}_{i,j,n} \qquad (10)$$

where $\hat{y}_{i,j,n}$ is the predicted probability of label $n$ for sample $x_{i,j}$ of task $\mathcal{T}_i$. In addition, they propose to minimize the entropy $H_{\mathcal{T}_i}(f_{\theta_i})$ after the model parameters are updated from $\theta$ to $\theta_i$. This yields higher confidence in the predicted labels of the adapted model during the search for the optimum $\theta$.

Jamal等人在[90]中提出了一种任务无关的元学习方法。在包含一系列任务的数据集上训练的元学习器可能会偏向其中的少数任务,尤其是当新任务与训练任务存在差异时;在少数任务上的过度表现会妨碍元学习器很好地学习新任务。为了克服这个问题,作者使用了一种无偏的元学习器,使其在新任务上学习得更好。作者为任务无关元学习器(TAML)提供了两种变体:熵最大化/约简(EMR-TAML)和不平等最小化(IM-TAML)。在EMR-TAML中,鼓励初始模型在预测标签上以相等概率进行随机猜测,使其预测不偏向任何任务,从而避免过拟合。这相当于最大化参数为θ的初始模型预测的熵,使初始模型在预测标签上具有较大的熵。熵由方程10给出。此外,他们还提出在模型参数从θ更新为$\theta_i$之后最小化熵$H_{\mathcal{T}_i}(f_{\theta_i})$,从而在寻找最优θ的过程中,使适应后的模型对预测标签具有更高的置信度。

To nullify the bias of a model towards any particular task, the authors use an approach based on ‘economic inequality’ [132] to measure how biased the model is across tasks. The approach borrows inequality measures from economics and statistics: the loss of the initial model on each task $\mathcal{T}_i$ is treated as the ‘income’ of that task. TAML then minimizes the inequality of these losses across multiple tasks, resulting in better meta-learning.

为了消除模型对任何特定任务的偏向,作者使用了一种基于“经济不平等”[132]的方法来衡量模型在各任务之间的偏向程度。该方法借鉴经济学和统计学中的不平等度量,将初始模型在每个任务$\mathcal{T}_i$上的损失视为该任务的“收入”。之后,TAML将多个任务之间的这种损失不平等最小化,从而实现更好的元学习。
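The two quantities TAML works with can be sketched as follows. The first is the entropy of a predicted label distribution, which is maximal for the uniform guess that EMR-TAML encourages for the initial model. The second is an inequality measure over per-task losses. The Theil index is used here as one standard economic inequality measure; treat that choice as an illustrative assumption.

```python
import math


def entropy(probs):
    """Shannon entropy (nats) of a predicted label distribution."""
    return sum(-p * math.log(p) for p in probs if p > 0)


def theil_index(losses):
    """Theil index of positive per-task losses: 0 when all tasks have equal
    loss, larger when the loss is concentrated on a few tasks."""
    mean = sum(losses) / len(losses)
    return sum((l / mean) * math.log(l / mean) for l in losses if l > 0) / len(losses)


print(entropy([0.25] * 4), math.log(4))  # a uniform guess has maximal entropy
print(entropy([1.0, 0.0, 0.0, 0.0]))     # a confident prediction has zero entropy
print(theil_index([1.0, 1.0, 1.0]))      # equal losses: no inequality
print(theil_index([1.0, 3.0]))           # unequal losses: positive
```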

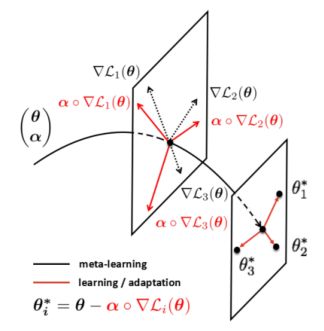

2.3.5 Meta-SGD

The work by Li et al. [86] proposes an SGD-like optimizer called Meta-SGD, which can easily and quickly adapt to novel tasks and is applicable to supervised learning (classification, regression, etc.) and reinforcement learning [44], [133], [134], [135]. Compared with the Meta-Learning LSTM [76], Meta-SGD is conceptually simpler, easier to implement and easier to train, and it achieves better performance. Experimental results show that Meta-SGD also outperforms the MAML approach.

Li等人在[86]中提出了一种类似SGD的优化器,称为Meta-SGD,它可以轻松快速地适应新任务,适用于监督学习(分类、回归等)和强化学习[44]、[133]、[134]、[135]领域。与Meta-Learning LSTM[76]相比,Meta-SGD概念更简单、更易于实现和训练,并且可以取得更好的性能。实验结果表明,Meta-SGD也优于MAML方法。

Figure 9 illustrates the overall learning procedure of Meta-SGD. The idea is to have a meta-learner that learns from a range of different tasks and can therefore generalize better to a novel task. Learning in Meta-SGD happens at two levels. First, the meta-learner gradually learns across the different tasks in the meta-space $(\theta, \alpha)$, where $\theta$ is the initialization and $\alpha$ holds the learning rates. Second, the learner adapts to each task in the learning space, and its feedback is used to evolve the meta-learner. Code: https://github.com/foolyc/Meta-SGD

图9展示了Meta-SGD的整体学习过程。其思想是让元学习器从一系列不同的任务中学习,从而能够更好地泛化到新任务。Meta-SGD中的学习分为两个层面:第一,元学习器在元空间(θ, α)中逐渐学习不同的任务,其中θ是初始化参数,α包含学习率;第二,学习器在学习空间中适应每个具体任务,其反馈用于改进元学习器。代码:https://github.com/foolyc/Meta-SGD

Fig. 9: The overview of the Meta-SGD learning process. Meta-SGD can quickly adapt to novel tasks and is applicable to both supervised learning and reinforcement learning. Image Source: [86] Meta-SGD学习过程概述。Meta-SGD能快速适应新任务,适用于监督学习和强化学习领域。图片来源:[86]
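The core Meta-SGD update, $\theta' = \theta - \alpha \circ \nabla \mathcal{L}$ with one learnable learning rate per parameter, can be sketched on a toy quadratic task. For brevity only $\alpha$ is meta-learned here while $\theta$ is held fixed; Meta-SGD learns both. The task, step sizes and iteration count are illustrative assumptions.

```python
def meta_sgd_adapt(theta, alpha, grad):
    """theta' = theta - alpha * grad, element-wise (one learning rate per parameter)."""
    return [t - a * g for t, a, g in zip(theta, alpha, grad)]


theta = [0.0, 0.0]   # initialization (kept fixed in this sketch)
target = [1.0, 2.0]  # toy task: loss = 0.5 * ||theta - target||^2
alpha = [0.1, 0.1]   # per-parameter learning rates, meta-learned below
meta_lr = 0.1

for _ in range(200):
    grad = [t - y for t, y in zip(theta, target)]
    adapted = meta_sgd_adapt(theta, alpha, grad)
    # d/d alpha_i of 0.5 * (adapted_i - target_i)^2
    #   = (adapted_i - target_i) * d(adapted_i)/d(alpha_i)
    #   = (adapted_i - target_i) * (-grad_i)
    meta_grad = [(ad - y) * (-g) for ad, y, g in zip(adapted, target, grad)]
    alpha = [a - meta_lr * mg for a, mg in zip(alpha, meta_grad)]

print(alpha)  # both rates approach 1.0, the one-step optimum for this task
```

Because $\alpha$ is a vector, each parameter gets its own step size (and, through its sign, its own update direction), which is the extra capacity Meta-SGD adds over a single fixed learning rate.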

2.3.6 Learning to Learn in the Concept Space 在概念空间中学习

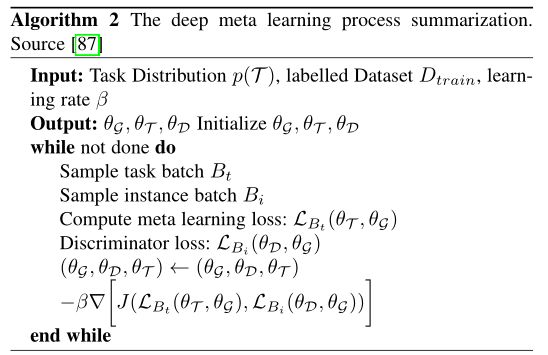

The work by Zhou et al. [87] incorporates the representation power of deep learning into meta-learning. As illustrated in Figure 8, their approach includes three sub-modules: a concept generator G; a meta-learner T; and a concept discriminator D. The concept generator is a deep neural network (e.g., ResNet, Inception, VGG Net) that extracts features from the input images. The meta-learner learns on these extracted features, and the concept discriminator (e.g., SVM, DNN, fully connected layers) differentiates between the features to recognize different images. The main goal of this approach is to train the concept generator in parallel with the meta-learner on a series of similar tasks, which they claim improves the performance of a vanilla meta-learner. Deep Meta-Learning (DEML), in combination with several other techniques (such as Matching Network [75], MAML [85] and Meta-SGD [86]), has been shown to outperform the then-best results for the n-shot learning problem.

Zhou等人在[87]中的工作将深度学习的表征能力引入元学习任务。如图8所示,他们的方法包括三个子模块:概念生成器G、元学习器T和概念鉴别器D。概念生成器是一个深度神经网络(例如ResNet、Inception、VGG网络),它从输入图像中提取特征;元学习器在这些提取的特征上学习;概念鉴别器(如SVM、DNN、全连接层等)区分特征以识别不同的图像。这种方法的主要目标是在一系列类似任务上将概念生成器与元学习器并行训练,他们声称这样可以提高普通元学习器的性能。深度元学习(DEML)与其他几种技术(如匹配网络[75]、MAML[85]、Meta-SGD[86])结合,在n-shot学习问题上的表现优于当时的最好结果。

Fig. 8: The overview of the deep meta-learner. The network consists of three sub-modules: a concept generator G; a meta-learner T; and a concept discriminator D. The main objective is to train the concept generator in parallel with the meta-learner on a series of similar tasks, which enhances the performance of a vanilla meta-learner. Image Source: [87] 深度元学习器概述。该网络由三个子模块组成:概念生成器G、元学习器T和概念鉴别器D。主要目标是在一系列类似任务上将概念生成器与元学习器并行训练,从而提高普通元学习器的性能。图片来源:[87]

The goal is to minimize the joint expectation $J$ of the meta-learning loss $\mathcal{L}_{\mathcal{T}}(\theta_T, \theta_G)$ and the discriminator loss $\mathcal{L}_{(x,y)}(\theta_D, \theta_G)$ of the labelled samples $(x, y)$ in Dtrain.

目标是最小化Dtrain中标记样本(x,y)的元学习损失LT(θT,θG)和鉴别器损失L(x,y)(θD,θG)的联合期望(J)

2.3.7 ∆-encoder

The research by Schwartz et al. [89] proposes an effective and comparatively simple approach for one-shot and few-shot learning, built upon a modified auto-encoder called the ∆-encoder. The ∆-encoder can generalize to novel tasks after exposure to only a few samples from those tasks. The ∆s are transferable intra-class deformations. The model learns to extract these ∆s from examples of the seen classes and, at the same time, to transfer them to novel classes, synthesizing samples that are used to predict novel samples. Code: https://github.com/EliSchwartz/DeltaEncoder

Schwartz等人在[89]中提出了一种有效且相对简单的方法,用于单样本和小样本学习设置,该方法建立在一个经过修改的自动编码器上,称为∆-编码器。∆-编码器在仅接触新任务的少量样本后,即可泛化到这些新任务。∆是可迁移的类内形变。模型学习如何从已见类别的样本中提取这些∆,同时将∆迁移到新类别上以合成样本,用于对新样本进行预测。代码:https://github.com/EliSchwartz/DeltaEncoder
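Extracting intra-class ∆s from seen classes and applying them to a single example of a novel class can be illustrated with an additive stand-in. Here the "encoder" is just the difference between two same-class feature vectors, and the "decoder" adds that difference back to the novel example. The actual ∆-encoder learns a non-linear encoder and decoder, so this is only a simplified analogy.

```python
def extract_deltas(pairs):
    """Stand-in encoder: delta = sample - anchor, for same-class pairs."""
    return [[s - a for s, a in zip(sample, anchor)] for sample, anchor in pairs]


def synthesize(novel_example, deltas):
    """Stand-in decoder: apply every delta to the single novel example."""
    return [[x + d for x, d in zip(novel_example, delta)] for delta in deltas]


# (sample, anchor) pairs taken from seen classes.
seen_pairs = [([1.0, 1.0], [0.0, 0.0]), ([2.0, 0.0], [1.0, 0.0])]
deltas = extract_deltas(seen_pairs)
print(deltas)                          # [[1.0, 1.0], [1.0, 0.0]]
print(synthesize([5.0, 5.0], deltas))  # [[6.0, 6.0], [6.0, 5.0]]
```

The synthesized vectors would then serve as extra training samples for a classifier on the novel class.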

2.4 Semantic-Based Techniques

This section discusses semantic-based techniques, in which semantic information is used together with the samples to learn and generalize better to novel tasks. Semantic-based techniques are most popular in zero-shot learning (ZSL) settings. The inspiration behind this approach is that when an adult points out a new object to a child, the adult usually also describes it, which helps the child make the association. This information from the adult can be viewed as semantic knowledge that helps the child learn novel classes. In traditional zero-shot learning settings, either the semantics and the data are mapped to a common embedding space [138], or the semantics are mapped to the data or vice versa [59], [139].

本节讨论基于语义的技术,这类技术将语义信息与样本一起使用,以便更好地学习并泛化到新任务。基于语义的技术更常用于零样本学习(ZSL)设置。这种方法背后的灵感是:当成年人向孩子指出一件新事物时,通常会附带一些说明,帮助孩子建立联系。成年人提供的信息或指点可以看作一种语义知识,帮助孩子学习新类别。在传统的零样本学习设置中,要么将语义和数据映射到公共嵌入空间[138],要么将语义映射到数据空间,或者反之[59],[139]。
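
A minimal sketch of the "data to semantics" direction: a linear (ridge-regression) map from visual features to attribute vectors is fitted on seen classes only, and an unseen class is then predicted by cosine similarity in attribute space. The linear map, the attribute names, and all numbers are illustrative assumptions, not the specific models of [59], [138] or [139].

```python
import numpy as np

def fit_visual_to_semantic(X, S, reg=1e-3):
    """Ridge regression: W minimizing ||X @ W - S||^2 + reg * ||W||^2 (features -> semantics)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + reg * np.eye(d), X.T @ S)

def zero_shot_predict(x, W, class_semantics):
    """Project x into semantic space; return the class whose semantic vector is most cosine-similar."""
    z = x @ W
    names = list(class_semantics)
    M = np.stack([class_semantics[n] for n in names])
    sims = M @ z / (np.linalg.norm(M, axis=1) * np.linalg.norm(z) + 1e-12)
    return names[int(np.argmax(sims))]

# Hypothetical attributes: [striped, has_hooves, can_fly].
seen = {"tiger": [1, 0, 0], "horse": [0, 1, 0], "eagle": [0, 0, 1]}
unseen = {"zebra": np.array([1.0, 1.0, 0.0]), "pegasus": np.array([0.0, 1.0, 1.0])}

# Hypothetical "visual features": a fixed linear mixing A of each class's attributes.
A = np.array([[2.0, 0.5, 0.0], [0.0, 1.0, 0.3], [0.1, 0.0, 1.5]])
S = np.array(list(seen.values()), dtype=float)
X = S @ A

W = fit_visual_to_semantic(X, S)            # trained on seen classes only
query = np.array([1.0, 1.0, 0.0]) @ A       # features of an image of an unseen zebra
print(zero_shot_predict(query, W, unseen))  # zebra
```

No zebra image is used for training: the prediction comes entirely from the zebra's semantic description, which is what makes the setting zero-shot.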

2.4.1 Learning with Multiple Semantics 多重语义学习

Schwartz [92] presents incremental work on the few-shot learning techniques discussed in [140], incorporating additional semantic information that can help make the learning process more effective. They propose combining multiple high-level semantics, such as natural-language descriptions, category labels, and attributes. They split the training into two stages. In phase one, they train the CNN backbone of the network from scratch using the labelled samples; they believe, however, that a pretrained, task-specific CNN model fine-tuned on the labelled dataset gives much better performance than training it from scratch. In phase two, the linear classifier is replaced by an MLP (multilayer perceptron) and all previous layers are frozen. The MLP generates "semantic prototypes", which are then added to the semantic branches as shown in Figure 10.

Schwartz[92]在[140]所讨论的小样本学习技术的基础上做了增量工作,加入了额外的语义信息,使学习过程更加有效。他们提出结合多种高层语义,如自然语言描述、类别标签和属性。他们将训练分为两个阶段:第一阶段,使用带标签的样本从头开始训练网络的CNN主干。不过,他们认为,在带标签数据集上微调一个预训练的、针对特定任务的CNN模型,其性能要比从头开始训练好得多;第二阶段,用MLP(多层感知器)取代线性分类器,并冻结之前的所有层。MLP能够生成"语义原型",然后将其添加到语义分支中,如图10所示。
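
The role of the semantic prototypes can be sketched as follows, under the assumption that each class prototype is a weighted (convex) combination of the visual prototype and one prototype per semantic branch, and that a query is assigned to the nearest combined prototype. The mixing weights and the 2-D vectors are hypothetical; in [92] the prototypes are produced by learned networks.

```python
import numpy as np

def combine_prototypes(visual_proto, semantic_protos, weights):
    """Convex combination: weights[0] for the visual prototype, weights[1:] for each semantic branch."""
    weights = np.asarray(weights, dtype=float)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("mixing weights must sum to 1")
    stacked = np.vstack([visual_proto, *semantic_protos])
    return weights @ stacked

def classify(query, prototypes):
    """Assign the query to the class with the nearest (Euclidean) combined prototype."""
    return min(prototypes, key=lambda c: np.linalg.norm(query - prototypes[c]))

# Hypothetical 2-D embeddings: a 1-shot visual prototype plus three semantic prototypes
# (category label, attributes, natural-language description) per class.
weights = [0.5, 0.2, 0.2, 0.1]
classes = {
    "cat": ([1.0, 0.0], [[0.8, 0.2], [0.9, 0.1], [1.0, 0.0]]),
    "dog": ([0.0, 1.0], [[0.2, 0.8], [0.1, 0.9], [0.0, 1.0]]),
}
prototypes = {c: combine_prototypes(v, s, weights) for c, (v, s) in classes.items()}
print(prototypes["cat"])                           # approximately [0.94 0.06]
print(classify(np.array([0.7, 0.4]), prototypes))  # cat
```

The semantic terms act as a prior that pulls a noisy 1-shot visual prototype towards what the class is described to look like.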

Fig. 10: The overview of the multiple semantics method, which includes various high-level information such as natural language, category labels, and attributes. They split the training into two stages: Phase one, where they train the CNN backbone of the network from scratch using the labelled samples. Image Source: [92] 多重语义方法概述,其中包括自然语言、类别标签和属性等多种高层信息。他们将训练分为两个阶段:第一阶段,使用带标签的样本从头开始训练网络的CNN主干。图片来源:[92]

2.4.2 Learning via Aligned Variational Autoencoders (VAE)

The work by Schonfeld et al. [93] builds incrementally on feature-generation techniques, in which a model learns a latent space shared by the image embeddings and the respective class embeddings. The shared latent space is learned by modality-specific variational autoencoders (VAEs) [141]. They evaluated the model on several benchmark datasets and claimed state-of-the-art results for generalized zero-shot learning and few-shot learning. Code: https://github.com/edgarschnfld/CADA-VAE-PyTorch [93]

Schonfeld等人[93]的工作是对特征生成技术的改进,其中模型学习一个由图像嵌入和相应类别嵌入共享的潜在空间。该共享潜在空间由特定于各模态的变分自动编码器(VAE)[141]学习。他们在多个基准数据集上对模型进行了评估,并声称其模型在广义零样本学习和小样本学习上达到了最先进水平。代码:https://github.com/edgarschnfld/CADA-VAE-PyTorch [93]
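
To make "aligned" modality-specific latent spaces concrete: each VAE encoder outputs a diagonal Gaussian, and one way to pull the image latent and the class latent together is to penalize the 2-Wasserstein distance between them, which has a closed form for diagonal Gaussians. This is a minimal sketch of such an alignment term only (no encoders, decoders, or training loop); the encoder outputs are made-up numbers, and the linked repository should be consulted for how [93] actually defines and weights its losses.

```python
import numpy as np

def reparameterize(mu, logvar, rng):
    """VAE reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I)."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)

def w2_diag_gaussians(mu1, logvar1, mu2, logvar2):
    """2-Wasserstein distance between two Gaussians with diagonal covariance.

    Closed form: sqrt(||mu1 - mu2||^2 + ||sigma1 - sigma2||^2).
    """
    s1, s2 = np.exp(0.5 * logvar1), np.exp(0.5 * logvar2)
    return float(np.sqrt(np.sum((mu1 - mu2) ** 2) + np.sum((s1 - s2) ** 2)))

# Made-up encoder outputs (mean, log-variance) for one image and for its class embedding.
mu_img, logvar_img = np.array([0.0, 0.0]), np.zeros(2)
mu_cls, logvar_cls = np.array([3.0, 4.0]), np.zeros(2)

print(w2_diag_gaussians(mu_img, logvar_img, mu_cls, logvar_cls))  # 5.0: latents not yet aligned
print(w2_diag_gaussians(mu_img, logvar_img, mu_img, logvar_img))  # 0.0: perfectly aligned

rng = np.random.default_rng(0)
z_img = reparameterize(mu_img, logvar_img, rng)  # latent sample a decoder would reconstruct from
```

Driving this distance towards zero during training makes image and class embeddings of the same class land in the same region of the shared latent space, so features for unseen classes can be generated from their class embeddings alone.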

2.4.3 Learning by Knowledge Transfer With Class Hierarchy

Li et al. [94] propose a large-scale model in which the learned features can be transferred along the class hierarchy and encode the semantic relation between the source and target classes. They claim that their model outperforms the then state-of-the-art approach on a large-scale zero-shot learning problem and can also be extended to few-shot learning applications. At a high level, the prior knowledge they use is the semantic relation between the source classes and the target classes, and they claim that transferring the feature embeddings of the source classes helps in predicting the target classes. Code: https://github.com/tiangeluo/fsl-hierarchy

Li等人在[94]中提出了一个大规模模型,其学习到的特征可以沿类层次结构迁移,并能编码源类与目标类之间的语义关系。他们声称,该模型在大规模零样本学习问题上优于当时最先进的方法,并且还可以扩展到小样本学习应用中。从宏观上看,他们使用的先验知识是源类与目标类之间的语义关系,他们声称迁移源类的特征嵌入有助于预测目标类。代码:https://github.com/tiangeluo/fsl-hierarchy
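
The intuition of transferring source-class embeddings through the hierarchy can be shown with a simplified stand-in that is not the model of [94]: each target class receives, as its prototype, the mean embedding of the source classes that share its parent node, and a query is assigned to the nearest such prototype. The hierarchy, class names, and embeddings are hypothetical.

```python
import numpy as np

def transfer_prototypes(source_protos, parent_of, target_classes):
    """Prototype of each target class = mean embedding of source classes with the same parent."""
    protos = {}
    for t in target_classes:
        siblings = [s for s in source_protos if parent_of[s] == parent_of[t]]
        protos[t] = np.mean([source_protos[s] for s in siblings], axis=0)
    return protos

def nearest_class(query, protos):
    """Assign the query to the target class with the closest (Euclidean) prototype."""
    return min(protos, key=lambda c: np.linalg.norm(query - protos[c]))

# Hypothetical source-class embeddings and a two-level class hierarchy.
source_protos = {
    "sparrow": np.array([1.0, 0.0, 0.0]), "eagle": np.array([0.8, 0.2, 0.0]),
    "trout": np.array([0.0, 0.0, 1.0]), "salmon": np.array([0.0, 0.2, 0.8]),
}
parent_of = {"sparrow": "bird", "eagle": "bird", "robin": "bird",
             "trout": "fish", "salmon": "fish", "tuna": "fish"}

target_protos = transfer_prototypes(source_protos, parent_of, ["robin", "tuna"])
print(target_protos["tuna"])                                    # [0.  0.1 0.9]
print(nearest_class(np.array([0.7, 0.3, 0.1]), target_protos))  # robin
```

On its own this only separates targets under different parents; in a few-shot extension, such a hierarchy-derived prototype would serve as a prior to be refined with the available labelled samples.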

3 DISCUSSION AND FUTURE DIRECTION

This section presents the different techniques and compares their performance on various benchmarks and datasets. The two most commonly used benchmark datasets for evaluating these techniques are Omniglot and MiniImageNet. The Omniglot dataset contains 1623 different handwritten characters from various alphabets, each drawn online by 20 different people via Amazon's Mechanical Turk. In terms of image complexity, Omniglot is similar to the MNIST dataset. The much more complex MiniImageNet, on the other hand, is a subset of the ImageNet dataset [142]². The MiniImageNet dataset contains images of size 84 × 84 belonging to 100 randomly selected categories, with 600 images per category, i.e. a total of 60,000 images. In the standard 5-way training procedure, the support set contains 1 or 5 samples (or any number of samples between 1 and 10) for each of the 5 output classes, hence the name "5-way". Of the 100 categories, 64 are used for training, 16 for validation, and 20 for testing.

本节介绍所使用的不同技术,并比较它们在各种基准和数据集上的性能。评估这些技术最常用的两个基准数据集是Omniglot和MiniImageNet。Omniglot数据集包含来自不同字母表的1623个手写字符,每个字符都由20个不同的人通过亚马逊的Mechanical Turk在线绘制。就图像的复杂性而言,Omniglot与MNIST数据集类似。另一方面,复杂得多的MiniImageNet是ImageNet数据集[142]²的子集。MiniImageNet数据集包含大小为84×84的图像,这些图像属于随机选择的100个类别,每个类别有600张图像,即总共60000张图像。5-way设置中的标准训练流程是为支持集中5个类别的每一个提供1个或5个样本(也可以是1到10之间的任意数量),因此称为5-way。在这100个类别中,64个用于训练,16个用于验证,20个用于测试。
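
The 5-way K-shot protocol described above can be sketched as an episode sampler. This is a minimal sketch under stated assumptions: images are placeholder IDs, the class split is drawn at random for illustration (published MiniImageNet splits fix which classes go where), and `n_query=15` query images per class is an assumed value, not one given in this section.

```python
import random

def split_classes(classes, n_train=64, n_val=16, n_test=20, rng=None):
    """Randomly partition class names into disjoint train/val/test class sets."""
    rng = rng or random.Random(0)
    shuffled = list(classes)
    rng.shuffle(shuffled)
    assert len(shuffled) == n_train + n_val + n_test
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

def sample_episode(class_to_images, classes, n_way=5, k_shot=1, n_query=15, rng=None):
    """Draw one N-way K-shot episode: K support and n_query query images per chosen class."""
    rng = rng or random.Random(0)
    chosen = rng.sample(classes, n_way)
    support, query = [], []
    for label, c in enumerate(chosen):  # labels 0..n_way-1 are re-assigned per episode
        images = rng.sample(class_to_images[c], k_shot + n_query)
        support += [(img, label) for img in images[:k_shot]]
        query += [(img, label) for img in images[k_shot:]]
    return support, query

# Placeholder MiniImageNet-like layout: 100 classes x 600 image IDs = 60,000 images.
dataset = {f"class_{i:03d}": [f"img_{i:03d}_{j:03d}" for j in range(600)] for i in range(100)}
train_cls, val_cls, test_cls = split_classes(dataset)
support, query = sample_episode(dataset, train_cls, n_way=5, k_shot=1)
print(len(support), len(query))  # 5 75
```

Setting `k_shot=5` gives the 5-shot setting; evaluation episodes are drawn the same way but from `test_cls`, so the model never sees those classes during training.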

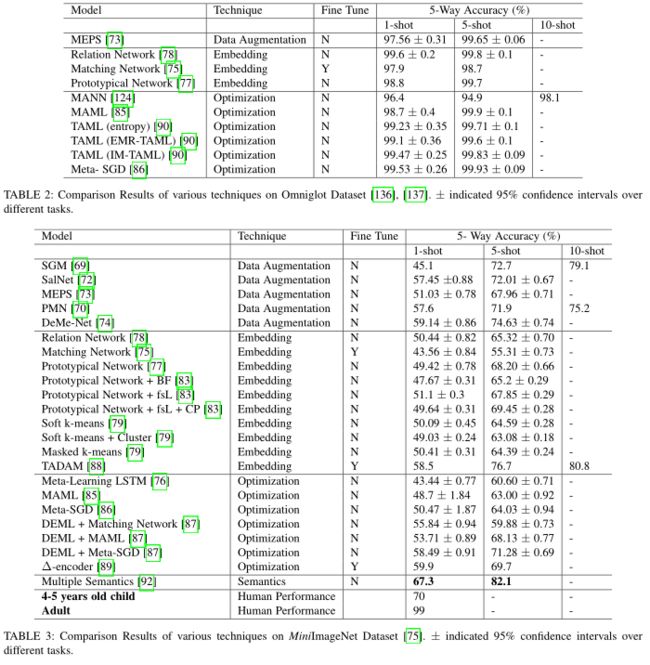

Table 2 depicts the performance of the various techniques discussed on the Omniglot dataset. Because the dataset is relatively simple, most models achieve high accuracy in both the 1-shot and 5-shot settings. Even though data-augmentation and embedding (or metric) learning achieve high accuracies, the optimization-based techniques are the clear winner, with an accuracy of 99.53% using the Meta-SGD algorithm [86]. A few optimization-based techniques, including the Meta-SGD algorithm, have a broader application scope and can also be used in reinforcement learning settings.

表2展示了所讨论的各种技术在Omniglot数据集上的性能。由于该数据集相对简单,大多数模型在1-shot和5-shot设置下都能达到很高的准确率。尽管数据增强和嵌入(或度量)学习取得了较高的准确率,但基于优化的技术显然是赢家,使用Meta-SGD算法[86]的准确率达到99.53%。少数基于优化的技术(包括Meta-SGD算法)具有更广泛的应用范围,也可以用于强化学习设置。
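
What distinguishes Meta-SGD from MAML is that it meta-learns not only the initialization θ but also a vector α of per-parameter learning rates, the same shape as θ, used in the inner update θ' = θ − α ∘ ∇L (element-wise product). The sketch below shows only that inner adaptation step on a hypothetical linear-regression task; the outer loop that updates θ and α across many tasks is omitted, and the values of α are made up.

```python
import numpy as np

def mse_loss_and_grad(theta, X, y):
    """Mean-squared error of the linear model X @ theta and its gradient w.r.t. theta."""
    residual = X @ theta - y
    return float(np.mean(residual ** 2)), 2.0 * X.T @ residual / len(y)

def meta_sgd_inner_step(theta, alpha, X_support, y_support):
    """One task-specific adaptation step: theta' = theta - alpha * grad (element-wise)."""
    _, grad = mse_loss_and_grad(theta, X_support, y_support)
    return theta - alpha * grad

theta = np.zeros(2)            # meta-learned initialization (placeholder values)
alpha = np.array([0.5, 0.1])   # meta-learned per-parameter learning rates (made-up values)
X_support = np.array([[1.0, 0.0], [0.0, 1.0]])
y_support = np.array([1.0, 1.0])

loss_before, _ = mse_loss_and_grad(theta, X_support, y_support)
theta_task = meta_sgd_inner_step(theta, alpha, X_support, y_support)
loss_after, _ = mse_loss_and_grad(theta_task, X_support, y_support)
print(theta_task, loss_before, loss_after)  # the support-set loss drops after one step
```

Because α is per-parameter, each coordinate moves by a different amount (here 0.5 versus 0.1); in Meta-SGD these rates are themselves tuned so that a single step works well across tasks.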

Table 3 highlights the performance of the various techniques discussed on the MiniImageNet dataset³. As can be seen, there is a considerable drop in the performance of these techniques compared with their performance on the Omniglot dataset. Techniques such as Matching Networks, MAML and Meta-SGD achieve over 95% accuracy on the Omniglot dataset, whereas the same models have significantly lower accuracies on the MiniImageNet dataset. A major reason for this drop is that the images in the MiniImageNet dataset are much richer in contextual meaning and information than those in the Omniglot dataset, where the images are just characters. The data-augmentation, embedding, and optimization approaches reach 1-shot accuracies of around 55%; in the 5-shot setting, accuracies rise significantly, to around 70%. The general trend is that the more samples, the higher the accuracy. Since the aim is to make models learn from as few samples as possible, we focus on the 1-shot accuracies. The state-of-the-art accuracy is achieved by using semantic information as an additional source of information alongside the data, helping the model understand novel categories.

表3突出显示了所讨论的各种技术在MiniImageNet数据集³上的性能。显然,与在Omniglot数据集上的性能相比,这些技术的性能有相当大的下降。匹配网络、MAML、Meta-SGD等技术在Omniglot数据集上的准确率超过95%,而同样的模型在MiniImageNet数据集上的准确率明显较低。这种下降的一个主要原因是,与只包含字符的Omniglot图像相比,MiniImageNet数据集中的图像在上下文含义和信息丰富程度等方面要复杂得多。数据增强、嵌入和优化方法的1-shot准确率约为55%,而在5-shot设置下准确率显著提高,达到70%左右。总体趋势是,样本数量越多,准确率越高。为了使模型从尽可能少的样本中学习,我们重点关注1-shot准确率。目前最先进的准确率是通过将语义作为数据之外的附加信息源、帮助模型理解新类别而实现的。
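
Few-shot accuracies such as the ~55% (1-shot) and ~70% (5-shot) figures above are averages over many randomly sampled test episodes, and they are commonly reported together with a 95% confidence interval. A minimal sketch of that computation, assuming a normal approximation; the per-episode accuracies below are made-up numbers.

```python
import math
import statistics

def mean_and_ci95(episode_accuracies):
    """Mean accuracy and half-width of a normal-approximation 95% confidence interval."""
    n = len(episode_accuracies)
    mean = statistics.fmean(episode_accuracies)
    std = statistics.stdev(episode_accuracies)  # sample standard deviation (n - 1)
    return mean, 1.96 * std / math.sqrt(n)

# Made-up accuracies from five 5-way 1-shot test episodes.
accs = [0.50, 0.60, 0.55, 0.45, 0.65]
mean, half_width = mean_and_ci95(accs)
print(f"{100 * mean:.2f} +/- {100 * half_width:.2f} %")
```

With only five episodes the interval is wide; evaluating on hundreds of episodes shrinks it by a factor of roughly 1/√n, which is why small differences between entries in a results table are only meaningful when their intervals do not overlap.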

The present state of the art still falls short of the performance of a 4- to 5-year-old preschooler, indicating substantial room for improvement before these techniques can compete with or match the performance of an adult human. A hybrid model that exploits the advantages of various techniques to boost performance could be the next course of action in the few-shot meta-learning domain: for example, a cross-modal implementation that incorporates semantic information along with data-augmentation and/or embedding techniques, as suggested by the results in Table 3. The results show that using semantic information achieves superior results compared with data-augmentation or embedding techniques. However, the sole use of a semantic-based approach still falls short of human performance. A promising direction is to incorporate advanced techniques or models, such as attention mechanisms [143], [144], self-attention, transformers [1], or variants of variational autoencoders (VAEs) such as β-VAE [145], VQ-VAE [146], VQ-VAE-2 [147] and TD-VAE [148], which can generate better low-dimensional semantic information for generalizing from limited samples and thus perform better on novel categories, together with an improved distance function (Euclidean or cosine) that can robustly classify or cluster the low-dimensional embeddings.

与4-5岁学龄前儿童的表现相比,目前最先进的技术仍有不足,这表明在不久的将来,这些技术要想与成年人的表现相竞争或持平,仍有很大的改进空间。能够利用多种技术优势来提升性能的混合模型,可能是小样本元学习领域的下一步方向:例如,参考表3中的结果,构建一种跨模态实现,将语义信息与数据增强和/或嵌入技术结合起来。对结果的观察表明,与数据增强或嵌入技术相比,使用语义信息可以获得更好的结果。然而,与人类相比,仅使用基于语义的方法在性能上仍有欠缺。一个有前景的方向是结合先进的技术或模型,如注意力机制[143]、[144]、自注意力机制、Transformer[1],或变分自动编码器(VAE)的变体,如β-VAE[145]、VQ-VAE[146]、VQ-VAE-2[147]和TD-VAE[148],它们能够更好地生成低维语义信息,从而在有限样本上更好地泛化、在新类别上表现更好;同时配合改进的距离函数(欧几里得距离或余弦距离),对低维嵌入进行稳健的分类或聚类。
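
The choice of distance function matters because Euclidean distance is sensitive to embedding magnitude, whereas cosine similarity compares only direction, so the two can assign the same low-dimensional embedding to different classes. A minimal nearest-prototype sketch with hypothetical 2-D embeddings illustrates such a disagreement.

```python
import numpy as np

def nearest(query, prototypes, metric="euclidean"):
    """Return the label of the closest prototype under Euclidean distance or cosine similarity."""
    labels = list(prototypes)
    P = np.stack([prototypes[label] for label in labels])
    if metric == "euclidean":
        scores = -np.linalg.norm(P - query, axis=1)  # negated so that higher means closer
    elif metric == "cosine":
        scores = P @ query / (np.linalg.norm(P, axis=1) * np.linalg.norm(query))
    else:
        raise ValueError(f"unknown metric: {metric}")
    return labels[int(np.argmax(scores))]

# Hypothetical class prototypes and a query on which the two metrics disagree.
prototypes = {"A": np.array([1.0, 0.0]), "B": np.array([3.0, 3.0])}
query = np.array([3.0, 1.0])
print(nearest(query, prototypes, "euclidean"))  # B: closer in absolute position
print(nearest(query, prototypes, "cosine"))     # A: closer in direction
```

Which behaviour is preferable depends on whether embedding norms carry class information; normalizing embeddings before applying Euclidean distance makes the two rankings coincide.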

4 CONCLUSION

With enough data samples and their respective labels available, deep learning models can yield better performance by generalizing well to such tasks, but they fail when the model has to learn from limited samples. Representation-based learning methods such as few-shot learning and meta-learning are used to improve the ability of a model to learn from limited samples. In this survey, we investigated the findings and existing techniques of few-shot meta-learning for supervised learning in the computer vision domain. We highlighted why it is imperative to research techniques in which the model must generalize well based on limited data samples and prior knowledge. We classified the techniques into four main categories based on their approach towards solving the few-shot learning problem. We analyzed the performance of these techniques on two benchmark datasets, Omniglot and MiniImageNet, and provided a brief discussion of their performance and the ideal settings for those techniques, along with a potential future direction for research towards matching or outperforming humans.

有了足够的数据样本及其对应的标签,深度学习模型可以通过在此类任务上良好泛化而获得更好的性能,但当模型必须从有限的样本中学习时则会失败。小样本学习、元学习等基于表征的学习方法被用来提高模型从有限样本中学习的能力。在本综述中,我们考察了计算机视觉领域中面向监督学习的小样本元学习技术的研究发现和现有技术。我们强调了为什么必须研究能让模型基于有限数据样本和先验知识实现良好泛化的技术。我们根据解决小样本学习问题的方法,将这些技术分为四大类。我们在Omniglot和MiniImageNet这两个基准数据集上分析了这些技术的性能,简要讨论了它们的表现和适用的理想设置,并指出了未来为达到或超越人类水平而可能开展的研究方向。