Running a neural network with Qualcomm Snapdragon SNPE on different cores: a detailed code walkthrough

#### Written fairly casually, so please go easy on me, thanks

Test code on GitHub: https://github.com/LiYangDoYourself/c-_Snpe

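1 Download the SNPE SDK

Link: [SNPE download page](https://developer.qualcomm.com/downloads/qualcomm-neural-processing-sdk-ai-v129)

2 Set up the environment for converting pb models to dlc by following the guide below

Link: [Environment setup guide](https://developer.qualcomm.com/software/qualcomm-neural-processing-sdk/getting-started)

3 Run the conversion command to generate the dlc model

Note: run it from /home/ly/workspace/snpe-sdk/bin/x86_64-linux-clang; otherwise the snpe-tensorflow-to-dlc executable will not be found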

`snpe-tensorflow-to-dlc --graph xxx.pb --input_dim 1,64,64,1 --out_node MobilenetV1/Predictions/Reshape_1 --dlc xxx.dlc`
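The dimensions passed to `--input_dim` also fix how much data each input must contain. The loading code later in this post reads every input file as raw floats (`length/sizeof(float)`), so it can help to check file sizes up front. The following is a minimal sketch under that assumption; `elementCount` and `rawFileBytes` are hypothetical helper names, not part of SNPE.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>

// Hypothetical helper: number of elements implied by a comma-separated
// dimension string such as "1,64,64,1".
std::size_t elementCount(const std::string& dims) {
    assert(!dims.empty());
    std::istringstream in(dims);
    std::string field;
    std::size_t count = 1;
    while (std::getline(in, field, ',')) {
        count *= static_cast<std::size_t>(std::stoul(field));
    }
    return count;
}

// Hypothetical helper: size in bytes of one raw float input file
// holding a tensor with those dimensions.
std::size_t rawFileBytes(const std::string& dims) {
    return elementCount(dims) * sizeof(float);
}
```

For `1,64,64,1` this gives 4096 elements per input, so a file whose size is not 4096 floats probably does not match the model.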

4 Next, call the model from Android Studio

Starting from the following three files in the SNPE SDK you downloaded, build your own .so file and call it through JNI

\snpe-1.25.0.287\examples\NativeCpp\SampleCode\jni\main.cpp

\snpe-1.25.0.287\examples\NativeCpp\SampleCode\jni\Android.mk

\snpe-1.25.0.287\examples\NativeCpp\SampleCode\jni\Application.mk

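Next comes calling the .so file you built

Note: your own C++ interface file must be compiled into the same .so as the files under 1.25.0.287\examples\NativeCpp\SampleCode\jni; otherwise it cannot be called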

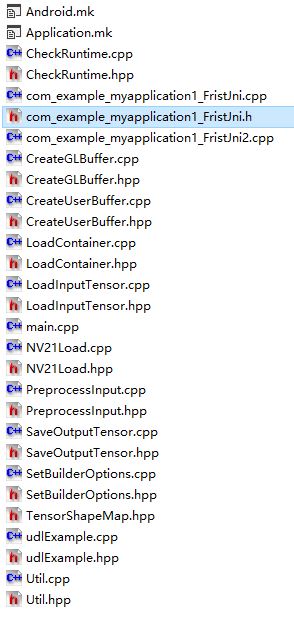

C++ interface files for calling the dlc model

**com_example_myapplication1_FristJni.cpp**

**com_example_myapplication1_FristJni.h**
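The file names follow the JNI naming rule: a native method is resolved by a symbol built from the package, class, and method name. The sketch below builds that symbol for illustration. `jniSymbolName` is a hypothetical helper, and it only handles the `.` and `_` cases, leaving out the other JNI escapes.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds the native symbol name JNI looks up for a
// method of the given fully qualified class. Only '.' and '_' are handled.
std::string jniSymbolName(const std::string& className, const std::string& method) {
    assert(!className.empty() && !method.empty());
    auto mangle = [](const std::string& s) {
        std::string out;
        for (char c : s) {
            if (c == '.') out += '_';        // package separator becomes '_'
            else if (c == '_') out += "_1";  // a literal underscore is escaped
            else out += c;
        }
        return out;
    };
    return "Java_" + mangle(className) + "_" + mangle(method);
}
```

Calling `jniSymbolName("com.example.myapplication1.FristJni", "InitRes")` yields `Java_com_example_myapplication1_FristJni_InitRes`, the function name used in the code below. If the Java package or class is renamed, the C++ function names have to change with it.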

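5 com_example_myapplication1_FristJni.cpp is organized into three parts

Part 1: Load the images

Initialization function: creates twelve SNPE objects and loads 18 images so that they can run on different cores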

JNIEXPORT void JNICALL Java_com_example_myapplication1_FristJni_InitRes

(JNIEnv *env,jclass thiz)

{

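// Path to the dlc model (on the phone)

static std::string dlc="/sdcard/inception/cropped/frozen_graph_new_quantized.dlc";

// File listing the image paths, one per line (xxx/xxxx/xxx/a.jpg)

const char* inputfile="/sdcard/inception/cropped/jpeg_list.txt";

// There are three buffer modes; I chose ITENSOR because I could not get the others to work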

std::string bufferTypeStr="ITENSOR";

std::string userBufferSourceStr="CPUBUFFER";

bool usingInitCaching=false;

//bool execStatus=false;

enum {UNKNOWN, USERBUFFER_FLOAT, USERBUFFER_TF8, ITENSOR};

enum {CPUBUFFER, GLBUFFER};

// Check that the dlc model file can be opened

std::ifstream dlcFile(dlc);

// Open the image list file

std::ifstream inputList(inputfile);

if(!dlcFile||!inputList){

LOGI("input file or dlc is not valid, please make sure both exist");

}

int bufferType;

if(bufferTypeStr == "USERBUFFER_FLOAT")

{

bufferType = USERBUFFER_FLOAT; //1

}

else if(bufferTypeStr == "USERBUFFER_TF8")

{

bufferType = USERBUFFER_TF8; //2

}

else if(bufferTypeStr=="ITENSOR")

{

bufferType = ITENSOR; //3

}

else{

LOGI("buffer is not valid");

}

int userBufferSourceType;

// CPUBUFFER / GLBUFFER only apply to USERBUFFER_FLOAT

if (bufferType == USERBUFFER_FLOAT) // 1==1

{

if( userBufferSourceStr == "CPUBUFFER" )

{

userBufferSourceType = CPUBUFFER; //0

}

else if( userBufferSourceStr == "GLBUFFER" )

{

#ifndef ANDROID

std::cout << "GLBUFFER mode only support android os" << std::endl;

#endif

userBufferSourceType = GLBUFFER; //1

}

else

{

std::cout<< "Source of user buffer type is not valid"<< std::endl;

}

}

//setup udl

zdl::DlSystem::UDLFactoryFunc udlFunc = sample::MyUDLFactory;

zdl::DlSystem::UDLBundle udlBundle;udlBundle.cookie=(void*)0xdeadbeaf,udlBundle.func=udlFunc;

// Load the dlc model into a container

std::unique_ptr<zdl::DlContainer::IDlContainer> container=loadContainerFromFile(dlc);

if(container==nullptr){

LOGI("fail open the contain file");

}

bool useUserSuppliedBuffers=(bufferType==USERBUFFER_FLOAT||bufferType==USERBUFFER_TF8);

zdl::DlSystem::PlatformConfig platformConfig;

// Load the image paths into a vector

std::vector<std::vector<std::string>> inputs = preprocessInput(inputfile,1);

// Struct that records each image's number and its path

for(size_t i=0;i }
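The worker function in the next part pulls one image at a time through `get_pic`, and notes that this needs a lock. Below is a minimal sketch of such a shared queue. The names `ItemRepository`, `flag`, `pic_str`, `list_pic`, and `get_pic` come from this post; the field types, `pic_mtx`, and `fill_queue` are assumptions made for illustration.

```cpp
#include <cassert>
#include <list>
#include <mutex>
#include <string>
#include <vector>

// One unit of work: the image number and the path(s) of its input file(s).
struct ItemRepository {
    int flag;
    std::vector<std::string> pic_str;
};

std::list<ItemRepository> list_pic;  // shared work queue
std::mutex pic_mtx;                  // hypothetical name for the guarding mutex

// Hypothetical helper: numbers the inputs from 1 and queues them.
void fill_queue(const std::vector<std::vector<std::string>>& inputs) {
    std::lock_guard<std::mutex> lock(pic_mtx);
    int number = 1;
    for (const auto& paths : inputs) {
        assert(!paths.empty());
        ItemRepository item;
        item.flag = number++;
        item.pic_str = paths;
        list_pic.push_back(item);
    }
}

// Pops the next item under the lock; returns false once the queue is empty.
bool get_pic(ItemRepository& out) {
    std::lock_guard<std::mutex> lock(pic_mtx);
    if (list_pic.empty()) return false;
    out = list_pic.front();
    list_pic.pop_front();
    return true;
}
```

Numbering starts at 1 because the result-printing code in part 3 treats a `flag` of 0 as an empty entry.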

Part 2: Get the classification results

void output_res(int index1)

{

struct timeval beginTime = {0,0};

struct timeval endTime = {0,0};

zdl::DlSystem::TensorShape tensorShape;

//dimens

//tensorShape=snpe->getInputDimensions();

// Get the model's input dimensions

tensorShape=total_Snpe[index1]->getInputDimensions();

/*

if(total_Snpe[index1]){

LOGI("snpe %d no problem",index1);

}

*/

//batch size

// Number of images per batch

batchSize = tensorShape.getDimensions()[0];

//LOGI("batchsize is %d",batchSize);

if(1)

{

zdl::DlSystem::TensorMap outputTensorMap_in;

//LOGI("index %d",index1);

ItemRepository singleItemRepository;

// if (get_pic(resgItemRepository1))

//resgItemRepository1=list_pic.front();

//{LOGI("pic path %s",(resgItemRepository1.pic_str)[0].c_str());}

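//LOGI("problem with the result...0");

// If the queue is not empty, take one image from it. Note: with multiple threads this needs a lock so that accesses to the shared data stay consistent.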

while(get_pic(singleItemRepository)){

//std::unique_ptr inputTensor=loadInputTensor(snpe,resgItemRepository1.pic_str);

#if 1

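//std::unique_ptr

// Convert one image into a tensor. The helper call stays commented out because the code below loads the tensor by hand.

//inputTensor=loadInputTensor(total_Snpe[index1],singleItemRepository.pic_str);

std::vector<std::string> vec_pic = singleItemRepository.pic_str;

std::unique_ptr<zdl::DlSystem::ITensor> inputTensor;

const auto &strList_opt = total_Snpe[index1]->getInputTensorNames();

const auto &strList=*strList_opt;

std::vector<float> inputVec;

for(size_t i=0;i<vec_pic.size();i++){

std::string filePath(vec_pic[i]);

std::vector<float> loadedFile;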

std::ifstream in(filePath,std::ifstream::binary);

in.seekg(0,in.end);

size_t length=in.tellg();

in.seekg(0,in.beg);

if (loadedFile.size()==0)

{

loadedFile.resize(length/sizeof(float));

}

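else if(loadedFile.size()<length/sizeof(float))

{

loadedFile.resize(length/sizeof(float));

}

if(!in.read(reinterpret_cast<char*>(&loadedFile[0]),length))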

{

LOGI("failed to read");

}

inputVec.insert(inputVec.end(),loadedFile.begin(),loadedFile.end());

}

const auto &inputDims_opt = total_Snpe[index1]->getInputDimensions(strList.at(0));

const auto &inputShape = *inputDims_opt;

inputTensor = zdl::SNPE::SNPEFactory::getTensorFactory().createTensor(inputShape);

std::copy(inputVec.begin(),inputVec.end(),inputTensor->begin());

// Sequence number of this image

int num=singleItemRepository.flag;

//string a = ((list_pic.front()).pic_str)[0].c_str();

// Execute the input tensor on the model with SNPE

//gettimeofday(&beginTime, NULL);

//bool execStatus = snpe->execute(inputTensor.get(), outputTensorMap_in);

// Run inference

bool execStatus = total_Snpe[index1]->execute(inputTensor.get(), outputTensorMap_in);

//gettimeofday(&endTime, NULL);

//long long tm_begin = beginTime.tv_sec*1000+beginTime.tv_usec/1000;

//long long tm_end = endTime.tv_sec*1000+endTime.tv_usec/1000;

//LOGI("image %d, file %s, cost:%lldms",num,(singleItemRepository.pic_str)[0].c_str(),tm_end-tm_begin);

#if 1

// 52 classes: scan the scores to find the highest one for each image and save it

zdl::DlSystem::StringList tensorNames = outputTensorMap_in.getTensorNames();

//print pic and score

std::for_each(tensorNames.begin(),tensorNames.end(),[&](const char* name)

{

for(size_t i=0;i<1;i++){

auto tensorPtr=outputTensorMap_in.getTensor(name);

size_t batchChunk=tensorPtr->getSize()/batchSize;

int j=1;

float MAX_NUM=0.0;

int MAX_LOCATE=0;

for(auto it=tensorPtr->cbegin()+i*batchChunk;it!=tensorPtr->cbegin()+(i+1)*batchChunk;++it)

{

float f=*it;

if(f>=MAX_NUM){

MAX_NUM=f;

MAX_LOCATE=j;

}

j++;

}

//LOGI("image %d, max position:%d, score:%f",pic_num+1,MAX_LOCATE,MAX_NUM);

//LOGI("image %d, max position:%d, score:%f",num,MAX_LOCATE,MAX_NUM);

ResItemRepository resgItemRepository;

resgItemRepository.flag=num;

resgItemRepository.location=MAX_LOCATE;

resgItemRepository.score=MAX_NUM;

// Take the lock before storing the result

std::unique_lock<std::mutex> lock1(end_mtx);

list_res.push_back(resgItemRepository);

lock1.unlock();

}

});

#endif

#endif

}

}

}

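The inner loop of `output_res` is an argmax over the 52 class scores that reports a 1-based position. A standalone sketch of that step follows. `TopResult` and `topScore` are hypothetical names. Unlike the loop above, this version seeds the maximum from the first score instead of 0.0, so it still works if every score is negative, and ties resolve to the earliest position.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type: 1-based class position and its score.
struct TopResult {
    int location;
    float score;
};

// Returns the position (counted from 1) and value of the highest score.
TopResult topScore(const std::vector<float>& scores) {
    assert(!scores.empty());
    TopResult best{1, scores[0]};
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > best.score) {
            best.location = static_cast<int>(i) + 1;
            best.score = scores[i];
        }
    }
    return best;
}
```

With an SNPE output tensor, the scores would first be copied out of the tensor's iterator range for one batch element before calling a helper like this.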

Part 3: Start the workers and collect the top-scoring results

JNIEXPORT jstring JNICALL Java_com_example_myapplication1_FristJni_TestRes

(JNIEnv *env, jclass thiz){

time_cost("main",0);

// Start twelve threads; each one pulls images and runs them on the SNPE object for its core

#if 1

thread tc(output_res,0);

thread tc2(output_res,1);

thread tc3(output_res,2);

thread tc4(output_res,3);

//thread tc5(output_res,move(cpu5_snpe));

thread tg(output_res,4);

thread tg2(output_res,5);

thread tg3(output_res,6);

thread tg4(output_res,7);

//thread tg5(output_res,move(gpu5_snpe));

thread td(output_res,8);

thread td2(output_res,9);

thread td3(output_res,10);

thread td4(output_res,11);

//thread td5(output_res,move(dsp5_snpe));

tc.join();

tc2.join();

tc3.join();

tc4.join();

//tc5.join();

tg.join();

tg2.join();

tg3.join();

tg4.join();

//tg5.join();

td.join();

td2.join();

td3.join();

td4.join();

//td5.join();

#endif

time_cost("main",1);

#if 1

// Sort the results by image number

list_res.sort();

std::list<ResItemRepository>::iterator plist;

LOGI("----------------------------------------result");

LOGI("----------------------------------------list_len:%zu",list_res.size());

// Print the result for each image

for(plist=list_res.begin();plist!=list_res.end();plist++)

{

if(plist->flag){

int pic_label=plist->flag;

//zdl::DlSystem::TensorMap outputTensorMap=plist->OTM;

int location=plist->location;

float score=plist->score;

LOGI("The %d pic ,max_locate:%d ,score:%f",pic_label,location,score);

}

else{

LOGI("list_content is None...");

}

}

// Finally, clear the results held in the global list so that the next click starts from an empty list

list_res.clear();

#endif

// Placeholder return value for testing

return env->NewStringUTF("DO YOUR SELF");

}
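The twelve hand-written `thread` variables and `join()` calls can be collapsed into a loop, and `list_res.sort()` only compiles if the result type defines an ordering. The sketch below shows both points under stated assumptions: the `ResItemRepository` fields follow this post, but `operator<`, `fake_worker` (a stand-in for `output_res` that does no inference), and `run_workers` are hypothetical.

```cpp
#include <cassert>
#include <list>
#include <mutex>
#include <thread>
#include <vector>

struct ResItemRepository {
    int flag;      // image number
    int location;  // 1-based position of the best class
    float score;   // score of that class
    // std::list::sort() uses operator<, so order results by image number.
    bool operator<(const ResItemRepository& other) const {
        return flag < other.flag;
    }
};

std::list<ResItemRepository> list_res;  // shared result list
std::mutex end_mtx;                     // guards list_res

// Stand-in for output_res: records one dummy result per worker.
void fake_worker(int index) {
    ResItemRepository result{index + 1, 0, 0.0f};
    std::lock_guard<std::mutex> lock(end_mtx);
    list_res.push_back(result);
}

// Launches `count` workers, waits for all of them, then sorts the results.
void run_workers(int count) {
    assert(count > 0);
    std::vector<std::thread> workers;
    for (int i = 0; i < count; ++i) {
        workers.emplace_back(fake_worker, i);
    }
    for (auto& worker : workers) {
        worker.join();
    }
    list_res.sort();
}
```

Calling `run_workers(12)` mirrors the twelve threads above. Because the threads finish in an arbitrary order, the sort is what puts the results back in image order.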