Wine Type Recognition Based on the Random Forest Algorithm

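Contents

- A few words first

- 1. Random forest algorithm principles

- 1.1 Building the decision tree (CART algorithm)

- 1.2 Gini index

- 1.3 Building the random forest

- 2. Dataset source

- 3. Code implementation (core code)

- 3.1 Random forest function

- 3.2 Decision tree generation function

- 3.3 Decision tree decision function

- 4. System analysis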

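A few words first

This post grew out of a lab report: on a whim I turned this semester's computational intelligence defense project into a blog post, so I have finally done what my teacher said blogging is for. The source code is adapted from https://blog.csdn.net/cyberliferk800/article/details/90549795

Unfortunately that author's code would not run for me (no offense meant: it may be a MATLAB version issue, or the sampling may really be wrong; if you happen to read this, let's discuss it calmly). After working through it and debugging, I rewrote the whole logic, and the final accuracy turned out pleasantly high.

Predicting the wine type here only serves the "practical relevance" my teacher asked for and has little research value in itself, so I will focus on the random forest algorithm. On to the main topic.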

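1. Random forest algorithm principles

1.1 Building the decision tree (CART algorithm)

The CART algorithm consists of two steps:

Tree generation: grow a decision tree from the training set, making the tree as large as possible;

Tree pruning: prune the grown tree on a validation set and select the best subtree, using minimum loss as the pruning criterion.

Generating a CART tree means recursively building a binary decision tree. CART can be used for both classification and regression; this post only covers classification. For a classification tree, CART selects features by minimizing the Gini index and produces a binary tree.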

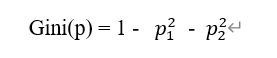

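1.2 Gini index

Once a tree is built, the Gini index is used to judge whether it is a good tree.

The Gini index measures the impurity of the model: the smaller the Gini index, the lower the impurity and the better the feature.

Suppose there are K classes and the k-th class has probability p_k. The Gini index of the probability distribution is:

$$\mathrm{Gini}(p)=\sum_{k=1}^{K}p_k(1-p_k)=1-\sum_{k=1}^{K}p_k^{2}$$

Since the wine data in this post has only two classes, the Gini index becomes: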

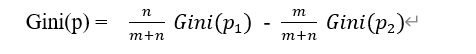
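As a quick sanity check on the definition, here is a minimal sketch that evaluates the general K-class form and the standard two-class simplification 2p(1-p) on the same distribution. The helper name `gini_of` and the 30%/70% split are made up for illustration; this is not the `gini_self` function used in the code later on, which is not shown in this post.

```matlab
% Gini index of a discrete class distribution p (entries sum to 1).
% gini_of is a hypothetical helper name, not part of the article's code.
gini_of = @(p) 1 - sum(p.^2);

% Hypothetical two-class example: 30% class 1, 70% class 0.
p1 = 0.3;
g_general  = gini_of([p1, 1 - p1]);   % general K-class form with K = 2
g_twoclass = 2 * p1 * (1 - p1);       % standard two-class simplification

fprintf('general: %.4f, two-class: %.4f\n', g_general, g_twoclass);
```

Both expressions give the same value, and the value shrinks toward 0 as one class comes to dominate.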

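We also need the best split point. A split point leaves samples on both sides, with n samples on the left and the rest on the right, so the Gini index of a split is the sum of the Gini indices of the two sides, each weighted by its share of the samples.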
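To make the split search concrete, here is a rough sketch on one feature. It assumes the usual CART weighting, (n*Gini(left) + m*Gini(right)) / (n + m), where m for the right-hand count is my own symbol because the original one did not survive. The toy data and the names `gini_labels`, `candidates` and `best_threshold` are hypothetical, and this is not the `generate_node` function called later, which is not shown in this post.

```matlab
% Hypothetical toy data: one feature column x and binary labels y (0/1).
x = [1.0; 1.5; 2.0; 3.0; 3.5; 4.0];
y = [0;   0;   0;   1;   1;   1  ];

% Two-class Gini index of a non-empty 0/1 label vector.
gini_labels = @(lab) 2 * mean(lab) * (1 - mean(lab));

% Candidate thresholds: midpoints between consecutive distinct values,
% so both sides of every candidate split are non-empty.
v = unique(x);
candidates = (v(1:end-1) + v(2:end)) / 2;

best_gini = inf;
best_threshold = NaN;
for t = candidates'
    left  = y(x <  t);            % samples going to the left child
    right = y(x >= t);            % samples going to the right child
    n = numel(left);
    m = numel(right);
    % Weighted Gini index of this split.
    g = (n * gini_labels(left) + m * gini_labels(right)) / (n + m);
    if g < best_gini
        best_gini = g;
        best_threshold = t;
    end
end

fprintf('best threshold: %.2f, weighted Gini: %.4f\n', best_threshold, best_gini);
```

The `x < t` convention for the left side matches the `path < boundary` comparison used by the decision function in section 3.3.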

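1.3 Building the random forest

A decision tree is like an expert that classifies new data using what it has learned from the dataset. A random forest is built on two kinds of randomness: random selection of the data and random selection of the candidate features.

1. Random selection of the data: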

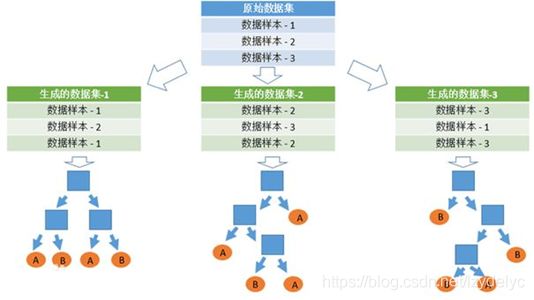

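First, sample with replacement from the original dataset to build sub-datasets, each the same size as the original. Elements may repeat across different sub-datasets and also within a single sub-dataset. Second, build one sub-tree from each sub-dataset; a data point given to the forest is passed to every sub-tree, and each sub-tree outputs a result. Finally, when new data needs to be classified, the forest's output is obtained by voting over the sub-trees' results. As in the figure, suppose the forest has 3 sub-trees: if 2 of them output class A and 1 outputs class B, the forest outputs class A.

Figure 2-3: Random selection of data from a dataset with 3 samples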
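The two ideas in this step, sampling with replacement and majority voting, can be sketched in a few lines. The toy matrix and the three votes below are made up for illustration; the real sampling in this post happens inside `select_sample_decision`, which is not shown.

```matlab
% Hypothetical toy dataset: 6 samples, 2 features, last column is the 0/1 label.
data = [1.0 0.2 0;
        1.5 0.1 0;
        2.0 0.4 0;
        3.0 0.9 1;
        3.5 0.8 1;
        4.0 0.7 1];
n = size(data, 1);

% Bootstrap: draw n row indices with replacement, so rows may repeat
% and the sub-dataset has the same size as the original.
idx = randi(n, n, 1);
subset = data(idx, :);

% Majority vote over the 0/1 outputs of three hypothetical sub-trees.
% A tie would fall to class 0, as in the type1>type0 test of random_forest below.
votes = [1 1 0];
forest_label = double(sum(votes == 1) > sum(votes == 0));

fprintf('forest output: %d\n', forest_label);
```

With two votes for class 1 and one for class 0 the forest outputs 1, which mirrors the A/A/B example above.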

2. Random selection of the candidate features

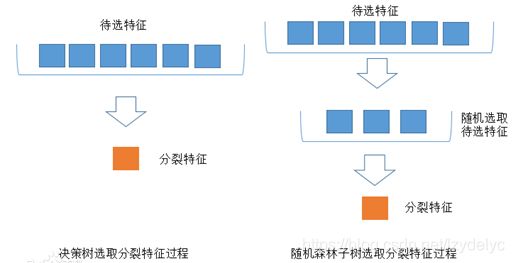

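As with the random selection of data, each split in a sub-tree of the forest does not use all candidate features. Instead, a certain number of features are drawn at random from all candidates, and the best feature among those drawn is used for the split. This makes the trees in the forest differ from one another, which increases the diversity of the system and improves classification performance.

In the figure, the blue squares are all the features that can be selected, that is, the current candidate features. The orange squares are the splitting features. On the left is the feature selection of a single decision tree, which picks the best splitting feature among the candidates (this post uses the CART algorithm) and splits on it. On the right is the feature selection of a sub-tree in a random forest.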
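Drawing the random feature subset can be sketched as follows. The feature count of 10 and the random matrix are placeholders I made up; `decision_select` reuses the parameter name from the code below, but this is only an illustration and not the post's own `select_sample_decision`.

```matlab
num_features    = 10;   % hypothetical number of candidate features
decision_select = 4;    % how many features to draw for the tree

% randperm(n, k) returns k distinct integers from 1..n,
% i.e. the features are sampled without replacement.
chosen = sort(randperm(num_features, decision_select));

% Hypothetical data: 8 samples, last column stands in for the class label.
data = rand(8, num_features + 1);

% Keep only the chosen feature columns plus the label column.
data_sub = data(:, [chosen, num_features + 1]);

fprintf('chosen features: %s\n', mat2str(chosen));
```

The best split is then searched only among the columns of `data_sub`, which is what makes the trees differ from one another.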

2. Dataset source

3. Code implementation (core code)

3.1 Random forest function

% Random forest with trees_num trees in total

function result=random_forest(sample,trees_num,data,sample_select,decision_select,sample_limit)

type1=0;

type0=0;

conclusion=zeros(1,trees_num);

% Overwrite the last row of data with the custom sample; later this will be the value passed in from the GUI

data(size(data,1),:) = sample;

for i=1:trees_num

[path,boundary,~,result]=decision_tree(data,sample_select,decision_select,sample_limit);

conclusion(i)=decide(path,boundary,result);

if conclusion(i)==1

type1=type1+1;

else

type0=type0+1;

end

end

if type1>type0

result=1;

else

result=0;

end

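3.2 Decision tree generation function

% Build a decision tree. Inputs: raw data, number of samples to draw, number of decision attributes to draw, pre-pruning sample limit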

function [path,boundary,gini,result]=decision_tree(data,sample_select,decision_select,sample_limit)

score=100;

flag=0;

temp=inf;

%data(size(data,1),:)=sample;

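% Score from the evaluation function

while(score>(sample_select*0.3)) % keep going until a good tree is found

%% conclusion3_0 and conclusion3_1: if classification stops at layer 3, make sure the counts of 0s and 1s differ

conclusion3_0=0;

conclusion3_1=0;

% conclusion4_0 and conclusion4_1: when there is more than one leaf node, compare which of the two counts is larger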

conclusion4_0=0;

conclusion4_1=0;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% separator %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

data_new=select_sample_decision(data,sample_select,decision_select);

% Compute the initial Gini index

gini_now=gini_self(data_new);

% Main routine

layer=1; % current layer of the decision tree

leaf_sample=zeros(1,sample_select); % number of samples in each leaf

leaf_gini=zeros(1,sample_select); % Gini index of each leaf node

leaf_num=0; % number of leaves

path=zeros(decision_select,2^(decision_select-1)); % initialize the path

gini=ones(decision_select,2^(decision_select-1)); % initialize the Gini values

boundary=zeros(decision_select,2^(decision_select-1)); % initialize the split boundaries

result=ones(decision_select,2^(decision_select-1)); % initialize the results

path(:)=inf;

gini(:)=inf;

boundary(:)=inf;

result(1:4,1:8)=inf;

% Layer 1

[decision_global_best,boundary_global_best,data_new1,gini_now1,data_new2,gini_now2,~]=generate_node(data_new);

path(layer,1)=data_new(size(data_new,1),decision_global_best);

boundary(layer,1)=boundary_global_best;

gini(layer,1)=gini_now;

layer=layer+1;

gini(layer,1)=gini_now1;

gini(layer,2)=gini_now2;

% Layer 2

%%%%%%%%%%%%%%%%%%%%%%%%% layer 2, node 1 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if ((size(data_new1,1)-1)>=sample_limit)&&(gini(layer,1)>0)

[decision_global_best,boundary_global_best,data_new1_1,gini_now1_1,data_new1_2,gini_now1_2,~]=generate_node(data_new1);

path(layer,1)=data_new1(size(data_new1,1),decision_global_best);

boundary(layer,1)=boundary_global_best;

layer=layer+1;

gini(layer,1)=gini_now1_1;

gini(layer,2)=gini_now1_2;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 1 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if (size(data_new1_1,1)-1)>=sample_limit&&(gini(layer,1)>0)

for i=1:size(data_new1_1,1)

if(data_new1_1(i,end)==1)

conclusion3_1=conclusion3_1+1;

else

conclusion3_0=conclusion3_0+1;

end

end

[decision_global_best,boundary_global_best,data_new1_1_1,gini_now1_1_1,data_new1_1_2,gini_now1_1_2,~]=generate_node(data_new1_1);

path(layer,1)=data_new1_1(size(data_new1_1,1),decision_global_best);

boundary(layer,1)=boundary_global_best;

layer=layer+1;

gini(layer,1)=gini_now1_1_1;

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_1_1,1)

if(data_new1_1_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,1)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_1_1;

leaf_sample(leaf_num)=size(data_new1_1_1,1)-1;

gini(layer,2)=gini_now1_1_2;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 4, node 2 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_1_2,1)

if(data_new1_1_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,2)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_1_2;

leaf_sample(leaf_num)=size(data_new1_1_2,1)-1;

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 1, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_1,1)

if(data_new1_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,1)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_1;

leaf_sample(leaf_num)=size(data_new1_1,1)-1;

path(layer,1)=nan;

boundary(layer,1)=nan;

gini(layer+1,1:2)=nan;

end

layer=3;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 2 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if (size(data_new1_2,1)-1)>=sample_limit&&(gini(layer,2)>0)

for i=1:size(data_new1_2,1)

if(data_new1_2(i,end)==1)

conclusion3_1=conclusion3_1+1;

else

conclusion3_0=conclusion3_0+1;

end

end

[decision_global_best,boundary_global_best,data_new1_2_1,gini_now1_2_1,data_new1_2_2,gini_now1_2_2,~]=generate_node(data_new1_2);

path(layer,2)=data_new1_2(size(data_new1_2,1),decision_global_best);

boundary(layer,2)=boundary_global_best;

layer=layer+1;

gini(layer,3)=gini_now1_2_1;

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_2_1,1)

if(data_new1_2_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,3)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_2_1;

leaf_sample(leaf_num)=size(data_new1_2_1,1)-1;

gini(layer,4)=gini_now1_2_2;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 4, node 4 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_2_2,1)

if(data_new1_2_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,4)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_2_2;

leaf_sample(leaf_num)=size(data_new1_2_2,1)-1;

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 2, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new1_2,1)

if(data_new1_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,2)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1_2;

leaf_sample(leaf_num)=size(data_new1_2,1)-1;

path(layer,2)=nan;

boundary(layer,2)=nan;

gini(layer+1,3:4)=nan;

end

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 2, node 1, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new1,1)

if(data_new1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,1)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now1;

leaf_sample(leaf_num)=size(data_new1,1)-1;

path(layer,1)=nan;

boundary(layer,1)=nan;

layer=layer+1;

gini(layer,1:2)=nan;

% Layer 3

path(layer,1:2)=nan;

boundary(layer,1:2)=nan;

% Gini of the layer-4 leaves

layer=layer+1;

gini(layer,1:4)=nan;

end

layer=2;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 2, node 2 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if (size(data_new2,1)-1)>=sample_limit&&(gini(layer,2)>0)

[decision_global_best,boundary_global_best,data_new2_1,gini_now2_1,data_new2_2,gini_now2_2,~]=generate_node(data_new2);

path(layer,2)=data_new2(size(data_new2,1),decision_global_best);

boundary(layer,2)=boundary_global_best;

layer=layer+1;

gini(layer,3)=gini_now2_1;

gini(layer,4)=gini_now2_2;

% Layer 3

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 3 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if (size(data_new2_1,1)-1)>=sample_limit&&(gini(layer,3)>0)

for i=1:size(data_new2_1,1)

if(data_new2_1(i,end)==1)

conclusion3_1=conclusion3_1+1;

else

conclusion3_0=conclusion3_0+1;

end

end

[decision_global_best,boundary_global_best,data_new2_1_1,gini_now2_1_1,data_new2_1_2,gini_now2_1_2,~]=generate_node(data_new2_1);

path(layer,3)=data_new2_1(size(data_new2_1,1),decision_global_best);

boundary(layer,3)=boundary_global_best;

layer=layer+1;

gini(layer,5)=gini_now2_1_1;

%test

temp1=0;

temp2=0;

for i=1:size(data_new2_1_1,1)

if(data_new2_1_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,5)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_1_1;

leaf_sample(leaf_num)=size(data_new2_1_1,1)-1;

gini(layer,6)=gini_now2_1_2;

%test

temp1=0;

temp2=0;

for i=1:size(data_new2_1_2,1)

if(data_new2_1_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,6)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_1_2;

leaf_sample(leaf_num)=size(data_new2_1_2,1)-1;

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 3, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

temp1=0;

temp2=0;

for i=1:size(data_new2_1,1)

if(data_new2_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,3)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_1;

leaf_sample(leaf_num)=size(data_new2_1,1)-1;

path(layer,3)=nan;

boundary(layer,3)=nan;

gini(layer+1,5:6)=nan;

end

layer=3;

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 4 %%%%%%%%%%%%%%%%%%%%%%%%%%%%

if (size(data_new2_2,1)-1)>=sample_limit&&(gini(layer,4)>0)

for i=1:size(data_new2_2,1)

if(data_new2_2(i,end)==1)

conclusion3_1=conclusion3_1+1;

else

conclusion3_0=conclusion3_0+1;

end

end

[decision_global_best,boundary_global_best,data_new2_2_1,gini_now2_2_1,data_new2_2_2,gini_now2_2_2,~]=generate_node(data_new2_2);

path(layer,4)=data_new2_2(size(data_new2_2,1),decision_global_best);

boundary(layer,4)=boundary_global_best;

layer=layer+1;

gini(layer,7)=gini_now2_2_1;

% %test

temp1=0;

temp2=0;

for i=1:size(data_new2_2_1,1)

if(data_new2_2_1(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,7)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_2_1;

leaf_sample(leaf_num)=size(data_new2_2_1,1)-1;

gini(layer,8)=gini_now2_2_2;

%test

temp1=0;

temp2=0;

for i=1:size(data_new2_2_2,1)

if(data_new2_2_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,8)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_2_2;

leaf_sample(leaf_num)=size(data_new2_2_2,1)-1;

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 3, node 4, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new2_2,1)

if(data_new2_2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,4)=temp;

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2_2;

leaf_sample(leaf_num)=size(data_new2_2,1)-1;

path(layer,4)=nan;

boundary(layer,4)=nan;

gini(layer+1,7:8)=nan;

end

else

%%%%%%%%%%%%%%%%%%%%%%%%% layer 2, node 2, else %%%%%%%%%%%%%%%%%%%%%%%%%%%%

%test

temp1=0;

temp2=0;

for i=1:size(data_new2,1)

if(data_new2(i,end)==1)

temp1=temp1+1;

else

temp2=temp2+1;

end

end

if(temp1>temp2)

temp=1;

conclusion4_1=conclusion4_1+1;

elseif temp1<temp2

temp=0;

conclusion4_0=conclusion4_0+1;

else

flag=1;

end

result(layer,2)=temp; % node 2 of layer 2: decide() reads result(2,2) for this branch

leaf_num=leaf_num+1;

leaf_gini(leaf_num)=gini_now2;

leaf_sample(leaf_num)=size(data_new2,1)-1;

path(layer,2)=nan;

boundary(layer,2)=nan;

layer=layer+1;

gini(layer,3:4)=nan;

% Layer 3

path(layer,3:4)=nan;

boundary(layer,3:4)=nan;

% Gini of the layer-4 leaves

layer=layer+1;

gini(layer,5:8)=nan;

end

if flag==1||conclusion4_1==conclusion4_0||(conclusion3_0==conclusion3_1&&conclusion4_1==0&&conclusion4_0==0)

score=100;

else

score=evaluation(leaf_num,leaf_sample,leaf_gini);

end

flag=0;

result(2,:)=nan;

end
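The function above repeats the same "count the 1s and 0s, then pick the majority" block for every node. As a rough sketch of that pattern on its own, the loop below labels a few made-up label columns. The names `leaves` and `labels` are hypothetical, and a tie is marked with NaN here, whereas the function above sets `flag=1` so that the tree is regenerated.

```matlab
% Hypothetical label columns of three nodes (the last column of each data block).
leaves = {[1; 1; 0], [0; 0; 1; 0], [1; 0]};

labels = nan(1, numel(leaves));   % NaN marks a tie
for k = 1:numel(leaves)
    ones_count  = sum(leaves{k} == 1);   % same role as temp1 above
    zeros_count = sum(leaves{k} ~= 1);   % same role as temp2 above
    if ones_count > zeros_count
        labels(k) = 1;
    elseif ones_count < zeros_count
        labels(k) = 0;
    end
end

disp(labels);
```

Pulling this block into one helper would shrink the function considerably and would rule out copy-paste slips between the near-identical branches.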

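3.3 Decision tree decision function

% Sample decision function: takes the sample and the decision tree, returns the classification result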

function conclusion=decide(path,boundary,result)

%

%disp(sample(path(1,1)));

%disp(boundary(1,1));

%sample

conclusion0=0;

conclusion1=0;

% Whether layer 4 was reached

flag=0;

if path(1,1)<boundary(1,1)

if result(2,1)==0||result(2,1)==1

conclusion=result(2,1);

else

%sample

if path(2,1)<boundary(2,1)

if result(3,1)==0||result(3,1)==1

conclusion=result(3,1);

else

%sample

if path(3,1)<boundary(3,1)

if result(4,1)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

else

if result(4,2)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

end

end

else

if result(3,2)==0||result(3,2)==1

conclusion=result(3,2);

else

%sample

if path(3,2)<boundary(3,2)

if result(4,3)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

else

if result(4,4)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

end

end

end

end

else

if result(2,2)==0||result(2,2)==1

conclusion=result(2,2);

else

%sample

if path(2,2)<boundary(2,2)

if result(3,3)==0||result(3,3)==1

conclusion=result(3,3);

else

%sample

if path(3,3)<boundary(3,3)

if result(4,5)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

else

if result(4,6)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

end

end

else

if result(3,4)==0||result(3,4)==1

conclusion=result(3,4);

else

%sample

if path(3,4)<boundary(3,4)

if result(4,7)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

else

if result(4,8)==1

conclusion1=conclusion1+1;

else

conclusion0=conclusion0+1;

end

flag=1;

end

end

end

end

end

if flag==1

if conclusion1>conclusion0

conclusion=1;

else

conclusion=0;

end

end
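Reading the branches above, the three matrices seem to use a heap-like layout: the children of node (layer, col) sit at (layer+1, 2*col-1) and (layer+1, 2*col). Under that assumption the nested ifs can be sketched as a short loop. The tree values below are made up for illustration, and unlike the function above this sketch returns NaN when no 0/1 leaf is found instead of defaulting to 0.

```matlab
% Hypothetical 4-layer tree in the same layout as path/boundary/result above.
% inf marks "no leaf here", as in the initialization of decision_tree.
path     = inf(4, 8);
boundary = inf(4, 8);
result   = inf(4, 8);

path(1,1) = 12.5;  boundary(1,1) = 13.0;   % root: 12.5 < 13.0, go left
path(2,1) = 2.1;   boundary(2,1) = 1.8;    % layer 2: 2.1 >= 1.8, go right
result(3,2) = 0;                           % leaf at layer 3, column 2

col = 1;
conclusion = NaN;
for layer = 1:size(path, 1) - 1
    % The left child has column 2*col-1, the right child has column 2*col.
    if path(layer, col) < boundary(layer, col)
        col = 2 * col - 1;
    else
        col = 2 * col;
    end
    leaf = result(layer + 1, col);
    if leaf == 0 || leaf == 1               % reached a labeled leaf
        conclusion = leaf;
        break;
    end
end

fprintf('conclusion: %d\n', conclusion);
```

Written this way the traversal has a single index formula, so the hard-coded column numbers of the nested version no longer have to be kept in sync by hand.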

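I will put the code for the whole system in a separate post; help yourself if you need it, and please be kind if it is not to your taste.

4. System analysis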

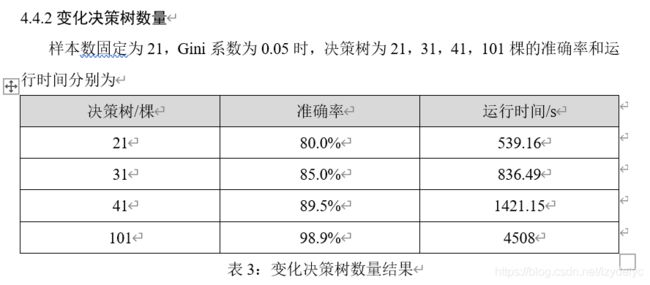

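When I ran this self-comparison there were still a few small bugs, so the accuracies are generally 3%-4% too low. In other words, accuracy can still be fairly high even when the variables are not well defined, which also shows the strength of random forests: "the performance optimization happens to improve the model's accuracy as well, and that kind of result is rare."

I am too tired today and need to rest. When I have time I will redo the comparison and share it; for now here is the old one to glance at (a screenshot from my course paper, a bit ugly, so view it casually).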