Digit Reconstruction with a Convolutional Neural Network: Unet MATLAB Code

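Two years ago, while writing a paper, I posted about reconstructing digits with a neural network in MATLAB. I wrote it as notes for my own later review, so I did not expect so many readers to message me privately asking for the Unet code. I had overlooked that when writing the original post, so this post is dedicated to sharing the code. *As far as I remember, the code was downloaded from the official MATLAB forum; it is not my original work.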

Code

Without further ado, here is the code.

function lgraph = createUnet_regression()
    % EDIT: modify these parameters for your application.
    % encoderDepth sets the depth of the symmetric network: N encoder stages
    % followed by N decoder stages.
    % Mind the input image size: every max-pooling layer halves it
    % (32 -> 16 -> 8 -> 4 ...), so it should be divisible by 2^encoderDepth.
    encoderDepth = 4;
    initialEncoderNumChannels = 32;
    %inputTileSize = [256 256 1];
    %inputTileSize = [128 128 1];
    % Input image size
    inputTileSize = [32 32 1];
    convFilterSize = [3 3];
    %inputNumChannels = 3;
    inputNumChannels = 1;

    inputlayer = imageInputLayer(inputTileSize,'Name','ImageInputLayer');

    [encoder, finalNumChannels] = iCreateEncoder(encoderDepth, convFilterSize, initialEncoderNumChannels, inputNumChannels);

    % Bridge (bottleneck) between the encoder and the decoder
    firstConv = createAndInitializeConvLayer(convFilterSize, finalNumChannels, ...
        2*finalNumChannels, 'Bridge-Conv-1');
    firstReLU = reluLayer('Name','Bridge-ReLU-1');
    secondConv = createAndInitializeConvLayer(convFilterSize, 2*finalNumChannels, ...
        2*finalNumChannels, 'Bridge-Conv-2');
    secondReLU = reluLayer('Name','Bridge-ReLU-2');
    encoderDecoderBridge = [firstConv; firstReLU; secondConv; secondReLU];
    dropOutLayer = dropoutLayer(0.5,'Name','Bridge-DropOut');
    encoderDecoderBridge = [encoderDecoderBridge; dropOutLayer];

    initialDecoderNumChannels = finalNumChannels;
    upConvFilterSize = 2;
    [decoder, finalDecoderNumChannels] = iCreateDecoder(encoderDepth, upConvFilterSize, convFilterSize, initialDecoderNumChannels);

    layers = [inputlayer; encoder; encoderDecoderBridge; decoder];

    % 1x1 convolution that maps the decoder output to a single channel
    finalConv = convolution2dLayer(1,1,...
        'BiasL2Factor',0,...
        'Name','Final-ConvolutionLayer');
    %finalConv.Weights = randn(1,1,finalDecoderNumChannels,initialEncoderNumChannels);
    %finalConv.Bias = zeros(1,1,initialEncoderNumChannels);
    %smLayer = softmaxLayer('Name','Softmax-Layer');
    %pixelClassLayer = pixelClassificationLayer('Name','Segmentation-Layer');
    %layers = [layers; finalConv; smLayer; pixelClassLayer];

    % Regression output instead of the segmentation layers commented out above
    finalregressionLayer = regressionLayer('Name','Reg.Layer');
    layers = [layers; finalConv; finalregressionLayer];

    lgraph = layerGraph(layers);

    % Skip connections: encoder stage k feeds the second input of the
    % depth concatenation in decoder stage (encoderDepth - k + 1).
    for depth = 1:encoderDepth
        startLayer = sprintf('Encoder-Stage-%d-ReLU-2',depth);
        endLayer = sprintf('Decoder-Stage-%d-DepthConcatenation/in2',encoderDepth-depth + 1);
        lgraph = connectLayers(lgraph,startLayer, endLayer);
    end
end

%--------------------------------------------------------------------------
function [encoder, finalNumChannels] = iCreateEncoder(encoderDepth, convFilterSize, initialEncoderNumChannels, inputNumChannels)
    encoder = [];
    for stage = 1:encoderDepth
        % Double the number of channels at each stage of the encoder.
        encoderNumChannels = initialEncoderNumChannels * 2^(stage-1);

        if stage == 1
            firstConv = createAndInitializeConvLayer(convFilterSize, inputNumChannels, encoderNumChannels, ['Encoder-Stage-' num2str(stage) '-Conv-1']);
        else
            firstConv = createAndInitializeConvLayer(convFilterSize, encoderNumChannels/2, encoderNumChannels, ['Encoder-Stage-' num2str(stage) '-Conv-1']);
        end
        firstReLU = reluLayer('Name',['Encoder-Stage-' num2str(stage) '-ReLU-1']);

        secondConv = createAndInitializeConvLayer(convFilterSize, encoderNumChannels, encoderNumChannels, ['Encoder-Stage-' num2str(stage) '-Conv-2']);
        secondReLU = reluLayer('Name',['Encoder-Stage-' num2str(stage) '-ReLU-2']);

        encoder = [encoder; firstConv; firstReLU; secondConv; secondReLU];

        % Dropout is applied only in the last encoder stage.
        if stage == encoderDepth
            dropOutLayer = dropoutLayer(0.5,'Name',['Encoder-Stage-' num2str(stage) '-DropOut']);
            encoder = [encoder; dropOutLayer];
        end

        maxPoolLayer = maxPooling2dLayer(2, 'Stride', 2, 'Name',['Encoder-Stage-' num2str(stage) '-MaxPool']);
        encoder = [encoder; maxPoolLayer];
    end
    finalNumChannels = encoderNumChannels;
end

%--------------------------------------------------------------------------
function [decoder, finalDecoderNumChannels] = iCreateDecoder(encoderDepth, upConvFilterSize, convFilterSize, initialDecoderNumChannels)
    decoder = [];
    for stage = 1:encoderDepth
        % Halve the number of channels at each stage of the decoder.
        decoderNumChannels = initialDecoderNumChannels / 2^(stage-1);

        upConv = createAndInitializeUpConvLayer(upConvFilterSize, 2*decoderNumChannels, decoderNumChannels, ['Decoder-Stage-' num2str(stage) '-UpConv']);
        upReLU = reluLayer('Name',['Decoder-Stage-' num2str(stage) '-UpReLU']);

        % Input feature channels are concatenated with deconvolved features within the decoder.
        depthConcatLayer = depthConcatenationLayer(2, 'Name', ['Decoder-Stage-' num2str(stage) '-DepthConcatenation']);

        firstConv = createAndInitializeConvLayer(convFilterSize, 2*decoderNumChannels, decoderNumChannels, ['Decoder-Stage-' num2str(stage) '-Conv-1']);
        firstReLU = reluLayer('Name',['Decoder-Stage-' num2str(stage) '-ReLU-1']);

        secondConv = createAndInitializeConvLayer(convFilterSize, decoderNumChannels, decoderNumChannels, ['Decoder-Stage-' num2str(stage) '-Conv-2']);
        secondReLU = reluLayer('Name',['Decoder-Stage-' num2str(stage) '-ReLU-2']);

        decoder = [decoder; upConv; upReLU; depthConcatLayer; firstConv; firstReLU; secondConv; secondReLU];
    end
    finalDecoderNumChannels = decoderNumChannels;
end

%--------------------------------------------------------------------------
function convLayer = createAndInitializeConvLayer(convFilterSize, inputNumChannels, outputNumChannels, layerName)
    convLayer = convolution2dLayer(convFilterSize,outputNumChannels,...
        'Padding', 'same',...
        'BiasL2Factor',0,...
        'Name',layerName);

    % He initialization is used
    convLayer.Weights = sqrt(2/((convFilterSize(1)*convFilterSize(2))*inputNumChannels)) ...
        * randn(convFilterSize(1),convFilterSize(2), inputNumChannels, outputNumChannels);

    convLayer.Bias = zeros(1,1,outputNumChannels);
    convLayer.BiasLearnRateFactor = 2;
end

%--------------------------------------------------------------------------
function upConvLayer = createAndInitializeUpConvLayer(UpconvFilterSize, inputNumChannels, outputNumChannels, layerName)
    upConvLayer = transposedConv2dLayer(UpconvFilterSize, outputNumChannels,...
        'Stride',2,...
        'BiasL2Factor',0,...
        'Name',layerName);

    % The transposed conv filter size is a scalar
    upConvLayer.Weights = sqrt(2/((UpconvFilterSize^2)*inputNumChannels)) ...
        * randn(UpconvFilterSize,UpconvFilterSize,outputNumChannels,inputNumChannels);

    upConvLayer.Bias = zeros(1,1,outputNumChannels);
    upConvLayer.BiasLearnRateFactor = 2;
end
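
The comment near the top of the listing warns that max pooling keeps halving the image. The bookkeeping behind that warning can be checked by hand. The short script below is only a sketch for doing so: it uses plain arithmetic (no toolbox calls), its variable names are my own, and the values are copied from the defaults in createUnet_regression.

```matlab
% Sketch: trace spatial size and channel count through the encoder.
% Variable names are illustrative; values mirror the defaults in the listing.
inputSize    = 32;   % side length of the square input tile
encoderDepth = 4;    % number of 2x2, stride-2 max-pooling steps
initialCh    = 32;   % initialEncoderNumChannels

% Each pooling step halves the size and each up-convolution doubles it, so
% the decoder maps only match the encoder maps at the concatenation layers
% when the input size is a multiple of 2^encoderDepth.
assert(mod(inputSize, 2^encoderDepth) == 0, ...
    'Input size must be a multiple of 2^encoderDepth.');

stageSize      = inputSize ./ 2.^(0:encoderDepth-1);  % size inside each encoder stage
stageChannels  = initialCh .* 2.^(0:encoderDepth-1);  % channels in each encoder stage
bridgeSize     = inputSize / 2^encoderDepth;          % size after the last pooling
bridgeChannels = 2 * stageChannels(end);              % the bridge doubles the channels once more

for s = 1:encoderDepth
    fprintf('Encoder stage %d: %dx%d, %d channels\n', ...
        s, stageSize(s), stageSize(s), stageChannels(s));
end
fprintf('Bridge: %dx%d, %d channels\n', bridgeSize, bridgeSize, bridgeChannels);
```

With a 32x32 input and a depth of 4, the bridge therefore works on 2x2 feature maps. A larger tile size or a smaller encoderDepth leaves more spatial detail at the bottleneck.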

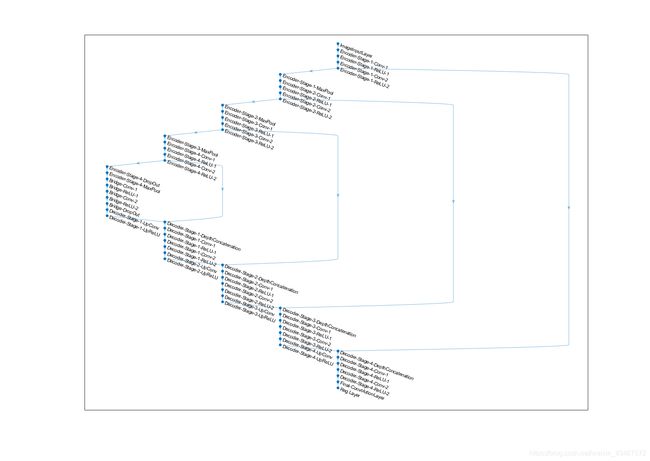

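Attached is a diagram of the generated Unet structure. A few words of explanation follow, which I hope will lower the learning cost for beginners.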
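
The post does not say how the diagram was produced. One plausible way to get a similar view is sketched below, assuming Deep Learning Toolbox is installed and the listing is saved as createUnet_regression.m on the MATLAB path.

```matlab
% Sketch: build the layer graph and inspect it.
% Assumes createUnet_regression.m (the listing above) is on the MATLAB path.
lgraph = createUnet_regression();

% Static plot of the graph, including the encoder-to-decoder skip connections.
figure;
plot(lgraph);
title('Unet for regression');

% Interactive analysis: lists every layer with its activation size and
% reports size mismatches before any training is attempted.
analyzeNetwork(lgraph);
```

analyzeNetwork is handy after changing encoderDepth or inputTileSize, because a mismatch at one of the depth concatenation layers shows up there as an error.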

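Unet was originally designed for image segmentation. To use it for image reconstruction, the final output layer has to be replaced with a regression layer, which is also where the function name comes from. The createUnet_regression_withoutConnection_LeakyReLU() mentioned in my earlier post is a variant with the encoder-to-decoder connections removed and LeakyReLU as the activation function. Removing those connections is not complicated. Note that in the code above they are created by the connectLayers loop at the end of createUnet_regression, together with the depthConcatenationLayer in each decoder stage. encoderDecoderBridge is the bottleneck between the encoder and the decoder, and the decoder's channel counts depend on it. If you want to experiment, comment out the loop and the concatenation layers, and reduce the input channel count of each decoder stage's first convolution from 2*decoderNumChannels to decoderNumChannels.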
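
To make the regression setup and the activation swap concrete, here is a minimal sketch. It is not the author's createUnet_regression_withoutConnection_LeakyReLU(): it keeps the skip connections, the leaky scale of 0.01 is an arbitrary choice, and the training data is random placeholder data. It assumes a MATLAB release in which trainNetwork and regressionLayer are available.

```matlab
% Sketch: swap ReLU for leaky ReLU, then train the regression Unet on
% placeholder data. Assumes createUnet_regression.m is on the MATLAB path.
lgraph = createUnet_regression();

% Replace every ReLU by a leaky ReLU with the same name, so the existing
% connections (including the skip connections) are kept.
layers = lgraph.Layers;
for k = 1:numel(layers)
    if isa(layers(k), 'nnet.cnn.layer.ReLULayer')
        leaky  = leakyReluLayer(0.01, 'Name', layers(k).Name);  % scale is an assumption
        lgraph = replaceLayer(lgraph, layers(k).Name, leaky);
    end
end

% Placeholder data (hypothetical): clean targets Y and noisy inputs X,
% both 32x32x1xN as required by inputTileSize = [32 32 1].
numSamples = 64;
Y = rand(32, 32, 1, numSamples, 'single');
X = Y + 0.1 * randn(size(Y), 'single');

% Illustrative training options, not tuned values.
options = trainingOptions('adam', ...
    'MaxEpochs', 2, ...
    'MiniBatchSize', 16, ...
    'InitialLearnRate', 1e-3, ...
    'Verbose', false);

% With a regression output layer, the responses are images of the same
% size as the network output.
net  = trainNetwork(X, Y, lgraph, options);
Yhat = predict(net, X(:, :, :, 1:4));   % reconstructions for four inputs
```

For real use, X and Y would be replaced by your own input and target images, and the training options would need tuning for the data at hand.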

Finally, I hope everyone will experiment, think, discuss, and share more. If you have any questions, feel free to leave a comment.