[Object Detection] | Re-parameterization: ACNet

The key idea: treat parameters and structure separately in model design.

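In conventional methods, the parameters learned in training are exactly the parameters used at inference. This paper instead uses a re-parameterized network. Through an equivalent transformation of the parameters, the larger model used for training is converted into a smaller one for inference.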

ACNet (short for Asymmetric Convolution Net) is a new CNN architecture presented at ICCV 2019.

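ACNet aims to obtain better feature representations. What sets it apart from other methods is that it introduces no extra hyperparameters and adds no computation at inference time, which makes it very attractive.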

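It does this with a new convolution block (the Asymmetric Convolution Block, ACB) that can replace the regular convolution layers in a convolutional network.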

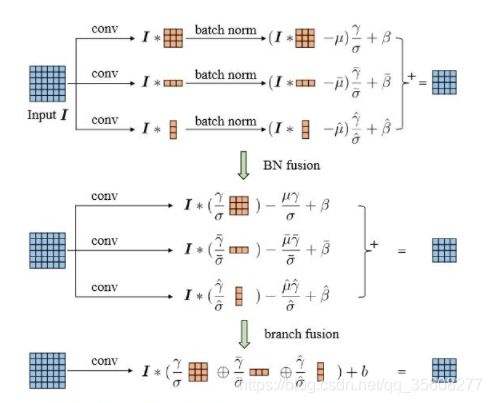

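Principle: the idea is actually very simple. Suppose you add 1x3 and 3x1 convolution layers alongside a 3x3 convolution, run the 3x3, 1x3 and 3x1 convolutions in parallel on the same input, and sum the three outputs. Mathematically, this is equivalent to first adding the 1x3 and 3x1 kernels onto the 3x3 kernel to obtain a new kernel, and then convolving the same input with this new kernel. You can picture this as nailing a cross-shaped patch onto the middle of the 3x3 kernel, which is the part we call the kernel skeleton.

Because the 1x3 and 3x1 kernels are data-independent, this conversion can be done in a single step once training is finished. Each branch's BatchNorm, which at inference is a fixed per-channel affine transform, is first folded into that branch's kernel and bias. From then on, the deployed model contains no 1x3 or 3x1 layers at all, only the 3x3 ones. In other words, the final model has exactly the same structure as a model trained with 3x3 convolutions only, and therefore exactly the same runtime cost. The equivalence principle and the post-training conversion are shown in the figure below. Note that this equivalence only holds at inference time. During training the training dynamics are different, and that is what yields the better performance.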

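For an input feature map I, there are two ways to combine two kernels K(1) and K(2). You can convolve I with K(1) and with K(2) separately and then add the results. Or you can first add K(1) and K(2) element-wise and then convolve I with the sum. Both give the same output, that is, conv(I, K(1)) + conv(I, K(2)) = conv(I, K(1) + K(2)). When the kernels have different shapes, such as 3x3 and 1x3, the smaller kernel is first zero-padded to 3x3 at the matching position. This makes both kernels slide over the same windows.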
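A quick numerical check of this additivity is given below. It is a sketch using PyTorch's `torch.nn.functional.conv2d`. The tensor shapes and the random 1x3 kernel are arbitrary choices for illustration. The 1x3 kernel is embedded in the middle row of an otherwise zero 3x3 kernel. Convolving separately and summing should then match convolving once with the summed kernel. Double precision is used so that rounding does not blur the comparison.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
dtype = torch.float64

I = torch.randn(1, 2, 8, 8, dtype=dtype)        # input: batch 1, 2 channels, 8x8
K1 = torch.randn(4, 2, 3, 3, dtype=dtype)       # 3x3 kernel, 4 output channels
K2_small = torch.randn(4, 2, 1, 3, dtype=dtype) # horizontal 1x3 kernel

# Zero-pad the 1x3 kernel to 3x3 by placing it in the middle row (the "skeleton")
K2 = torch.zeros_like(K1)
K2[:, :, 1:2, :] = K2_small

# Left-hand side: convolve with each kernel separately, then add.
# Padding (0, 1) keeps the 1x3 output aligned with the padded 3x3 output.
separate = F.conv2d(I, K1, padding=1) + F.conv2d(I, K2_small, padding=(0, 1))

# Right-hand side: add the kernels first, then convolve once
merged = F.conv2d(I, K1 + K2, padding=1)

same = torch.allclose(separate, merged)
print(same)  # prints True
```

The same argument applies to the vertical 3x1 kernel, which goes into the middle column instead of the middle row.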

[Figure: equivalence principle and the post-training conversion]

```python
import torch
import torch.nn as nn


# Removes the rows or columns that are surplus because of the 3x3 convolution's padding,
# so that the 3x1 / 1x3 branch outputs line up with the 3x3 branch output
class CropLayer(nn.Module):

    # E.g., (-1, 0) means this layer should crop the first and last rows of the feature map. And (0, -1) crops the first and last columns
    def __init__(self, crop_set):
        super().__init__()
        self.rows_to_crop = -crop_set[0]
        self.cols_to_crop = -crop_set[1]
        assert self.rows_to_crop >= 0
        assert self.cols_to_crop >= 0

    def forward(self, input):
        # Explicit end indices: a slice such as 0:-0 would be empty when nothing is cropped
        h, w = input.shape[-2:]
        return input[:, :, self.rows_to_crop:h - self.rows_to_crop,
                     self.cols_to_crop:w - self.cols_to_crop]


# The 3x3 + 1x3 + 3x1 block proposed in the paper.
# The asymmetric branches are hardcoded to 3x1 and 1x3, so this block assumes kernel_size=3.
class ACBlock(nn.Module):

    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1,
                 padding_mode='zeros', deploy=False):
        super().__init__()
        self.deploy = deploy
        if deploy:
            # Inference: one square conv holding the fused weights and bias of all three branches
            self.fused_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                        kernel_size=(kernel_size, kernel_size), stride=stride,
                                        padding=padding, dilation=dilation, groups=groups, bias=True,
                                        padding_mode=padding_mode)
        else:
            # Training: square branch (conv + BN)
            self.square_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                         kernel_size=(kernel_size, kernel_size), stride=stride,
                                         padding=padding, dilation=dilation, groups=groups, bias=False,
                                         padding_mode=padding_mode)
            self.square_bn = nn.BatchNorm2d(num_features=out_channels)

            # Padding minus the kernel's half-width: 0 for "same" padding, negative when the
            # square conv is under-padded, in which case the asymmetric branches crop instead of pad
            center_offset_from_origin_border = padding - kernel_size // 2
            ver_pad_or_crop = (center_offset_from_origin_border + 1, center_offset_from_origin_border)
            hor_pad_or_crop = (center_offset_from_origin_border, center_offset_from_origin_border + 1)

            if center_offset_from_origin_border >= 0:
                self.ver_conv_crop_layer = nn.Identity()
                ver_conv_padding = ver_pad_or_crop
                self.hor_conv_crop_layer = nn.Identity()
                hor_conv_padding = hor_pad_or_crop
            else:
                self.ver_conv_crop_layer = CropLayer(crop_set=ver_pad_or_crop)
                ver_conv_padding = (0, 0)
                self.hor_conv_crop_layer = CropLayer(crop_set=hor_pad_or_crop)
                hor_conv_padding = (0, 0)

            # Asymmetric branches: vertical 3x1 and horizontal 1x3 convs, each with its own BN
            self.ver_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=(3, 1),
                                      stride=stride, padding=ver_conv_padding, dilation=dilation,
                                      groups=groups, bias=False, padding_mode=padding_mode)
            self.hor_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=(1, 3),
                                      stride=stride, padding=hor_conv_padding, dilation=dilation,
                                      groups=groups, bias=False, padding_mode=padding_mode)
            self.ver_bn = nn.BatchNorm2d(num_features=out_channels)
            self.hor_bn = nn.BatchNorm2d(num_features=out_channels)

    # Forward pass
    def forward(self, input):
        if self.deploy:
            return self.fused_conv(input)
        # Training: run the three branches in parallel on the same input and sum their outputs
        square_outputs = self.square_bn(self.square_conv(input))
        vertical_outputs = self.ver_bn(self.ver_conv(self.ver_conv_crop_layer(input)))
        horizontal_outputs = self.hor_bn(self.hor_conv(self.hor_conv_crop_layer(input)))
        return square_outputs + vertical_outputs + horizontal_outputs
```
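The code above does not show how `fused_conv` gets its weights in deploy mode. Below is a minimal sketch of that conversion. It assumes `kernel_size=3`, `padding=1` (so no cropping is involved) and `groups=1`, with every BatchNorm in eval mode. The helper names `fuse_bn` and `fuse_acb` are hypothetical and not taken from the ACNet repository. The sketch follows the principle described earlier. First, each BN is folded into its branch's kernel and bias. Then the 3x1 and 1x3 kernels are added onto the skeleton of the 3x3 kernel, and the biases are summed. The demo uses standalone convs whose paddings match what `ACBlock` computes for `padding=1`. It compares the three-branch output with a single fused convolution.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fuse_bn(kernel, bn):
    # Fold an eval-mode BatchNorm2d into the preceding bias-free conv:
    # BN(conv(x, K)) = conv(x, K * gamma / std) + (beta - mean * gamma / std)
    std = torch.sqrt(bn.running_var + bn.eps)
    scale = bn.weight / std
    return kernel * scale.reshape(-1, 1, 1, 1), bn.bias - bn.running_mean * scale


@torch.no_grad()
def fuse_acb(square_conv, square_bn, ver_conv, ver_bn, hor_conv, hor_bn):
    # Assumes kernel_size=3, padding=1, groups=1: the 3x1 kernel lands in the
    # middle column and the 1x3 kernel in the middle row of the 3x3 kernel
    k_sq, b_sq = fuse_bn(square_conv.weight, square_bn)
    k_ver, b_ver = fuse_bn(ver_conv.weight, ver_bn)   # shape (out, in, 3, 1)
    k_hor, b_hor = fuse_bn(hor_conv.weight, hor_bn)   # shape (out, in, 1, 3)
    k = k_sq.clone()
    k[:, :, :, 1:2] += k_ver
    k[:, :, 1:2, :] += k_hor
    return k, b_sq + b_ver + b_hor


# Demo with a hypothetical random three-branch setup
torch.manual_seed(0)
c_in, c_out = 4, 8
square_conv = nn.Conv2d(c_in, c_out, (3, 3), padding=(1, 1), bias=False)
ver_conv = nn.Conv2d(c_in, c_out, (3, 1), padding=(1, 0), bias=False)
hor_conv = nn.Conv2d(c_in, c_out, (1, 3), padding=(0, 1), bias=False)
bns = [nn.BatchNorm2d(c_out) for _ in range(3)]
for bn in bns:
    # Non-trivial BN parameters/statistics so the check actually exercises the folding
    nn.init.uniform_(bn.weight, 0.5, 1.5)
    nn.init.uniform_(bn.bias, -0.5, 0.5)
    bn.running_mean.uniform_(-1.0, 1.0)
    bn.running_var.uniform_(0.5, 2.0)
    bn.eval()  # inference mode: BN uses its running statistics

x = torch.randn(2, c_in, 10, 10)
with torch.no_grad():
    y_branches = bns[0](square_conv(x)) + bns[1](ver_conv(x)) + bns[2](hor_conv(x))
    w, b = fuse_acb(square_conv, bns[0], ver_conv, bns[1], hor_conv, bns[2])
    y_fused = F.conv2d(x, w, b, padding=1)

max_err = (y_branches - y_fused).abs().max().item()
print(f"max abs difference: {max_err:.2e}")
```

In an `ACBlock` built with `deploy=True`, the resulting `w` and `b` could be copied into `fused_conv.weight` and `fused_conv.bias`. The equivalence requires BN in eval mode. In training mode BN uses batch statistics, which is one reason the two forms behave differently during training.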

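ACNet also improves the model's robustness to image flips and rotations.

The reasoning for flips goes as follows. A horizontal kernel such as 1x3 only looks along a single row, and an up-down flip only reorders the rows. On a flipped image, a horizontal kernel therefore produces exactly the flipped version of its original response. Introducing horizontal 1x3 kernels thus improves robustness to up-down flips. Vertical 3x1 kernels do the same for left-right flips.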
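This intuition can be checked directly for a single layer. The sketch below uses arbitrary shapes and random kernels, and `flip_ud` is a hypothetical helper. It compares two results: convolving an up-down-flipped input, and flipping the output of the original convolution. For a 1x3 kernel the two agree exactly. For a generic 3x3 kernel they do not. This only illustrates the single-kernel property, not the robustness results of the full network.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
dtype = torch.float64

x = torch.randn(1, 3, 9, 9, dtype=dtype)
k_hor = torch.randn(8, 3, 1, 3, dtype=dtype)  # horizontal 1x3 kernel
k_sq = torch.randn(8, 3, 3, 3, dtype=dtype)   # ordinary 3x3 kernel


def flip_ud(t):
    # Up-down flip: reverse the height dimension of an NCHW tensor
    return torch.flip(t, dims=[2])


# 1x3 kernel: response to the flipped image == flipped response to the original
y_hor = F.conv2d(x, k_hor, padding=(0, 1))
hor_equivariant = torch.allclose(F.conv2d(flip_ud(x), k_hor, padding=(0, 1)), flip_ud(y_hor))

# A generic 3x3 kernel mixes neighbouring rows, so the flip changes what it sees
y_sq = F.conv2d(x, k_sq, padding=1)
sq_equivariant = torch.allclose(F.conv2d(flip_ud(x), k_sq, padding=1), flip_ud(y_sq))

print(hor_equivariant, sq_equivariant)  # prints True False
```

Swapping `dims=[2]` for `dims=[3]` and the 1x3 kernel for a 3x1 kernel gives the analogous check for left-right flips.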

ref

https://zhuanlan.zhihu.com/p/93966695

https://zhuanlan.zhihu.com/p/104114196