Selecting camera matching points (based on a vertical pole)

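The previous post walked through Hough line detection. Once the detected line segments have been fitted to a single line, what is that line good for?

My idea: fitting gives the equation of the line, so I can compute the distance from each matched point in the stereo image pair to that line and use it to pick the best matching points. (This is specific to what I need; if you are not selecting points by their distance to a line, the approach does not apply.)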

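Initial idea

The code below takes the feature points extracted from the left image, computes the distance from each of them to the fitted line, and sorts the distances in ascending order. From the sorted list, the first and the eighth values are selected as the special matching points for the steps that follow. (This happens to dovetail with the earlier project.)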
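The ranking step described above can be tried on its own. The following is only a minimal sketch, not the code used later in this post: `rankByDistance` is a hypothetical helper name, and it assumes the distances to the fitted line have already been computed, one per feature point. It sorts indices rather than the distances themselves, so each rank points straight back at the corresponding match.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Returns the indices of the feature points ordered by ascending distance.
// dist[i] is assumed to hold the distance of the i-th point to the fitted line.
std::vector<std::size_t> rankByDistance(const std::vector<float>& dist) {
    std::vector<std::size_t> order(dist.size());
    std::iota(order.begin(), order.end(), std::size_t{0}); // 0, 1, 2, ...
    std::stable_sort(order.begin(), order.end(),
        [&dist](std::size_t a, std::size_t b) { return dist[a] < dist[b]; });
    return order;
}
```

With `order = rankByDistance(dist)`, `order[0]` and `order[7]` (when at least eight points exist) would be the indices of the two matches to keep.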

The code is as follows:

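#include <iostream>
#include <vector>
#include <cmath>
#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/xfeatures2d.hpp>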

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

float K = 7.23106;  // slope of the fitted line y = K * x + B

float B = -8556.64; // intercept of the fitted line

int main()

{

    // Create the detector
    //cv::Ptr<Feature2D> detector = SiftFeatureDetector::create(); // SIFT
    Ptr<Feature2D> detector = SurfFeatureDetector::create(100); // SURF detector, Hessian threshold 100; SIFT or ORB also work
    //Ptr<DescriptorExtractor> descriptor_extractor = DescriptorExtractor::create("SURF"); // descriptor extractor (OpenCV 2 style)
    Ptr<DescriptorMatcher> descriptor_matcher = DescriptorMatcher::create("BruteForce"); // brute-force feature matcher
    if (detector.empty())
        cout << "fail to create detector!";

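    // Read the images as grayscale
    Mat img1 = imread("左相机123.bmp", 0);
    Mat img2 = imread("右相机123.bmp", 0);
    if (img1.empty() || img2.empty())
    {
        cout << "fail to read images!" << endl;
        return -1;
    }

    // Detect the keypoints
    vector<KeyPoint> m_LeftKey, m_RightKey;
    detector->detect(img1, m_LeftKey); // SURF keypoints of img1, stored in m_LeftKey
    detector->detect(img2, m_RightKey);
    cout << "Keypoints in image 1: " << m_LeftKey.size() << endl;
    cout << "Keypoints in image 2: " << m_RightKey.size() << endl;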

    // Compute the descriptor matrix (one feature vector per row) for the keypoints
    // detected above, so that match indices refer to m_LeftKey / m_RightKey
    cv::Mat dstImage1, dstImage2;
    double t = (double)getTickCount(); // tick count before the computation
    detector->compute(img1, m_LeftKey, dstImage1);
    detector->compute(img2, m_RightKey, dstImage2);
    t = ((double)getTickCount() - t) / getTickFrequency();
    cout << "SURF descriptor time: " << t << " s" << endl;
    cout << "Descriptor matrix of image 1: " << dstImage1.size()
        << ", feature vectors: " << dstImage1.rows << ", dimensions: " << dstImage1.cols << endl;
    cout << "Descriptor matrix of image 2: " << dstImage2.size()
        << ", feature vectors: " << dstImage2.rows << ", dimensions: " << dstImage2.cols << endl;

    // Draw the keypoints
    Mat img_m_LeftKey, img_m_RightKey;
    drawKeypoints(img1, m_LeftKey, img_m_LeftKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    drawKeypoints(img2, m_RightKey, img_m_RightKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    //imshow("Src1",img_m_LeftKey);
    //imshow("Src2",img_m_RightKey);

    // Match the features
    vector<DMatch> matches; // matching result
    descriptor_matcher->match(dstImage1, dstImage2, matches); // match the two descriptor matrices
    cout << "Matches: " << matches.size() << endl;

    // Find the largest and smallest distance among the matches.
    // The distance is the Euclidean distance between two feature vectors;
    // the smaller it is, the more similar the two features are.
    double max_dist = 0;
    double min_dist = 100;
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    cout << "Max distance: " << max_dist << endl;
    cout << "Min distance: " << min_dist << endl;

    // Keep only the better matches
    vector<DMatch> goodMatches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 0.2 * max_dist)
        {
            goodMatches.push_back(matches[i]);
        }
    }
    cout << "Good matches: " << goodMatches.size() << endl;

    // Draw the matching result: matched pairs get random colors,
    // unmatched keypoints are not drawn
    Mat img_matches;
    drawMatches(img1, m_LeftKey, img2, m_RightKey, goodMatches, img_matches,
        Scalar::all(-1), Scalar(0, 255, 0), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("MatchSIFT", img_matches);
    imwrite("E:/项目使用版/合并版/Sift+RANSAC/Sift+RANSAC/MatchSIFT.jpg", img_matches);
    waitKey(0);

    // RANSAC filtering
    vector<DMatch> m_Matches = goodMatches;
    // Allocate space
    int ptCount = (int)m_Matches.size();
    Mat p1(ptCount, 2, CV_32F);
    Mat p2(ptCount, 2, CV_32F);
    // Convert the KeyPoints to Mat
    Point2f pt;
    for (int i = 0; i < ptCount; i++)
    {
        pt = m_LeftKey[m_Matches[i].queryIdx].pt;
        p1.at<float>(i, 0) = pt.x;
        p1.at<float>(i, 1) = pt.y;
        pt = m_RightKey[m_Matches[i].trainIdx].pt;
        p2.at<float>(i, 0) = pt.x;
        p2.at<float>(i, 1) = pt.y;
    }

    // Estimate the fundamental matrix F with RANSAC
    vector<uchar> m_RANSACStatus; // status of every match after RANSAC
    Mat m_Fundamental = findFundamentalMat(p1, p2, m_RANSACStatus, FM_RANSAC);
    // Count the outliers
    int OutlinerCount = 0;
    for (int i = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] == 0) // status 0 marks an outlier
        {
            OutlinerCount++;
        }
    }
    int InlinerCount = ptCount - OutlinerCount; // number of inliers
    cout << "Inliers: " << InlinerCount << endl;

    // These three containers hold the inliers and their matches
    vector<Point2f> m_LeftInlier;
    vector<Point2f> m_RightInlier;
    vector<DMatch> m_InlierMatches;
    m_InlierMatches.resize(InlinerCount);
    m_LeftInlier.resize(InlinerCount);
    m_RightInlier.resize(InlinerCount);
    InlinerCount = 0;
    float inlier_minRx = img1.cols; // smallest x among the right-image inliers, kept for later stitching
    float distance; // reserved for the point-to-line distance
    for (int i = 0, j = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] != 0)
        {
            m_LeftInlier[InlinerCount].x = p1.at<float>(i, 0);
            m_LeftInlier[InlinerCount].y = p1.at<float>(i, 1);
            m_RightInlier[InlinerCount].x = p2.at<float>(i, 0);
            m_RightInlier[InlinerCount].y = p2.at<float>(i, 1);
            m_InlierMatches[InlinerCount].queryIdx = InlinerCount;
            m_InlierMatches[InlinerCount].trainIdx = InlinerCount;
            cout << j << " left image: " << m_LeftInlier[InlinerCount].x << " " << m_LeftInlier[InlinerCount].y << endl;
            cout << j << " right image: " << m_RightInlier[InlinerCount].x << " " << m_RightInlier[InlinerCount].y << endl;
            j++;
            if (m_RightInlier[InlinerCount].x < inlier_minRx) inlier_minRx = m_RightInlier[InlinerCount].x; // track the smallest right-image x
            InlinerCount++;
        }
    }

    // Convert the inliers into the format drawMatches expects
    vector<KeyPoint> key1(InlinerCount);
    vector<KeyPoint> key2(InlinerCount);
    KeyPoint::convert(m_LeftInlier, key1);
    KeyPoint::convert(m_RightInlier, key2);
    // Show the inlier matches that remain after estimating F
    Mat OutImage;
    drawMatches(img1, key1, img2, key2, m_InlierMatches, OutImage);
    namedWindow("Match features", WINDOW_AUTOSIZE);
    imshow("Match features", OutImage);
    waitKey(0);
    return 0;

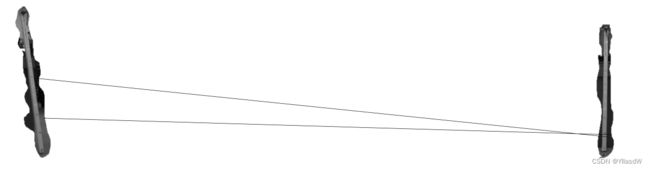

} 挑选的自己想要的点: 看到上面的点就是出现了问题,就是匹配的时候,针对于自己想要的点没有匹配好.

看到上面的点就是出现了问题,就是匹配的时候,针对于自己想要的点没有匹配好.

At this point I modified the vertical-line detection code from the previous post and ran into some problems.

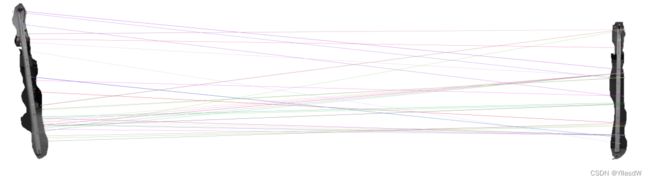

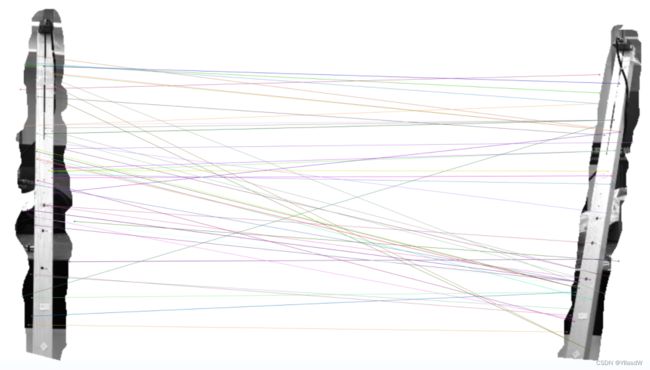

After adjusting the focal length of the camera, I got the matching result below. Compared with before, there are many more matched points.

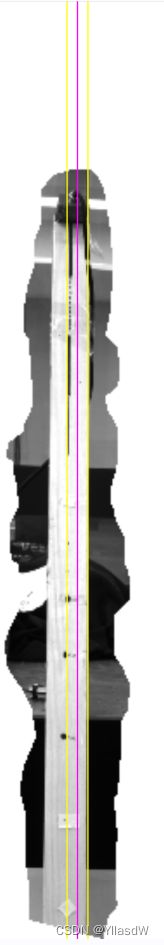

However, the points in the figure two images up are not the ones we want: compared with the line shown below, that result is clearly wrong.

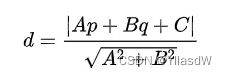

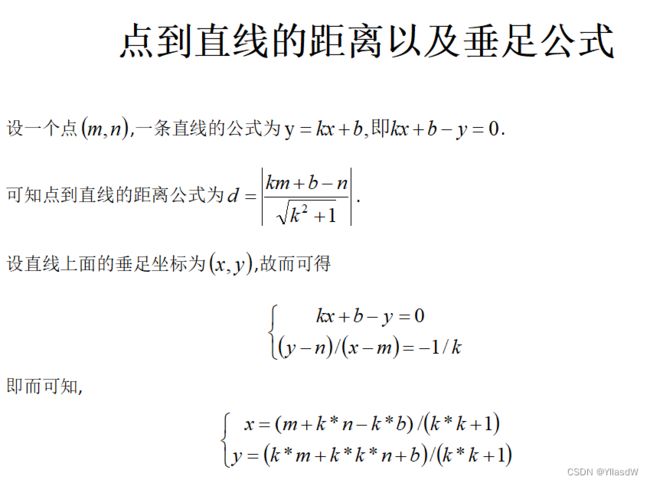

The point-to-line distance formula is as follows:
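Written for the slope-intercept form $y = Kx + B$ that the code in this post works with (the symbols $x_0, y_0$ for the feature point are notation introduced here), the standard formula reads:

$$
d = \frac{\lvert K x_0 + B - y_0 \rvert}{\sqrt{K^2 + 1}}
$$

This is the quantity the program evaluates as abs((K * x + B - y) / sqrt(K * K + 1 * 1)).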

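The distance to the line is used to score the feature points and find the ones that meet my requirement. I improved the algorithm once more so that it handles the vertical and the non-vertical case separately, distinguished by a vertical flag. The corresponding code is as follows: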

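#include <iostream>
#include <vector>
#include <cmath>
#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/xfeatures2d.hpp>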

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

/*
void BubbleSort1(float arr[], int length) { // sort in descending order
    float temp;
    for (int i = 0; i < length - 1; i++) { // outer loop: length - 1 passes
        for (int j = length - 1; j > i; j--) { // each pass bubbles the largest remaining value to position i
            if (arr[j] > arr[j - 1]) {
                temp = arr[j];
                arr[j] = arr[j - 1];
                arr[j - 1] = temp;
            }
        }
    }
}
*/

void BubbleSort1(float arr[], int length) { // sort in ascending order
    float temp;
    for (int i = 0; i < length - 1; i++) {
        for (int j = length - 1; j > i; j--) {
            if (arr[j] < arr[j - 1]) {
                temp = arr[j];
                arr[j] = arr[j - 1];
                arr[j - 1] = temp;
            }
        }
    }
}

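bool vertical = true; // true: the fitted line is vertical, x = B

float K = 10000000;   // slope of y = K * x + B (only a large placeholder when the line is vertical)

float B = 1763;       // intercept, or the x position of the line when it is vertical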

int main()

{

    // Create the detector
    //cv::Ptr<Feature2D> detector = SiftFeatureDetector::create(); // SIFT
    Ptr<Feature2D> detector = SurfFeatureDetector::create(100); // SURF detector, Hessian threshold 100; SIFT or ORB also work
    //Ptr<DescriptorExtractor> descriptor_extractor = DescriptorExtractor::create("SURF"); // descriptor extractor (OpenCV 2 style)
    Ptr<DescriptorMatcher> descriptor_matcher = DescriptorMatcher::create("BruteForce"); // brute-force feature matcher
    if (detector.empty())
        cout << "fail to create detector!";

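    // Read the images as grayscale
    Mat img1 = imread("左相机123.bmp", 0);
    Mat img2 = imread("右相机123.bmp", 0);
    if (img1.empty() || img2.empty())
    {
        cout << "fail to read images!" << endl;
        return -1;
    }
    Mat combine;
    hconcat(img1, img2, combine); // place the two images side by side
    cvtColor(combine, combine, COLOR_GRAY2BGR); // 3-channel canvas so the marker lines are drawn in color

    // Detect the keypoints
    vector<KeyPoint> m_LeftKey, m_RightKey;
    detector->detect(img1, m_LeftKey); // SURF keypoints of img1, stored in m_LeftKey
    detector->detect(img2, m_RightKey);
    cout << "Keypoints in image 1: " << m_LeftKey.size() << endl;
    cout << "Keypoints in image 2: " << m_RightKey.size() << endl;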

    // Compute the descriptor matrix (one feature vector per row) for the keypoints
    // detected above, so that match indices refer to m_LeftKey / m_RightKey
    cv::Mat dstImage1, dstImage2;
    double t = (double)getTickCount(); // tick count before the computation
    detector->compute(img1, m_LeftKey, dstImage1);
    detector->compute(img2, m_RightKey, dstImage2);
    t = ((double)getTickCount() - t) / getTickFrequency();
    cout << "SURF descriptor time: " << t << " s" << endl;
    cout << "Descriptor matrix of image 1: " << dstImage1.size()
        << ", feature vectors: " << dstImage1.rows << ", dimensions: " << dstImage1.cols << endl;
    cout << "Descriptor matrix of image 2: " << dstImage2.size()
        << ", feature vectors: " << dstImage2.rows << ", dimensions: " << dstImage2.cols << endl;

    // Draw the keypoints
    Mat img_m_LeftKey, img_m_RightKey;
    drawKeypoints(img1, m_LeftKey, img_m_LeftKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    drawKeypoints(img2, m_RightKey, img_m_RightKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    //imshow("Src1",img_m_LeftKey);
    //imshow("Src2",img_m_RightKey);

    // Match the features
    vector<DMatch> matches; // matching result
    descriptor_matcher->match(dstImage1, dstImage2, matches); // match the two descriptor matrices
    cout << "Matches: " << matches.size() << endl;

    // Find the largest and smallest distance among the matches.
    // The distance is the Euclidean distance between two feature vectors;
    // the smaller it is, the more similar the two features are.
    double max_dist = 0;
    double min_dist = 300;
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    cout << "Max distance: " << max_dist << endl;
    cout << "Min distance: " << min_dist << endl;

    // Keep only the better matches
    vector<DMatch> goodMatches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 0.2 * max_dist)
        {
            goodMatches.push_back(matches[i]);
        }
    }
    cout << "Good matches: " << goodMatches.size() << endl;

    // Draw the matching result: matched pairs get random colors,
    // unmatched keypoints are not drawn
    Mat img_matches;
    drawMatches(img1, m_LeftKey, img2, m_RightKey, goodMatches, img_matches,
        Scalar::all(-1), Scalar(0, 255, 0), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("MatchSIFT", img_matches);
    imwrite("MatchSIFT.jpg", img_matches);
    waitKey(0);

    // RANSAC filtering
    vector<DMatch> m_Matches = goodMatches;
    // Allocate space
    int ptCount = (int)m_Matches.size();
    Mat p1(ptCount, 2, CV_32F);
    Mat p2(ptCount, 2, CV_32F);
    // Convert the KeyPoints to Mat
    Point2f pt;
    for (int i = 0; i < ptCount; i++)
    {
        pt = m_LeftKey[m_Matches[i].queryIdx].pt;
        p1.at<float>(i, 0) = pt.x;
        p1.at<float>(i, 1) = pt.y;
        pt = m_RightKey[m_Matches[i].trainIdx].pt;
        p2.at<float>(i, 0) = pt.x;
        p2.at<float>(i, 1) = pt.y;
    }

    // Estimate the fundamental matrix F with RANSAC
    vector<uchar> m_RANSACStatus; // status of every match after RANSAC
    Mat m_Fundamental = findFundamentalMat(p1, p2, m_RANSACStatus, FM_RANSAC);
    // Count the outliers
    int OutlinerCount = 0;
    for (int i = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] == 0) // status 0 marks an outlier
        {
            OutlinerCount++;
        }
    }
    int InlinerCount = ptCount - OutlinerCount; // number of inliers
    cout << "Inliers: " << InlinerCount << endl;

    // These three containers hold the inliers and their matches
    vector<Point2f> m_LeftInlier;
    vector<Point2f> m_RightInlier;
    vector<DMatch> m_InlierMatches;
    m_InlierMatches.resize(InlinerCount);
    m_LeftInlier.resize(InlinerCount);
    m_RightInlier.resize(InlinerCount);
    InlinerCount = 0;
    float inlier_minRx = img1.cols; // smallest x among the right-image inliers, kept for later stitching
    float distance = 0; // reserved for the point-to-line distance
    float point[80] = { 0 }; // distances of up to 80 inliers to the line; unused slots stay 0
    for (int i = 0, j = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] != 0)
        {
            m_LeftInlier[InlinerCount].x = p1.at<float>(i, 0);
            m_LeftInlier[InlinerCount].y = p1.at<float>(i, 1);
            m_RightInlier[InlinerCount].x = p2.at<float>(i, 0);
            m_RightInlier[InlinerCount].y = p2.at<float>(i, 1);
            m_InlierMatches[InlinerCount].queryIdx = InlinerCount;
            m_InlierMatches[InlinerCount].trainIdx = InlinerCount;
            cout << j << " left image: " << m_LeftInlier[InlinerCount].x << " " << m_LeftInlier[InlinerCount].y << endl;
            cout << j << " right image: " << m_RightInlier[InlinerCount].x << " " << m_RightInlier[InlinerCount].y << endl;
            if (j < 80)
            {
                cout << "j:" << j << endl;
                if (vertical == false)
                {
                    // distance to the line y = K * x + B
                    point[j] = (float)abs((K * m_LeftInlier[InlinerCount].x + B - m_LeftInlier[InlinerCount].y) / sqrt(K * K + 1 * 1));
                }
                else
                {
                    // distance to the vertical line x = B
                    point[j] = (float)abs((m_LeftInlier[InlinerCount].x - B));
                }
                cout << "point[j]:" << point[j] << endl;
            }
            j++;
            if (m_RightInlier[InlinerCount].x < inlier_minRx) inlier_minRx = m_RightInlier[InlinerCount].x; // track the smallest right-image x
            InlinerCount++;
        }
    }

    // Convert the inliers into the format drawMatches expects
    vector<KeyPoint> key1(InlinerCount);
    vector<KeyPoint> key2(InlinerCount);
    KeyPoint::convert(m_LeftInlier, key1);
    KeyPoint::convert(m_RightInlier, key2);
    // Show the inlier matches that remain after estimating F
    Mat OutImage;
    drawMatches(img1, key1, img2, key2, m_InlierMatches, OutImage);
    imwrite("test1.bmp", OutImage);
    namedWindow("Match features better", WINDOW_AUTOSIZE);
    imshow("Match features better", OutImage);
    waitKey(0);

    BubbleSort1(point, 80); // sort the distances in ascending order
    float pointselect[8] = { 0 }; // the eight smallest usable distances
    int select = 0;
    for (int i = 0; i < 80; i++) // skip the (near-)zero entries and take the eight smallest distances
    {
        if (point[i] > 0.5)
        {
            pointselect[select] = point[i];
            cout << "selected distance: " << pointselect[select] << endl;
            select++;
            if (select == 8)
            {
                break;
            }
        }
    }
    cout << "select:" << select << endl;

    /****************************************************************************************************/
    // Next, pick the matches with the smallest and the largest of the selected distances.
    // m_LeftInlier / m_RightInlier were filled by the loop above, so they are walked directly.
    for (int k = 0; k < InlinerCount; k++)
    {
        const Point2f& l = m_LeftInlier[k];
        const Point2f& r = m_RightInlier[k];
        // Same expression as in the first pass, so the exact float comparison below still works
        float d = vertical ? (float)abs((l.x - B))
                           : (float)abs((K * l.x + B - l.y) / sqrt(K * K + 1 * 1));
        for (int s = 0; s < 2; s++) // s = 0: smallest distance, s = 1: largest of the eight
        {
            if (d != pointselect[s == 0 ? 0 : 7]) continue;
            cout << k + 1 << " left image, selected point " << s + 1 << ": " << l.x << " " << l.y << endl;
            cout << k + 1 << " right image, selected point " << s + 1 << ": " << r.x << " " << r.y << endl;
            // The right image starts at x = img1.cols in the side-by-side canvas
            line(combine, Point2d(l.x, l.y), Point2d(img1.cols + r.x, r.y), Scalar(0, 255, 255), 2);
        }
    }

imwrite("combine.bmp",combine);

    resize(combine, combine, Size(1024, 540)); // shrink for display

    imshow("Selected matching points", combine);

waitKey(0);

return 0;

}

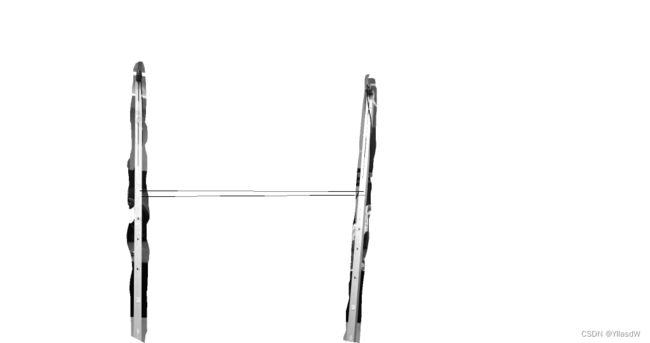

The points selected above look reasonable.
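The two distance cases used in the program above can be isolated into one small helper, which makes them easy to check by hand. This is a sketch under assumptions: `pointLineDistance` is a name introduced here for illustration, and it takes plain floats instead of cv::Point2f so that it compiles without OpenCV.

```cpp
#include <cmath>

// Distance from the point (x0, y0) to a line that is either
// vertical (x = B) or given in slope-intercept form (y = K * x + B).
// K is ignored when the line is vertical.
inline float pointLineDistance(float x0, float y0, bool vertical, float K, float B) {
    if (vertical) {
        return std::fabs(x0 - B);
    }
    return std::fabs(K * x0 + B - y0) / std::sqrt(K * K + 1.0f);
}
```

For the vertical line x = 1763 used above, a point at x = 1770 would get a distance of 7 regardless of its y coordinate.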

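Extracting the foot of the perpendicular from a point to the line

(Another iteration on the code above)

The formula for the foot of the perpendicular from a point to a line is fairly easy to derive; below is my own derivation.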
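One way to obtain the formula, using the slope-intercept form $y = Kx + B$ that the code works with (the point $(x_0, y_0)$ and the foot $(x_f, y_f)$ are notation introduced here, and $K \neq 0$ is assumed for the intermediate step): the perpendicular through the point has slope $-1/K$, so the foot satisfies both

$$
\begin{aligned}
y_f &= K x_f + B,\\
y_f - y_0 &= -\frac{1}{K}\,(x_f - x_0).
\end{aligned}
$$

Substituting the first equation into the second and multiplying by $K$ gives $K^2 x_f + KB - K y_0 = x_0 - x_f$, hence

$$
x_f = \frac{x_0 + K y_0 - K B}{K^2 + 1}, \qquad
y_f = K x_f + B = \frac{K x_0 + K^2 y_0 + B}{K^2 + 1}.
$$

The final expressions also hold for $K = 0$, where they reduce to $x_f = x_0$ and $y_f = B$.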

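As before, the cases have to be separated first: ① in the non-vertical case the foot is given by the formula above; ② in the vertical case, where the line is x = B, the foot is simply (B, y), that is, the point keeps its own y coordinate.

The code below builds on the previous version.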

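#include <iostream>
#include <vector>
#include <cmath>
#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/xfeatures2d.hpp>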

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

/*
void BubbleSort1(float arr[], int length) { // sort in descending order
    float temp;
    for (int i = 0; i < length - 1; i++) { // outer loop: length - 1 passes
        for (int j = length - 1; j > i; j--) { // each pass bubbles the largest remaining value to position i
            if (arr[j] > arr[j - 1]) {
                temp = arr[j];
                arr[j] = arr[j - 1];
                arr[j - 1] = temp;
            }
        }
    }
}
*/

void BubbleSort1(float arr[], int length) { // sort in ascending order
    float temp;
    for (int i = 0; i < length - 1; i++) {
        for (int j = length - 1; j > i; j--) {
            if (arr[j] < arr[j - 1]) {
                temp = arr[j];
                arr[j] = arr[j - 1];
                arr[j - 1] = temp;
            }
        }
    }
}

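float xll1, xrr1, yll1, yrr1; // feet of the perpendiculars for selected point 1 (left line / right line)

float xll2, xrr2, yll2, yrr2; // feet of the perpendiculars for selected point 2

bool vertical1 = true;  // line 1 (left image) is vertical: x = B1

float K1 = 10000000;    // slope of line 1 (only a large placeholder when the line is vertical)

float B1 = 1763;

bool vertical2 = false; // line 2 (right image): y = K2 * x + B2

float K2 = -6.11746;

float B2 = 4138.73;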

int main()

{

    // Create the detector
    //cv::Ptr<Feature2D> detector = SiftFeatureDetector::create(); // SIFT
    Ptr<Feature2D> detector = SurfFeatureDetector::create(100); // SURF detector, Hessian threshold 100; SIFT or ORB also work
    //Ptr<DescriptorExtractor> descriptor_extractor = DescriptorExtractor::create("SURF"); // descriptor extractor (OpenCV 2 style)
    Ptr<DescriptorMatcher> descriptor_matcher = DescriptorMatcher::create("BruteForce"); // brute-force feature matcher
    if (detector.empty())
        cout << "fail to create detector!";

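    // Read the images as grayscale
    Mat img1 = imread("左相机123.bmp", 0);
    Mat img2 = imread("右相机123.bmp", 0);
    if (img1.empty() || img2.empty())
    {
        cout << "fail to read images!" << endl;
        return -1;
    }
    Mat combine;
    hconcat(img1, img2, combine); // place the two images side by side
    cvtColor(combine, combine, COLOR_GRAY2BGR); // 3-channel canvas so the marker lines are drawn in color

    // Detect the keypoints
    vector<KeyPoint> m_LeftKey, m_RightKey;
    detector->detect(img1, m_LeftKey); // SURF keypoints of img1, stored in m_LeftKey
    detector->detect(img2, m_RightKey);
    cout << "Keypoints in image 1: " << m_LeftKey.size() << endl;
    cout << "Keypoints in image 2: " << m_RightKey.size() << endl;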

    // Compute the descriptor matrix (one feature vector per row) for the keypoints
    // detected above, so that match indices refer to m_LeftKey / m_RightKey
    cv::Mat dstImage1, dstImage2;
    double t = (double)getTickCount(); // tick count before the computation
    detector->compute(img1, m_LeftKey, dstImage1);
    detector->compute(img2, m_RightKey, dstImage2);
    t = ((double)getTickCount() - t) / getTickFrequency();
    cout << "SURF descriptor time: " << t << " s" << endl;
    cout << "Descriptor matrix of image 1: " << dstImage1.size()
        << ", feature vectors: " << dstImage1.rows << ", dimensions: " << dstImage1.cols << endl;
    cout << "Descriptor matrix of image 2: " << dstImage2.size()
        << ", feature vectors: " << dstImage2.rows << ", dimensions: " << dstImage2.cols << endl;

    // Draw the keypoints
    Mat img_m_LeftKey, img_m_RightKey;
    drawKeypoints(img1, m_LeftKey, img_m_LeftKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    drawKeypoints(img2, m_RightKey, img_m_RightKey, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    //imshow("Src1",img_m_LeftKey);
    //imshow("Src2",img_m_RightKey);

    // Match the features
    vector<DMatch> matches; // matching result
    descriptor_matcher->match(dstImage1, dstImage2, matches); // match the two descriptor matrices
    cout << "Matches: " << matches.size() << endl;

    // Find the largest and smallest distance among the matches.
    // The distance is the Euclidean distance between two feature vectors;
    // the smaller it is, the more similar the two features are.
    double max_dist = 0;
    double min_dist = 300;
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    cout << "Max distance: " << max_dist << endl;
    cout << "Min distance: " << min_dist << endl;

    // Keep only the better matches
    vector<DMatch> goodMatches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 0.2 * max_dist)
        {
            goodMatches.push_back(matches[i]);
        }
    }
    cout << "Good matches: " << goodMatches.size() << endl;

    // Draw the matching result: matched pairs get random colors,
    // unmatched keypoints are not drawn
    Mat img_matches;
    drawMatches(img1, m_LeftKey, img2, m_RightKey, goodMatches, img_matches,
        Scalar::all(-1), Scalar(0, 255, 0), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("MatchSIFT", img_matches);
    imwrite("MatchSIFT.jpg", img_matches);
    waitKey(0);

    // RANSAC filtering
    vector<DMatch> m_Matches = goodMatches;
    // Allocate space
    int ptCount = (int)m_Matches.size();
    Mat p1(ptCount, 2, CV_32F);
    Mat p2(ptCount, 2, CV_32F);
    // Convert the KeyPoints to Mat
    Point2f pt;
    for (int i = 0; i < ptCount; i++)
    {
        pt = m_LeftKey[m_Matches[i].queryIdx].pt;
        p1.at<float>(i, 0) = pt.x;
        p1.at<float>(i, 1) = pt.y;
        pt = m_RightKey[m_Matches[i].trainIdx].pt;
        p2.at<float>(i, 0) = pt.x;
        p2.at<float>(i, 1) = pt.y;
    }

    // Estimate the fundamental matrix F with RANSAC
    vector<uchar> m_RANSACStatus; // status of every match after RANSAC
    Mat m_Fundamental = findFundamentalMat(p1, p2, m_RANSACStatus, FM_RANSAC);
    // Count the outliers
    int OutlinerCount = 0;
    for (int i = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] == 0) // status 0 marks an outlier
        {
            OutlinerCount++;
        }
    }
    int InlinerCount = ptCount - OutlinerCount; // number of inliers
    cout << "Inliers: " << InlinerCount << endl;

    // These three containers hold the inliers and their matches
    vector<Point2f> m_LeftInlier;
    vector<Point2f> m_RightInlier;
    vector<DMatch> m_InlierMatches;
    m_InlierMatches.resize(InlinerCount);
    m_LeftInlier.resize(InlinerCount);
    m_RightInlier.resize(InlinerCount);
    InlinerCount = 0;
    float inlier_minRx = img1.cols; // smallest x among the right-image inliers, kept for later stitching
    float distance = 0; // reserved for the point-to-line distance
    float point[80] = { 0 }; // distances of up to 80 inliers to line 1; unused slots stay 0
    for (int i = 0, j = 0; i < ptCount; i++)
    {
        if (m_RANSACStatus[i] != 0)
        {
            m_LeftInlier[InlinerCount].x = p1.at<float>(i, 0);
            m_LeftInlier[InlinerCount].y = p1.at<float>(i, 1);
            m_RightInlier[InlinerCount].x = p2.at<float>(i, 0);
            m_RightInlier[InlinerCount].y = p2.at<float>(i, 1);
            m_InlierMatches[InlinerCount].queryIdx = InlinerCount;
            m_InlierMatches[InlinerCount].trainIdx = InlinerCount;
            cout << j << " left image: " << m_LeftInlier[InlinerCount].x << " " << m_LeftInlier[InlinerCount].y << endl;
            cout << j << " right image: " << m_RightInlier[InlinerCount].x << " " << m_RightInlier[InlinerCount].y << endl;
            if (j < 80)
            {
                cout << "j:" << j << endl;
                if (vertical1 == false)
                {
                    // distance to the line y = K1 * x + B1
                    point[j] = (float)abs((K1 * m_LeftInlier[InlinerCount].x + B1 - m_LeftInlier[InlinerCount].y) / sqrt(K1 * K1 + 1 * 1));
                }
                else
                {
                    // distance to the vertical line x = B1
                    point[j] = (float)abs((m_LeftInlier[InlinerCount].x - B1));
                }
                cout << "point[j]:" << point[j] << endl;
            }
            j++;
            if (m_RightInlier[InlinerCount].x < inlier_minRx) inlier_minRx = m_RightInlier[InlinerCount].x; // track the smallest right-image x
            InlinerCount++;
        }
    }

    // Convert the inliers into the format drawMatches expects
    vector<KeyPoint> key1(InlinerCount);
    vector<KeyPoint> key2(InlinerCount);
    KeyPoint::convert(m_LeftInlier, key1);
    KeyPoint::convert(m_RightInlier, key2);
    // Show the inlier matches that remain after estimating F
    Mat OutImage;
    drawMatches(img1, key1, img2, key2, m_InlierMatches, OutImage);
    imwrite("test1.bmp", OutImage);
    namedWindow("Match features better", WINDOW_AUTOSIZE);
    imshow("Match features better", OutImage);
    waitKey(0);

    BubbleSort1(point, 80); // sort the distances in ascending order
    float pointselect[8] = { 0 }; // the eight smallest usable distances
    int select = 0;
    for (int i = 0; i < 80; i++) // skip the (near-)zero entries and take the eight smallest distances
    {
        if (point[i] > 0.5)
        {
            pointselect[select] = point[i];
            cout << "selected distance: " << pointselect[select] << endl;
            select++;
            if (select == 8)
            {
                break;
            }
        }
    }
    cout << "select:" << select << endl;

    /****************************************************************************************************/
    // Next, pick the matches with the smallest and the largest of the selected distances.
    // m_LeftInlier / m_RightInlier were filled by the loop above, so they are walked directly.

    // Foot of the perpendicular from p onto a line that is either vertical (x = B) or y = K * x + B.
    // Covers all four combinations of vertical1 / vertical2 with one piece of code.
    auto foot = [](const Point2f& p, bool vertical, float K, float B) -> Point2f {
        if (vertical) return Point2f(B, p.y);
        return Point2f((float)(p.x + K * p.y - K * B) / (K * K + 1),
                       (float)(K * p.x + K * K * p.y + B) / (K * K + 1));
    };
    for (int k = 0; k < InlinerCount; k++)
    {
        const Point2f& l = m_LeftInlier[k];
        const Point2f& r = m_RightInlier[k];
        // Same expression as in the first pass, so the exact float comparison below still works
        float d = vertical1 ? (float)abs((l.x - B1))
                            : (float)abs((K1 * l.x + B1 - l.y) / sqrt(K1 * K1 + 1 * 1));
        for (int s = 0; s < 2; s++) // s = 0: smallest distance, s = 1: largest of the eight
        {
            if (d != pointselect[s == 0 ? 0 : 7]) continue;
            // The matched pair
            cout << k + 1 << " left image, selected point " << s + 1 << ": " << l.x << " " << l.y << endl;
            cout << k + 1 << " right image, selected point " << s + 1 << ": " << r.x << " " << r.y << endl;
            // The right image starts at x = img1.cols in the side-by-side canvas
            line(combine, Point2d(l.x, l.y), Point2d(img1.cols + r.x, r.y), Scalar(0, 255, 255), 2);
            // Feet of the perpendiculars on line 1 (left image) and line 2 (right image)
            Point2f fl = foot(l, vertical1, K1, B1);
            Point2f fr = foot(r, vertical2, K2, B2);
            if (s == 0) { xll1 = fl.x; yll1 = fl.y; xrr1 = fr.x; yrr1 = fr.y; }
            else        { xll2 = fl.x; yll2 = fl.y; xrr2 = fr.x; yrr2 = fr.y; }
        }
    }

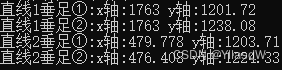

cout << "直线1垂足①:" << "x轴:" << xll1 << " " << "y轴:" << yll1 << endl;

cout << "直线1垂足②:" << "x轴:" << xll2 << " " << "y轴:" << yll2 << endl;

cout << "直线2垂足①:" << "x轴:" << xrr1 << " " << "y轴:" << yrr1 << endl;

cout << "直线2垂足②:" << "x轴:" << xrr2 << " " << "y轴:" << yrr2 << endl;

imwrite("combine.bmp",combine);

resize(combine, combine, Size(1024, 540));//显示改变大小

imshow("输出想要的匹配点", combine);

waitKey(0);

return 0;

}

可以得到如下的垂足

说明结果正确. (代码写的太烂了,我自己都不想看了,不想改了)
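The foot computation is easy to check in isolation. A minimal sketch, assuming plain floats instead of cv::Point2f so that it compiles without OpenCV; `Foot` and `perpendicularFoot` are hypothetical names introduced here, not part of the program above:

```cpp
#include <cmath>

// Hypothetical result type holding the foot of the perpendicular.
struct Foot {
    float x;
    float y;
};

// Foot of the perpendicular from (x0, y0) onto a line that is either
// vertical (x = B) or given as y = K * x + B. K is ignored when vertical.
inline Foot perpendicularFoot(float x0, float y0, bool vertical, float K, float B) {
    if (vertical) {
        return Foot{B, y0}; // the point keeps its own y coordinate
    }
    const float denom = K * K + 1.0f;
    return Foot{(x0 + K * y0 - K * B) / denom,
                (K * x0 + K * K * y0 + B) / denom};
}
```

Calling it once with the left-image point and (vertical1, K1, B1) and once with the right-image point and (vertical2, K2, B2) would cover every combination of vertical and non-vertical lines.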