Training U-Net on Your Own Dataset

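Table of Contents

- 1 Network Preparation
- 2 Dataset Preparation
  - 2.1 Converting JSON Labels to PNG
    - 2.1.1 Installing labelme
    - 2.1.2 Creating Label Images
    - 2.1.3 Converting the JSON Files
- 3 Training the Model
  - 3.1 Splitting the Training and Validation Sets
  - 3.2 Modifying Parameters
  - 3.3 Predicting Results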

1 Network Preparation

2 Dataset Preparation

The original images and their label data were provided by my instructor.

2.1 Converting JSON Labels to PNG

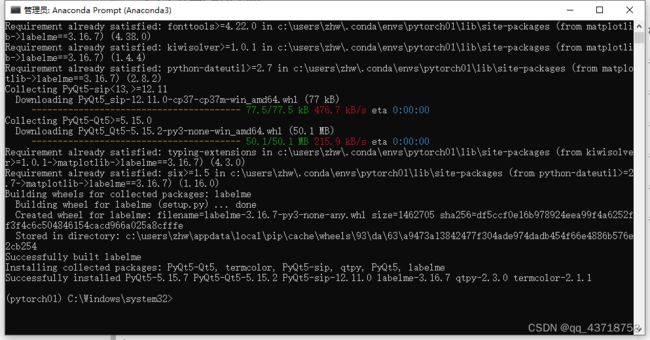

2.1.1 Installing labelme

In the conda command prompt, run:

```
pip install labelme==3.16.7
```

2.1.2 Creating Label Images

Type labelme at the command line to launch the program:

```
labelme
```

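Click Open Dir to open the image folder.

Opening the files caused a problem.

Cause: a version mismatch. I had installed 3.17, but after opening the files I found that the label files had been created with labelme 5.0.1.

Fix: uninstall labelme and reinstall a newer version.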
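To spot this kind of mismatch before opening anything, compare the version recorded in the annotation files with the installed package. A labelme JSON file stores the labelme version it was saved with in its top-level "version" field. The sketch below is a hypothetical helper (report_mismatches and the other function names are illustrative, not part of labelme) that only compares major version numbers, which is a simplifying assumption.

```python
import json
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def json_labelme_version(json_path):
    """Return the labelme version recorded in one annotation file ("version" field)."""
    with open(json_path, encoding="utf-8") as f:
        return json.load(f).get("version")


def installed_labelme_version():
    """Return the installed labelme version string, or None if it is not installed."""
    try:
        return version("labelme")
    except PackageNotFoundError:
        return None


def major(v):
    """Major version number of a dotted version string such as '5.0.1'."""
    return int(v.split(".")[0])


def report_mismatches(folder):
    """Return (installed_version, [(file_name, file_version), ...]) for files whose
    major version differs from the installed labelme (all files if none is installed)."""
    installed = installed_labelme_version()
    mismatches = []
    for p in sorted(Path(folder).glob("*.json")):
        v = json_labelme_version(p)
        if installed is None or v is None or major(v) != major(installed):
            mismatches.append((p.name, v))
    return installed, mismatches
```

For example, report_mismatches("path/to/json_folder") (a placeholder path) would list every 5.0.1 file when a 3.x labelme is installed.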

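New problem: 'labelme' is not recognized as an internal or external command, operable program or batch file.

Fix: uninstall labelme and install it again.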
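This Windows message means the labelme launcher is not on PATH. One common reason (an assumption here, since the post does not say which applied) is that labelme was installed into a different conda environment from the one currently active. A small sketch using only the standard library can check where, if anywhere, the command resolves; find_cli and env_script_dir are hypothetical helper names.

```python
import os
import shutil
import sys


def find_cli(name):
    """Return the full path of a console command found on PATH, or None."""
    return shutil.which(name)


def env_script_dir():
    """Directory where pip places console scripts for the running interpreter.

    On Windows (including conda environments) this is <prefix>\\Scripts,
    elsewhere it is <prefix>/bin.
    """
    sub = "Scripts" if os.name == "nt" else "bin"
    return os.path.join(sys.prefix, sub)


if __name__ == "__main__":
    path = find_cli("labelme")
    if path is None:
        print("labelme is not on PATH; for this interpreter it would live under",
              env_script_dir())
    else:
        print("labelme found at", path)
```

Run it with the same python as the environment you installed labelme into; if it reports a different Scripts folder than you expect, activating that environment may be enough, without reinstalling.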

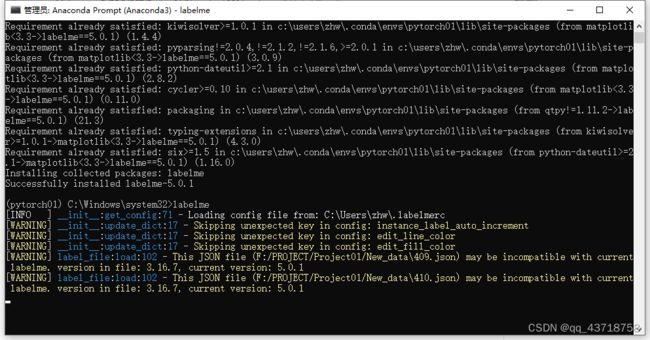

Run labelme from the command line again.

This time it opens successfully.

2.1.3 Converting the JSON Files

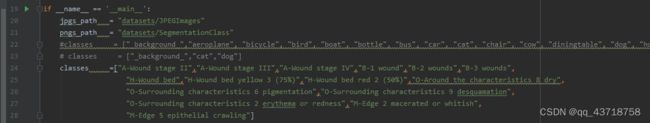

Open the script json_to_dataset.py.

Change classes to your own class names, and add one extra class for the background: background.
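As far as I can tell, the conversion script writes each pixel as the position of its class name in classes, so listing the background first makes it pixel value 0. A label used in the JSON files but missing from classes would make that lookup fail. The sketch below checks this before converting; the class names "crack" and "spot" are hypothetical, and the helpers only rely on each labelme shape having a "label" key.

```python
import json
from pathlib import Path

# Hypothetical class list: background first, then your own labels.
classes = ["background", "crack", "spot"]


def class_index_map(classes):
    """Map each class name to the integer pixel value it gets in the label PNG."""
    return {name: i for i, name in enumerate(classes)}


def labels_in_json(json_path):
    """Collect the label names used by the shapes in one labelme JSON file."""
    with open(json_path, encoding="utf-8") as f:
        data = json.load(f)
    return {shape["label"] for shape in data.get("shapes", [])}


def unknown_labels(folder, classes):
    """Label names that appear in the annotations but are missing from `classes`."""
    found = set()
    for p in Path(folder).glob("*.json"):
        found |= labels_in_json(p)
    return sorted(found - set(classes))
```

If unknown_labels returns anything, either add those names to classes or fix the typos in the annotations before running the conversion.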

After making these changes, run the script.

It fails with:

```
Traceback (most recent call last):
  File "F:/pytorch_project/unet-pytorch-main/unet-pytorch-main/json_to_dataset.py", line 71, in <module>
    utils.lblsave(osp.join(pngs_path, count[i].split(".")[0]+'.png'), new)
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\labelme\utils\_io.py", line 15, in lblsave
    lbl_pil = PIL.Image.fromarray(lbl.astype(np.uint8), mode="P")
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\PIL\Image.py", line 2965, in fromarray
    raise ValueError(f"Too many dimensions: {ndim} > {ndmax}.")
ValueError: Too many dimensions: 3 > 2.
```

Cause: the installed labelme version is too new.

Reference: https://blog.csdn.net/m0_48095841/article/details/123998484

Fix: uninstall 5.0.1 and install 3.16.7:

```
pip uninstall labelme
pip install labelme==3.16.7
```
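The traceback shows that lblsave passes a 3-D array to PIL.Image.fromarray(..., mode="P"), which only accepts a 2-D (height, width) array. A plausible explanation, not confirmed by the error itself: in labelme 4.x and later, labelme.utils.shapes_to_label returns a tuple (class label, instance label) rather than a single array, so the mask built from it gains an extra leading dimension. The sketch below is a hypothetical stand-alone replacement for the saving step (save_label_png and voc_palette are illustrative names, not labelme functions); it rejects non-2-D masks up front and writes a palette PNG whose pixel values are the class indices.

```python
import numpy as np
from PIL import Image


def voc_palette(n=256):
    """PASCAL VOC-style colormap as a flat [r, g, b, r, g, b, ...] list of n colors."""
    palette = []
    for i in range(n):
        r = g = b = 0
        c = i
        for j in range(8):
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3
        palette.extend([r, g, b])
    return palette


def save_label_png(path, mask):
    """Save a 2-D class-index mask as a palette ("P" mode) PNG.

    A 3-D array is rejected here with a clear message instead of failing
    inside Pillow with "Too many dimensions: 3 > 2".
    """
    mask = np.asarray(mask)
    if mask.ndim != 2:
        raise ValueError(f"label mask must be 2-D (H, W), got shape {mask.shape}")
    if mask.min() < 0 or mask.max() > 255:
        raise ValueError("class indices must lie in 0..255 for a palette PNG")
    img = Image.fromarray(mask.astype(np.uint8))  # mode "L": pixel value = class index
    img.putpalette(voc_palette())  # attaching a palette turns the "L" image into "P"
    img.save(path)
```

Downgrading labelme, as done above, is the simpler route; the helper is only useful if you want to keep a newer labelme for annotating.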

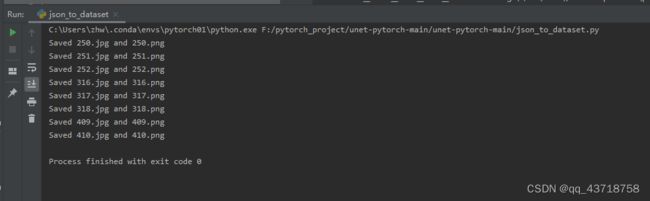

Run json_to_dataset.py again.

Finally, copy the JPEGImages and SegmentationClass folders into the VOC2007 folder.
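The copy step can also be scripted so that it checks every image has a matching label. In the sketch below the source folder and the VOCdevkit/VOC2007 destination are assumptions about your layout, .jpg images and .png labels are assumed, and copy_into_voc is a hypothetical helper. It needs Python 3.8+ for dirs_exist_ok.

```python
import shutil
from pathlib import Path


def copy_into_voc(src_root, voc_root):
    """Copy JPEGImages/ and SegmentationClass/ from src_root into voc_root.

    Returns the image stems that have no label PNG with the same name
    (an empty list means every image is paired).
    """
    src_root, voc_root = Path(src_root), Path(voc_root)
    for sub in ("JPEGImages", "SegmentationClass"):
        shutil.copytree(src_root / sub, voc_root / sub, dirs_exist_ok=True)
    images = {p.stem for p in (voc_root / "JPEGImages").glob("*.jpg")}
    labels = {p.stem for p in (voc_root / "SegmentationClass").glob("*.png")}
    return sorted(images - labels)
```

Usage, with placeholder paths: missing = copy_into_voc("datasets", "VOCdevkit/VOC2007").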

3 Training the Model

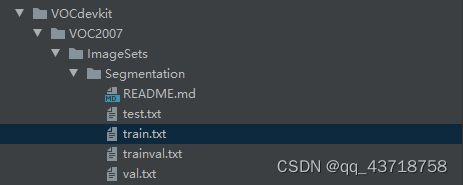

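3.1 Splitting the Training and Validation Sets

Run voc_annotation.py to split the dataset.

Because the dataset is small, I used all of the data for training.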

Output:

- train: used for training
- val: used for validation

3.2 Modifying Parameters

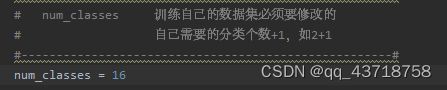

In train.py, set num_classes to the number of classes you want to distinguish plus 1 (the extra class is the background).
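For example, with two foreground classes plus background, num_classes = 2 + 1 = 3, and the label PNGs should contain only the values 0, 1 and 2. A quick sanity check is to scan the masks for their largest value; the helpers below are hypothetical, and skipping 255 assumes it is used only as a VOC-style "ignore" border value, which the masks produced by json_to_dataset.py should not contain anyway.

```python
from pathlib import Path

import numpy as np
from PIL import Image


def max_label_index(label_dir, ignore=(255,)):
    """Largest class index in the label PNGs, skipping values listed in `ignore`."""
    highest = 0
    for p in Path(label_dir).glob("*.png"):
        arr = np.array(Image.open(p))
        arr = arr[~np.isin(arr, ignore)]
        if arr.size:
            highest = max(highest, int(arr.max()))
    return highest


def check_num_classes(label_dir, num_classes):
    """Raise if some label index would not fit into num_classes output channels."""
    highest = max_label_index(label_dir)
    if highest >= num_classes:
        raise ValueError(f"labels use index {highest}, but num_classes is {num_classes}")
    return highest
```

Pointing check_num_classes at the SegmentationClass folder with your num_classes value should return the largest class index without raising.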

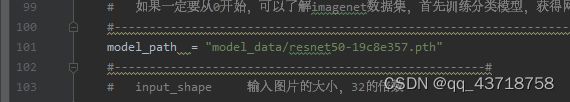

Following the README, download the pretrained weights that model_path points to.

Running train.py then failed:

```
initialize network with normal type
Load weights model_data/resnet50-19c8e357.pth.
Traceback (most recent call last):
  File "F:/pytorch_project/unet-pytorch-main/unet-pytorch-main/train.py", line 284, in <module>
    pretrained_dict = torch.load(model_path, map_location = device)
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\torch\serialization.py", line 795, in load
    return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\torch\serialization.py", line 987, in _legacy_load
    return legacy_load(f)
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\torch\serialization.py", line 924, in legacy_load
    storage._storage, storage_offset, numel, stride)
RuntimeError: Attempted to set the storage of a tensor on device "cpu" to a storage on different device "cuda:0". This is no longer allowed; the devices must match.

Process finished with exit code 1
```

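Possible cause: a version incompatibility (not confirmed).

Fix: I downloaded a different set of weights and used those instead.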
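The traceback shows the weights being loaded with map_location = device, i.e. straight onto cuda:0, through PyTorch's legacy loading path. A workaround often suggested for this particular RuntimeError, which I have not verified against this exact weight file, is to load the checkpoint onto the CPU and let load_state_dict copy the tensors into the network, which may already be on the GPU. The sketch below assumes the file holds a plain state dict; load_pretrained is a hypothetical helper, not the code in train.py.

```python
import torch


def load_pretrained(model, model_path):
    """Load a state dict onto the CPU, then copy the matching entries into `model`.

    Returns the names of checkpoint entries that were skipped because their
    name or shape does not match the current network.
    """
    pretrained = torch.load(model_path, map_location="cpu")
    model_dict = model.state_dict()
    # Keep only tensors whose name and shape both match the network.
    matched = {k: v for k, v in pretrained.items()
               if k in model_dict and v.shape == model_dict[k].shape}
    model_dict.update(matched)
    model.load_state_dict(model_dict)  # copies across devices if needed
    return sorted(set(pretrained) - set(matched))
```

Loading onto the CPU first costs a little host memory but avoids deserializing storages directly onto the GPU.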

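Running again raised a new error (the message reads "The dataset is too small to continue training; please enlarge the dataset."):

```
Traceback (most recent call last):
  File "F:/pytorch_project/unet-pytorch-main/unet-pytorch-main/train.py", line 410, in <module>
    raise ValueError("数据集过小,无法继续进行训练,请扩充数据集。")
ValueError: 数据集过小,无法继续进行训练,请扩充数据集。
```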

The relevant code:

```
if epoch_step == 0 or epoch_step_val == 0:
    raise ValueError("数据集过小,无法继续进行训练,请扩充数据集。")
```

Reading the code showed that the dataset split was the problem: no validation set had been created, so epoch_step_val was 0.
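Assuming train.py computes the step counts by integer division of the sample count by the batch size (which is what the zero check suggests), the arithmetic below shows why an empty val.txt stops training, and why a validation set smaller than one batch would fail the same way. All numbers are hypothetical.

```python
def steps_per_epoch(num_samples, batch_size):
    """Number of full batches in one epoch (a final partial batch is not counted)."""
    return num_samples // batch_size


# Hypothetical numbers: all images were listed in train.txt and none in val.txt.
num_train, num_val, batch_size = 20, 0, 4
epoch_step = steps_per_epoch(num_train, batch_size)      # 20 // 4 = 5
epoch_step_val = steps_per_epoch(num_val, batch_size)    # 0 // 4 = 0

# The same condition as in train.py: any zero step count stops training.
too_small = epoch_step == 0 or epoch_step_val == 0       # True here

# A non-empty validation set still fails if it holds fewer images than one batch:
# steps_per_epoch(3, 4) == 0
```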

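So I changed the split settings so that part of the data goes to the validation set.

Then I ran voc_annotation.py again.

This time the split succeeded.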
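If your copy of voc_annotation.py exposes split ratios (variables such as trainval_percent and train_percent, an assumption about your version), setting the training share below 1 is enough. For reference, here is a hypothetical stand-alone sketch of the same idea that always keeps a minimum number of images for validation; it writes one file stem per line to train.txt and val.txt, and where those lists must live (e.g. under ImageSets/Segmentation) depends on your repository.

```python
import random
from pathlib import Path


def split_dataset(label_dir, out_dir, train_ratio=0.9, min_val=1, seed=0):
    """Write train.txt / val.txt (one file stem per line), keeping >= min_val for val."""
    stems = sorted(p.stem for p in Path(label_dir).glob("*.png"))
    if len(stems) <= min_val:
        raise ValueError(f"need more than {min_val} labelled images to split")
    random.Random(seed).shuffle(stems)
    # Cap the training share so that at least min_val images remain for validation.
    n_train = min(int(len(stems) * train_ratio), len(stems) - min_val)
    train, val = stems[:n_train], stems[n_train:]
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "train.txt").write_text("\n".join(train) + "\n")
    (out / "val.txt").write_text("\n".join(val) + "\n")
    return train, val
```

Setting min_val to at least the batch size keeps epoch_step_val above zero when steps are computed by integer division.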

With the new split, train.py runs successfully.

Halfway through training, however, another problem appeared:

```
Total Loss: 0.359 || Val Loss: 1.937
Epoch 51/100: 0%| | 0/7 [00:00<?, ?it/s<class 'dict'>]Start Train
Epoch 51/100: 29%|██▊ | 2/7 [00:08<00:18, 3.75s/it, f_score=0.837, lr=4.88e-5, total_loss=0.555]Traceback (most recent call last):
  File "F:/pytorch_project/unet-pytorch-main/unet-pytorch-main/train.py", line 491, in <module>
    epoch_step, epoch_step_val, gen, gen_val, UnFreeze_Epoch, Cuda, dice_loss, focal_loss, cls_weights, num_classes, fp16, scaler, save_period, save_dir, local_rank)
  File "F:\pytorch_project\unet-pytorch-main\unet-pytorch-main\utils\utils_fit.py", line 58, in fit_one_epoch
    loss.backward()
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\torch\_tensor.py", line 488, in backward
    self, gradient, retain_graph, create_graph, inputs=inputs
  File "C:\Users\zhw\.conda\envs\pytorch01\lib\site-packages\torch\autograd\__init__.py", line 199, in backward
    allow_unreachable=True, accumulate_grad=True)  # Calls into the C++ engine to run the backward pass
torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 192.00 MiB (GPU 0; 2.00 GiB total capacity; 1.37 GiB already allocated; 0 bytes free; 1.66 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
Epoch 51/100: 29%|██▊ | 2/7 [00:09<00:23, 4.74s/it, f_score=0.837, lr=4.88e-5, total_loss=0.555]

Process finished with exit code 1
```
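The GPU here has only 2 GiB, and the crash happens at epoch 51. If train.py uses a frozen-backbone phase of 50 epochs (an assumption; check your Freeze_Epoch setting), epoch 51 is the first epoch in which the backbone is trained too, so gradients for the whole network must be stored and memory use jumps. The usual levers are a smaller batch size for the unfrozen phase, the fp16 option that train.py already passes to fit_one_epoch, and the allocator setting the error message itself suggests. The sketch below sets that allocator option (128 is an illustrative value) and shows a hypothetical retry helper that halves the batch size after an out-of-memory error; train_fn stands for your own code that builds the data loaders and trains with a given batch size.

```python
import os

# Must be set before PyTorch initialises CUDA, so do it before `import torch`.
# 128 MB is an illustrative value, not a tuned one.
os.environ.setdefault("PYTORCH_CUDA_ALLOC_CONF", "max_split_size_mb:128")


def train_with_backoff(train_fn, batch_size, min_batch_size=1):
    """Call train_fn(batch_size), halving the batch size after each out-of-memory error.

    torch.cuda.OutOfMemoryError is a subclass of RuntimeError, so catching
    RuntimeError and checking the message covers it without importing torch here.
    """
    while True:
        try:
            return train_fn(batch_size)
        except RuntimeError as err:
            if "out of memory" not in str(err) or batch_size <= min_batch_size:
                raise
            batch_size = max(batch_size // 2, min_batch_size)
            print(f"CUDA out of memory, retrying with batch_size={batch_size}")
```

In a real script you would also call torch.cuda.empty_cache() before retrying, and note that this restarts train_fn from the beginning, so it is best combined with resuming from the last saved weights.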

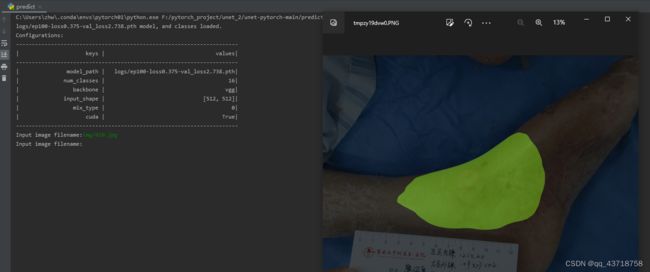

3.3 Predicting Results

Edit the settings in unet.py.

The tutorial video says you can run predict.py directly to make predictions,

but I changed num_classes first.

Then run it: