机器学习入门(6)—— 支持向量机(1)

文章目录

- 1.支持向量机简介

-

- 简易分类数据

- 支持向量机:边界最大化

1.支持向量机简介

支持向量机是一种二元分类模型,是一种非常强大的,灵活的有监督学习算法,既可以用于回归又可以用于预测。其核心思想是,训练阶段在特征空间中寻找一个超平面,它能(或尽量能)将训练样本中的正例和负例分离它两侧,预测时以该超平面作为决策边界判断输入实例的类别。

简易分类数据

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

x,y=make_blobs(n_samples=50,

centers=2,

random_state=0,

cluster_std=0.60

)

print(x)

print(y)

plt.scatter(x[:,0],x[:,1],c=y,s=50,cmap='autumn')

[[ 1.41281595 1.5303347 ]

[ 1.81336135 1.6311307 ]

[ 1.43289271 4.37679234]

[ 1.87271752 4.18069237]

[ 2.09517785 1.0791468 ]

[ 2.73890793 0.15676817]

[ 3.18515794 0.08900822]

[ 2.06156753 1.96918596]

[ 2.03835818 1.15466278]

[-0.04749204 5.47425256]

[ 1.71444449 5.02521524]

[ 0.22459286 4.77028154]

[ 1.06923853 4.53068484]

[ 1.53278923 0.55035386]

[ 1.4949318 3.85848832]

[ 1.1641107 3.79132988]

[ 0.74387399 4.12240568]

[ 2.29667251 0.48677761]

[ 0.44359863 3.11530945]

[ 0.91433877 4.55014643]

[ 1.67467427 0.68001896]

[ 2.26908736 1.32160756]

[ 1.5108885 0.9288309 ]

[ 1.65179125 0.68193176]

[ 2.49272186 0.97505341]

[ 2.33812285 3.43116792]

[ 0.67047877 4.04094275]

[-0.55552381 4.69595848]

[ 2.16172321 0.6565951 ]

[ 2.09680487 3.7174206 ]

[ 2.18023251 1.48364708]

[ 0.43899014 4.53592883]

[ 1.24258802 4.50399192]

[ 0.00793137 4.17614316]

[ 1.89593761 5.18540259]

[ 1.868336 0.93136287]

[ 2.13141478 1.13885728]

[ 1.06269622 5.17635143]

[ 2.33466499 -0.02408255]

[ 0.669787 3.59540802]

[ 1.07714851 1.17533301]

[ 1.54632313 4.212973 ]

[ 1.56737975 -0.1381059 ]

[ 1.35617762 1.43815955]

[ 1.00372519 4.19147702]

[ 1.29297652 1.47930168]

[ 2.94821884 2.03519717]

[ 0.3471383 3.45177657]

[ 2.76253526 0.78970876]

[ 0.76752279 4.39759671]]

[1 1 0 0 1 1 1 1 1 0 0 0 0 1 0 0 0 1 0 0 1 1 1 1 1 0 0 0 1 0 1 0 0 0 0 1 1

0 1 0 1 0 1 1 0 1 1 0 1 0]

方法部分参数详解:

- n_samples是待生成的样本的总数。

- n_features是每个样本的特征数。

- centers表示类别数。

- cluster_std表示每个类别的方差,例如我们希望生成2类数据,其中一类比另一类具有更大的方差,可以将cluster_std设置为[1.0,3.0]。

from sklearn.datasets.samples_generator import make_blobs

x,y=make_blobs(n_samples=50,centers=2,

random_state=0,cluster_std=0.60

)

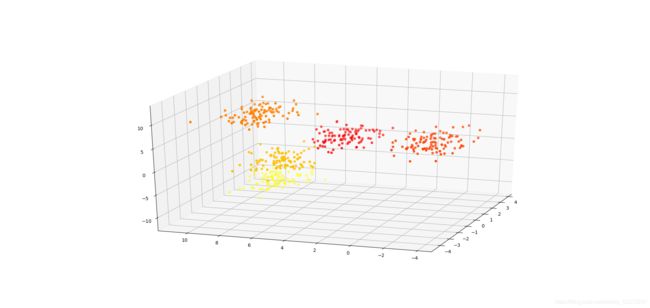

我们现在先尝试画出三维的离散数据图像看看长什么样:

简易数据分类

代码

import matplotlib.pyplot as plt

from matplotlib import cm

from sklearn.datasets.samples_generator import make_blobs

import matplotlib

x,y=make_blobs(n_samples=500,

n_features=3,

centers=5,

random_state=0,

cluster_std=1.00

)

print(x)

print(y)

ax = plt.axes(projection='3d') # 设置三维轴

ax.scatter3D(x[:,0],x[:,1],x[:,2],c=y,cmap='autumn') # 三个数组对应三个维度(三个数组中的数一一对应)

这个线性判别分类器尝试画一条将数据分成两部分的直线,这样就构成了一个分类模型。对于上图的二维数据来说,这个任务其实可以手动完成。但是我们马上发现一个问题:在这两种类型之间,有不只一条直线可以将他们完美分割

源码:

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

import numpy as np

x,y=make_blobs(n_samples=50,

centers=2,

random_state=0,

cluster_std=0.60

)

print(x)

print(y)

plt.scatter(x[:,0],x[:,1],c=y,s=50,cmap='autumn')

xfit=np.linspace(-1,3.5)

plt.plot([0.6],[2.1],'x',color="red",markeredgewidth=2,markersize=10)

for m,b in [(1,0.65),(0.5,1.6),(-0.2,2.9)]:

plt.plot(xfit,m*xfit+b,"-k")

plt.xlim(-1,3.5)

虽然这三个不同的分割器都能完美地判别这些样本,但是选择不同的分割线,可能会让新

的数据点(例如图5-54中白“×点)分配到不同的标签。显然,“画一条分割不同类型

的直线”还不够,我们需要进一步思考。

支持向量机:边界最大化

支持向量机提供了改进这个问题的方法,它直观的解释是:不再画一条细线来区分类

型,而是画一条到最近点边界、有宽度的线条。具体形式如下面的示例所示(如图

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

import numpy as np

x,y=make_blobs(n_samples=50,

centers=2,

random_state=0,

cluster_std=0.60

)

print(x)

print(y)

plt.scatter(x[:,0],x[:,1],c=y,s=50,cmap='autumn')

xfit=np.linspace(-1,3.5)

plt.plot([0.6],[2.1],'x',color="red",markeredgewidth=2,markersize=10)

for m,b,d in [(1,0.65,0.33),(0.5,1.6,0.55),(-0.2,2.9,0.2)]:

yfit=m*xfit+b

plt.plot(xfit,yfit,"-k")

plt.fill_between(xfit,yfit-d,yfit+d,color='#00AAAA',alpha=0.4)

plt.xlim(-1,3.5)

由于时间关系,暂时只能写到这里了,敬请期待下一篇的更加深入的讲解。