彩信猫 发送彩信失败

Today, training and running ML models on a local or remote machine is not a big deal as there are different frameworks and tools with lots of tutorials that enable you to get some decent results. However, when it comes to serving or deploying models at scale, it may seem a reasonable problem for most newcomers when they start to think about scalability, availability, and reliability of their models in production.

如今,在本地或远程机器上训练和运行ML模型已不再是一件大事,因为有不同的框架和工具以及大量教程,可让您获得不错的结果。 但是,当涉及到大规模服务或部署模型时,对于大多数新手来说,当他们开始考虑生产中模型的可伸缩性,可用性和可靠性时,这似乎是一个合理的问题。

Multi-Model-Server (addressed as MMS hereafter), a tool created by AWS Labs, makes model serving easy and flexible for most ML/DL frameworks. It helps to create an HTTP service for handling your inference requests which could be a good start to have a microservice while building a bigger platform around it.

由AWS Labs创建的工具Multi-Model-Server (此后称为MMS)使大多数ML / DL框架的模型服务变得容易且灵活。 它有助于创建一个HTTP服务来处理您的推理请求,这可能是拥有微服务并围绕其构建更大平台的良好开端。

If you look at the examples directory of the MMS source repository, you will see several ready implementation examples of different CNN and RNN architectures for Computer Vision and NLP tasks. However, I could not find usable architecture for Human Pose estimation, an important Computer Vision problem, which I needed to use for my own project. What I knew from my experience was that most of the Human Pose estimation models were based on multi-stage processing including Region Proposals and Pose estimation modules and thus, it would require multi-step inferencing which could be beyond the scope of MMS examples. So, I thought why not create my own MMS implementation for that task?

如果查看MMS源存储库的examples目录 ,将看到针对计算机视觉和NLP任务的不同CNN和RNN体系结构的几个现成的实现示例。 但是,我找不到用于人体姿势估计的可用架构,这是一个重要的计算机视觉问题,需要在我自己的项目中使用。 根据我的经验,我知道大多数人的姿势估计模型都是基于多阶段处理,包括区域提议和姿势估计模块,因此,它需要多步推断,而这可能超出了MMS示例的范围。 因此,我想为什么不为该任务创建自己的MMS实现?

In this post, we will walk through the steps of serving the Human Pose estimation model (AlphaPose) on MMS.

在本文中,我们将逐步介绍在MMS上服务于人体姿势估计模型( AlphaPose )的步骤。

必备知识,可以更好地理解 (Prerequisite knowledge for better understanding)

- Python Python

- MXNet MXNet

- GluonCV 胶水

- OpenCV OpenCV

- Docker 码头工人

For the simplicity of this post to make it really easy taking off for beginners, I am using ready and easier solutions. Definitely, you can try out other tools and frameworks which could outweigh the current method. But, just to keep you in the track of other possible solutions I will count several of them. Training and prediction of Human-Pose model: OpenPose using Caffe, OpenPose using Tensorflow, AlphaPose using Pytorch, SimplePose using Pytorch, SimplePose using Tensorflow, and so on. Deployment and Serving of model: NVIDIA Triton Server, Pytorch Serve, Tensorflow Serving, AWS SageMaker, Google Cloud AI Platform. However, you may be wondering why I am not advising more custom and simpler web frameworks like Flask, FastAPI, Tornado for wrapping models as API service. The reason is that they may bring you more headaches in terms of scalability, maintainability, availability in the long run if you are not a senior architect to design the whole system. But, that is solely my opinion and I may be wrong :)

为了使这篇文章简单易行,使它对于初学者来说非常容易,我使用了现成的,更简单的解决方案。 当然,您可以尝试其他工具和框架,这些工具和框架可能会超过当前方法。 但是,为了让您了解其他可能的解决方案,我将列举其中几个。 人体姿势模型的训练和预测: 使用Caffe的 OpenPose,使用Tensorflow的OpenPose , 使用Pytorch的 AlphaPose , 使用Pytorch的 SimplePose,使用Tensorflow的 SimplePose等等。 模型的部署和服务: NVIDIA Triton服务器 , Pytorch服务 , Tensorflow服务 , AWS SageMaker , Google Cloud AI平台 。 但是,您可能想知道为什么我不建议使用更多自定义和更简单的Web框架(例如Flask , FastAPI , Tornado)将模型包装为API服务。 原因是,如果您不是设计整个系统的高级架构师,那么从长远来看,它们可能使您在可伸缩性,可维护性和可用性方面更加头痛。 但是,那只是我的意见,我可能是错的:)

So, let’s start the implementation.

因此,让我们开始执行。

检查演示 (Checking Demo)

First, we will start by checking the pretrained demo model example given in GluonCV whether we can run it simply in Google Colab.

首先,我们将从检查GluonCV中提供的预训练演示模型示例开始,以确定是否可以在Google Colab中简单地运行它。

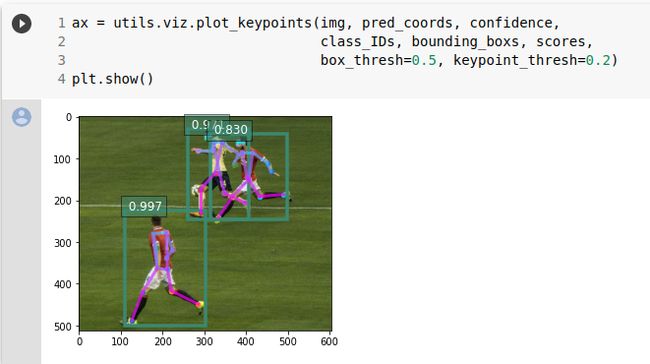

Checking Demo Code 检查演示代码 Final Result after execution in Google Colab 在Google Colab中执行后的最终结果As we run it in Google Colab, we see that demo has been successfully tested and ready for serving.

当我们在Google Colab中运行该演示时,我们看到该演示已成功测试并可以投放。

You see how easy it was to test the pretrained model :)

您会看到测试预训练模型非常容易:)

准备彩信服务 (Preparing MMS Service)

Now, we need to write our compatible service with MMS based on the above demo. So we look at Custom Service implementation from MMS docs:

现在,我们需要根据上述演示编写与MMS兼容的服务。 因此,我们从MMS doc看自定义服务的实现:

The above code template may seem scary for someone, but actually it is not and it is pretty straightforward as it is the base template for most of the ML inference use-cases. Let me explain:

上面的代码模板对于某些人来说似乎很吓人,但实际上并非如此,它非常直接,因为它是大多数ML推理用例的基础模板。 让我解释:

class ModelHandler

ModelHandler类

initializes () — the setup stage, where you initialize and load your model weights.

initializes() —设置阶段,在此阶段初始化并加载模型权重。

preprocess() — the first stage where the request is received and should be prepared for inference with some preprocessing.

preprocess() —接收请求的第一阶段,应准备好进行一些预处理的推断。

inference() — the inference stage where the real prediction comes in and it predicts the preprocessed data (in our case it predicts human joint coordinates from a picture)

inference() —实际预测进入的推理阶段,它预测预处理的数据(在我们的情况下,它是根据图片预测人的关节坐标)

postprocess() — the last stage where predicted output may be wrapped into some human-readable format and returned as a response

postprocess() -预测输出可以包装为某些人类可读格式并作为响应返回的最后阶段

handle() — a controller of a service that controls the flow of the inference from request to response generation

handle() —服务的控制器,控制从请求到响应生成的推理流程

As you are guessing now, we need to divide our example codebase from GluonCV into these 5 steps. We start by copying the whole MMS Custom Service example as handler.py and will modify it as shown below:

正如您现在所猜测的那样,我们需要将示例代码库从GluonCV划分为这5个步骤。 首先,将整个MMS定制服务示例复制为handler.py ,并将对其进行如下修改:

By the way, the ready repository of this post can be found here.

顺便说一句,可以在这里找到这篇文章的现成资料库。

1. initialize()—步骤 (1. initialize() — step)

handler.py

handler.py

We put the loading and initialization of models from the GluonCV example into our initialize() method. As I have stated at the beginning about human-pose models, this implementation also uses multi-stage processing that consists of object-detector and human-pose estimator models. For object-detector we use yolov3 and for the human-pose estimator, we use AlphaPose. So we get pretrained ones from GluonCV Model Zoo and initialize them in this step. Then in the last line, we reset our object-detector model for detecting only humans as our main purpose of this service is to find only human joint points.

我们将GluonCV示例中的模型加载和初始化放入我们的initialize()方法中。 正如我在开始时关于人体姿势模型所说的那样,此实现还使用了由对象检测器模型和人体姿势估计器模型组成的多阶段处理。 对于对象检测器,我们使用yolov3 ;对于人体姿势估计器,我们使用AlphaPose 。 因此,我们从GluonCV Model Zoo中获得了预训练的模型 ,并在此步骤中对其进行了初始化。 然后,在最后一行中,我们重置对象检测器模型以仅检测人,因为此服务的主要目的是仅查找人的关节点。

2. preprocess()-步骤 (2. preprocess() — step)

This is how could it look if we have just copy-pasted the code from the GluonCV example. But as we are receiving a request with bytes representing a picture in our MMS, this implementation is wrong and we have to slightly modify the code:

如果我们只是复制粘贴了GluonCV示例中的代码,这就是它的外观。 但是,由于我们在MMS中收到带有表示图片的字节的请求,因此此实现是错误的,我们必须对代码进行一些修改:

And this is how our final implementation of the preprocess() method should look like. First, we decode the image bytes from the request (in our case request is defined as a batch) to mxnet.NDArray which is supported multi-dimensional array format for MXNet and GluonCV. Second, we transform our image into yolov3 readable format. Basically, we do everything exactly wrong implementation wanted to do except downloading and reading the image.

这就是我们preprocess()方法的最终实现的样子。 首先 ,我们将请求中的图像字节(在本例中,请求定义为batch ) 解码为mxnet.NDArray ,这是MXNet和GluonCV支持的多维数组格式。 其次 ,我们将图像转换为yolov3可读格式。 基本上,除了下载和读取图像之外,我们所做的一切事情都是完全错误的实现。

3. inference()—步骤 (3. inference() — step)

We put the rest part of our GluonCV example to the inference() and then return the prediction results as the output of the function.

我们将GluonCV示例的其余部分放入inference() ,然后将预测结果作为函数的输出返回。

4. postprocess()-步骤 (4. postprocess() — step)

This is finally how we postprocess our inference_output and give it as a response from our MMS.

最终,这就是我们对inference_output进行后处理并作为MMS响应的方式。

We won’t modify the rest part of the handler.py as it already does its job.

我们不会修改handler.py的其余部分,因为它已经完成了工作。

准备基础设施 (Preparing Infrastructure)

Now our service implementation is ready and it is time to prepare the infrastructure to deploy to. We will use Docker for ease of configuration and not waste our precious time in tinkering and tweaking the infrastructure.

现在我们的服务实施已经准备就绪,现在该准备部署基础架构了。 我们将使用Docker简化配置,而不会浪费我们宝贵的时间来修改和调整基础架构。

Let’s dive into Dockerfile where we define our infrastructure as a code for MMS:

让我们深入研究Dockerfile ,在其中我们将基础架构定义为MMS的代码:

Dockerfile

Docker文件

We use awsdeeplearningteam/multi-model-server as our base image. Then we install GluonCV (upgrading the other 2 libraries for the sake of package compatibility requirements) as we fully depend on the GluonCV example.

我们使用awsdeeplearningteam / multi-model-server作为我们的基本映像。 然后,由于我们完全依赖于GluonCV示例,因此我们将安装GluonCV(为了封装兼容性要求而升级其他2个库)。

After finishing our Dockerfile, we move on the MMS configuration file for several adjustments:

在完成Dockerfile之后,我们继续进行MMS配置文件的一些调整:

config.properties

config.properties

This configuration file is downloaded from the MMS repository and changes that are made are as follows:

该配置文件是从MMS存储库下载的,所做的更改如下:

preload=true — Helps when you want to spin up several workers and you do not want for each worker to load your model separately, so a model is loaded before spinning up the model workers, and forked for any number of model workers.

preload = true —在您希望增加几个工作人员并且不希望每个工作人员分别加载模型时提供帮助,因此在旋转模型工作人员之前先加载模型,然后对任意数量的模型工作人员进行分叉。

default_workers_per_model=1— number of workers to create for each model that loaded at startup time. This is to make sure that only one worker is created per model and not to waste on resource allocation.

default_workers_per_model = 1 —为在启动时加载的每个模型创建的工作程序数。 这是为了确保每个模型仅创建一个工作程序,并且不会浪费资源。

Other important configuration decisions can be made by looking at the Advanced configuration of MMS.

通过查看MMS的高级配置,可以做出其他重要的配置决策。

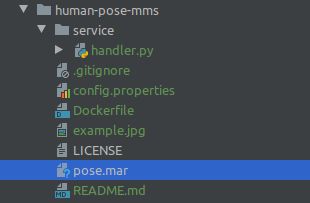

Now, we will run several commands, so before you start make sure that your project structure is the same as here and run all of these commands from the project root directory.

现在,我们将运行几个命令,因此在开始之前,请确保您的项目结构与此处相同,并从项目根目录运行所有这些命令。

We build our image for the MMS:

我们为MMS建立形象:

$ docker build -t human-pose-mms .We enter into the bash of the MMS to run one script to prepare our deployment model:

我们进入MMS的重击中,运行一个脚本来准备我们的部署模型:

$ docker run --rm -it -v $(pwd):/tmp human-pose-mms:latest bashWe run our script to prepare our deployment model:

我们运行脚本以准备我们的部署模型:

$ model-archiver --model-name pose --model-path /tmp/service --handler handler:handle --runtime python3 --export-path /tmpWe exit from the container by pressing CTRL-D.

我们通过按CTRL-D退出容器。

Then you will see a new pose.mar file created in your project root directory. We will use this file while starting our server.

然后,您将在项目根目录中看到一个新的pose.mar文件。 我们将在启动服务器时使用该文件。

This .mar file is the model format that the MMS reads and understands to handle inference requests. To learn more about .mar extension and model-archiver read here.

此.mar文件是MMS读取并理解以处理推理请求的模型格式。 要了解有关.mar扩展名和模型存档器的更多信息,请在此处阅读。

运行/测试服务器 (Running/Testing Server)

Finally, after endless, long, and boring explanations we are here to run and test our server. Hurrah!!!

最后,经过无休止,冗长而无聊的解释,我们在这里运行和测试我们的服务器。 欢呼!!!

Let’s start the server by running our ready container through terminal:

让我们通过终端运行我们准备好的容器来启动服务器:

$ docker run --rm -it -p 8080:8080 -p 8081:8081 -v $(pwd):/tmp mms-human-pose:latest multi-model-server --start --mms-config /tmp/config.properties --models posenet=pose.mar --model-store /tmpThis will start the MMS listening on 8080 and 8081 HTTP ports.

这将启动MMS在8080和8081 HTTP端口上的侦听。

Let’s test it now.

让我们现在测试一下。

By the way, when you run the container, you have to wait from several seconds to several minutes(depending on your internet speed and computer power) for MMS to initialize properly. This is needed because MMS downloads 2 models that we have stated above and it allocates memory for those models so model workers can handle them. We could have created persistence storage for models not to download them every time, but this would be out of the scope of this post.

顺便说一句,当您运行容器时,您必须等待几秒钟到几分钟(取决于您的Internet速度和计算机能力),才能使MMS正确初始化。 之所以需要这样做,是因为MMS下载了我们上面提到的2个模型,并为这些模型分配了内存,以便模型工作者可以处理它们。 我们可以为模型创建持久性存储,而不是每次都不下载它们,但这超出了本文的范围。

Then, we will open a terminal and send a request with the example image:

然后,我们将打开一个终端并发送带有示例图像的请求:

Joshua Earle on 约书亚·厄尔 ( Unsplash Unslash)摄$ curl -X POST http://localhost:8080/predictions/posenet/ -T example.jpgWe will get this kind of response:

我们将得到这种回应:

Yes, we see that our inference server is now working and ready to make predictions. Here in the generated response, we see 17 joint coordinates(based on AlphaPose) of the person in the picture and their corresponding confidence scores. If you want to learn more about the AlphaPose model, please visit the official AlphaPose implementation repository.

是的,我们看到推理服务器现在可以正常工作并可以进行预测了。 在生成的响应中,我们在图片中看到该人的17个联合坐标( 基于AlphaPose )及其相应的置信度得分。 如果您想了解有关AlphaPose模型的更多信息,请访问官方的AlphaPose实现库 。

Before using these prediction results you should keep in mind that our object detector which is yolov3 receives frames where height and width should be factors of 32. So every time before inferencing our picture, the picture is resized and these results that you see are not proportional to ground truth dimensions of the picture. You need to look into the method transform_test() that is used in the preprocess() step to learn about its resizing method.

在使用这些预测结果之前,您应该记住,我们的对象检测器yolov3接收到的帧的高度和宽度应为32的因子。因此,每次在推断图片之前,都会调整图片的大小,并且您看到的这些结果不成比例确定图片的真实尺寸。 您需要研究方法transform_test() 在preprocess()步骤中使用它来了解其调整大小的方法。

奖金 (Bonus)

But, for the sake of completeness of this post, let’s make something tangible. We will implement a simple client in python that works with our human-pose MMS service.

但是,为了这篇文章的完整性,让我们做些切实的事情。 我们将在python中实现一个与我们的人为MMS服务一起使用的简单客户端。

The following steps are needed to implement a client:

要实现客户端,需要执行以下步骤:

Rewriting GluonCV function

transform_test()of yolov3 with OpenCV用OpenCV重写yolov3的GluonCV函数

transform_test()Rewriting GluonCV function

plot_keypoints()with OpenCV用OpenCV重写GluonCV函数

plot_keypoints()- Writing HTTP client for request handling. 编写HTTP客户端以处理请求。

Let’s start it. By the way, you can check out the ready client here.

让我们开始吧。 顺便说一句,您可以在此处签出准备好的客户端。

Firstly, if we look inside the functiontransform_test() of GluonCV, it resizes, tensorifies, and normalizes the image for yolov3 input. But for our case, we just need to resize the image because as I stated above, we may get wrong coordinates in response from our MMS service if we do not resize the image in our client implementation.

首先,如果我们查看GluonCV的函数transform_test() ,它将为yolov3输入调整图像的大小,张量化和归一化。 但是对于我们的情况,我们只需要调整图像大小,因为如上所述,如果不在客户端实现中调整图像大小,则响应MMS服务可能会得到错误的坐标。

This is simply the conversion of transform_test() where we only need resizing. But you can look at the original implementation here.

这仅是transform_test()的转换,我们只需要调整大小即可。 但是您可以在这里查看原始的实现。

Secondly, if we look inside the function plot_keypoints() , we see arguments like class_ids, bounding_boxes, scores which are part of object-detector and we actually do not need them. Also, this function uses matplotlib, but in our case OpenCV so we also remove matplotlib usage.

其次,如果我们查看函数plot_keypoints() , plot_keypoints()看到诸如class_ids , bounding_boxes , scores类的参数,它们是对象检测器的一部分,实际上我们不需要它们。 另外,此函数使用matplotlib ,但在我们的情况下为OpenCV,因此我们也删除了matplotlib的用法。

Here is how we converted GluonCV implementation into OpenCV implementation. But you can look at the original implementation here.

这是我们将GluonCV实现转换为OpenCV实现的方式。 但是您可以在这里查看原始的实现。

Lastly, we implement a client that handles requests and shows the result.

最后,我们实现了一个处理请求并显示结果的客户端。

Except using OpenCV, we also use Typer for faster CLI implementations.

除了使用OpenCV,我们还使用Typer来实现更快的CLI实施。

Basically, that’s what we need for our simple client and let’s run it. Run the below script from the project root directory. By the way, do not forget to install these python packages with pip:

基本上,这就是我们的简单客户端所需要的,让我们运行它。 从项目根目录运行以下脚本。 顺便说一句,不要忘记使用pip安装这些python软件包 :

$ python client/cli.pyThis is what you get when you run the above command. Only colors may be different in your case, but the basic joints structure should be similar.

这是运行上述命令时得到的。 在您的情况下,只有颜色可能有所不同,但是基本的关节结构应该相似。

# project repositoryhttps://github.com/bedilbek/human-pose-mms注意 (Note)

My apologies if my codebase seems error-prone because it is error-prone. The reason is that I wanted to keep all of this explanation too simple as this post was for beginners entering into the tiniest part of ML infrastructure stuff. If I had written a more robust codebase, it would have been the longest post you have ever read in Medium(it is long though). That is why I highly not recommend using this example in your production environment!!! We are not taking into consideration batch configuration, strict input validation, error handling, context-based dynamic MMS setup, resource allocation, docker configuration, model storage persistence, and other miscellaneous but important stuff that needs to be done by MLOps, ML Infrastructure Engineer, or in general Software Engineer.

如果我的代码库因为容易出错而显得容易出错,我深表歉意。 原因是我想使所有解释都过于简单,因为这篇文章是针对初学者进入ML基础架构中最微小的部分。 如果我写了一个更强大的代码库,那将是您在Medium中读过的最长的文章(虽然很长)。 这就是为什么我极不建议您在生产环境中使用此示例的原因!!! 我们没有考虑批处理配置,严格的输入验证,错误处理,基于上下文的动态MMS设置,资源分配,docker配置,模型存储持久性以及ML基础结构工程师MLOps需要完成的其他杂项但重要的工作,或一般的软件工程师。

结论 (Conclusion)

What I really wanted to show here is that thinking about ML infrastructure should be one of your important priorities if you do not want to end up just using some frameworks/libraries in jupyter notebook and want to go beyond training/testing your model. And this is one of the real working ways of converting your jupyter notebook codebase into a servable and deployable environment with MMS.

我真正想在这里显示的是,如果您不想仅在jupyter Notebook中使用某些框架/库而不仅仅是训练/测试模型,那么思考ML基础架构应该是您的重要优先事项之一。 这是使用MMS将jupyter笔记本代码库转换为可服务且可部署的环境的实际工作方式之一。

翻译自: https://medium.com/swlh/serve-human-pose-on-mms-44fcb5239ea0

彩信猫 发送彩信失败