Hyper-parameter Optimization Based on Genetic Algorithms

Hyper-parameter Tuning | AutoML | RandomSearch & GridSearch

Most professional machine learning practitioners follow an ML pipeline as standard practice, to keep their work efficient and organized. A pipeline carries data from its raw format to useful information. Every module in this pipeline matters for producing quality results, and one of them is hyper-parameter tuning.


Most of us know that the usual way to approach hyper-parameter tuning is to use GridSearchCV or RandomizedSearchCV from the sklearn module. But apart from these algorithms, there are many other, more advanced methods for hyper-parameter tuning. That is what this article is about: an introduction to advanced hyper-parameter optimization, and when and how to use these algorithms to get the most out of them.
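As a point of reference for the two sklearn tools, here is a minimal sketch that runs both on the iris dataset. It assumes scikit-learn and SciPy are installed; the dataset, the random-forest model and the parameter ranges are illustrative choices, not the article's. Note that the scikit-learn class is named `RandomizedSearchCV`.

```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

X, y = load_iris(return_X_y=True)
model = RandomForestClassifier(n_estimators=50, random_state=0)

# Grid search: every combination in the grid is evaluated (3 x 3 = 9 candidates).
# Candidates are independent, so n_jobs=-1 could evaluate them in parallel.
grid = GridSearchCV(
    model,
    param_grid={"max_depth": [2, 4, 8], "min_samples_leaf": [1, 2, 4]},
    cv=3,
)
grid.fit(X, y)

# Random search: n_iter candidates are drawn from the given distributions.
rand = RandomizedSearchCV(
    model,
    param_distributions={"max_depth": randint(2, 9), "min_samples_leaf": randint(1, 5)},
    n_iter=9,
    cv=3,
    random_state=0,
)
rand.fit(X, y)

print("grid  :", grid.best_params_, round(grid.best_score_, 3))
print("random:", rand.best_params_, round(rand.best_score_, 3))
```

Both searches get the same budget of nine candidates; the grid spends it on a fixed 3 x 3 lattice, while the random search draws each candidate independently.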


Both algorithms, grid search and random search, are instances of uninformed search. Now, let's dive deeper!


Uninformed Search

In these algorithms, each iteration of hyper-parameter tuning does not learn from the previous iterations. This independence is what allows us to parallelize the work. However, it is not very efficient and costs a lot of computational power.


Random search samples hyperparameter combinations at random, typically from a uniform distribution over a preset list or search space, for a fixed number of iterations. It is good at testing a wide range of values and often reaches a very good combination quickly, but it does not guarantee to find the best combination.


On the other hand, grid search is guaranteed to find the best combination among the grid points it evaluates, but it can take a lot of time and its computational cost is high.


It may look like grid search is the better option compared to random search, but bear in mind that when the dimensionality is high, the number of combinations we have to search is enormous. For example, to grid-search ten boolean (yes/no) parameters you will have to test 1024 (2¹⁰) different combinations. This is why random search, sometimes combined with clever heuristics, is often used.
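To make the combinatorial explosion concrete, this sketch enumerates the grid of ten boolean hyper-parameters with the standard library and compares it with a fixed random-search budget. The parameter names flag_0 to flag_9 and the budget of 60 trials are hypothetical.

```python
import itertools
import random

# Ten boolean hyper-parameters (hypothetical names flag_0 to flag_9).
space = {f"flag_{i}": [True, False] for i in range(10)}

# Grid search must enumerate the full Cartesian product of all options.
grid = [dict(zip(space, values)) for values in itertools.product(*space.values())]
print(len(grid))  # 1024, i.e. 2 ** 10

# Random search evaluates a fixed budget of sampled combinations instead.
rng = random.Random(0)
budget = 60
samples = [{name: rng.choice(options) for name, options in space.items()} for _ in range(budget)]
print(len(samples))  # 60, no matter how large the grid is
```

Adding an eleventh boolean parameter would double the grid to 2048 combinations, while the random-search budget stays whatever we choose.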


Why Bring Randomness into Grid Search? [Mathematical Explanation]

Random search takes more of a stochastic search/optimization perspective: the reason we introduce noise (or some other form of stochasticity) into the process is to potentially bounce out of poor local minima. While this argument is more typically used to explain intuition in general optimization (like stochastic gradient descent for updating parameters, or learning temperature-based models), we can think of a human looking through the hyper-parameter space as simply solving a higher-level optimization problem. Since most would agree that these reasonably high-dimensional spaces lead to non-convex optimization, we humans, even armed with clever heuristics from previous research, can get stuck in local optima. Randomly exploring the search space might therefore give us better coverage and, more importantly, help us find better local optima.
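The argument above is about escaping local optima; a related and easily checked reason is coverage. In this toy sketch (an assumption made for illustration: two hyper-parameters on [0, 1], only the first of which affects the score), a 3 x 3 grid and nine random trials cost the same, but the grid tries only three distinct values of the parameter that matters.

```python
import itertools
import random

budget = 9  # same number of trials for both strategies

# A 3 x 3 grid over the unit square.
levels = [0.0, 0.5, 1.0]
grid_points = list(itertools.product(levels, levels))

# Nine uniformly random points over the same square.
rng = random.Random(42)
random_points = [(rng.random(), rng.random()) for _ in range(budget)]

# If only the first hyper-parameter influences the score, what counts is
# how many distinct values of it each strategy actually tried.
grid_distinct = len({x for x, _ in grid_points})
random_distinct = len({x for x, _ in random_points})
print(grid_distinct, random_distinct)  # 3 9
```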


So far, with grid and random search, we have been creating all the models at once and comparing their scores at the end to decide on the best model. An alternative approach is to build models sequentially, learning from each iteration. This approach is called informed search.


Informed Method: Coarse to Fine Tuning

A basic informed search methodology works as follows:

1. Random search
2. Find promising areas in the search space
3. Grid search in the smaller area
4. Continue until the optimal score is obtained

You could substitute (3) with further random searches before the grid search.
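The four steps can be sketched in plain Python. The `score` function below is a hypothetical stand-in for a cross-validated model score with a known peak, so the behaviour is easy to inspect; in real use each call would train and evaluate a model.

```python
import itertools
import random


def score(learning_rate: float, max_depth: int) -> float:
    """Hypothetical validation score that peaks at learning_rate=0.1, max_depth=6."""
    return -100 * (learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 6) ** 2


rng = random.Random(0)

# 1. Coarse random search over a wide space.
coarse = []
for _ in range(50):
    params = (rng.uniform(0.001, 1.0), rng.randint(1, 20))
    coarse.append((score(*params), params))

# 2. Promising area: the region spanned by the 5 best candidates.
top = sorted(coarse, reverse=True)[:5]
best_coarse_score, (best_lr, best_depth) = top[0]
lr_step = (max(p[0] for _, p in top) - min(p[0] for _, p in top)) / 10
d_lo = min(p[1] for _, p in top)
d_hi = max(p[1] for _, p in top)

# 3. Fine grid search in that smaller area, centred on the best coarse point.
lr_grid = [max(1e-4, best_lr + k * lr_step) for k in range(-5, 6)]
fine = [(score(lr, d), (lr, d)) for lr, d in itertools.product(lr_grid, range(d_lo, d_hi + 1))]
best_fine_score, best_fine_params = max(fine)

# 4. In practice, repeat steps 2 and 3 around the new best point until the score stops improving.
print(best_coarse_score, best_fine_score, best_fine_params)
```

Because the fine grid contains the best coarse candidate itself, the fine stage can never report a worse score than the coarse stage.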


Why Coarse to Fine?

Coarse-to-fine tuning combines the advantages of both grid and random search:


- The wide search capabilities of random search

- A deeper search once you know where a good spot is likely to be

There is no need to waste time on regions of the search space that are not giving good results. This makes better use of time and computational effort: we can iterate quickly, and performance can improve as well.


Informed Method: Bayesian Statistics

The most popular informed search method is Bayesian Optimization. Bayesian Optimization was originally designed to optimize black-box functions.


Bayes' rule is a basic theorem of probability theory and statistics. If you want to brush up on the terms used here, refer to this.


Bayes' Rule (Bayes' Theorem): a statistical method of using new evidence to iteratively update our beliefs about some outcome. In simpler words, it is used to calculate the probability of an event based on its association with another event.
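Written out in the notation used by the bullets that follow (A is the event or hypothesis, B the new evidence), the rule is:

$$
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}
$$

As a purely illustrative worked example with made-up numbers: with a prior P(A) = 0.3, a likelihood P(B|A) = 0.8 and a marginal likelihood P(B) = 0.5, the posterior is

$$
P(A \mid B) = \frac{0.8 \times 0.3}{0.5} = \frac{0.24}{0.5} = 0.48
$$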


- LHS is the probability of A, given that B has occurred. B is some new evidence. This is known as the 'posterior'.

- RHS is how we calculate it.

- P(A) is the 'prior': the initial hypothesis about the event. It differs from P(A|B), which is the probability given the new evidence.

- P(B) is the 'marginal likelihood': the probability of observing this new evidence.

- P(B|A) is the 'likelihood': the probability of observing the evidence, given the event we care about.

Applying the logic of Bayes' rule to hyperparameter tuning:

1. Pick a hyperparameter combination
2. Build a model
3. Get new evidence (i.e. the score of the model)
4. Update our beliefs and choose better hyperparameters in the next round


Bayesian hyperparameter tuning is relatively new, but it is very popular for larger and more complex tuning tasks because it works well for finding good hyperparameter combinations in these situations.


Note

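For more complex cases you might want to dig a bit deeper and explore all the details of Bayesian optimization. Classic Bayesian optimization with Gaussian processes is most natural for continuous hyper-parameters and needs extra handling for categorical ones, although TPE, the algorithm HyperOpt uses by default, supports categorical choices through hp.choice.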


Bayesian Hyper-parameter Tuning with HyperOpt

The HyperOpt package uses a form of Bayesian optimization for parameter tuning that lets us find the best parameters for a given model. It can optimize models with hundreds of parameters on a very large scale.


HyperOpt: Distributed Hyper-parameter Optimization. To learn more about this library and its parameters, feel free to visit here. And visit here for a quick tutorial with an adequate explanation of how to use HyperOpt for regression and classification.


Introducing the HyperOpt package

To undertake Bayesian hyperparameter tuning we need to:

1. Set the domain: our grid, i.e. the search space (with a bit of a twist)
2. Set the optimization algorithm (default: TPE)
3. Define the objective function to minimize: we use "1 - Accuracy"


Algorithms for Advanced Hyper-Parameter Optimization

To learn more about the optimization algorithm used, see the original paper on TPE (Tree-structured Parzen Estimator).


Sample Code for Using HyperOpt [Gradient Boosting]

Instead of fixed point values on a grid, HyperOpt's search space gives each hyperparameter a probability distribution over its values. Here, simple (quantized) uniform distributions are used, but the documentation lists many more.


```python
import numpy as np
from hyperopt import fmin, hp, tpe
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import cross_val_score

# Assumes X_train and y_train are already defined (the article's dataset is not shown).

# Set up space dictionary with specified hyperparameters
space = {
    'max_depth': hp.quniform('max_depth', 2, 10, 2),
    'min_samples_leaf': hp.quniform('min_samples_leaf', 2, 8, 2),
    'learning_rate': hp.uniform('learning_rate', 0.001, 0.9)
}

# Set up objective function: HyperOpt minimizes, so return 1 - mean CV accuracy
def objective(params):
    params = {
        'max_depth': int(params['max_depth']),  # quniform yields floats
        'min_samples_leaf': int(params['min_samples_leaf']),
        'learning_rate': params['learning_rate']
    }
    gbm_clf = GradientBoostingClassifier(n_estimators=100, **params)
    best_score = cross_val_score(gbm_clf, X_train, y_train, scoring='accuracy', cv=2, n_jobs=4).mean()
    loss = 1 - best_score
    return loss

# Run the algorithm
best_result = fmin(
    fn=objective,
    space=space,
    max_evals=100,
    # hyperopt >= 0.2.7 expects a NumPy Generator; older versions took np.random.RandomState(73)
    rstate=np.random.default_rng(73),
    algo=tpe.suggest
)

print(best_result)

''' OUTPUT (as reported in the original run)
{'learning_rate': 0.023790662701828766, 'max_depth': 2.0, 'min_samples_leaf': 8.0}
'''
```

To really see this in action, try a larger search space, with more trials, more CV folds and a larger dataset.


For practical implementations of HyperOpt, refer to:

[1] Hyperopt Bayesian Optimization for Xgboost and Neural network
[2] Tuning using HyperOpt in python


Informed Method: Genetic Algorithms

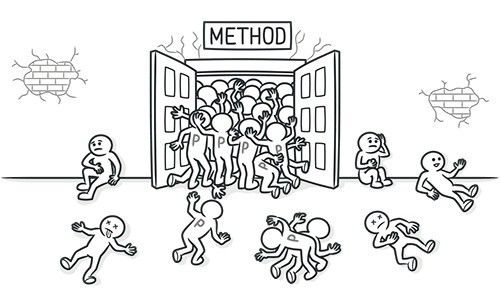

Why does this work well?

1. It allows us to learn from previous iterations, just like Bayesian hyperparameter tuning.
2. It has the additional advantage of some randomness.
3. TPOT automates the most tedious part of machine learning by intelligently exploring thousands of possible pipelines to find the best one for your data.
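To show the mechanics behind this (selection, crossover and mutation across generations), here is a deliberately small genetic algorithm in plain Python. It is not TPOT's implementation, which evolves whole pipelines with genetic programming; the search space and the `fitness` function are hypothetical stand-ins for a model's cross-validated score, and the argument names only mirror TPOT's generations, population_size and offspring_size.

```python
import random

# Hypothetical search space.
SPACE = {
    "max_depth": list(range(1, 21)),
    "min_samples_leaf": list(range(1, 21)),
}


def fitness(ind: dict) -> float:
    """Toy score (stand-in for CV accuracy) that peaks at max_depth=8, min_samples_leaf=3."""
    return -abs(ind["max_depth"] - 8) - abs(ind["min_samples_leaf"] - 3)


def random_individual(rng: random.Random) -> dict:
    return {name: rng.choice(values) for name, values in SPACE.items()}


def crossover(a: dict, b: dict, rng: random.Random) -> dict:
    # Uniform crossover: each gene is inherited from one of the two parents.
    return {name: rng.choice([a[name], b[name]]) for name in SPACE}


def mutate(ind: dict, rng: random.Random, rate: float = 0.2) -> dict:
    # With probability `rate`, a gene is replaced by a random value (the extra randomness).
    return {name: rng.choice(SPACE[name]) if rng.random() < rate else value
            for name, value in ind.items()}


def evolve(generations: int = 15, population_size: int = 10,
           offspring_size: int = 10, seed: int = 0) -> list:
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Breed offspring from random pairs of current individuals, then mutate them.
        offspring = [mutate(crossover(*rng.sample(population, 2), rng), rng)
                     for _ in range(offspring_size)]
        # Selection: parents and offspring compete; the fittest survive.
        population = sorted(population + offspring, key=fitness, reverse=True)[:population_size]
    return population


best = evolve()[0]
print(best, fitness(best))
```

Because parents compete with their offspring for a place in the next population, the best score never gets worse from one generation to the next; that is the "learning from previous iterations" part, while crossover and mutation supply the randomness.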


A Useful Library for Genetic Hyperparameter Tuning: TPOT

TPOT is a Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming. Consider TPOT your Data Science Assistant for advanced optimization.


TPOT pipelines include not only the model (or multiple models) but also feature processing and other steps of the process. Plus, it returns the Python code of the final pipeline for you! TPOT is designed to run for many hours to find the best model. In practice you should use a much larger population and offspring size, as well as hundreds more generations, to find a good model.


TPOT Components (Key Arguments)

- generations: the number of iterations to run training for

- population_size: the number of models to keep after each iteration

- offspring_size: the number of models to produce in each iteration

- mutation_rate: the proportion of pipelines to apply randomness to

- crossover_rate: the proportion of pipelines to breed in each iteration

- scoring: the function used to determine the best models

- cv: the cross-validation strategy to use

```python
from tpot import TPOTClassifier

# Classic TPOT (0.x) API; X_train, X_test, y_train, y_test are assumed to be defined.
# Create the tpot classifier
tpot_clf = TPOTClassifier(generations=3, population_size=4,
                          offspring_size=3, scoring="accuracy",
                          verbosity=2, random_state=73, cv=2)

# Fit the classifier to the training data
tpot_clf.fit(X_train, y_train)

# Score on the test set
print(tpot_clf.score(X_test, y_test))
```

We keep the default values for mutation_rate and crossover_rate (0.9 and 0.1 in classic TPOT), as they are best left at their defaults without deeper knowledge of genetic programming.


Notice: No Algorithm-Specific Hyperparameters?

That is because TPOT is an open-source library for performing AutoML in Python: it selects the algorithm and its hyperparameters for us. Automated Machine Learning (AutoML) refers to techniques for automatically discovering well-performing models for predictive modeling tasks with very little user involvement.


Output for the above code snippet

TPOT is quite unstable when it is not run for a reasonable amount of time. The code snippets below show this instability. Only the random state has been changed between the three runs, yet the output shows major differences in the chosen pipeline, i.e. the model and its hyperparameters.


```python
# Create the tpot classifier
tpot_clf = TPOTClassifier(generations=2, population_size=4, offspring_size=3, scoring='accuracy', cv=2,
                          verbosity=2, random_state=42)

# Fit the classifier to the training data
tpot_clf.fit(X_train, y_train)

# Score on the test set
print(tpot_clf.score(X_test, y_test))

''' OUTPUT
Warning: xgboost.XGBClassifier is not available and will not be used by TPOT.
Generation 1 - Current best internal CV score: 0.7549688742218555
Generation 2 - Current best internal CV score: 0.7549688742218555
Best pipeline: DecisionTreeClassifier(input_matrix, criterion=gini, max_depth=7, min_samples_leaf=11, min_samples_split=12)
0.75
'''
```

```python
# Create the tpot classifier
tpot_clf = TPOTClassifier(generations=2, population_size=4, offspring_size=3, scoring='accuracy', cv=2,
                          verbosity=2, random_state=122)

# Fit the classifier to the training data
tpot_clf.fit(X_train, y_train)

# Score on the test set
print(tpot_clf.score(X_test, y_test))

''' OUTPUT
Warning: xgboost.XGBClassifier is not available and will not be used by TPOT.
Generation 1 - Current best internal CV score: 0.7675066876671917
Generation 2 - Current best internal CV score: 0.7675066876671917
Best pipeline: KNeighborsClassifier(MaxAbsScaler(input_matrix), n_neighbors=57, p=1, weights=distance)
0.75
'''
```

```python
# Create the tpot classifier
tpot_clf = TPOTClassifier(generations=2, population_size=4, offspring_size=3, scoring='accuracy', cv=2,
                          verbosity=2, random_state=99)

# Fit the classifier to the training data
tpot_clf.fit(X_train, y_train)

# Score on the test set
print(tpot_clf.score(X_test, y_test))

''' OUTPUT
Warning: xgboost.XGBClassifier is not available and will not be used by TPOT.
Generation 1 - Current best internal CV score: 0.8075326883172079
Generation 2 - Current best internal CV score: 0.8075326883172079
Best pipeline: RandomForestClassifier(SelectFwe(input_matrix, alpha=0.033), bootstrap=False, criterion=gini, max_features=1.0, min_samples_leaf=19, min_samples_split=10, n_estimators=100)
0.78
'''
```

In the output of the first example, you can see the score produced by the chosen model (in that case a version of Naive Bayes) over each generation, followed by the final accuracy score and the hyperparameters chosen for the final model. This is a great first example of using TPOT for automated hyperparameter tuning. You can now extend it on your own and build great machine learning models!


To understand more about TPOT:

[1] TPOT for Automated Machine Learning in Python
[2] For more information on using TPOT, visit the documentation.


Summary

In informed search, each iteration learns from the previous ones, whereas in grid and random search all the models are built at once and the best one is picked at the end. For small datasets, grid search or random search is fast and sufficient. AutoML approaches provide a neat way to select the hyperparameters that improve a model's performance.


The informed methods explored were:

1. 'Coarse to Fine' (iterative random search, then grid search).
2. Bayesian hyperparameter tuning, updating beliefs using evidence on model performance (HyperOpt).
3. Genetic algorithms, evolving your models over generations (TPOT).


I hope you’ve learned some useful methodologies for your future work undertaking hyperparameter tuning in Python!



Translated from: https://medium.com/analytics-vidhya/algorithms-for-advanced-hyper-parameter-optimization-tuning-cebea0baa5d6
