吴恩达深度学习学习笔记——C4W2——深度卷积网络实例探究——作业2——残差网络

这里主要梳理一下作业的主要内容和思路,完整作业文件可参考:

https://github.com/pandenghuang/Andrew-Ng-Deep-Learning-notes/tree/master/assignments/C4W2/Excercise/KerasTutorial

作业完整截图,参考本文结尾:作业完整截图。

Residual Networks(残差网络)

Welcome to the second assignment of this week! You will learn how to build very deep convolutional networks, using Residual Networks (ResNets). In theory, very deep networks can represent very complex functions; but in practice, they are hard to train. Residual Networks, introduced by He et al., allow you to train much deeper networks than were previously practically feasible.

In this assignment, you will:

- Implement the basic building blocks of ResNets.

- Put together these building blocks to implement and train a state-of-the-art neural network for image classification.

This assignment will be done in Keras.

Before jumping into the problem, let's run the cell below to load the required packages.

...

1 - The problem of very deep neural networks(深度神经网络面临的问题)

Last week, you built your first convolutional neural network. In recent years, neural networks have become deeper, with state-of-the-art networks going from just a few layers (e.g., AlexNet) to over a hundred layers.

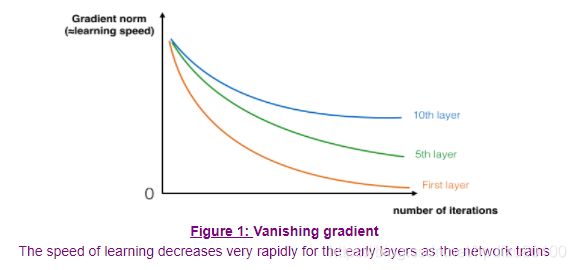

The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (at the lower layers) to very complex features (at the deeper layers). However, using a deeper network doesn't always help. A huge barrier to training them is vanishing gradients: very deep networks often have a gradient signal that goes to zero quickly, thus making gradient descent unbearably slow. More specifically, during gradient descent, as you backprop from the final layer back to the first layer, you are multiplying by the weight matrix on each step, and thus the gradient can decrease exponentially quickly to zero (or, in rare cases, grow exponentially quickly and "explode" to take very large values).

During training, you might therefore see the magnitude (or norm) of the gradient for the earlier layers descrease to zero very rapidly as training proceeds:

2 - Building a Residual Network(搭建残差网络)

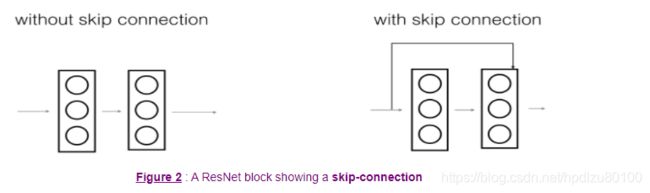

In ResNets, a "shortcut" or a "skip connection" allows the gradient to be directly backpropagated to earlier layers:

The image on the left shows the "main path" through the network. The image on the right adds a shortcut to the main path. By stacking these ResNet blocks on top of each other, you can form a very deep network.

We also saw in lecture that having ResNet blocks with the shortcut also makes it very easy for one of the blocks to learn an identity function. This means that you can stack on additional ResNet blocks with little risk of harming training set performance. (There is also some evidence that the ease of learning an identity function--even more than skip connections helping with vanishing gradients--accounts for ResNets' remarkable performance.)

Two main types of blocks are used in a ResNet, depending mainly on whether the input/output dimensions are same or different. You are going to implement both of them.

2.1 - The identity block(恒等块/残差块)

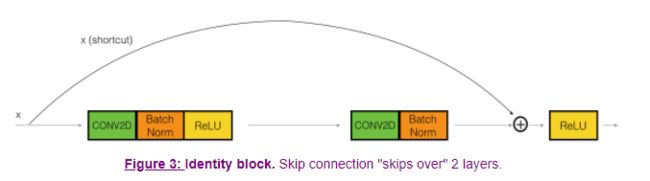

The identity block is the standard block used in ResNets, and corresponds to the case where the input activation (say []a[l]) has the same dimension as the output activation (say [+2]). To flesh out the different steps of what happens in a ResNet's identity block, here is an alternative diagram showing the individual steps:

The upper path is the "shortcut path." The lower path is the "main path." In this diagram, we have also made explicit the CONV2D and ReLU steps in each layer. To speed up training we have also added a BatchNorm step. Don't worry about this being complicated to implement--you'll see that BatchNorm is just one line of code in Keras!

...

2.2 - The convolutional block(卷积块)

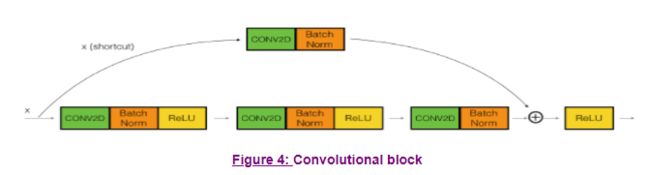

You've implemented the ResNet identity block. Next, the ResNet "convolutional block" is the other type of block. You can use this type of block when the input and output dimensions don't match up. The difference with the identity block is that there is a CONV2D layer in the shortcut path:

The CONV2D layer in the shortcut path is used to resize the input to a different dimension, so that the dimensions match up in the final addition needed to add the shortcut value back to the main path. (This plays a similar role as the matrix discussed in lecture.) For example, to reduce the activation dimensions's height and width by a factor of 2, you can use a 1x1 convolution with a stride of 2. The CONV2D layer on the shortcut path does not use any non-linear activation function. Its main role is to just apply a (learned) linear function that reduces the dimension of the input, so that the dimensions match up for the later addition step.

...

3 - Building your first ResNet model (50 layers)(搭建你的第一个残差网络模型(50层))

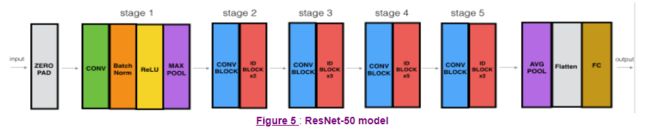

You now have the necessary blocks to build a very deep ResNet. The following figure describes in detail the architecture of this neural network. "ID BLOCK" in the diagram stands for "Identity block," and "ID BLOCK x3" means you should stack 3 identity blocks together.

The details of this ResNet-50 model are:

- Zero-padding pads the input with a pad of (3,3)

- Stage 1:

- The 2D Convolution has 64 filters of shape (7,7) and uses a stride of (2,2). Its name is "conv1".

- BatchNorm is applied to the channels axis of the input.

- MaxPooling uses a (3,3) window and a (2,2) stride.

- Stage 2:

- The convolutional block uses three set of filters of size [64,64,256], "f" is 3, "s" is 1 and the block is "a".

- The 2 identity blocks use three set of filters of size [64,64,256], "f" is 3 and the blocks are "b" and "c".

- Stage 3:

- The convolutional block uses three set of filters of size [128,128,512], "f" is 3, "s" is 2 and the block is "a".

- The 3 identity blocks use three set of filters of size [128,128,512], "f" is 3 and the blocks are "b", "c" and "d".

...

4 - Test on your own image (Optional/Ungraded)(使用自己的图片测试)

If you wish, you can also take a picture of your own hand and see the output of the model. To do this:

1. Click on "File" in the upper bar of this notebook, then click "Open" to go on your Coursera Hub.

2. Add your image to this Jupyter Notebook's directory, in the "images" folder

3. Write your image's name in the following code

4. Run the code and check if the algorithm is right! ...

What you should remember:

- Very deep "plain" networks don't work in practice because they are hard to train due to vanishing gradients.

- The skip-connections help to address the Vanishing Gradient problem. They also make it easy for a ResNet block to learn an identity function.

- There are two main type of blocks: The identity block and the convolutional block.

- Very deep Residual Networks are built by stacking these blocks together.

作业完整截图: