wandb使用

wandb和tensorboard最大区别是tensorboard的数据是存在本地的,wandb是存在wandb远端服务器,wandb会为开发真创建一个账户并生成登陆api的key。运行自己程序之前需要先登陆wandb

第一步 安装wandb

pip install wandb

第二步 登陆wandb网页,注册账户并获取api key

wandb注册首页, 一般用google账户或者github账户注册都行, 注册时注意保存username

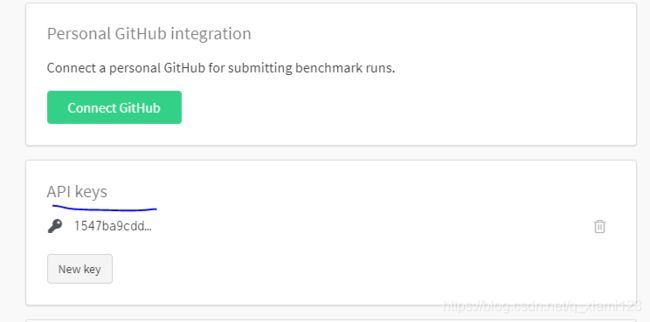

注册成功会在这个页面有注册信息展示,api key也是在这个页面获取的

第三步 复制api key和用户名到你自己机器上面

在自己机器终端上运行 wandb login命令, 会在/root/.netrc 下生成.netrc文件, 修改这个文件,这里的password就是第二步获取的api keys

第四步 再次登陆验证

第三步配置完成在终端中重新键入 wandb login, 由于第三步已经自动配置登陆用户名和密码,所以在交互窗口什么也不用做,出现下面提示,证明登陆成功

![]()

第五步 示例验证

运行下面这段代码,验证下参数可视化情况。

from __future__ import print_function

import argparse

import random # to set the python random seed

import numpy # to set the numpy random seed

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

# Ignore excessive warnings

import logging

logging.propagate = False

logging.getLogger().setLevel(logging.ERROR)

# WandB – Import the wandb library

import wandb

# WandB – Login to your wandb account so you can log all your metrics

# 定义Convolutional Neural Network:

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# In our constructor, we define our neural network architecture that we'll use in the forward pass.

# Conv2d() adds a convolution layer that generates 2 dimensional feature maps

# to learn different aspects of our image.

self.conv1 = nn.Conv2d(3, 6, kernel_size=5)

self.conv2 = nn.Conv2d(6, 16, kernel_size=5)

# Linear(x,y) creates dense, fully connected layers with x inputs and y outputs.

# Linear layers simply output the dot product of our inputs and weights.

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

# Here we feed the feature maps from the convolutional layers into a max_pool2d layer.

# The max_pool2d layer reduces the size of the image representation our convolutional layers learnt,

# and in doing so it reduces the number of parameters and computations the network needs to perform.

# Finally we apply the relu activation function which gives us max(0, max_pool2d_output)

x = F.relu(F.max_pool2d(self.conv1(x), 2))

x = F.relu(F.max_pool2d(self.conv2(x), 2))

# Reshapes x into size (-1, 16 * 5 * 5)

# so we can feed the convolution layer outputs into our fully connected layer.

x = x.view(-1, 16 * 5 * 5)

# We apply the relu activation function and dropout to the output of our fully connected layers.

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

# Finally we apply the softmax function to squash the probabilities of each class (0-9)

# and ensure they add to 1.

return F.log_softmax(x, dim=1)

def train(config, model, device, train_loader, optimizer, epoch):

# switch model to training mode. This is necessary for layers like dropout, batchNorm etc.

# which behave differently in training and evaluation mode.

model.train()

# we loop over the data iterator, and feed the inputs to the network and adjust the weights.

for batch_id, (data, target) in enumerate(train_loader):

if batch_id > 20:

break

# Loop the input features and labels from the training dataset.

data, target = data.to(device), target.to(device)

# Reset the gradients to 0 for all learnable weight parameters

optimizer.zero_grad()

# Forward pass: Pass image data from training dataset, make predictions

# about class image belongs to (0-9 in this case).

output = model(data)

# Define our loss function, and compute the loss

loss = F.nll_loss(output, target)

# Backward pass:compute the gradients of loss,the model's parameters

loss.backward()

# update the neural network weights

optimizer.step()

# wandb.log用来记录一些日志(accuracy,loss and epoch), 便于随时查看网路的性能

def test(args, model, device, test_loader, classes):

model.eval()

# switch model to evaluation mode.

# This is necessary for layers like dropout, batchNorm etc. which behave differently in training and evaluation mode

test_loss = 0

correct = 0

example_images = []

with torch.no_grad():

for data, target in test_loader:

# Load the input features and labels from the test dataset

data, target = data.to(device), target.to(device)

# Make predictions: Pass image data from test dataset,

# make predictions about class image belongs to(0-9 in this case)

output = model(data)

# Compute the loss sum up batch loss

test_loss += F.nll_loss(output, target, reduction='sum').item()

# Get the index of the max log-probability

pred = output.max(1, keepdim=True)[1]

correct += pred.eq(target.view_as(pred)).sum().item()

# Log images in your test dataset automatically,

# along with predicted and true labels by passing pytorch tensors with image data into wandb.

example_images.append(wandb.Image(

data[0], caption="Pred:{} Truth:{}".format(classes[pred[0].item()], classes[target[0]])))

# wandb.log(a_dict) logs the keys and values of the dictionary passed in and associates the values with a step.

# You can log anything by passing it to wandb.log(),

# including histograms, custom matplotlib objects, images, video, text, tables, html, pointclounds and other 3D objects.

# Here we use it to log test accuracy, loss and some test images (along with their true and predicted labels).

wandb.log({

"Examples": example_images,

"Test Accuracy": 100. * correct / len(test_loader.dataset),

"Test Loss": test_loss

})

# 初始化一个wandb run, 并设置超参数

# Initialize a new run

wandb.init(project="pytorch-intro")

wandb.watch_called = False # Re-run the model without restarting the runtime, unnecessary after our next release

# config is a variable that holds and saves hyper parameters and inputs

config = wandb.config # Initialize config

config.batch_size = 4 # input batch size for training (default:64)

config.test_batch_size = 10 # input batch size for testing(default:1000)

config.epochs = 50 # number of epochs to train(default:10)

config.lr = 0.1 # learning rate(default:0.01)

config.momentum = 0.1 # SGD momentum(default:0.5)

config.no_cuda = False # disables CUDA training

config.seed = 42 # random seed(default:42)

config.log_interval = 10 # how many batches to wait before logging training status

def main():

use_cuda = not config.no_cuda and torch.cuda.is_available()

device = torch.device("cuda:0" if use_cuda else "cpu")

kwargs = {'num_workers': 1, 'pin_memory': True} if use_cuda else {}

# Set random seeds and deterministic pytorch for reproducibility

# random.seed(config.seed) # python random seed

torch.manual_seed(config.seed) # pytorch random seed

# numpy.random.seed(config.seed) # numpy random seed

torch.backends.cudnn.deterministic = True

# Load the dataset: We're training our CNN on CIFAR10.

# First we define the transformations to apply to our images.

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

# Now we load our training and test datasets and apply the transformations defined above

train_loader = DataLoader(datasets.CIFAR10(

root='dataset',

train=True,

download=True,

transform=transform

), batch_size=config.batch_size, shuffle=True, **kwargs)

test_loader = DataLoader(datasets.CIFAR10(

root='dataset',

train=False,

download=True,

transform=transform

), batch_size=config.batch_size, shuffle=False, **kwargs)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Initialize our model, recursively go over all modules and convert their parameters

# and buffers to CUDA tensors (if device is set to cuda)

model = Net().to(device)

optimizer = optim.SGD(model.parameters(), lr=config.lr, momentum=config.momentum)

# wandb.watch() automatically fetches all layer dimensions, gradients, model parameters

# and logs them automatically to your dashboard.

# using log="all" log histograms of parameter values in addition to gradients

wandb.watch(model, log="all")

for epoch in range(1, config.epochs + 1):

train(config, model, device, train_loader, optimizer, epoch)

test(config, model, device, test_loader, classes)

# Save the model checkpoint. This automatically saves a file to the cloud

torch.save(model.state_dict(), 'model.h5')

wandb.save('model.h5')

if __name__ == '__main__':

main()

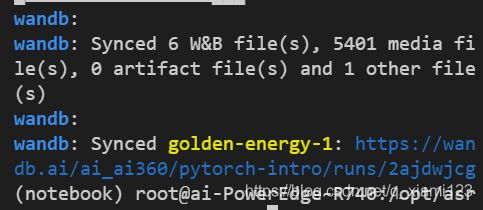

上面这段代码运行结束以后会给一个远程链接

复制上面这个链接到浏览器或者直接双击打开,来到这个展示界面,下面就能愉快使用wandb了

第六步 复盘

综合来看,除了注册耗时以外,wandb还是比较轻量好用。在原生代码里只需要设置这几个地方

- import wandb

- 想要记录数据地方,比如test函数里面

wandb.log({

"Examples": example_images,

"Test Accuracy": 100. * correct / len(test_loader.dataset),

"Test Loss": test_loss

})

- 超参数配置:config其实是一个class, 但充当字典使用

# config is a variable that holds and saves hyper parameters and inputs

config = wandb.config # Initialize config

config.batch_size = 4 # input batch size for training (default:64)

config.test_batch_size = 10 # input batch size for testing(default:1000)

config.epochs = 50 # number of epochs to train(default:10)

config.lr = 0.1 # learning rate(default:0.01)

config.momentum = 0.1 # SGD momentum(default:0.5)

config.no_cuda = False # disables CUDA training

config.seed = 42 # random seed(default:42)

- 训练时候记录数据和训练结束保存模型

wandb.watch(model, log="all")

for epoch in range(1, config.epochs + 1):

train(config, model, device, train_loader, optimizer, epoch)

test(config, model, device, test_loader, classes)

# Save the model checkpoint. This automatically saves a file to the cloud

torch.save(model.state_dict(), 'model.h5')

wandb.save('model.h5')

以上就是wandb使用基本记录,由于本人也是才开始使用,暂时没有一些高级trick, 后续如果有高阶内容会继续更新