表情识别:

如果有其他图像处理图像识别或者深度学习的作业或者毕设需要代做的话,可以联系我的qq1735375343,价格公道,无需押金,做完以后再付款!!!

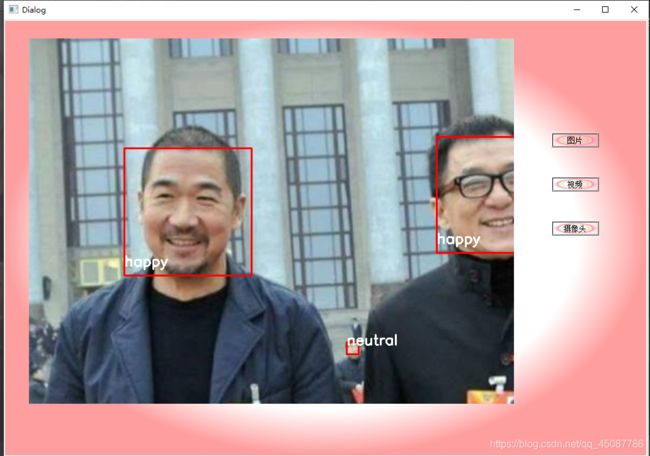

本博文是基于tensorflow的mtcnn算法来检测人脸,效果超好,然后用fer2013数据集训练一个表情识别的网络,最后将检测的人脸进行情绪识别。一共可以识别以下7种表情:

angry

disgust

fear

happy

sad

surprise

neutral

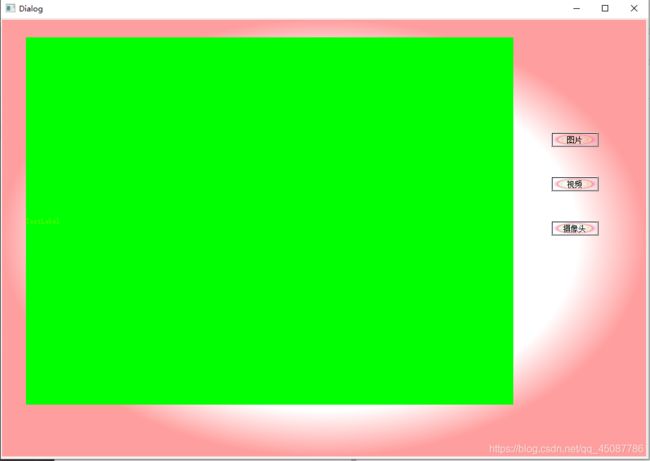

可以支持图片视频和摄像头,多人脸的情绪识别。

下面是部分代码,如需要全部代码可自行下载:

import os

import cv2

import numpy as np

from keras.applications.imagenet_utils import preprocess_input

from model_bq import mini_XCEPTION

import utils.utils as utils

from net.mobilenet import MobileNet

from net.mtcnn import mtcnn

def preprocess_input(x, v2=True):

x = x.astype('float32')

x = x / 255.0

if v2:

x = x - 0.5

x = x * 2.0

return x

class face_rec():

def __init__(self):

#-------------------------#

# 创建mtcnn的模型

# 用于检测人脸

#-------------------------#

self.mtcnn_model = mtcnn()

self.threshold = [0.5,0.6,0.8]

#-------------------------#

# 创建mobilenet的模型

# 用于判断是否佩戴口罩

#-------------------------#

self.classes_path = "model_data/classes.txt"

self.class_names = self._get_class()

self.Crop_HEIGHT = 224

self.Crop_WIDTH = 224

self.NUM_CLASSES = len(self.class_names)

self.mask_model = mini_XCEPTION(input_shape=(48,48,1),num_classes=7)

self.mask_model.load_weights("./model_data/ep122-loss0.845-val_loss0.971.h5")

def _get_class(self):

classes_path = os.path.expanduser(self.classes_path)

with open(classes_path) as f:

class_names = f.readlines()

class_names = [c.strip() for c in class_names]

return class_names

def recognize(self,draw):

height,width,_ = np.shape(draw)

draw_rgb = cv2.cvtColor(draw,cv2.COLOR_BGR2RGB)

#--------------------------------#

# 检测人脸

#--------------------------------#

rectangles = self.mtcnn_model.detectFace(draw_rgb, self.threshold)

if len(rectangles)==0:

return

rectangles = np.array(rectangles,dtype=np.int32)

rectangles_temp = utils.rect2square(rectangles)

rectangles_temp[:, [0,2]] = np.clip(rectangles_temp[:, [0,2]], 0, width)

rectangles_temp[:, [1,3]] = np.clip(rectangles_temp[:, [1,3]], 0, height)

#-----------------------------------------------#

# 对检测到的人脸进行编码

#-----------------------------------------------#

classes_all = []

for rectangle in rectangles_temp:

#---------------#

# 截取图像

#---------------#

landmark = np.reshape(rectangle[5:15], (5,2)) - np.array([int(rectangle[0]), int(rectangle[1])])

crop_img = draw_rgb[int(rectangle[1]):int(rectangle[3]), int(rectangle[0]):int(rectangle[2])]

#-----------------------------------------------#

# 利用人脸关键点进行人脸对齐

#-----------------------------------------------#

crop_img,_ = utils.Alignment_1(crop_img,landmark)

crop_img = cv2.resize(crop_img, (self.Crop_WIDTH,self.Crop_HEIGHT))

#crop_img = preprocess_input(np.reshape(np.array(crop_img, np.float64),[1, self.Crop_HEIGHT, self.Crop_WIDTH, 3]))

new=cv2.cvtColor(crop_img,cv2.COLOR_BGR2GRAY)

new=cv2.resize(new,(48,48))

new=np.reshape(new,(1,48,48,1))

new=preprocess_input(new)

classes = self.class_names[np.argmax(self.mask_model.predict(new)[0])]

classes_all.append(classes)

rectangles = rectangles[:, 0:4]

#-----------------------------------------------#

# 画框~!~

#-----------------------------------------------#

for (left, top, right, bottom), c in zip(rectangles,classes_all):

cv2.rectangle(draw, (left, top), (right, bottom), (0, 0, 255), 2)

font = cv2.FONT_HERSHEY_SIMPLEX

cv2.putText(draw, c, (left , bottom - 15), font, 0.75, (255, 255, 255), 2)

return draw

if __name__ == "__main__":

dududu = face_rec()

draw=cv2.imread(r'C:\Users\Administrator\Desktop/1.png')

dududu.recognize(draw)

#video_capture.release()

cv2.imshow('a12',draw)

cv2.waitKey(0)

cv2.destroyAllWindows()

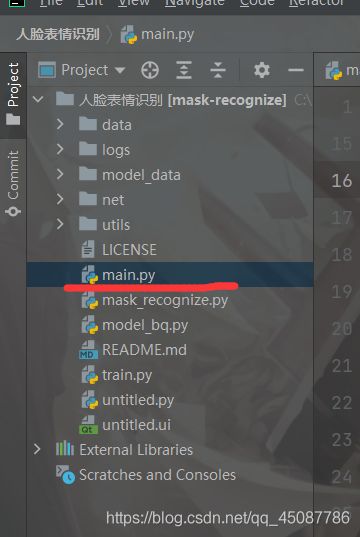

main.py

from mask_recognize import *

import sys, cv2, time

from untitled import Ui_Dialog

from PyQt5 import QtCore, QtGui, QtWidgets

from PyQt5.QtWidgets import QFileDialog,QTabWidget

from PyQt5.QtCore import QTimer, QThread, pyqtSignal, Qt

from PyQt5.QtGui import QPixmap, QImage

from PyQt5.QtWidgets import QLabel,QWidget

dududu = face_rec()

class mywindow(QTabWidget,Ui_Dialog): #这个窗口继承了用QtDesignner 绘制的窗口

def __init__(self):

super(mywindow,self).__init__()

self.setupUi(self)

self.pushButton_2.clicked.connect(self.videoprocessing)

self.pushButton.clicked.connect(self.picture)

self.pushButton_3.clicked.connect(self.videoprocessing)

def picture(self):

picName, picType = QFileDialog.getOpenFileName(self,

"打开图片",

"",

" *.jpg;;*.png;;*.jpeg;;*.bmp;;All Files (*)")

src=cv2.imread(picName)

try:

dududu.recognize(src)

except:

return

src=self.cv_qt(src)

self.label.setPixmap(QPixmap.fromImage(src))

def cv_qt(self,src):

h,w,d=src.shape

bytesperline=d*w

# self.src=cv.cvtColor(self.src,cv.COLOR_BGR2RGB)

qt_image=QImage(src.data,w,h,bytesperline,QImage.Format_RGB888).rgbSwapped()

return qt_image

#self.label.setPixmap(QPixmap.fromImage(self.qt_image))

def videoprocessing(self):

button = self.sender()

print(button.objectName())

global videoName #在这里设置全局变量以便在线程中使用

if button.objectName()=='pushButton_2':

videoName,videoType= QFileDialog.getOpenFileName(self,

"打开视频",

"",

#" *.jpg;;*.png;;*.jpeg;;*.bmp")

" *.mp4;;*.avi;;All Files (*)")

elif button.objectName()=='pushButton_3':

videoName=0#'http://admin:[email protected]:8081/'

#cap = cv2.VideoCapture(str(videoName))

th = Thread(self)

th.changePixmap.connect(self.setImage)

th.start()

def setImage(self, image):

self.label.setPixmap(QPixmap.fromImage(image))

# def imageprocessing(self):

# print("hehe")

# imgName,imgType= QFileDialog.getOpenFileName(self,

# "打开图片",

# "",

# #" *.jpg;;*.png;;*.jpeg;;*.bmp")

# " *.jpg;;*.png;;*.jpeg;;*.bmp;;All Files (*)")

#

# #利用qlabel显示图片

# print(str(imgName))

# png = QtGui.QPixmap(imgName).scaled(self.label_2.width(), self.label_2.height())#适应设计label时的大小

# self.label_2.setPixmap(png)

class Thread(QThread):#采用线程来播放视频

changePixmap = pyqtSignal(QtGui.QImage)

def run(self):

cap = cv2.VideoCapture(videoName)

print(videoName)

while (cap.isOpened()==True):

ret, frame = cap.read()

if ret:

#print(12)

dududu.recognize(frame)

rgbImage = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

convertToQtFormat = QtGui.QImage(rgbImage.data, rgbImage.shape[1], rgbImage.shape[0], QImage.Format_RGB888)#在这里可以对每帧图像进行处理,

p = convertToQtFormat.scaled(770, 580, Qt.KeepAspectRatio)

self.changePixmap.emit(p)

time.sleep(0.03) #控制视频播放的速度

else:

break

if __name__ == '__main__':

app = QtWidgets.QApplication(sys.argv)

window = mywindow()

window.show()

sys.exit(app.exec_())

**代码下载地址:**下载链接:下载地址