Big Data - Working with Data - A Summary of Hive Usage

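Note

This article only demonstrates features. Except for Section XII, the sample data is not consistent from one example to the next.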

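I. What Is Hive

Hive is a data warehouse tool built on Hadoop that translates SQL into MapReduce (MR) programs. It lets users map files on HDFS to table structures and then query and analyze those tables (that is, the HDFS files) with SQL. Hive stores user-defined metadata such as databases and table schemas in its metastore database, which can be a local Derby instance or a remote MySQL server. Its advantages are a low learning curve and the ability to implement simple MapReduce statistics quickly with SQL-like statements, without writing dedicated MapReduce applications, which makes it well suited to statistical analysis in a data warehouse.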

II. Login and Interaction Modes

We assume HDFS and YARN are already running:

[root@hadoop1 ~]# jps

1991 NameNode

2122 DataNode

2556 Jps

2414 NodeManager

1. Local interactive mode

This assumes the Hive environment variables are already configured (see the Hive installation article).

[root@hadoop1 ~]# hive

2. Interacting through the Hive service

Start the Hive service in the background:

[root@hadoop1 hive]# nohup bin/hiveserver2 1>/dev/null 2>&1 &

[1] 3034

Check whether the service has started (this takes a moment):

[root@hadoop1 hive]# netstat -nltp|grep 10000

If a process is listening on port 10000, the service has started.


Connect to the running service from any client machine that has Hive installed:

[root@hadoop1 hive]# bin/beeline -u jdbc:hive2://hadoop1:10000 -n hadoop

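If the connection fails with an error like the following (captured here on host hadoop102 with user kuber):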

21/03/17 18:04:41 [main]: WARN jdbc.HiveConnection: Failed to connect to hadoop102:10000

Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop102:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: kuber is not allowed to impersonate kuber (state=08S01,code=0)

First, add the following to ./etc/hadoop/core-site.xml:

<property>
<name>hadoop.proxyuser.kuber.hosts</name>
<value>*</value>
</property>

<property>
<name>hadoop.proxyuser.kuber.groups</name>
<value>*</value>
</property>

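Then restart HDFS and YARN, and restart hiveserver2. In my case this alone did not help.

I then added the following to hive/conf/hive-site.xml:

<property>
<name>hive.server2.enable.doAs</name>
<value>false</value>
</property>

With hive.server2.enable.doAs set to false, HiveServer2 runs queries as the user that started the service instead of impersonating the connecting user, so the proxy-user check no longer applies.

Restart the service and log in again.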

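3. Running hive as a command-line shell

Pass one or more statements with -e:

bin/hive -e "sql1;sql2;sql3;sql4"

[root@hadoop1 ~]# hive -e "select count(1) from default.t_big24"

Alternatively, write the SQL statements into a file beforehand, for example q.hql in the current directory, and run it with -f:

[root@hadoop1 ~]# hive -f q.hql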

4. Calling hive from a shell script

Write a shell script file etl.sh:

#!/bin/bash

hive -e "create table t_count_text(sex string,number int)"

hive -e "insert into t_count_text select sex,count(1) from default.t_big24 group by sex"

Run the shell script:

[root@hadoop1 ~]# sh etl.sh

III. Hive DDL Syntax

1. Creating a database

Log in to hive:

jdbc:hive2://hadoop1:10000> create database db1;

Hive creates a folder named db1.db under the default warehouse path /user/hive/warehouse/.

2. Creating a managed (internal) table

2.1 Create the table

jdbc:hive2://hadoop1:10000> use db1;

jdbc:hive2://hadoop1:10000>create table t_2(id int,name string,salary bigint,add string)

row format delimited

fields terminated by ',';

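After the table is created, Hive creates a table directory in the warehouse: /user/hive/warehouse/db1.db/t_2. The field delimiter in the data files is ','. If no delimiter is specified, the default is ^A (the \001 control character). To type it, for example in vim, press Ctrl+V followed by Ctrl+A. On Linux, plain cat does not display this default delimiter visibly.

2.2 Add columns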
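The default delimiter can be made visible with standard tools. The following is a minimal sketch, not part of the original setup: the sample row is made up to match the columns of t_2, and make_row is a hypothetical helper name.

```shell
#!/bin/sh
# SEP holds the single byte \001 (Ctrl+A), Hive's default field delimiter.
SEP=$(printf '\001')

# Emit one made-up sample row shaped like t_2 (id, name, salary, add).
make_row() {
  printf '1%szhangsan%s3000%sbeijing\n' "$SEP" "$SEP" "$SEP"
}

# cat -v renders the otherwise invisible control byte as ^A.
make_row | cat -v

# Splitting on the same byte recovers individual fields, here the name.
make_row | awk -F "$SEP" '{ print $2 }'
```

The first command prints 1^Azhangsan^A3000^Abeijing and the second prints zhangsan. The same cat -v trick works on files fetched from a table directory.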

jdbc:hive2://hadoop1:10000> alter table t_seq add columns(address string,age int);

2.3 Replace all columns

jdbc:hive2://hadoop1:10000> alter table t_seq replace columns(id int,name string,address string,age int);

2.4 Change an existing column definition

jdbc:hive2://hadoop1:10000> alter table t_seq change userid uid string;

3. Creating an external table

jdbc:hive2://hadoop1:10000> create external table t_3(id int,name string,salary bigint,add string)

row format delimited

fields terminated by ','

location '/aa/bb';

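4. Differences between managed and external tables

A managed table's directory is created by Hive under the default warehouse directory: /user/hive/warehouse/…

An external table's directory is specified by the user when the table is created: location '/path/'

Dropping a managed table deletes both the table metadata and the table's data directory.

Dropping an external table deletes only the table metadata; the data directory is kept.

Why this matters: a data warehouse usually has upstream data sources, which are typically produced by other application systems whose directory layouts follow no fixed convention. External tables make it easy to map such directories in Hive. And even if the table is dropped in Hive, the source data directory is not deleted, so the other application systems are unaffected.

5. Partitioned tables

Partition keyword: PARTITIONED BY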

jdbc:hive2://hadoop1:10000> create table t_4(ip string,url string,staylong int)

partitioned by (day string)

row format delimited

fields terminated by ',';

The partition column must not also appear among the table's regular columns.

6. Modifying table partitions

6.1 Adding partitions

jdbc:hive2://hadoop1:10000> alter table t_4 add partition(day='2017-04-10') partition(day='2017-04-11');

After adding them, check the partitions of t_4:

jdbc:hive2://hadoop1:10000> show partitions t_4;

Then load data into a newly added partition:

jdbc:hive2://hadoop1:10000> load data local inpath '/root/weblog.3' into table t_4 partition(day='2017-04-10');

jdbc:hive2://hadoop1:10000> select * from t_4 where day='2017-04-10';

-- insert can also be used

insert into table t_4 partition(day='2017-04-11')

select ip,url,staylong from t_4 where day='2017-04-08' and staylong>30;

6.2 Dropping a partition

jdbc:hive2://hadoop1:10000> alter table t_4 drop partition(day='2017-04-11');

IV. Importing Data

First, put the data files into the target directories on HDFS:

hdfs dfs -mkdir /hive_operate

hdfs dfs -mkdir /hive_operate/movie_table

hdfs dfs -mkdir /hive_operate/rating_table

hdfs dfs -put movies.csv /hive_operate/movie_table

hdfs dfs -put ratings.csv /hive_operate/rating_table

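1. Loading local data into a Hive table

Load a file from the local disk of the machine where hive runs into a table. Add the optional overwrite keyword before into to replace the table's existing data:

hive> load data local inpath '/root/weblog.1' [overwrite] into table t_1;

2. Loading a file from HDFS into a Hive table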

jdbc:hive2://hadoop1:10000> load data inpath '/user.data.2' into table t_1;

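Without the local keyword, the file is moved from its HDFS path into the table directory.

3. Inserting query results from another table into a newly created table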

jdbc:hive2://hadoop1:10000> create table t_1_jz

as

select id,name from t_1;

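4. Inserting query results from another table into an existing table

Suppose the target table already exists; it can be created in advance: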

jdbc:hive2://hadoop1:10000> create table t_1_hd like t_1;

Then query some rows from t_1 and insert them into t_1_hd:

jdbc:hive2://hadoop1:10000> insert into table t_1_hd

select

id,name,add

from t_1

where add='handong';

5. Loading data into different partition directories

jdbc:hive2://hadoop1:10000> load data local inpath '/root/weblog.1' into table t_4 partition(day='2017-04-08');

jdbc:hive2://hadoop1:10000> load data local inpath '/root/weblog.2' into table t_4 partition(day='2017-04-09');

V. Exporting Data

1. Exporting data from a Hive table to an HDFS directory

jdbc:hive2://hadoop1:10000> insert overwrite directory '/aa/bb'

select * from t_1 where add='jingzhou';

2. Exporting data from a Hive table to a local disk directory

jdbc:hive2://hadoop1:10000> insert overwrite local directory '/aa/bb'

select * from t_1 where add='jingzhou';

VI. Show Commands

show tables

show databases

show partitions

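Example: show partitions t_4;

show functions -- list all of Hive's built-in functions

desc t_name; -- show the table definition

desc extended t_name; -- show the detailed table definition

desc formatted table_name; -- show the detailed table definition in a more readable format

show create table table_name -- show the statement that creates the table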

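VII. DML Statements in Hive

The same as in standard SQL.

VIII. Hive Built-in Functions

1. Date and time functions

The examples below select from dual, which is not built into Hive; it is assumed to be a user-created one-row table.

from_unixtime(21938792183,'yyyy-MM-dd HH:mm:ss') --> a string in the form '2017-06-03 17:50:30' (the sample value only illustrates the output format and is not the exact result for this input)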

select current_date from dual;

select current_timestamp from dual;

select unix_timestamp() from dual;

--1491615665

select unix_timestamp('2011-12-07 13:01:03') from dual;

--1323234063

select unix_timestamp('20111207 13:01:03','yyyyMMdd HH:mm:ss') from dual;

--1323234063
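The value 1323234063 is what you get when '2011-12-07 13:01:03' is read as a time in UTC+8, which suggests the session above ran in that time zone. unix_timestamp interprets a string in the default time zone, so the same call can return a different number elsewhere. The arithmetic can be checked with a small sketch; to_epoch is a hypothetical helper written for this illustration and does not call Hive.

```shell
#!/bin/sh
# to_epoch "YYYY-MM-DD HH:MM:SS" UTC_OFFSET_HOURS
# Converts a wall-clock time at the given UTC offset to Unix epoch seconds.
to_epoch() {
  echo "$1" | awk -v off="$2" '{
    split($1, d, "-"); split($2, t, ":")
    y = d[1] + 0; m = d[2] + 0; day = d[3] + 0
    # Treat January and February as months 13 and 14 of the previous year.
    if (m <= 2) { y -= 1; m += 12 }
    # Days since 1970-01-01 in the Gregorian calendar.
    leap = int(y / 4) - int(y / 100) + int(y / 400)
    days = 365 * y + leap + int((153 * (m - 3) + 2) / 5) + day - 1 - 719468
    printf "%d\n", days * 86400 + t[1] * 3600 + t[2] * 60 + t[3] - off * 3600
  }'
}

to_epoch '2011-12-07 13:01:03' 8   # prints 1323234063
to_epoch '2011-12-07 13:01:03' 0   # prints 1323262863
```

The two results differ by 28800 seconds, that is, the eight-hour offset.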

select from_unixtime(1323234063,'yyyy-MM-dd HH:mm:ss') from dual;

-- Extracting date and time parts

select year('2011-12-08 10:03:01') from dual;

--2011

select year('2012-12-08') from dual;

--2012

select month('2011-12-08 10:03:01') from dual;

--12

select month('2011-08-08') from dual;

--8

select day('2011-12-08 10:03:01') from dual;

--8

select day('2011-12-24') from dual;

--24

select hour('2011-12-08 10:03:01') from dual;

--10

select minute('2011-12-08 10:03:01') from dual;

--3

select second('2011-12-08 10:03:01') from dual;

--1

-- Adding and subtracting days

select date_add('2012-12-08',10) from dual;

--2012-12-18

date_sub (string startdate, int days) : string

-- Example:

select date_sub('2012-12-08',10) from dual;

--2012-11-28

2. Type conversion functions

from_unixtime(cast('21938792183' as bigint),'yyyy-MM-dd HH:mm:ss')

3. String slicing and concatenation

substr("abcd",1,3) --> 'abc'

concat('abc','def') --> 'abcdef'

4. JSON parsing functions

get_json_object('{\"key1\":3333,\"key2\":4444}' , '$.key1') --> 3333

json_tuple('{\"key1\":3333,\"key2\":4444}','key1','key2') as(key1,key2) --> 3333, 4444

5. URL parsing functions

parse_url_tuple('http://www.edu360.cn/bigdata/baoming?userid=8888','HOST','PATH','QUERY','QUERY:userid')

---> www.edu360.cn /bigdata/baoming userid=8888 8888
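To see what each requested part corresponds to, the same URL can be taken apart by hand. This is only a sketch using shell parameter expansion, assuming a URL of this simple shape (scheme, host, path and query all present); it is not how Hive implements parse_url_tuple.

```shell
#!/bin/sh
url='http://www.edu360.cn/bigdata/baoming?userid=8888'

rest=${url#*://}          # drop the scheme: www.edu360.cn/bigdata/baoming?userid=8888
host=${rest%%/*}          # HOST:  everything before the first slash
path_query=/${rest#*/}    # /bigdata/baoming?userid=8888
path=${path_query%%\?*}   # PATH:  everything before the question mark
query=${path_query#*\?}   # QUERY: everything after the question mark

# QUERY:userid picks the value of one key out of the query string.
userid=$(printf '%s\n' "$query" | tr '&' '\n' | awk -F= '$1 == "userid" { print $2 }')

printf '%s %s %s %s\n' "$host" "$path" "$query" "$userid"
```

This prints www.edu360.cn /bigdata/baoming userid=8888 8888, the same four values as the Hive call.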

6. explode and lateral view

explode turns the elements of an array into rows of a single column.

Suppose a table has a field of type array.

Table data:

1,zhangsan,数学:语文:英语:生物

2,lisi,数学:语文

3,wangwu,化学:计算机:java编程

Create the table:

create table t_xuanxiu(uid string,name string,kc array<string>)

row format delimited

fields terminated by ','

collection items terminated by ':';

** explode example:

select explode(kc) from t_xuanxiu where uid=1;

数学

语文

英语

生物

** lateral view with a table-generating function

hive> select uid,name,tmp.* from t_xuanxiu

> lateral view explode(kc) tmp as course;

1 zhangsan 数学

1 zhangsan 语文

1 zhangsan 英语

1 zhangsan 生物

2 lisi 数学

2 lisi 语文

3 wangwu 化学

3 wangwu 计算机

3 wangwu java编程
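The effect of lateral view explode, one output row per array element with the other columns repeated, can be imitated outside Hive on the raw text rows. A minimal sketch with awk follows; lateral_explode is a hypothetical helper name and the input is the sample data from above.

```shell
#!/bin/sh
# Reads uid,name,kc rows and emits one "uid name course" row
# for every ':'-separated item in the third field.
lateral_explode() {
  awk -F, '{
    n = split($3, courses, ":")
    for (i = 1; i <= n; i++) print $1, $2, courses[i]
  }'
}

printf '%s\n' \
  '1,zhangsan,数学:语文:英语:生物' \
  '2,lisi,数学:语文' \
  '3,wangwu,化学:计算机:java编程' | lateral_explode
```

The output has nine rows, matching the Hive result shown above.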

explode and lateral view can be used to implement a Hive version of word count. Given the following data:

a b c d e f g

a b c

e f g a

b c d b

Create a table for the data:

create table t_juzi(line string) row format delimited;

Load the data:

load data local inpath '/root/words.txt' into table t_juzi;

select a.word,count(1) cnt

from

(select tmp.* from t_juzi lateral view explode(split(line,' ')) tmp as word) a

group by a.word

order by cnt desc;
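For a quick cross-check of the counts, the same word count can be computed on the raw file with standard Unix tools. This is a sketch only; word_count is a hypothetical helper, and ties are broken alphabetically here, whereas the Hive query leaves the order of equal counts unspecified.

```shell
#!/bin/sh
# Split on spaces (like split(line,' ') plus explode), count each word
# (like group by), then sort by count descending (like order by cnt desc).
word_count() {
  tr -s ' ' '\n' | LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }' \
    | LC_ALL=C sort -k2,2nr -k1,1
}

printf '%s\n' 'a b c d e f g' 'a b c' 'e f g a' 'b c d b' | word_count
```

For the four sample lines this lists b with 4, a and c with 3, and d, e, f, g with 2 each.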

7. The row_number() over() function

Commonly used for per-group top-N queries.

Given the following data:

zhangsan,kc1,90

zhangsan,kc2,95

zhangsan,kc3,68

lisi,kc1,88

lisi,kc2,95

lisi,kc3,98

Create the table:

create table t_rowtest(name string,kcId string,score int)

row format delimited

fields terminated by ',';

Load the data, then see what row_number() over() produces:

select *,row_number() over(partition by name order by score desc) as rank from t_rowtest;

With that, per-group top-N becomes straightforward:

select name,kcid,score

from

(select *,row_number() over(partition by name order by score desc) as rank from t_rowtest) tmp

where rank<3;
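What the query does can be traced by hand: sort the rows by name and by score descending, number the rows within each name, and keep the rows whose number is below 3. The following sketch reproduces those steps with sort and awk on the sample data; top_n_per_group is a hypothetical helper, not a Hive feature.

```shell
#!/bin/sh
# top_n_per_group N: reads name,kcId,score rows and keeps the N highest
# scores per name, appending the row number within the group.
top_n_per_group() {
  LC_ALL=C sort -t, -k1,1 -k3,3nr | awk -F, -v n="$1" '
    $1 != prev { rn = 0; prev = $1 }          # a new name starts a new partition
    { rn++; if (rn <= n) print $0 "," rn }    # same filter as rank < n + 1
  '
}

printf '%s\n' \
  zhangsan,kc1,90 zhangsan,kc2,95 zhangsan,kc3,68 \
  lisi,kc1,88 lisi,kc2,95 lisi,kc3,98 | top_n_per_group 2
```

With N set to 2 this keeps kc3 (98) and kc2 (95) for lisi, and kc2 (95) and kc1 (90) for zhangsan.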

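The same pattern can save each user's ten highest-rated rows into a new table (t_rate is assumed to have the columns uid, movie, rate and ts):

create table t_rate_topn_uid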

as

select uid,movie,rate,ts

from

(select *,row_number() over(partition by uid order by rate desc) as rank from t_rate) tmp

where rank<11;

IX. User-Defined Functions

Omitted.

X. Complex Data Types in Hive

1. array

Given the following data:

战狼2,吴京:吴刚:龙母,2017-08-16

三生三世十里桃花,刘亦菲:痒痒,2017-08-20

普罗米修斯,苍老师:小泽老师:波多老师,2017-09-17

美女与野兽,吴刚:加藤鹰,2017-09-17

-- Create a table that maps this data:

create table t_movie(movie_name string,actors array<string>,first_show date)

row format delimited fields terminated by ','

collection items terminated by ':';

-- Load the data

load data local inpath '/root/hivetest/actor.dat' into table t_movie;

load data local inpath '/root/hivetest/actor.dat.2' into table t_movie;

-- Queries

select movie_name,actors[0],first_show from t_movie;

select movie_name,actors,first_show

from t_movie where array_contains(actors,'吴刚');

select movie_name

,size(actors) as actor_number

,first_show

from t_movie;

2. map

Given the following data:

1,zhangsan,father:xiaoming#mother:xiaohuang#brother:xiaoxu,28

2,lisi,father:mayun#mother:huangyi#brother:guanyu,22

3,wangwu,father:wangjianlin#mother:ruhua#sister:jingtian,29

4,mayun,father:mayongzhen#mother:angelababy,26

-- Create a table that maps the data above

create table t_family(id int,name string,family_members map<string,string>,age int)

row format delimited fields terminated by ','

collection items terminated by '#'

map keys terminated by ':';

-- Load the data

load data local inpath '/root/hivetest/fm.dat' into table t_family;

-- Find each person's father and sister

select id,name,family_members["father"] as father,family_members["sister"] as sister,age

from t_family;

-- Find which family relations each person has

select id,name,map_keys(family_members) as relations,age

from t_family;

-- Find the names of each person's relatives

select id,name,map_values(family_members) as relations,age

from t_family;

-- Count each person's relatives

select id,name,size(family_members) as relations,age

from t_family;

-- Find everyone who has a brother, and who that brother is

-- Option 1: a single statement

select id,name,age,family_members['brother']

from t_family where array_contains(map_keys(family_members),'brother');

-- Option 2: a subquery

select id,name,age,family_members['brother']

from

(select id,name,age,map_keys(family_members) as relations,family_members

from t_family) tmp

where array_contains(relations,'brother');

3. struct

Suppose we have the following data:

1,zhangsan,18:male:深圳

2,lisi,28:female:北京

3,wangwu,38:male:广州

4,赵六,26:female:上海

5,钱琪,35:male:杭州

6,王八,48:female:南京

-- Create a table that maps the data above

drop table if exists t_user;

create table t_user(id int,name string,info struct<age:int,sex:string,addr:string>)

row format delimited fields terminated by ','

collection items terminated by ':';

-- Load the data

load data local inpath '/root/hivetest/user.dat' into table t_user;

-- Query each person's id, name and address

select id,name,info.addr

from t_user;

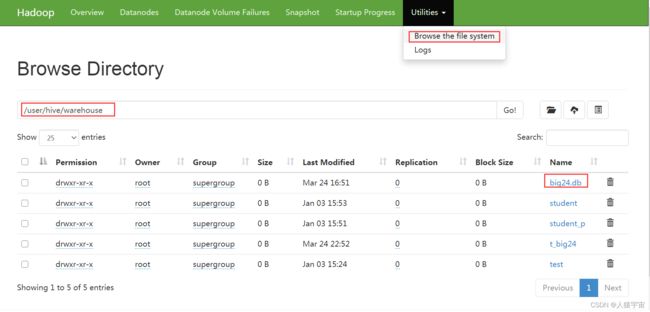

X. Web Management

http://192.168.80.2:50070/explorer.html#/user/hive/warehouse

XI. Hive Storage File Formats

Hive supports many file formats: SEQUENCE FILE | TEXT FILE | PARQUET FILE | RC FILE

Experiment: first create a table t_seq with sequencefile as its file format:

create table t_seq(id int,name string,add string)

stored as sequencefile;

Then insert data into t_seq; Hive generates sequence files and places them in the table directory:

insert into table t_seq

select * from t_1 where add='handong';

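XII. A Case Study: Daily Active and Daily New Users

1. Requirements

We have a web system.

It produces data every day.

Daily new users: users who appear for the first time on a given day.

Daily active users: users who are active on a given day.

2. Data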

log2017-09-15

192.168.33.6,hunter,2017-09-15 10:30:20,/a

192.168.33.7,hunter,2017-09-15 10:30:26,/b

192.168.33.6,jack,2017-09-15 10:30:27,/a

192.168.33.8,tom,2017-09-15 10:30:28,/b

192.168.33.9,rose,2017-09-15 10:30:30,/b

192.168.33.10,julia,2017-09-15 10:30:40,/c

log2017-09-16

192.168.33.16,hunter,2017-09-16 10:30:20,/a

192.168.33.18,jerry,2017-09-16 10:30:30,/b

192.168.33.26,jack,2017-09-16 10:30:40,/a

192.168.33.18,polo,2017-09-16 10:30:50,/b

192.168.33.39,nissan,2017-09-16 10:30:53,/b

192.168.33.39,nissan,2017-09-16 10:30:55,/a

192.168.33.39,nissan,2017-09-16 10:30:58,/c

192.168.33.20,ford,2017-09-16 10:30:54,/c

log2017-09-17

192.168.33.46,hunter,2017-09-17 10:30:21,/a

192.168.43.18,jerry,2017-09-17 10:30:22,/b

192.168.43.26,tom,2017-09-17 10:30:23,/a

192.168.53.18,bmw,2017-09-17 10:30:24,/b

192.168.63.39,benz,2017-09-17 10:30:25,/b

192.168.33.25,baval,2017-09-17 10:30:30,/c

192.168.33.10,julia,2017-09-17 10:30:40,/c

3. Create a partitioned table

create table web_log(ip string,uid string,access_time string,url string)

partitioned by (dt string)

row format delimited fields terminated by ',';

4. Load the data

load data local inpath '/root/hivetest/log2017-09-15' into table web_log partition(dt='2017-09-15');

load data local inpath '/root/hivetest/log2017-09-16' into table web_log partition(dt='2017-09-16');

load data local inpath '/root/hivetest/log2017-09-17' into table web_log partition(dt='2017-09-17');

5. View the data and the partitions

select * from web_log;

show partitions web_log;

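6. Daily active users: creating the table

ip: the user's IP address; if the user visited from several IPs, keep the one from the earliest visit

uid: the user ID

first_access: if the user visited several times, record the first visit

url: the page the user visited

SQL: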

create table t_user_access_day(ip string,uid string,first_access string,url string) partitioned by(dt string);

7. Daily active users: building up the query for each user's earliest visit, step by step

select ip,uid,access_time,url from web_log;

select ip,uid,access_time,url from web_log where dt='2017-09-15';

select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-15';

select ip,uid,access_time,url

from

(select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-15') tmp

where rn=1;

Result:

+----------------+---------+----------------------+------+--+

| ip | uid | access_time | url |

+----------------+---------+----------------------+------+--+

| 192.168.33.6 | hunter | 2017-09-15 10:30:20 | /a |

| 192.168.33.6 | jack | 2017-09-15 10:30:27 | /a |

| 192.168.33.10 | julia | 2017-09-15 10:30:40 | /c |

| 192.168.33.9 | rose | 2017-09-15 10:30:30 | /b |

| 192.168.33.8 | tom | 2017-09-15 10:30:28 | /b |

+----------------+---------+----------------------+------+--+
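The result above can be checked against the raw 2017-09-15 log without Hive. The sketch below sorts by uid and access time and keeps the first row seen for each uid, which is what filtering on rn=1 does; first_access_per_user is a hypothetical helper name.

```shell
#!/bin/sh
# Reads ip,uid,access_time,url rows and keeps the earliest row per uid.
first_access_per_user() {
  LC_ALL=C sort -t, -k2,2 -k3,3 | awk -F, '!seen[$2]++'
}

printf '%s\n' \
  '192.168.33.6,hunter,2017-09-15 10:30:20,/a' \
  '192.168.33.7,hunter,2017-09-15 10:30:26,/b' \
  '192.168.33.6,jack,2017-09-15 10:30:27,/a' \
  '192.168.33.8,tom,2017-09-15 10:30:28,/b' \
  '192.168.33.9,rose,2017-09-15 10:30:30,/b' \
  '192.168.33.10,julia,2017-09-15 10:30:40,/c' | first_access_per_user
```

It yields the same five rows as the table: hunter, jack, julia, rose and tom, each with the earliest access.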

8. Store the query result in the daily active table

insert into table t_user_access_day partition(dt='2017-09-15')

select ip,uid,access_time,url

from

(select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-15') tmp

where rn=1;

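9. Summary of active users

Active users on the 15th (the same statement as in step 8; since insert into appends, run it only once):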

insert into table t_user_access_day partition(dt='2017-09-15')

select ip,uid,access_time,url

from

(select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-15') tmp

where rn=1;

Active users on the 16th:

insert into table t_user_access_day partition(dt='2017-09-16')

select ip,uid,access_time,url

from

(select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-16') tmp

where rn=1;

Active users on the 17th:

insert into table t_user_access_day partition(dt='2017-09-17')

select ip,uid,access_time,url

from

(select ip,uid,access_time,url,

row_number() over(partition by uid order by access_time) as rn

from web_log

where dt='2017-09-17') tmp

where rn=1;

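10. Daily new users: the approach

Create a history table.

Join today's daily active users against the history table.

A user present in the daily active data but absent from the history table is a new user for that day; insert these rows into that day's new-user table.

After the query, add the day's new users to the history table.

11. Daily new users: finding users who are in the daily active table but not in the history table

Create the history user table:

create table t_user_history(uid string);

Create the new user table:

create table t_user_new_day like t_user_access_day;

The three tables at a glance:

t_user_access_day: daily active users

t_user_history: historical users

t_user_new_day: daily new users

Find the new users, that is, rows present in the daily active table but absent from the history table: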

select a.*

from t_user_access_day a left join t_user_history b on a.uid=b.uid

where a.dt='2017-09-15' and b.uid is null;

Store these rows in the daily new table:

insert into table t_user_new_day partition(dt='2017-09-15')

select a.ip,a.uid,a.first_access,a.url

from t_user_access_day a left join t_user_history b on a.uid=b.uid

where a.dt='2017-09-15' and b.uid is null;

Insert these new users into the history table:

insert into t_user_history

select uid from t_user_new_day where dt='2017-09-15';
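The left join with b.uid is null is an anti-join: it keeps the active users that have no match in the history table. The logic can be sketched on plain uid lists as follows. The helper name new_users and the temporary files are illustrative assumptions; the uids come from the sample logs for the 15th and 16th.

```shell
#!/bin/sh
# new_users HISTORY_FILE ACTIVE_FILE
# Prints the uids listed in ACTIVE_FILE that do not occur in HISTORY_FILE.
# Comparing FILENAME (rather than NR == FNR) keeps this correct
# even when the history file is still empty on the first day.
new_users() {
  awk 'FILENAME == ARGV[1] { seen[$0] = 1; next } !($0 in seen)' "$1" "$2"
}

hist_file=$(mktemp)
active_file=$(mktemp)
printf '%s\n' hunter jack julia rose tom > "$hist_file"            # users seen on the 15th
printf '%s\n' hunter jerry jack polo nissan ford > "$active_file"  # active users on the 16th
new_users "$hist_file" "$active_file"
rm -f "$hist_file" "$active_file"
```

For the 16th this prints jerry, polo, nissan and ford. On the first day, with an empty history, every active user counts as new.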

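12. Writing the script

vim rixin.sh

#!/bin/bash
# Process yesterday's data (GNU date syntax).
day_str=$(date -d '-1 day' +'%Y-%m-%d')
echo "Processing data for $day_str ..."
# Keep each user's earliest access of the day as that day's active-user row.
# The sfl database prefix comes from the original script; adjust it to
# wherever web_log and t_user_access_day actually live.
HQL_user_active_day="
insert into table sfl.t_user_access_day partition(dt=\"$day_str\")
select ip,uid,access_time,url
from
(select ip,uid,access_time,url,
row_number() over(partition by uid order by access_time) as rn
from sfl.web_log
where dt=\"$day_str\") tmp
where rn=1
"
echo "executing sql :"
echo "$HQL_user_active_day"
hive -e "$HQL_user_active_day"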
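If a past day has to be reprocessed, it helps to build the statement for an explicit date instead of always using yesterday. The following is a sketch under that assumption: build_active_hql is a hypothetical helper that uses the table and partition names from steps 3 and 6 (web_log, t_user_access_day, dt) and only prints the HQL, leaving the hive -e call to the caller.

```shell
#!/bin/sh
# build_active_hql YYYY-MM-DD
# Prints the daily-active insert statement for the given day,
# or fails if the argument does not look like a date.
build_active_hql() {
  day=$1
  case "$day" in
    [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
    *) echo "invalid date: $day" >&2; return 1 ;;
  esac
  cat <<EOF
insert into table t_user_access_day partition(dt='$day')
select ip,uid,access_time,url
from
(select ip,uid,access_time,url,
row_number() over(partition by uid order by access_time) as rn
from web_log
where dt='$day') tmp
where rn=1
EOF
}

build_active_hql '2017-09-16'
```

A caller could then run hive -e "$(build_active_hql 2017-09-16)". The date check guards against an empty or malformed argument ending up in the partition clause.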

XIII. Hive System Parameters

Local mode:

set hive.exec.mode.local.auto=true;

Dynamic partitioning:

set hive.exec.dynamic.partition.mode=nonstrict;

-- Enforce bucketing

set hive.enforce.bucketing = true;

set mapreduce.job.reduces=4;