隐马尔可夫模型例子

前言

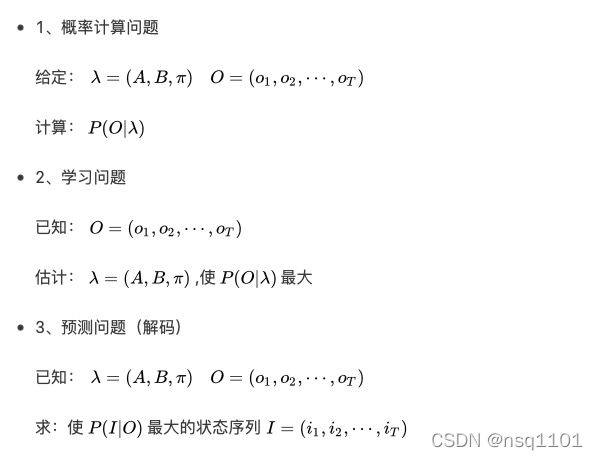

隐马尔可夫模型(Hidden Markov Model,HMM),是统计模型,它用来描述一个含有隐含未知参数的马尔可夫过程。其难点是从可观察的参数中确定该过程的隐含参数。然后利用这些参数来作进一步的分析,例如模式识别。(百度百科)

马尔科夫假设:t 时刻的状态只与 t-1 时刻的状态有关

马尔可夫链:是随机变量 X1, … , Xn 的一个数列

马尔可夫过程:每个状态的转移只依赖于之前的 n 个状态,这个过程被称为1个 n 阶的模型,其中 n 是影响转移状态的数目。

两个假设:

- 马尔可夫假设: t 时刻的状态只与 t-1 时刻的状态有关

- 观测独立性假设: t 时刻的观测变量只与 t 时刻的状态有关

隐马尔可夫模型主要包含下列5个要素:

- 观测状态V:可通过直接观测而得到的状态,即隐含状态的外在表现。

- 观测概率B:某时刻隐含状态处于某个观测状态的概率。

- 初始概率PI:隐含状态在初始时刻处于某个状态的概率

- 隐含状态Q:实际所隐藏的状态,通常无法通过直接观测得到

- 转移概率A:隐含状态在下一时刻是另一隐含状态的概率。

程序

例子

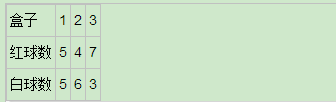

假设我们有3个盒子,每个盒子里都有红色和白色两种球,这三个盒子里球的数量分别是:

按照下面的方法从盒子里抽球,开始的时候,从第一个盒子抽球的概率是0.2,从第二个盒子抽球的概率是0.4,从第三个盒子抽球的概率是0.4。以这个概率抽一次球后,将球放回。然后从当前盒子转移到下一个盒子进行抽球。

规则是:如果当前抽球的盒子是第一个盒子,则以0.5的概率仍然留在第一个盒子继续抽球,以0.2的概率去第二个盒子抽球,以0.3的概率去第三个盒子抽球。如果当前抽球的盒子是第二个盒子,则以0.5的概率仍然留在第二个盒子继续抽球,以0.3的概率去第一个盒子抽球,以0.2的概率去第三个盒子抽球。如果当前抽球的盒子是第三个盒子,则以0.5的概率仍然留在第三个盒子继续抽球,以0.2的概率去第一个盒子抽球,以0.3的概率去第二个盒子抽球。

手撕代码

import numpy as np

class HiddenMarkov:

def forward(self, Q, V, A, B, O, PI): # 使用前向算法

N = len(Q) # 状态序列的大小

M = len(O) # 观测序列的大小

alphas = np.zeros((N, M)) # alpha值

T = M # 有几个时刻,有几个观测序列,就有几个时刻

for t in range(T): # 遍历每一时刻,算出alpha值

indexOfO = V.index(O[t]) # 找出序列对应的索引

for i in range(N):

if t == 0: # 计算初值

alphas[i][t] = PI[t][i] * B[i][indexOfO] # P176(10.15)

print('alpha1(%d)=p%db%db(o1)=%f' % (i, i, i, alphas[i][t]))

else:

alphas[i][t] = np.dot([alpha[t - 1] for alpha in alphas], [a[i] for a in A]) * B[i][

indexOfO] # 对应P176(10.16)

print('alpha%d(%d)=[sigma alpha%d(i)ai%d]b%d(o%d)=%f' % (t, i, t - 1, i, i, t, alphas[i][t]))

# print(alphas)

P = np.sum([alpha[M - 1] for alpha in alphas]) # P176(10.17)

print(P)

# alpha11 = pi[0][0] * B[0][0] #代表a1(1)

# alpha12 = pi[0][1] * B[1][0] #代表a1(2)

# alpha13 = pi[0][2] * B[2][0] #代表a1(3)

def backward(self, Q, V, A, B, O, PI): # 后向算法

N = len(Q) # 状态序列的大小

M = len(O) # 观测序列的大小

betas = np.ones((N, M)) # beta

for i in range(N):

print('beta%d(%d)=1' % (M, i))

for t in range(M - 2, -1, -1):

indexOfO = V.index(O[t + 1]) # 找出序列对应的索引

for i in range(N):

betas[i][t] = np.dot(np.multiply(A[i], [b[indexOfO] for b in B]), [beta[t + 1] for beta in betas])

realT = t + 1

realI = i + 1

print('beta%d(%d)=[sigma a%djbj(o%d)]beta%d(j)=(' % (realT, realI, realI, realT + 1, realT + 1),

end='')

for j in range(N):

print("%.2f*%.2f*%.2f+" % (A[i][j], B[j][indexOfO], betas[j][t + 1]), end='')

print("0)=%.3f" % betas[i][t])

# print(betas)

indexOfO = V.index(O[0])

P = np.dot(np.multiply(PI, [b[indexOfO] for b in B]), [beta[0] for beta in betas])

print("P(O|lambda)=", end="")

for i in range(N):

print("%.1f*%.1f*%.5f+" % (PI[0][i], B[i][indexOfO], betas[i][0]), end="")

print("0=%f" % P)

def viterbi(self, Q, V, A, B, O, PI):

N = len(Q) # 状态序列的大小

M = len(O) # 观测序列的大小

deltas = np.zeros((N, M))

psis = np.zeros((N, M))

I = np.zeros((1, M))

for t in range(M):

realT = t+1

indexOfO = V.index(O[t]) # 找出序列对应的索引

for i in range(N):

realI = i+1

if t == 0:

deltas[i][t] = PI[0][i] * B[i][indexOfO]

psis[i][t] = 0

print('delta1(%d)=pi%d * b%d(o1)=%.2f * %.2f=%.2f'%(realI, realI, realI, PI[0][i], B[i][indexOfO], deltas[i][t]))

print('psis1(%d)=0' % (realI))

else:

deltas[i][t] = np.max(np.multiply([delta[t-1] for delta in deltas], [a[i] for a in A])) * B[i][indexOfO]

print('delta%d(%d)=max[delta%d(j)aj%d]b%d(o%d)=%.2f*%.2f=%.5f'%(realT, realI, realT-1, realI, realI, realT, np.max(np.multiply([delta[t-1] for delta in deltas], [a[i] for a in A])), B[i][indexOfO], deltas[i][t]))

psis[i][t] = np.argmax(np.multiply([delta[t-1] for delta in deltas], [a[i] for a in A]))

print('psis%d(%d)=argmax[delta%d(j)aj%d]=%d' % (realT, realI, realT-1, realI, psis[i][t]))

print(deltas)

print(psis)

I[0][M-1] = np.argmax([delta[M-1] for delta in deltas])

print('i%d=argmax[deltaT(i)]=%d' % (M, I[0][M-1]+1))

for t in range(M-2, -1, -1):

I[0][t] = psis[int(I[0][t+1])][t+1]

print('i%d=psis%d(i%d)=%d' % (t+1, t+2, t+2, I[0][t]+1))

print(I)

if __name__ == '__main__':

Q = [1, 2, 3] # 状态个数

V = ['红', '白'] # 观测状态个数

A = [[0.5, 0.2, 0.3], [0.3, 0.5, 0.2], [0.2, 0.3, 0.5]] # 状态转移概率

B = [[0.5, 0.5], [0.4, 0.6], [0.7, 0.3]] # 观测状态

# O = ['红', '白', '红', '红', '白', '红', '白', '白']

O = ['红', '白', '红'] #习题10.1的例子

PI = [[0.2, 0.4, 0.4]] # 初始状态概率

HMM = HiddenMarkov()

# HMM.forward(Q, V, A, B, O, PI)

# HMM.backward(Q, V, A, B, O, PI)

HMM.viterbi(Q, V, A, B, O, PI)

结果展示

delta1(1)=pi1 * b1(o1)=0.20 * 0.50=0.10

psis1(1)=0

delta1(2)=pi2 * b2(o1)=0.40 * 0.40=0.16

psis1(2)=0

delta1(3)=pi3 * b3(o1)=0.40 * 0.70=0.28

psis1(3)=0

delta2(1)=max[delta1(j)aj1]b1(o2)=0.06*0.50=0.02800

psis2(1)=argmax[delta1(j)aj1]=2

delta2(2)=max[delta1(j)aj2]b2(o2)=0.08*0.60=0.05040

psis2(2)=argmax[delta1(j)aj2]=2

delta2(3)=max[delta1(j)aj3]b3(o2)=0.14*0.30=0.04200

psis2(3)=argmax[delta1(j)aj3]=2

delta3(1)=max[delta2(j)aj1]b1(o3)=0.02*0.50=0.00756

psis3(1)=argmax[delta2(j)aj1]=1

delta3(2)=max[delta2(j)aj2]b2(o3)=0.03*0.40=0.01008

psis3(2)=argmax[delta2(j)aj2]=1

delta3(3)=max[delta2(j)aj3]b3(o3)=0.02*0.70=0.01470

psis3(3)=argmax[delta2(j)aj3]=2

[[0.1 0.028 0.00756]

[0.16 0.0504 0.01008]

[0.28 0.042 0.0147 ]]

[[0. 2. 1.]

[0. 2. 1.]

[0. 2. 2.]]

i3=argmax[deltaT(i)]=3

i2=psis3(i3)=3

i1=psis2(i2)=3

[[2. 2. 2.]]

调包代码

import numpy as np

from hmmlearn import hmm

states = ["box 1", "box 2", "box3"]

n_states = len(states)

observations = ["red", "white"]

n_observations = len(observations)

start_probability = np.array([0.2, 0.4, 0.4])

transition_probability = np.array([

[0.5, 0.2, 0.3],

[0.3, 0.5, 0.2],

[0.2, 0.3, 0.5]

])

emission_probability = np.array([

[0.5, 0.5],

[0.4, 0.6],

[0.7, 0.3]

])

model = hmm.MultinomialHMM(n_components=n_states)

model.startprob_=start_probability

model.transmat_=transition_probability

model.emissionprob_=emission_probability

# 跑一跑HMM问题三维特比算法的解码过程,使用和原理篇一样的观测序列来解码,代码如下:

seen = np.array([[0,1,0]]).T

logprob, box = model.decode(seen, algorithm="viterbi")

# print("The ball picked: ", " ".join(map(lambda x: observations[x], [0,1,0])))

print("The ball picked: ", " ".join(map(lambda x: observations[x], seen.T.flatten().tolist())))

print("The hidden box: ", ", ".join(map(lambda x: states[x], box)))

print("Viterbi概率值:", end="")

print(np.exp(logprob)) # 这个是因为在hmmlearn底层将概率进行了对数化,防止出现乘积为0的情况

print(logprob)

# score函数返回的是以自然对数为底的对数概率值

# 概率问题

score = model.score(seen)

print("出现的概率值:", end="")

np.exp(score)

结果展示

The ball picked: red white red

The hidden box: box3, box3, box3

Viterbi概率值:0.014699999999999996

-4.219907785197447

出现的概率值:

0.13021800000000003

求解模型参数的问题。由于鲍姆-韦尔奇算法是基于EM算法的近似算法,

所以我们需要多跑几次,比如下面我们跑三次,选择一个比较优的模型参数,代码如下:

import numpy as np

from hmmlearn import hmm

states = ["box 1", "box 2", "box3"]

n_states = len(states)

observations = ["red", "white"]

n_observations = len(observations)

model2 = hmm.MultinomialHMM(n_components=n_states, n_iter=20, tol=0.01)

X2 = np.array([[0],[1],[0],[1],[0],[0],[0],[1],[1],[0],[1],[1]])

model2.fit(X2)

print (model2.startprob_)

print(model2.transmat_)

print(model2.emissionprob_)

print (model2.score(X2))

model2.fit(X2)

print (model2.startprob_)

print(model2.transmat_)

print(model2.emissionprob_)

print (model2.score(X2))

model2.fit(X2)

print (model2.startprob_)

print(model2.transmat_)

print(model2.emissionprob_)

print (model2.score(X2))

结果展示

[1.20727212e-69 6.97452409e-17 1.00000000e+00]

[[4.32990773e-01 8.34477205e-03 5.58664455e-01]

[6.94714883e-03 1.30971141e-03 9.91743140e-01]

[7.06610684e-01 2.93389316e-01 4.63122930e-13]]

[[2.54407588e-05 9.99974559e-01]

[6.64561832e-01 3.35438168e-01]

[9.99998763e-01 1.23675854e-06]]

-5.98821368556404

[1.00000000e+00 4.14565215e-19 4.81370708e-24]

[[1.45341413e-10 4.16742704e-01 5.83257296e-01]

[8.77754320e-01 5.65441368e-02 6.57015434e-02]

[4.58294151e-01 2.39193407e-01 3.02512442e-01]]

[[9.99995511e-01 4.48883036e-06]

[3.12095027e-01 6.87904973e-01]

[3.75480559e-02 9.62451944e-01]]

-6.360215135430859

[4.36564070e-12 1.27082082e-10 1.00000000e+00]

[[2.13000281e-01 1.93701805e-01 5.93297914e-01]

[1.84442949e-01 1.72210452e-01 6.43346599e-01]

[5.62253476e-01 4.37729514e-01 1.70102253e-05]]

[[1.49005602e-01 8.50994398e-01]

[1.86066340e-01 8.13933660e-01]

[9.99346932e-01 6.53068461e-04]]

-6.543366154783859

总结

- HMM是一个双重随机过程,两个集合,三个矩阵

- 概率计算问题

解决算法包括穷举搜索、向前算法(Forward Algorithm)、向后算法(Backward Algorithm) - 学习问题(learning)

鲍姆-韦尔奇算法(Baum-Welch Algorithm) (约等于EM算法) - 预测问题(Decoding)

维特比算法(Viterbi Algorithm)