使用朴素贝叶斯进行垃圾邮件分类

目录

理论

贝叶斯定理

先验概率

后验概率

朴素贝叶斯的优缺点

使用朴素贝叶斯对电子邮件分类

流程

收集数据

数据处理

数据读取并输出

数据分析

测试算法

使用算法

整体代码

理论

贝叶斯定理

先验概率

P(cj)代表还没有训练模型之前,根据历史数据/经验估算cj拥有的初始概率。P(cj)常被称为cj的先验概率(prior probability) ,它反映了cj的概率分布,该分布独立于样本。

后验概率

给定数据样本x时cj成立的概率P(cj | x )被称为后验概率(posterior probability),因为它反映了在看到数据样本 x后 cj 成立的置信度。

已知两个独立事件A和B,事件B发生的前提下,事件A发生的概率可以表示为P(A|B),即上图中橙色部分占红色部分的比例,即:

P(A) 是”先验概率”,指在B事件发生之前,我们对A事件概率的一个判断。如:正常收到一封邮件,该邮件为垃圾邮件的概率就是“先验概率”。

P(A|B)是”后验概率”, 指在B事件发生之后,我们对A事件概率的重新评估,一般是我们求解的目标。如:邮件中含有“中奖”这个词,该邮件为垃圾邮件的概率就是“后验概率”。

P(B|A)/P(B)是可能性函数,这是一个调整因子,使得预估概率更接近真实概率。

条件概率:后验概率=先验概率*调整因子

朴素贝叶斯的优缺点

优点:在数据较少的情况下仍然有效,可以处理多类问题

缺点:对于输入数据的准备方式较为敏感

适用数据类型:标称型数据

使用朴素贝叶斯对电子邮件分类

流程

1.收集数据

2.数据处理

3.训练算法

4.测试算法

5.使用算法

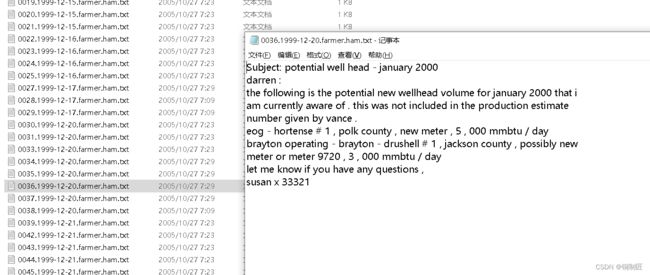

收集数据

数据来源于网络

数据处理

数据读取并输出

import os

import re

import string

import math

DATA_DIR = 'enron'

target_names = ['ham', 'spam']

def get_data(DATA_DIR):

subfolders = ['enron%d' % i for i in range(1,7)] #获得enron下面的文件夹

data = []

target = []

for subfolder in subfolders:

#垃圾邮件 spam

spam_files = os.listdir(os.path.join(DATA_DIR, subfolder, 'spam')) #将文件夹路径进行组合

for spam_file in spam_files: #遍历所有垃圾文件

with open(os.path.join(DATA_DIR, subfolder, 'spam', spam_file), encoding="latin-1") as f:

data.append(f.read())

target.append(1)

#正常邮件 pam

ham_files = os.listdir(os.path.join(DATA_DIR, subfolder, 'ham'))

for ham_file in ham_files:

with open(os.path.join(DATA_DIR, subfolder, 'ham', ham_file), encoding="latin-1") as f:

data.append(f.read())

target.append(0)

return data, target

X, y = get_data(DATA_DIR)

print(X,y)数据分析

class SpamDetector_1(object):

"""Implementation of Naive Bayes for binary classification"""

#清除空格

def clean(self, s):

translator = str.maketrans("", "", string.punctuation)

return s.translate(translator)

#分开每个单词

def tokenize(self, text):

text = self.clean(text).lower()

return re.split("\W+", text)

#计算某个单词出现的次数

def get_word_counts(self, words):

word_counts = {}

for word in words:

word_counts[word] = word_counts.get(word, 0.0) + 1.0

return word_counts

class SpamDetector_2(SpamDetector_1):

# X:data,Y:target标签(垃圾邮件或正常邮件)

def fit(self, X, Y):

self.num_messages = {}

self.log_class_priors = {}

self.word_counts = {}

# 建立一个集合存储所有出现的单词

self.vocab = set()

# 统计spam和ham邮件的个数

self.num_messages['spam'] = sum(1 for label in Y if label == 1)

self.num_messages['ham'] = sum(1 for label in Y if label == 0)

# 计算先验概率,即所有的邮件中,垃圾邮件和正常邮件所占的比例

self.log_class_priors['spam'] = math.log(

self.num_messages['spam'] / (self.num_messages['spam'] + self.num_messages['ham']))

self.log_class_priors['ham'] = math.log(

self.num_messages['ham'] / (self.num_messages['spam'] + self.num_messages['ham']))

self.word_counts['spam'] = {}

self.word_counts['ham'] = {}

for x, y in zip(X, Y):

c = 'spam' if y == 1 else 'ham'

# 构建一个字典存储单封邮件中的单词以及其个数

counts = self.get_word_counts(self.tokenize(x))

for word, count in counts.items():

if word not in self.vocab:

self.vocab.add(word)#确保self.vocab中含有所有邮件中的单词

# 下面语句是为了计算垃圾邮件和非垃圾邮件的词频,即给定词在垃圾邮件和非垃圾邮件中出现的次数。

# c是0或1,垃圾邮件的标签

if word not in self.word_counts[c]:

self.word_counts[c][word] = 0.0

self.word_counts[c][word] += count

MNB = SpamDetector_2()

MNB.fit(X[100:], y[100:])测试算法

class SpamDetector(SpamDetector_2):

def predict(self, X):

result = []

flag_1 = 0

# 遍历所有的测试集

for x in X:

counts = self.get_word_counts(self.tokenize(x)) # 生成可以记录单词以及该单词出现的次数的字典

spam_score = 0

ham_score = 0

flag_2 = 0

for word, _ in counts.items():

if word not in self.vocab: continue

#下面计算P(内容|垃圾邮件)和P(内容|正常邮件),所有的单词都要进行拉普拉斯平滑

else:

# 该单词存在于正常邮件的训练集和垃圾邮件的训练集当中

if word in self.word_counts['spam'].keys() and word in self.word_counts['ham'].keys():

log_w_given_spam = math.log(

(self.word_counts['spam'][word] + 1) / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log(

(self.word_counts['ham'][word] + 1) / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 该单词存在于垃圾邮件的训练集当中,但不存在于正常邮件的训练集当中

if word in self.word_counts['spam'].keys() and word not in self.word_counts['ham'].keys():

log_w_given_spam = math.log(

(self.word_counts['spam'][word] + 1) / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log( 1 / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 该单词存在于正常邮件的训练集当中,但不存在于垃圾邮件的训练集当中

if word not in self.word_counts['spam'].keys() and word in self.word_counts['ham'].keys():

log_w_given_spam = math.log( 1 / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log(

(self.word_counts['ham'][word] + 1) / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 把计算到的P(内容|垃圾邮件)和P(内容|正常邮件)加起来

spam_score += log_w_given_spam

ham_score += log_w_given_ham

flag_2 += 1

# 最后,还要把先验加上去,即P(垃圾邮件)和P(正常邮件)

spam_score += self.log_class_priors['spam']

ham_score += self.log_class_priors['ham']

# 最后进行预测,如果spam_score > ham_score则标志为1,即垃圾邮件

if spam_score > ham_score:

result.append(1)

else:

result.append(0)

flag_1 += 1

return result使用算法

MNB = SpamDetector()

MNB.fit(X[100:], y[100:])

pred = MNB.predict(X[:100])

true = y[:100]

accuracy = 0

for i in range(100):

if pred[i] == true[i]:

accuracy += 1

print(accuracy) 整体代码

import os

import re

import string

import math

DATA_DIR = 'enron'

target_names = ['ham', 'spam']

def get_data(DATA_DIR):

subfolders = ['enron%d' % i for i in range(1,7)]

data = []

target = []

for subfolder in subfolders:

# spam

spam_files = os.listdir(os.path.join(DATA_DIR, subfolder, 'spam'))

for spam_file in spam_files:

with open(os.path.join(DATA_DIR, subfolder, 'spam', spam_file), encoding="latin-1") as f:

data.append(f.read())

target.append(1)

# ham

ham_files = os.listdir(os.path.join(DATA_DIR, subfolder, 'ham'))

for ham_file in ham_files:

with open(os.path.join(DATA_DIR, subfolder, 'ham', ham_file), encoding="latin-1") as f:

data.append(f.read())

target.append(0)

return data, target

X, y = get_data(DATA_DIR)

class SpamDetector_1(object):

"""Implementation of Naive Bayes for binary classification"""

#清除空格

def clean(self, s):

translator = str.maketrans("", "", string.punctuation)

return s.translate(translator)

#分开每个单词

def tokenize(self, text):

text = self.clean(text).lower()

return re.split("\W+", text)

#计算某个单词出现的次数

def get_word_counts(self, words):

word_counts = {}

for word in words:

word_counts[word] = word_counts.get(word, 0.0) + 1.0

return word_counts

class SpamDetector_2(SpamDetector_1):

# X:data,Y:target标签(垃圾邮件或正常邮件)

def fit(self, X, Y):

self.num_messages = {}

self.log_class_priors = {}

self.word_counts = {}

# 建立一个集合存储所有出现的单词

self.vocab = set()

# 统计spam和ham邮件的个数

self.num_messages['spam'] = sum(1 for label in Y if label == 1)

self.num_messages['ham'] = sum(1 for label in Y if label == 0)

# 计算先验概率,即所有的邮件中,垃圾邮件和正常邮件所占的比例

self.log_class_priors['spam'] = math.log(

self.num_messages['spam'] / (self.num_messages['spam'] + self.num_messages['ham']))

self.log_class_priors['ham'] = math.log(

self.num_messages['ham'] / (self.num_messages['spam'] + self.num_messages['ham']))

self.word_counts['spam'] = {}

self.word_counts['ham'] = {}

for x, y in zip(X, Y):

c = 'spam' if y == 1 else 'ham'

# 构建一个字典存储单封邮件中的单词以及其个数

counts = self.get_word_counts(self.tokenize(x))

for word, count in counts.items():

if word not in self.vocab:

self.vocab.add(word)#确保self.vocab中含有所有邮件中的单词

# 下面语句是为了计算垃圾邮件和非垃圾邮件的词频,即给定词在垃圾邮件和非垃圾邮件中出现的次数。

# c是0或1,垃圾邮件的标签

if word not in self.word_counts[c]:

self.word_counts[c][word] = 0.0

self.word_counts[c][word] += count

MNB = SpamDetector_2()

MNB.fit(X[100:], y[100:])

class SpamDetector(SpamDetector_2):

def predict(self, X):

result = []

flag_1 = 0

# 遍历所有的测试集

for x in X:

counts = self.get_word_counts(self.tokenize(x)) # 生成可以记录单词以及该单词出现的次数的字典

spam_score = 0

ham_score = 0

flag_2 = 0

for word, _ in counts.items():

if word not in self.vocab: continue

#下面计算P(内容|垃圾邮件)和P(内容|正常邮件),所有的单词都要进行拉普拉斯平滑

else:

# 该单词存在于正常邮件的训练集和垃圾邮件的训练集当中

if word in self.word_counts['spam'].keys() and word in self.word_counts['ham'].keys():

log_w_given_spam = math.log(

(self.word_counts['spam'][word] + 1) / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log(

(self.word_counts['ham'][word] + 1) / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 该单词存在于垃圾邮件的训练集当中,但不存在于正常邮件的训练集当中

if word in self.word_counts['spam'].keys() and word not in self.word_counts['ham'].keys():

log_w_given_spam = math.log(

(self.word_counts['spam'][word] + 1) / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log( 1 / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 该单词存在于正常邮件的训练集当中,但不存在于垃圾邮件的训练集当中

if word not in self.word_counts['spam'].keys() and word in self.word_counts['ham'].keys():

log_w_given_spam = math.log( 1 / (sum(self.word_counts['spam'].values()) + len(self.vocab)))

log_w_given_ham = math.log(

(self.word_counts['ham'][word] + 1) / (sum(self.word_counts['ham'].values()) + len(

self.vocab)))

# 把计算到的P(内容|垃圾邮件)和P(内容|正常邮件)加起来

spam_score += log_w_given_spam

ham_score += log_w_given_ham

flag_2 += 1

# 最后,还要把先验加上去,即P(垃圾邮件)和P(正常邮件)

spam_score += self.log_class_priors['spam']

ham_score += self.log_class_priors['ham']

# 最后进行预测,如果spam_score > ham_score则标志为1,即垃圾邮件

if spam_score > ham_score:

result.append(1)

else:

result.append(0)

flag_1 += 1

return result

MNB = SpamDetector()

MNB.fit(X[100:], y[100:])

pred = MNB.predict(X[:100])

true = y[:100]

accuracy = 0

for i in range(100):

if pred[i] == true[i]:

accuracy += 1

print(accuracy)