机器臂(二)--视觉控制关节点

在先前配置好的机器臂教程接着下做

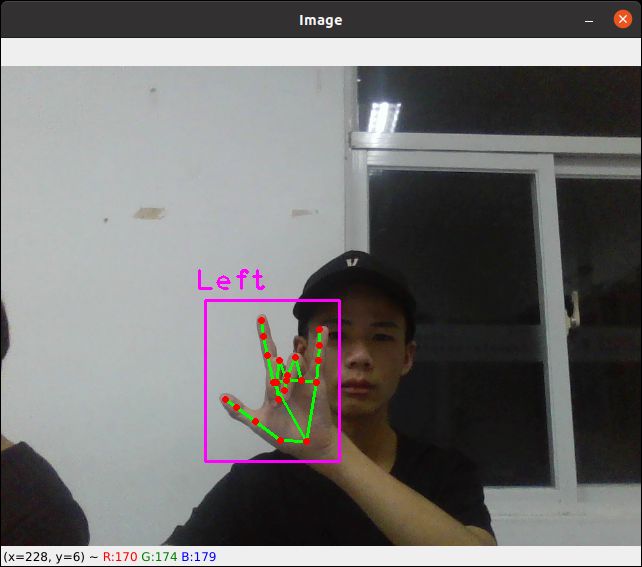

先是视觉方面demo

手势识别的mediapipe 是谷歌开源项目 这个的主要功能是识别手势 然后封装成类给下面的控制模块调用

import cv2

import mediapipe as mp

class HandDetector:

def __init__(self, mode=False, maxHands=2, detectionCon=0.5, minTrackCon=0.5):

self.mode = mode

self.maxHands = maxHands

self.detectionCon = detectionCon

self.minTrackCon = minTrackCon

self.mpHands = mp.solutions.hands

self.hands = self.mpHands.Hands(static_image_mode=self.mode, max_num_hands=self.maxHands,

min_detection_confidence=self.detectionCon,

min_tracking_confidence=self.minTrackCon)

self.mpDraw = mp.solutions.drawing_utils

self.tipIds = [4, 8, 12, 16, 20]

self.fingers = []

self.lmList = []

def findHands(self, img, draw=True, flipType=True):

imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

self.results = self.hands.process(imgRGB)

allHands = []

h, w, c = img.shape

if self.results.multi_hand_landmarks:

for handType, handLms in zip(self.results.multi_handedness, self.results.multi_hand_landmarks):

myHand = {}

## lmList

mylmList = []

xList = []

yList = []

for id, lm in enumerate(handLms.landmark):

px, py, pz = int(lm.x * w), int(lm.y * h), int(lm.z * w)

mylmList.append([px, py, pz])

xList.append(px)

yList.append(py)

## bbox

xmin, xmax = min(xList), max(xList)

ymin, ymax = min(yList), max(yList)

boxW, boxH = xmax - xmin, ymax - ymin

bbox = xmin, ymin, boxW, boxH

cx, cy = bbox[0] + (bbox[2] // 2), \

bbox[1] + (bbox[3] // 2)

myHand["lmList"] = mylmList

myHand["bbox"] = bbox

myHand["center"] = (cx, cy)

if flipType:

if handType.classification[0].label == "Right":

myHand["type"] = "Left"

else:

myHand["type"] = "Right"

else:

myHand["type"] = handType.classification[0].label

allHands.append(myHand)

## draw

if draw:

self.mpDraw.draw_landmarks(img, handLms,

self.mpHands.HAND_CONNECTIONS)

cv2.rectangle(img, (bbox[0] - 20, bbox[1] - 20),

(bbox[0] + bbox[2] + 20, bbox[1] + bbox[3] + 20),

(255, 0, 255), 2)

cv2.putText(img, myHand["type"], (bbox[0] - 30, bbox[1] - 30), cv2.FONT_HERSHEY_PLAIN,

2, (255, 0, 255), 2)

if draw:

return allHands, img

else:

return allHands

def fingersUp(self, myHand):

"""

判断多少个手指起来

"""

myHandType = myHand["type"]

myLmList = myHand["lmList"]

if self.results.multi_hand_landmarks:

fingers = []

# Thumb

if myHandType == "Right":

fingers.append(0)#右手

if myLmList[self.tipIds[0]][0] > myLmList[self.tipIds[0] - 1][0]:

fingers.append(1)

else:

fingers.append(0)

else:

fingers.append(1)#左手

if myLmList[self.tipIds[0]][0] < myLmList[self.tipIds[0] - 1][0]:

fingers.append(1)

else:

fingers.append(0)

# 4 Fingers

for id in range(1, 5):

if myLmList[self.tipIds[id]][1] < myLmList[self.tipIds[id] - 2][1]:

fingers.append(1)

else:

fingers.append(0)

return fingers

def main():

cap = cv2.VideoCapture(0)

detector = HandDetector(detectionCon=0.8, maxHands=2)

while True:

# Get image frame

success, img = cap.read()

# Find the hand and its landmarks

hands, img = detector.findHands(img) # with draw

# hands = detector.findHands(img, draw=False) # without draw

if hands:

# Hand 1

hand1 = hands[0]

lmList1 = hand1["lmList"] # List of 21 Landmark points

handType1 = hand1["type"] # Handtype Left or Right

fingers1 = detector.fingersUp(hand1)

print(fingers1)

if len(hands) == 2:

# Hand 2

hand2 = hands[1]

lmList2 = hand2["lmList"] # List of 21 Landmark points

bbox2 = hand2["bbox"] # Bounding box info x,y,w,h

centerPoint2 = hand2['center'] # center of the hand cx,cy

handType2 = hand2["type"] # Hand Type "Left" or "Right"

fingers2 = detector.fingersUp(hand2)

print(fingers2)

# Display

cv2.imshow("Image", img)

cv2.waitKey(1)

if __name__ == "__main__":

main()控制关节点的python demo 模块

#! /usr/bin/python3.8

# -*- coding: utf-8 -*-

from operator import ge

from time import sleep

import HandTrackingModule as Hd

import rospy

import cv2

from sensor_msgs.msg import JointState

import sys, termios

msg = """

Control Your Turtlebot!

---------------------------

Moving around:

q w e r t y

a s d f g h

j/l : increase/decrease precision by 0.05

space key, k : reset

anything else : stop smoothly

b : switch to arm_four/arm_six

precision is not less than or equal to zero

CTRL-C to quit

"""

mode = 0 #六自由度模式

precision = 0.05 #默认精度(rad)

#键值对应转动方向

rotateBindings = {

'q':(1,1),#第一个关节顺时针

'a':(1,-1),

'w':(2,1),

's':(2,-1),

'e':(3,1),

'd':(3,-1),

'r':(4,1),

'f':(4,-1),

't':(5,1),

'g':(5,-1),

'y':(6,1),

'h':(6,-1)

}

#键值对应精度增量

precisionBindings={

'j':0.01,

'l':-0.01

}

#以字符串格式返回当前控制精度

def prec(precision):

return "currently:\tprecision %s " %precision

# 检测手势

def detect_hands_gesture(result):

#0 1 2 3 4 5 6

#7 8 9 10 11 12

if result[0]==0:

if (result[1:] == [0,1,0,0,0]):

gesture = "a" #

return gesture

elif (result[1:] == [0,1,1,0,0]):

gesture = "s"

return gesture

elif (result[1:] == [0,0,1,1,1]):

gesture = "d"

return gesture

elif (result[1:] == [0,1,1,1,1]):

gesture = "f"

return gesture

elif (result[1:] == [1,1,1,1,1]):

gesture = "g"

return gesture

elif (result[1:] == [1,0,0,0,1]):

gesture = "h"

return gesture

elif (result[1:] == [1,1,0,0,1]):

gesture = " " #R&K手臂直立

return gesture

elif result[0]==1:

if (result[1:] == [0,1,0,0,0]):

gesture = "q"

return gesture

elif (result[1:] == [0,1,1,0,0]):

gesture = "w"

return gesture

elif (result[1:] == [0,0,1,1,1]):

gesture = "e"

return gesture

elif (result[1:] == [0,1,1,1,1]):

gesture = "r"

return gesture

elif (result[1:] == [1,1,1,1,1]):

gesture = "t"

return gesture

elif (result[1:] == [1,0,0,0,1]):#小拇指

gesture = "y"

return gesture

#主函数

if __name__=="__main__":

settings = termios.tcgetattr(sys.stdin) #获取键值初始化,读取终端相关属性

rospy.init_node('arm_teleop') #创建ROS节点

pub = rospy.Publisher('/joint_states', JointState, queue_size=5) #创建机械臂状态话题发布者

#关节1-6对应弧度状态

joints = [0,0,0,0,0,0]

"""机械臂关节初始化"""

jointState = JointState() #创建ROS机械臂装态话题消息变量

jointState.header.stamp = rospy.Time.now()

jointState.name=["joint1","joint2","joint3","joint4","joint5","joint6"]

jointState.position=joints

pub.publish(jointState) #ROS发布机械臂状态话题

# 接入USB摄像头时,注意修改cap设备的编号

cap = cv2.VideoCapture(0)

detector = Hd.HandDetector(detectionCon=0.8, maxHands=2)

if not cap.isOpened():

print("Can not open camera.")

exit()

try:

print(msg) #打印控制说明

print(prec(precision)) #打印当前精度

key = " "

while(1):

# Get image frame

success, img = cap.read()

# Find the hand and its landmarks

hands, img = detector.findHands(img) # with draw

# hands = detector.findHands(img, draw=False) # without draw

cv2.imshow("Image", img)

cv2.waitKey(1)

if hands :

key = detect_hands_gesture(detector.fingersUp(hands[0]))

#判断键值是在控制机械臂运动的键值内

if key in rotateBindings.keys():

joints[rotateBindings[key][0]-1] = joints[rotateBindings[key][0]-1] + precision*rotateBindings[key][1]

if joints[rotateBindings[key][0]-1]>1.57:

joints[rotateBindings[key][0]-1]=1.57

elif joints[rotateBindings[key][0]-1]<-1.57:

joints[rotateBindings[key][0]-1]=-1.57

#判断键值是否在精度增量键值内

elif key in precisionBindings.keys():

if (precision+precisionBindings[key])<0.01 or (precision+precisionBindings[key])>0.1:

pass

else:

precision+=precisionBindings[key]

print(prec(precision)) #精度发生变化,打印出来

#根据机械臂自由度给joint_states话题赋予消息

if mode:

jointState.header.stamp = rospy.Time.now()

jointState.name=["joint1","joint2","joint3","joint4"]

jointState.position=joints[0:4]

else:

jointState.header.stamp = rospy.Time.now()

jointState.name=["joint1","joint2","joint3","joint4","joint5","joint6"]

jointState.position=joints

pub.publish(jointState) #ROS发布关节状态话题

elif key ==" ":

joints = [0,0,0,0,0,0]

#运行出现问题则程序终止并打印相关错误信息

except Exception as e:

print(e)

#程序结束前发布速度为0的速度话题

finally:

print("Keyboard control off")

#程序结束前打印消息提示

termios.tcsetattr(sys.stdin, termios.TCSADRAIN, settings)感觉判断的地方有点小拉跨

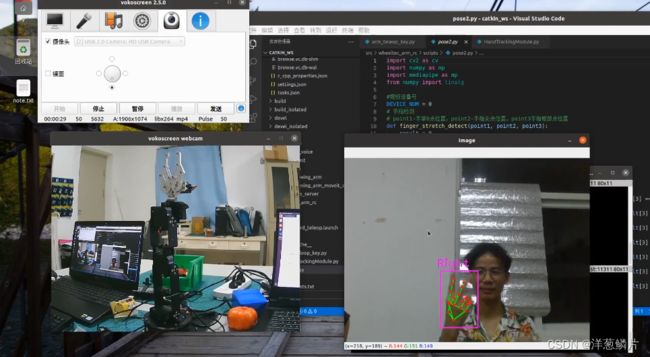

所有的python 写完记得

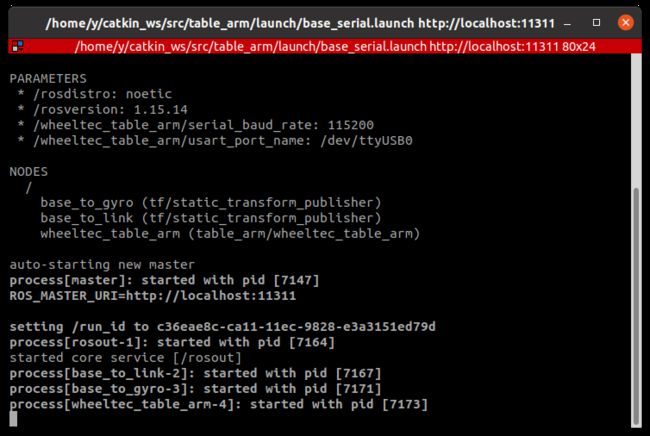

sudo chmod 777 *.py启动桌面机器人的基础服务

roslaunch table_arm base_serial.launch启动

roslaunch table_arm arm_opencv_move.py

ros_opencv_机器爪控制-2_哔哩哔哩_bilibili