Machine Learning with MATLAB Code: IWOA_BILSTM (BiLSTM Prediction Optimized by an Improved Whale Optimization Algorithm) (Part 16)


- Code

- Data

- Results

Code

1. Main script

%% BiLSTM prediction optimized by the improved whale optimization algorithm (IWOA)

clear;close all;

clc

rng('default')

%% Read the power-load data

load('QLD1.mat')

data = QLD1(1:2000);

% Use the first 90% of the series for training and the last 10% for testing

numTimeStepsTrain = floor(0.9*numel(data));

dataTrain = data(1:numTimeStepsTrain+1)';

dataTest = data(numTimeStepsTrain+1:end)';

% Preprocessing: standardize the training data to zero mean and unit variance.

mu = mean(dataTrain);

sig = std(dataTrain);

dataTrainStandardized = (dataTrain - mu) / sig;

% The BiLSTM predictors and responses are the same series shifted by one time step

XTrain = dataTrainStandardized(1:end-1);

YTrain = dataTrainStandardized(2:end);

% Preprocessing: standardize the test data to zero mean and unit variance (using the test split's own statistics).

mu = mean(dataTest);

sig = std(dataTest);

dataTestStandardized = (dataTest - mu) / sig;

XTest = dataTestStandardized(1:end-1);

YTest = dataTestStandardized(2:end);
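The preprocessing above standardizes the test split with its own mean and standard deviation. A common alternative is to reuse the training statistics, so that both splits share one scale and predictions can be mapped back to the original units. The following is only a minimal sketch of that alternative, not the author's method; the synthetic series and the names `muTrain`, `sigTrain`, and `YTestOriginal` are assumptions introduced for illustration.

```matlab
% Sketch: standardize the test split with TRAINING statistics.
% Assumption: synthetic data stands in for QLD1, which is not available here.
rng(0);                                          % reproducible synthetic series
data = 100 + 10*sin((1:200)/8) + randn(1,200);   % hypothetical load-like series (row vector)

numTimeStepsTrain = floor(0.9*numel(data));
dataTrain = data(1:numTimeStepsTrain+1);
dataTest  = data(numTimeStepsTrain+1:end);

muTrain  = mean(dataTrain);                      % statistics from the training split only
sigTrain = std(dataTrain);

dataTrainStandardized = (dataTrain - muTrain) / sigTrain;
dataTestStandardized  = (dataTest  - muTrain) / sigTrain;   % reuse training statistics

% One-step-ahead pairs, built the same way as in the script
XTest = dataTestStandardized(1:end-1);
YTest = dataTestStandardized(2:end);

% Map standardized values back to the original units
YTestOriginal = sigTrain*YTest + muTrain;
```

With this variant, an MSE computed on standardized values is expressed in the same scale for the training and test splits.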

%%

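% Create the BiLSTM regression network; the number of hidden units is chosen by IWOA below

% This is sequence prediction, so the input and the output are both one-dimensional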

numFeatures = 1;

numResponses = 1;

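%% Define the IWOA optimization parameters

pop=5; % population size

Max_iteration=10; % maximum number of iterations

dim = 4; % dimension: number of BiLSTM hidden units, max epochs, initial learning rate, L2 regularization factor

lb = [20,50,10E-5,10E-6]; % lower bounds (note: 10E-5 equals 1e-4 and 10E-6 equals 1e-5)

ub = [200,300,0.1,0.1]; % upper bounds

fobj = @(x) fun(x,numFeatures,numResponses,XTrain,YTrain,XTest,YTest);

[Best_score,Best_pos,IWOA_curve,netIWOA,pos_curve]=IWOA(pop,Max_iteration,lb,ub,dim,fobj); % run the optimization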

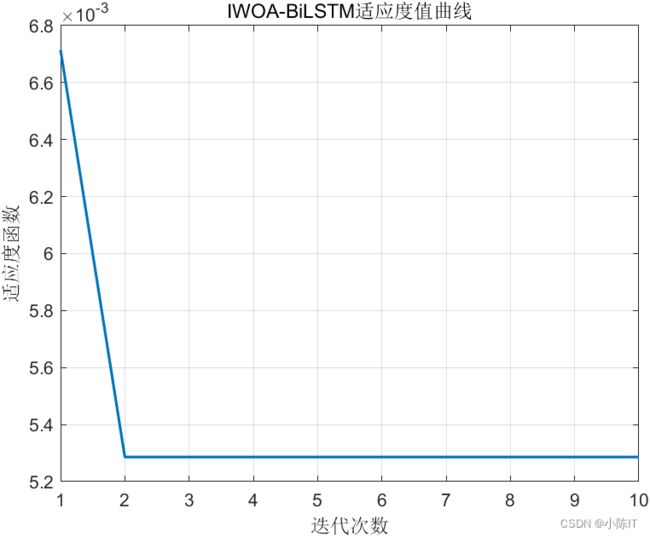

figure

plot(IWOA_curve,'linewidth',1.5);

grid on

xlabel('Iteration')

ylabel('Fitness value')

title('IWOA-BiLSTM fitness curve')

figure

subplot(221)

plot(pos_curve(:,1),'linewidth',1.5);

grid on

xlabel('Iteration')

ylabel('Number of hidden units')

title('Number of hidden units per iteration')

subplot(222)

plot(pos_curve(:,2),'linewidth',1.5);

grid on

xlabel('Iteration')

ylabel('Max epochs')

title('Max epochs per iteration')

subplot(223)

plot(pos_curve(:,3),'linewidth',1.5);

grid on

xlabel('Iteration')

ylabel('Learning rate')

title('Learning rate per iteration')

subplot(224)

plot(pos_curve(:,4),'linewidth',1.5);

grid on

xlabel('Iteration')

ylabel('L2 factor')

title('L2 factor per iteration')

% Predict on the training set

PredictTrainIWOA = predict(netIWOA,XTrain, 'ExecutionEnvironment','gpu');

% Predict on the test set

PredictTestIWOA = predict(netIWOA,XTest, 'ExecutionEnvironment','gpu');

% Training-set MSE

mseTrainIWOA= mse(YTrain-PredictTrainIWOA);

% Test-set MSE

mseTestIWOA = mse(YTest-PredictTestIWOA);

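%% Hyperparameters found by IWOA-BiLSTM

numHiddenUnits = round(Best_pos(1)); % number of hidden units in the BiLSTM network

maxEpochs = round(Best_pos(2)); % maximum number of training epochs

InitialLearnRate = Best_pos(3); % initial learning rate

L2Regularization = Best_pos(4); % L2 regularization factor

% Set up the network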

layers = [ ...

sequenceInputLayer(numFeatures)

bilstmLayer(numHiddenUnits)

fullyConnectedLayer(numResponses)

regressionLayer];

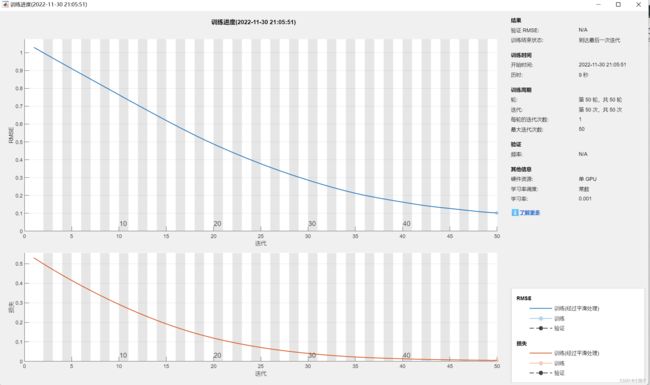

% Specify the training options

options = trainingOptions('adam', ...

'MaxEpochs',maxEpochs, ...

'ExecutionEnvironment' ,'gpu',...

'InitialLearnRate',InitialLearnRate,...

'GradientThreshold',1, ...

'L2Regularization',L2Regularization, ...

'Plots','training-progress',...

'Verbose',0);

% Train the BiLSTM

[net,info] = trainNetwork(XTrain,YTrain,layers,options);

%% Training RMSE curve

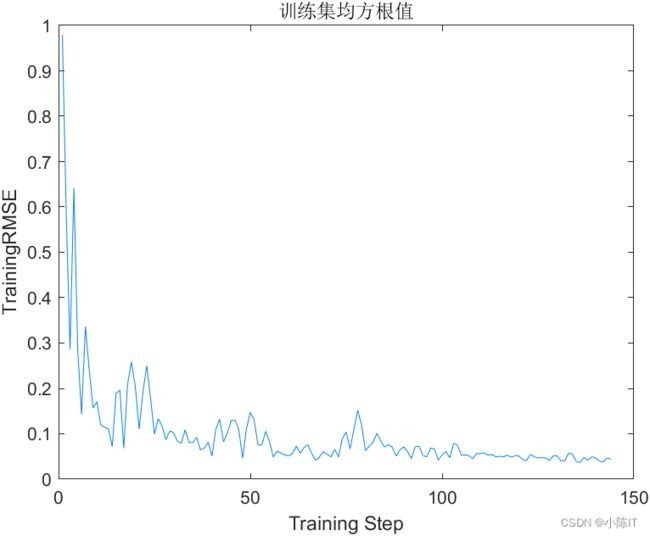

figure;

plot(info.TrainingRMSE,'Color',[0 0.5 1] );

ylabel('TrainingRMSE')

xlabel('Training Step');

title('Training RMSE');

%% Training loss curve

figure;

plot(info.TrainingLoss,'Color',[1 0.5 0] );

ylabel('Training Loss')

xlabel('Training Step');

title('Training loss');

%% Baseline BiLSTM test

numHiddenUnits = 50;

layers = [ ...

sequenceInputLayer(numFeatures)

bilstmLayer(numHiddenUnits)

fullyConnectedLayer(numResponses)

regressionLayer];

% Specify the training options

options = trainingOptions('adam', ...

'MaxEpochs',50, ...

'ExecutionEnvironment' ,'gpu',...

'GradientThreshold',1, ...

'InitialLearnRate',0.001, ...

'L2Regularization',0.0001,...

'Plots','training-progress',...

'Verbose',1);

% Train the BiLSTM

net = trainNetwork(XTrain,YTrain,layers,options);

% Predict on the training set

PredictTrain = predict(net,XTrain, 'ExecutionEnvironment','gpu');

% Predict on the test set

PredictTest = predict(net,XTest, 'ExecutionEnvironment','gpu');

% Training-set MSE

mseTrain = mse(YTrain-PredictTrain);

% Test-set MSE

mseTest = mse(YTest-PredictTest);

disp('-------------------------------------------------------------')

disp('Optimal hyperparameters found by IWOA-BiLSTM:')

disp(['Number of hidden units: ',num2str(round(Best_pos(1)))]);

disp(['Max epochs: ',num2str(round(Best_pos(2)))]);

disp(['InitialLearnRate: ',num2str((Best_pos(3)))]);

disp(['L2Regularization: ',num2str((Best_pos(4)))]);

disp('-------------------------------------------------------------')

disp('IWOA-BiLSTM results:')

disp(['IWOA-BiLSTM training-set MSE: ',num2str(mseTrainIWOA)]);

disp(['IWOA-BiLSTM test-set MSE: ',num2str(mseTestIWOA)]);

disp('BiLSTM results:')

disp(['BiLSTM training-set MSE: ',num2str(mseTrain)]);

disp(['BiLSTM test-set MSE: ',num2str(mseTest)]);

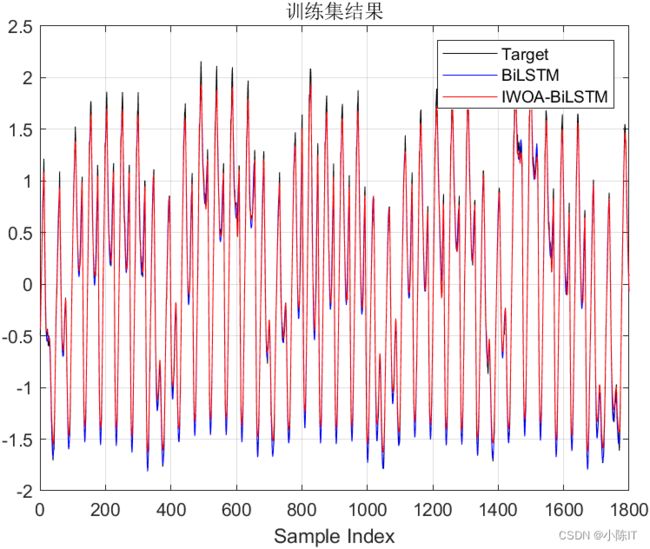

%% Plot the training-set results

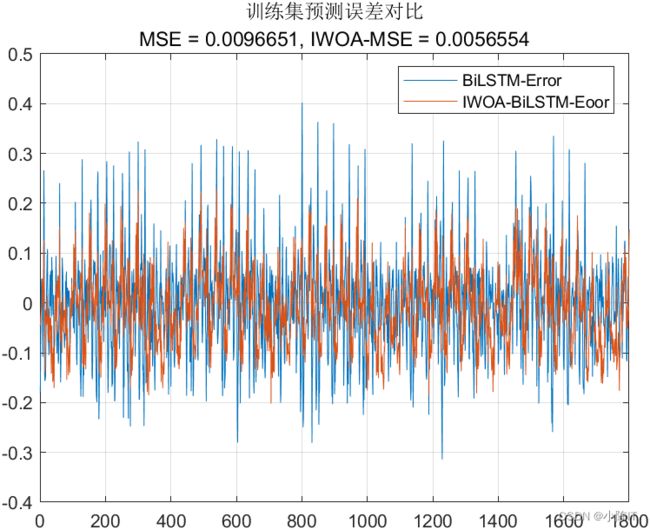

errors=YTrain-PredictTrain;

errorsIWOA=YTrain-PredictTrainIWOA;

MSE=mean(errors.^2);

RMSE=sqrt(MSE);

MSEIWOA=mean(errorsIWOA.^2);

RMSEIWOA=sqrt(MSEIWOA);

error_mean=mean(errors);

error_std=std(errors);

error_meanIWOA=mean(errorsIWOA);

error_stdIWOA=std(errorsIWOA);

figure;

plot(YTrain,'k');

hold on;

plot(PredictTrain,'b');

plot(PredictTrainIWOA,'r');

legend('Target','BiLSTM','IWOA-BiLSTM');

title('Training-set results');

xlabel('Sample Index');

grid on;

figure;

plot(errors);

hold on

plot(errorsIWOA);

legend('BiLSTM-Error','IWOA-BiLSTM-Error');

title({'Training-set prediction error comparison';['MSE = ' num2str(MSE), ', IWOA-MSE = ' num2str(MSEIWOA)]});

grid on;

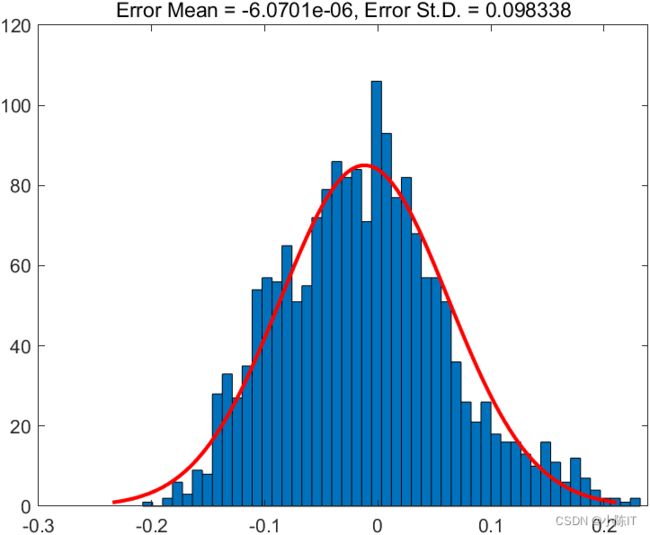

figure;

histfit(errorsIWOA, 50);

title(['IWOA-BiLSTM: Error Mean = ' num2str(error_meanIWOA), ', Error St.D. = ' num2str(error_stdIWOA)]); % statistics of the plotted IWOA errors

%% Plot the test-set results

errors=YTest-PredictTest;

errorsIWOA=YTest-PredictTestIWOA;

MSE=mean(errors.^2);

RMSE=sqrt(MSE);

MSEIWOA=mean(errorsIWOA.^2);

RMSEIWOA=sqrt(MSEIWOA);

error_mean=mean(errors);

error_std=std(errors);

error_meanIWOA=mean(errorsIWOA);

error_stdIWOA=std(errorsIWOA);

figure;

plot(YTest,'k');

hold on;

plot(PredictTest,'b');

plot(PredictTestIWOA,'r');

legend('Target','BiLSTM','IWOA-BiLSTM');

title('Test-set results');

xlabel('Sample Index');

grid on;

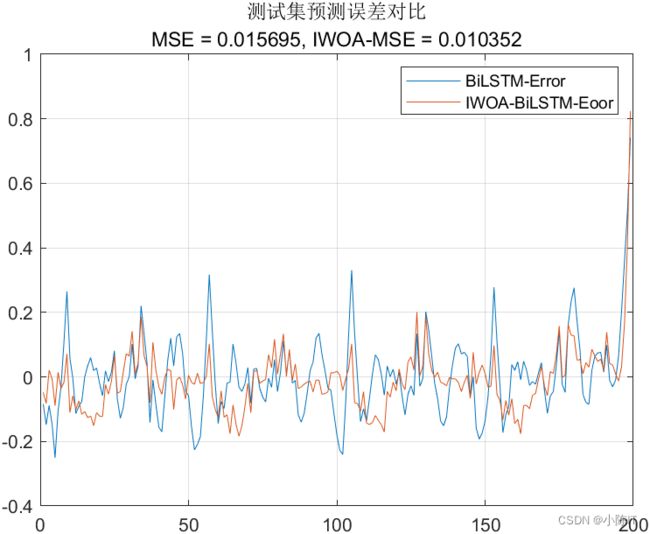

figure;

plot(errors);

hold on

plot(errorsIWOA);

legend('BiLSTM-Error','IWOA-BiLSTM-Error');

title({'Test-set prediction error comparison';['MSE = ' num2str(MSE), ', IWOA-MSE = ' num2str(MSEIWOA)]});

grid on;

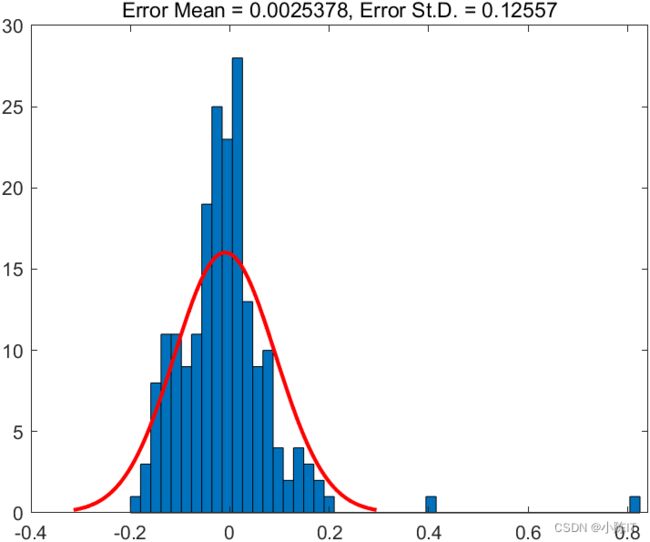

figure;

histfit(errorsIWOA, 50);

title(['IWOA-BiLSTM: Error Mean = ' num2str(error_meanIWOA) ', Error St.D. = ' num2str(error_stdIWOA)]); % statistics of the plotted IWOA errors

2. IWOA function

%% [1] 武泽权 (Wu Zequan), 牟永敏 (Mou Yongmin). An improved whale optimization algorithm [J]. 计算机应用研究 (Application Research of Computers), 2020, 37(12): 3618-3621.

function [Leader_score,Leader_pos,Convergence_curve,BestNet,pos_curve]=IWOA(SearchAgents_no,Max_iter,lb,ub,dim,fobj)

% initialize position vector and score for the leader

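net = {};

BestNet = {}; % network of the best agent found so far (stays empty if no finite fitness is ever seen)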

Leader_pos=zeros(1,dim);

Leader_score=inf; %change this to -inf for maximization problems

%% Improvement: quasi-opposition-based initialization

Positions=initializationNew(SearchAgents_no,dim,ub,lb,fobj);

Convergence_curve=zeros(1,Max_iter);

t=0;% Loop counter

% Main loop

while t<Max_iter

for i=1:size(Positions,1)

% Return back the search agents that go beyond the boundaries of the search space

Flag4ub=Positions(i,:)>ub;

Flag4lb=Positions(i,:)<lb;

Positions(i,:)=(Positions(i,:).*(~(Flag4ub+Flag4lb)))+ub.*Flag4ub+lb.*Flag4lb;

% Calculate objective function for each search agent

% fitness=fobj(Positions(i,:));

[fitness,net] = fobj(Positions(i,:));

% Update the leader

if fitness<Leader_score % Change this to > for maximization problem

Leader_score=fitness; % Update alpha

Leader_pos=Positions(i,:);

BestNet = net; % keep the network that produced the best fitness, not the last one evaluated

end

end

%% Improvement: nonlinear convergence factor

a=2 - sin(t*pi/(2*Max_iter) + 0);

% a2 linearly decreases from -1 to -2 to calculate t in Eq. (3.12)

a2=-1+t*((-1)/Max_iter);

%% Improvement: adaptive weight

w = 1 - (exp(t/Max_iter) - 1)/(exp(1) -1);

% Update the Position of search agents

for i=1:size(Positions,1)

r1=rand(); % r1 is a random number in [0,1]

r2=rand(); % r2 is a random number in [0,1]

A=2*a*r1-a; % Eq. (2.3) in the paper

C=2*r2; % Eq. (2.4) in the paper

b=1; % parameters in Eq. (2.5)

l=(a2-1)*rand+1; % parameters in Eq. (2.5)

p = rand(); % p in Eq. (2.6)

for j=1:size(Positions,2)

if p<0.5

if abs(A)>=1

rand_leader_index = floor(SearchAgents_no*rand()+1);

X_rand = Positions(rand_leader_index, :);

D_X_rand=abs(C*X_rand(j)-Positions(i,j)); % Eq. (2.7)

Positions(i,j)=w*X_rand(j)-A*D_X_rand; % apply the adaptive weight

elseif abs(A)<1

D_Leader=abs(C*Leader_pos(j)-Positions(i,j)); % Eq. (2.1)

Positions(i,j)=w*Leader_pos(j)-A*D_Leader; % apply the adaptive weight

end

elseif p>=0.5

distance2Leader=abs(Leader_pos(j)-Positions(i,j));

% Eq. (2.5)

Positions(i,j)=distance2Leader*exp(b.*l).*cos(l.*2*pi)+w*Leader_pos(j); % apply the adaptive weight

end

end

% Boundary handling

Flag4ub=Positions(i,:)>ub;

Flag4lb=Positions(i,:)<lb;

Positions(i,:)=(Positions(i,:).*(~(Flag4ub+Flag4lb)))+ub.*Flag4ub+lb.*Flag4lb;

%% Improvement: random differential mutation

Rindex = randi(SearchAgents_no); % pick a random individual

r1 = rand; r2 = rand;

Temp = r1.*(Leader_pos - Positions(i,:)) + r2.*(Positions(Rindex,:) - Positions(i,:));

Flag4ub=Temp>ub;

Flag4lb=Temp<lb;

Temp=(Temp.*(~(Flag4ub+Flag4lb)))+ub.*Flag4ub+lb.*Flag4lb;

if fobj(Temp) < fobj(Positions(i,:))

Positions(i,:) = Temp;

end

end

t=t+1;

Convergence_curve(t)=Leader_score;

pos_curve(t,:)=Leader_pos;

fprintf(1,'%g\n',t);

end
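The three per-iteration control parameters in the loop above (`a`, `a2`, and `w`) are easier to follow when tabulated. The sketch below simply re-evaluates the same three expressions outside the optimizer; `Max_iter = 10` mirrors the main script, and the `schedule` table is a name introduced here for illustration.

```matlab
% Sketch: tabulate the control parameters that IWOA computes in each iteration.
Max_iter = 10;                       % same value as Max_iteration in the main script
t = (0:Max_iter-1)';                 % values of t at the moment a, a2 and w are computed

a  = 2 - sin(t*pi/(2*Max_iter));     % nonlinear convergence factor
a2 = -1 + t*((-1)/Max_iter);         % linear term that feeds the spiral parameter l
w  = 1 - (exp(t/Max_iter) - 1)/(exp(1) - 1);   % adaptive weight

schedule = table(t, a, a2, w);       % one row per iteration
disp(schedule)
```

As coded, `a` starts at 2 and decreases toward 1 without reaching it, whereas the standard WOA schedule decreases `a` linearly from 2 to 0. The weight `w` starts at 1 and decreases toward 0, so later iterations scale the leader position down more strongly.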

3. Quasi-opposition-based initialization function

%% Population initialization based on the quasi-opposition strategy

function Positions=initializationNew(SearchAgents_no,dim,ub,lb,fun)

Boundary_no= size(ub,2); % number of boundaries

BackPositions = zeros(SearchAgents_no,dim);

if Boundary_no==1

PositionsF=rand(SearchAgents_no,dim).*(ub-lb)+lb;

% Compute the opposite population

BackPositions = ub + lb - PositionsF;

end

% If each variable has a different lb and ub

if Boundary_no>1

for i=1:dim

ub_i=ub(i);

lb_i=lb(i);

PositionsF(:,i)=rand(SearchAgents_no,1).*(ub_i-lb_i)+lb_i;

% Compute the opposite population

BackPositions(:,i) = (ub_i+lb_i) - PositionsF(:,i);

end

end

%% Quasi-opposition step: sample uniformly between the interval midpoint and the opposite point

for i = 1:SearchAgents_no

for j = 1:dim

if Boundary_no==1

if (ub + lb)/2 <BackPositions(i,j)

Lb = (ub + lb)/2;

Ub = BackPositions(i,j);

PBackPositions(i,j) = (Ub - Lb)*rand + Lb;

else

Lb = BackPositions(i,j);

Ub = (ub + lb)/2;

PBackPositions(i,j) = (Ub - Lb)*rand + Lb;

end

else

if (ub(j) + lb(j))/2 <BackPositions(i,j)

Lb = (ub(j) + lb(j))/2;

Ub = BackPositions(i,j);

PBackPositions(i,j) = (Ub - Lb)*rand + Lb;

else

Lb = BackPositions(i,j);

Ub = (ub(j) + lb(j))/2;

PBackPositions(i,j) = (Ub - Lb)*rand + Lb;

end

end

end

end

% Merge the random and quasi-opposite populations

AllPositionsTemp = [PositionsF;PBackPositions];

AllPositions = AllPositionsTemp;

for i = 1:size(AllPositionsTemp,1)

% fitness(i) = fun(AllPositionsTemp(i,:));

[fitness(i),net{i}] = fun(AllPositionsTemp(i,:));

fprintf(1,'%g\n',i);

end

[fitness, index]= sort(fitness); % sort in ascending order of fitness

for i = 1:2*SearchAgents_no

AllPositions(i,:) = AllPositionsTemp(index(i),:);

end

% Use the top-ranked candidates as the initial population

Positions = AllPositions(1:SearchAgents_no,:);

end
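The nested loops in the function above can be hard to read, so here is a vectorized sketch of only the quasi-opposition step, without the fitness evaluation and ranking. It assumes per-dimension bounds and MATLAB R2016b or later (for implicit expansion); the names `X`, `Xopp`, `center`, and `Xq` are introduced for illustration and do not appear in the original code.

```matlab
% Sketch: quasi-opposite points for a random population (no fitness ranking).
rng(1);                              % reproducible example
lb = [20 50 1e-4 1e-5];              % same numeric bounds as the main script
ub = [200 300 0.1 0.1];
n  = 5;                              % population size

X      = lb + rand(n, numel(lb)).*(ub - lb);   % random population inside the bounds
Xopp   = lb + ub - X;                          % opposite population
center = (lb + ub)/2;                          % midpoint of each dimension

% Quasi-opposite point: uniform sample between the midpoint and the opposite point
Xq = center + rand(size(X)).*(Xopp - center);

candidates = [X; Xq];                % 2n candidates, to be ranked by fitness
```

Because each quasi-opposite point lies between the midpoint and the opposite point, it stays inside the bounds and needs no clamping.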

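4. Fitness function

% Fitness function

% The MSE serves as the fitness value

function [fitness,net] = fun(x,numFeatures,numResponses,XTrain,YTrain,XTest,YTest)

disp('Training one candidate network...')

%% Decode the hyperparameters being optimized

numHiddenUnits = round(x(1)); % number of hidden units in the BiLSTM network

maxEpochs = round(x(2)); % maximum number of training epochs

InitialLearnRate = x(3); % initial learning rate

L2Regularization = x(4); % L2 regularization factor

% Set up the network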

layers = [ ...

sequenceInputLayer(numFeatures)

bilstmLayer(numHiddenUnits)

fullyConnectedLayer(numResponses)

regressionLayer];

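% Specify the training options. Training runs on the GPU here, which requires a CUDA-capable GPU; set 'ExecutionEnvironment' to 'cpu' (here and in the predict calls below) if none is available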

options = trainingOptions('adam', ...

'MaxEpochs',maxEpochs, ...

'ExecutionEnvironment' ,'gpu',...

'InitialLearnRate',InitialLearnRate,...

'GradientThreshold',1, ...

'L2Regularization',L2Regularization, ...

'Verbose',0);

%'Plots','training-progress'

% Train the BiLSTM

net = trainNetwork(XTrain,YTrain,layers,options);

% Predict on the training set

PredictTrain = predict(net,XTrain, 'ExecutionEnvironment','gpu');

% Predict on the test set

PredictTest = predict(net,XTest, 'ExecutionEnvironment','gpu');

% Training-set MSE

mseTrain = mse(YTrain-PredictTrain);

% Test-set MSE

mseTest = mse(YTest-PredictTest);

%% Fitness: sum of the training-set and test-set MSE

fitness =mseTrain+mseTest;

disp('Training finished.')

end
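The fitness above adds the test-set MSE, so the test data influences which hyperparameters are selected. One common alternative, shown here only as a sketch under stated assumptions and not as the author's method, is to hold out the tail of the training series as a validation split and score candidates on that instead. The random series, `valFraction`, and the `XFit`/`YFit`/`XVal`/`YVal` names are hypothetical.

```matlab
% Sketch: hold out the tail of the training series for fitness evaluation.
rng(0);
trainSeries = randn(1, 1801);        % stand-in for dataTrainStandardized (hypothetical data)

XAll = trainSeries(1:end-1);         % predictors
YAll = trainSeries(2:end);           % responses, shifted by one time step

valFraction = 0.2;                   % assumed share of samples kept for validation
numFit = floor((1 - valFraction)*numel(XAll));

XFit = XAll(1:numFit);       YFit = YAll(1:numFit);       % used to train each candidate
XVal = XAll(numFit+1:end);   YVal = YAll(numFit+1:end);   % used to score each candidate

fprintf('fit samples: %d, validation samples: %d\n', numel(XFit), numel(XVal));
```

Under this variant, `fun` would train on `XFit`/`YFit` and return the MSE on `XVal`/`YVal`, leaving `XTest`/`YTest` untouched until the final comparison with the baseline BiLSTM.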