Tensorflow简单项目实战——fashion_mnist

目录

一、数据集简介

二、实验环境

三、实验细节

3.1 数据集准备

3.2 tf.keras.Sequential构建网络

3.3 利用tf.keras.Model构建模型

一、数据集简介

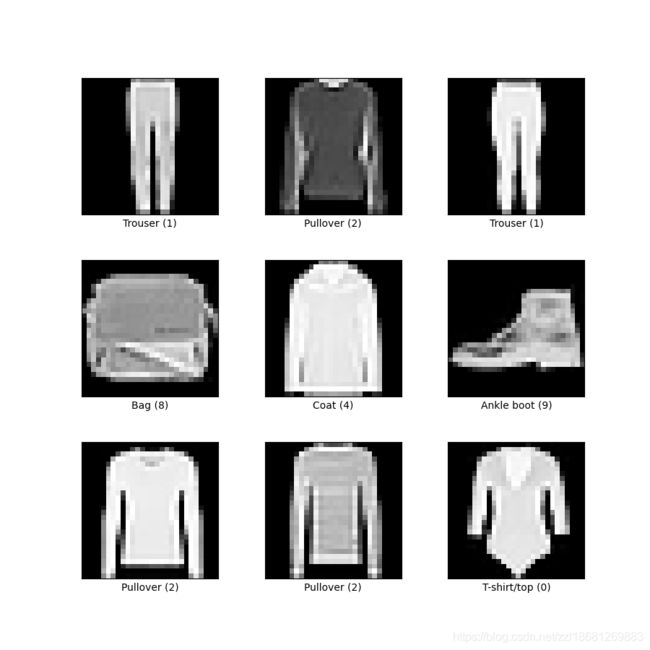

采用Tensorflow官方数据集fashion_mnist

训练集由60000个图像组成,测试集图像10000张。图片依据衣服分为10个类别

每张图都是28x28的灰度图像,

FeaturesDict({

'image': Image(shape=(28, 28, 1), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=10),

})二、实验环境

Python3.6

numpy

tensorflow

matplotlib

tensorflow-datasets (安装好tensorflow后直接pip install tensorflow-datasets)

三、实验细节

3.1 数据集准备

首先利用tensorflow-datasets的tfds.load实现一行代码载入数据集

#载入数据集,利用tfds.load

#载入名称为'fashion_mnist',选择train和test进行载入,打乱图像,下载数据集,将数据集作为二元组(input,label)返回,

(fashion_train,fashion_test),fashion_info=tfds.load(name='fashion_mnist',split=['train','test'],shuffle_files=True,

download=True,as_supervised=True,with_info=True)可以先运行一下来加载数据集,第一次下载比较费时间,后面就不会了

接下来让我们看一下训练集里面的图片和标签是什么样的

fig=tfds.show_examples(fashion_train,fashion_info)对于训练集,由于图像类别是uint8,我们要先将其转化为float32,并进行归一化

#tf.cast格式转换

defnormalize_img(image,label):

returntf.cast(image,tf.float32)/255,0,label

#方法map 功能将map_func映射到此数据集的元素

fashion_train=fashion_train.map(map_func=normalize_img)接着是一些常规操作缓存→打乱→batch→prefetch预期

#缓存,未指定文件时会缓存到内存中

fashion_train=fashion_train.cache()

#打乱,对于完美的混洗,缓冲区大小需要大于或等于数据集的完整大小

fashion_train=fashion_train.shuffle(buffer_size=fashion_info.splits['train'].num_examples,seed=1)

#batch

fashion_train=fashion_train.batch(128)

#prefetch,tf.data.experimental.AUTOTUNE表示动态调整缓冲区大小

fashion_train=fashion_train.prefetch(tf.data.experimental.AUTOTUNE)对于测试集,不需要打乱操作

fashion_test=fashion_test.map(map_func=normalize_img)

fashion_test=fashion_test.cache()

fashion_test=fashion_test.batch(128)

fashion_test=fashion_test.prefetch(tf.data.experimental.AUTOTUNE)至此数据集就准备完成 , 开始构建模型 并训练, 可以采用两个方法,一是利用tf.keras.Sequential构建网络并训练,另外是利用tf.model构建

3.2 tf.keras.Sequential构建网络

#构建网络结构

model=tf.keras.models.Sequential([

tf.keras.layers.Flatten(input_shape=(28,28)),

f.keras.layers.Dense(128,activation='relu'),

tf.keras.layers.Dense(10)

])

#配置用于训练的模型

model.compile(

optimizer=tf.keras.optimizers.Adam(),#优化器

loss=tf.keras.losses.SparseCategoricalCrossentropy(),#损失函数

metrics=[tf.keras.metrics.SparseCategoricalCrossentropy()]#精度指标

)

#训练模型

model.fit(

fashion_train,

epochs=50,

validation_data=fashion_test

)

完整的代码

import tensorflow_datasets as tfds

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# 配置GPU模式

gpus = tf.config.list_physical_devices(device_type='GPU')

for gpu in gpus:

tf.config.experimental.set_memory_growth(device=gpu, enable=True)

# 载入数据集,利用tfds.load

# 载入名称为'fashion_mnist' , 选择 train和test进行载入,打乱图像,下载数据集,将数据集作为二元组(input,label)返回,

(fashion_train, fashion_test), fashion_info = tfds.load(name='fashion_mnist', split=['train', 'test'],

shuffle_files=True,

download=True, as_supervised=True, with_info=True)

# fig = tfds.show_examples(fashion_train,fashion_info)

# tf.cast 格式转换

def normalize_img(image, label):

return tf.cast(image, tf.float32) / 255, 0, label

# 方法 map 功能 将 map_func 映射到此数据集的元素

fashion_train = fashion_train.map(map_func=normalize_img)

# 缓存,未指定文件时会缓存到内存中

fashion_train = fashion_train.cache()

# 打乱, 对于完美的混洗,缓冲区大小需要大于或等于数据集的完整大小

fashion_train = fashion_train.shuffle(buffer_size=fashion_info.splits['train'].num_examples, seed=1)

# batch

fashion_train = fashion_train.batch(128)

# prefetch , tf.data.experimental.AUTOTUNE表示动态调整缓冲区大小

fashion_train = fashion_train.prefetch(tf.data.experimental.AUTOTUNE)

fashion_test = fashion_test.map(map_func=normalize_img)

fashion_test = fashion_test.cache()

fashion_test = fashion_test.batch(128)

fashion_test = fashion_test.prefetch(tf.data.experimental.AUTOTUNE)

#构建网络结构

model = tf.keras.models.Sequential([

tf.keras.layers.Flatten(input_shape=(28,28)),

f.keras.layers.Dense(128,activation='relu'),

tf.keras.layers.Dense(10)

])

#配置用于训练的模型

model.compile(

optimizer=tf.keras.optimizers.Adam(),#优化器

loss = tf.keras.losses.SparseCategoricalCrossentropy(),#损失函数

metrics=[tf.keras.metrics.SparseCategoricalCrossentropy()]#精度指标

)

#训练模型

model.fit(

fashion_train,

epochs=10,

validation_data=fashion_test

)

evaluate = model.evaluate(

fashion_test,

verbose=1

)

3.3 利用tf.keras.Model构建模型

class MyModel(tf.keras.Model):

def __init__(self):

super().__init__()

self.flatten = tf.keras.layers.Flatten(input_shape=(28,28))

self.dense1 = tf.keras.layers.Dense(128,activation='relu')

self.dense2 = tf.keras.layers.Dense(10,activation='sigmoid')

def call(self, x):

x = self.flatten(x)

x = self.dense1(x)

output = self.dense2(x)

return output完整代码如下

import tensorflow_datasets as tfds

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

class MyModel(tf.keras.Model):

def __init__(self):

super().__init__()

self.flatten = tf.keras.layers.Flatten(input_shape=(28,28))

self.dense1 = tf.keras.layers.Dense(128,activation='relu')

self.dense2 = tf.keras.layers.Dense(10,activation='sigmoid')

def call(self, x):

x = self.flatten(x)

x = self.dense1(x)

output = self.dense2(x)

return output

# 配置GPU模式

gpus = tf.config.list_physical_devices(device_type='GPU')

for gpu in gpus:

tf.config.experimental.set_memory_growth(device=gpu, enable=True)

# 载入数据集,利用tfds.load

# 载入名称为'fashion_mnist' , 选择 train和test进行载入,打乱图像,下载数据集,将数据集作为二元组(input,label)返回,

(fashion_train, fashion_test), fashion_info = tfds.load(name='fashion_mnist', split=['train', 'test'],

shuffle_files=True,

download=True, as_supervised=True, with_info=True)

# fig = tfds.show_examples(fashion_train,fashion_info)

# tf.cast 格式转换

def normalize_img(image, label):

return tf.cast(image, tf.float32) / 255, 0, label

# 方法 map 功能 将 map_func 映射到此数据集的元素

fashion_train = fashion_train.map(map_func=normalize_img)

# 缓存,未指定文件时会缓存到内存中

fashion_train = fashion_train.cache()

# 打乱, 对于完美的混洗,缓冲区大小需要大于或等于数据集的完整大小

fashion_train = fashion_train.shuffle(buffer_size=fashion_info.splits['train'].num_examples, seed=1)

# batch

fashion_train = fashion_train.batch(128)

# prefetch , tf.data.experimental.AUTOTUNE表示动态调整缓冲区大小

fashion_train = fashion_train.prefetch(tf.data.experimental.AUTOTUNE)

fashion_test = fashion_test.map(map_func=normalize_img)

fashion_test = fashion_test.cache()

fashion_test = fashion_test.batch(128)

fashion_test = fashion_test.prefetch(tf.data.experimental.AUTOTUNE)

#利用tf.keras.Model构建模型

model = MyModel()#实例化对象

model.compile(optimizer="Adam", loss="mse", metrics=["mae"])

model.fit(fashion_train, epochs=5)

model.summary()