How does MindSpore's model.train implement PyTorch's optimizer.zero_grad() and loss.backward()?

Problem description:

[Functional module]

Development environment: Win10

[Steps & symptoms]

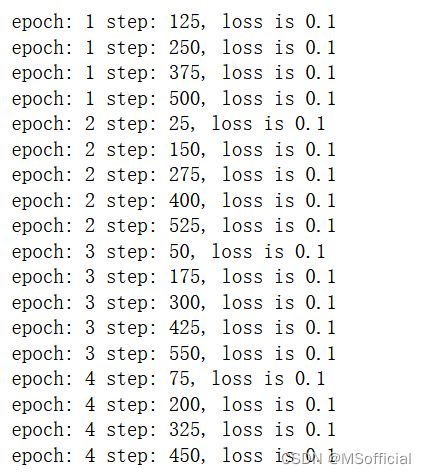

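1. When reproducing my PyTorch code in MindSpore, I train the model directly with model.train(), but the loss stays at 0.1. The only difference from my PyTorch code is that the MindSpore version has no optimizer.zero_grad(), loss.backward(), or optimizer.step().

2. I have not been able to find how to do these three things in MindSpore.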

[Screenshots]

PyTorch code:

    def train(epoch):
        model.train()
        scheduler.step()
        start_time = time.time()
        total_loss = 0
        for i, (images, labels) in enumerate(data_loader_train):
            optimizer.zero_grad()
            images = Variable(images)
            one_hot = torch.zeros(100, 10).scatter(1, labels.unsqueeze(1), 1)
            labels = Variable(one_hot)
            outputs = model(images)
            loss = cost(outputs, labels)
            total_loss += float(loss)
            loss.backward()
            optimizer.step()

    def eval(epoch, if_test):
        model.eval()
        correct = 0
        total = 0
        if if_test:
            for i, (images, labels) in enumerate(data_loader_test):
                images = Variable(images)
                labels = Variable(labels)
                outputs = model(images)
                pred = outputs.max(1)[1]
                total += labels.size(0)
                correct += (pred == labels).sum()
            acc = 100.0 * correct.item() / total
            print('Test correct: %d Accuracy: %.2f%%' % (correct, acc))
            test_scores.append(acc)
            if acc > max(test_scores):
                save_file = str(epoch) + '.pt'
                torch.save(model, os.path.join(save_path, save_file))
        else:
            for i, (images, labels) in enumerate(data_loader_train):
                images = Variable(images)
                labels = Variable(labels)
                outputs = model(images)
                pred = outputs.max(1)[1]
                total += labels.size(0)
                correct += (pred == labels).sum()
            acc = 100.0 * correct.item() / total
            print('Train correct: %d Accuracy: %.2f%%' % (correct, acc))
            train_scores.append(acc)

    test_scores = []
    train_scores = []
    save_path = './LISNN_'
    if not os.path.exists(save_path):
        os.mkdir(save_path)

    n_epoch = 10
    for epoch in range(n_epoch):
        train(epoch)
        if (epoch + 1) % 2 == 0:
            eval(epoch, if_test=True)
        if (epoch + 1) % 20 == 0:
            eval(epoch, if_test=False)
        if (epoch + 1) % 20 == 0:
            print('Best Test Accuracy in %d: %.2f%%' % (epoch + 1, max(test_scores)))
            print('Best Train Accuracy in %d: %.2f%%' % (epoch + 1, max(train_scores)))

MindSpore code:

    # Define the training function
    def train(epoch, ckpoint_cb, sink_mode):
        # LossMonitor(125) prints the training loss every 125 steps
        model.train(epoch, ds_train, callbacks=[ckpoint_cb, LossMonitor(125)], dataset_sink_mode=sink_mode)

    # Define the evaluation function
    def eval():
        acc = model.eval(ds_test, dataset_sink_mode=False)
        print("{}".format(acc))

    test_scores = []
    train_scores = []
    save_path = './LISNN_'
    if not os.path.exists(save_path):
        os.mkdir(save_path)

    n_epoch = 20
    train(n_epoch, ckpoint, False)  # call the training function
    eval()

Answer:

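1. PyTorch gives each Tensor a grad attribute and a backward method. tensor.grad is computed by tensor.backward (in essence torch.autograd.backward), and gradients are accumulated during that computation, so you normally have to zero the grad attribute manually before calling tensor.backward. MindSpore does not tie gradients directly to the Tensor, so no manual zeroing is needed.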
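The accumulation behaviour described in point 1 can be seen in a few lines of PyTorch. This is only a minimal sketch: the tensor `w` and the toy loss `sum(w * w)` are made up for illustration and are not taken from the code in the question.

```python
import torch

# A single trainable tensor; loss = sum(w^2), so d(loss)/dw = 2 * w.
w = torch.tensor([1.0, 2.0], requires_grad=True)

(w * w).sum().backward()
first = w.grad.clone()    # gradient after one backward pass

(w * w).sum().backward()  # no zeroing in between
second = w.grad.clone()   # accumulated: the new gradient is added to the old one

w.grad.zero_()            # manual reset, the role optimizer.zero_grad() plays in a loop
(w * w).sum().backward()
third = w.grad.clone()    # a single, fresh gradient again

print(first, second, third)
```

Without the reset, every backward call adds to the stored gradient, which is why the PyTorch training loop in the question calls optimizer.zero_grad() at the start of each iteration.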

2. If the loss stays at 0.1, check whether an optimizer was attached to the network before training. An optimizer is used as follows:

    from mindspore import context, Tensor, ParameterTuple
    from mindspore import nn, Model, ops
    import numpy as np
    from mindspore import dtype as mstype

    class Net(nn.Cell):
        def __init__(self):
            super(Net, self).__init__()
            self.conv = nn.Conv2d(3, 64, 3)
            self.bn = nn.BatchNorm2d(64)

        def construct(self, x):
            x = self.conv(x)
            x = self.bn(x)
            return x

    net = Net()
    loss = nn.MSELoss()
    optimizer = nn.SGD(params=net.trainable_params(), learning_rate=0.01)

    # Use the Model interface
    model = Model(net, loss_fn=loss, optimizer=optimizer, metrics={"accuracy"})

Reference: https://www.mindspore.cn/docs/migration_guide/zh-CN/r1.6/optim.html?highlight=zero_grad()