Hive的表查询操作

首先开启Hadoop集群:

cd /opt/hadoop-3.1.4/sbin

./start-dfs.sh

./start-yarn.sh

./mr-jobhistory-daemon.sh start historyserver

jps然后打开hive:

cd /usr/local/hive/conf

source /etc/profile

schematool -dbType mysql -initSchema

hive --service metastore &

hive下面依旧进行先数据库和表的建立,我先将之前的数据库和表都删了,重新进行建立,这里的删除不只是使用drop,还要在master:50070中删除。

create database taitan;

use taitan;

create table tidanic(

passengerid int,

survived int,

pclass int,

name string,

sex string,

age int,

sibsp int,

parch int,

ticket string,

fare double,

cabin string,

embarked string)

row format delimited fields terminated by ','; 必须要加上use这一句,否则数据库和表不是前后相关联的,而是并列的:

然后从本地系统的centos导入train.csv数据,加上local:

load data local inpath '/opt/train.csv' overwrite into table tidanic;然后就是删除刚才上传的文件:

dfs -rm -r /user/hive/warehouse/taitan.db/tidanic/train.csv;从hdfs系统导入数据(不要加local),首先先把数据传到hdfs系统下,再导入数据:

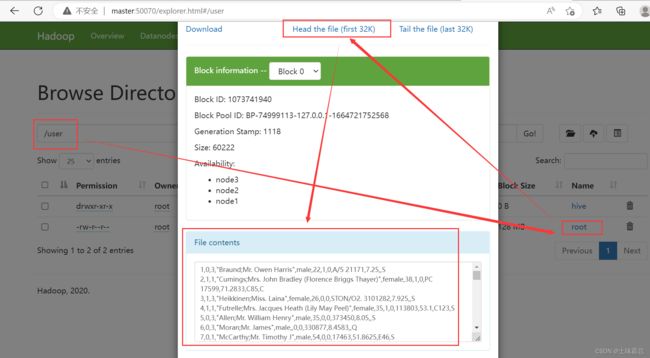

dfs -put /opt/train.csv /user/root;

load data inpath '/user/root/train.csv' overwrite into table tidanic;下面进行多表查询导入数据:

create table tidanic_save like tidanic;

create table tidanic_died like tidanic;

from tidanic

insert overwrite table tidanic_save select * where survived=1

insert overwrite table tidanic_died select * where survived=0;将数据导出到centos本地上:

insert overwrite local directory '/opt/tidanic_save' row format delimited fields terminated by ',' select * from tidanic_save;![]() 下面是一些查询任务的例子,分别统计性别与生存率的关系、客舱等级与生存率的关系、登船港口与生存率的关系:

下面是一些查询任务的例子,分别统计性别与生存率的关系、客舱等级与生存率的关系、登船港口与生存率的关系:

select sex,s_count/all_count as s_percent from(select sex,count(*) as s_count from tidanic where survived=1) a join (select count(*) as all_count from tidanic where survived=1) b on 1=1;

select pclass,s_count/all_count as s_percent from(select pclass,count(*) as s_count from tidanic where survived=1 group by pclass) a join (select count(*) as all_count from tidanic where survived=1) b on 1=1;

select embarked,s_count/all_count as s_percent from(select embarked,count(*) as s_count from tidanic where survived=1 group by embarked) a join (select count(*) as all_count from tidanic where survived=1) b on 1=1;如果报错的话,如图加入两行代码,再重新运行:

set hive.strict.checks.cartesian.product=false;

set hive.mapred.mode=nonstrict;