Enterprise Operations ---- Docker/Kubernetes Monitoring (Resource Limits, Resource Monitoring, HPA Scaling Example, Helm)

Kubernetes Monitoring

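- Kubernetes container resource limits
  - Memory limits
  - CPU limits
- Kubernetes container resource monitoring
  - Deploying metrics-server
  - Deploying the Dashboard
- HPA
- Helm
  - Installation
    - Adding a third-party chart repository to Helm
    - Deploying nfs-client-provisioner with Helm
    - Deploying metrics-server monitoring with Helm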

Kubernetes container resource limits

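Kubernetes allocates resources with two kinds of settings: requests and limits.

request (resource requirement): the minimum a node must be able to provide for the Pod to run. The scheduler places the Pod only on a node that can satisfy it.

limit (resource cap): the maximum the container may use while running. Memory usage may grow during the Pod's lifetime, and the limit is the most it can consume. A container that exceeds its memory limit is OOM-killed, while CPU usage above the limit is throttled.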

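Resource types:

CPU is measured in cores and memory in bytes.

A container that requests 0.5 CPU asks for half of one CPU. You can also use the suffix m, meaning one-thousandth of a core: 100m CPU, 100 millicores and 0.1 CPU are all the same.

Memory units:

- k, M, G, T, P, E: decimal, powers of 1000.
- Ki, Mi, Gi, Ti, Pi, Ei: binary, powers of 1024.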
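To make the units concrete, here is a small bash sketch that converts CPU quantities to millicores and memory quantities to bytes. `cpu_to_millicores` and `mem_to_bytes` are hypothetical helpers for illustration, not part of kubectl. They only handle plain integers, single decimals and the suffixes listed in the comments.

```bash
# Hypothetical helpers: convert Kubernetes-style quantities into plain integers.

# CPU: "2" -> 2000, "0.5" -> 500, "100m" -> 100 (millicores)
cpu_to_millicores() {
  local q=$1 int frac
  if [[ $q == *m ]]; then
    echo "${q%m}"                                # already in millicores
  elif [[ $q == *.* ]]; then
    int=${q%%.*}
    frac=${q#*.}
    frac=$(printf '%-3s' "$frac" | tr ' ' '0')   # right-pad to 3 digits
    frac=${frac:0:3}                             # Kubernetes allows no finer than 1m
    echo $(( ${int:-0} * 1000 + 10#$frac ))
  else
    echo $(( q * 1000 ))                         # whole cores
  fi
}

# Memory: decimal suffixes (k, M, G) use powers of 1000,
# binary suffixes (Ki, Mi, Gi) use powers of 1024.
mem_to_bytes() {
  local q=$1 num suffix
  num=${q%%[!0-9]*}      # leading digits
  suffix=${q#"$num"}     # whatever follows them
  case $suffix in
    "")  echo "$num" ;;
    k)   echo $(( num * 1000 )) ;;
    M)   echo $(( num * 1000 ** 2 )) ;;
    G)   echo $(( num * 1000 ** 3 )) ;;
    Ki)  echo $(( num * 1024 )) ;;
    Mi)  echo $(( num * 1024 ** 2 )) ;;
    Gi)  echo $(( num * 1024 ** 3 )) ;;
    *)   echo "unsupported suffix: $suffix" >&2; return 1 ;;
  esac
}
```

This arithmetic explains the memory demo that follows. `mem_to_bytes 200M` gives 200000000. That is more than `mem_to_bytes 100Mi` (104857600) but less than `mem_to_bytes 300Mi` (314572800).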

Memory limits

Edit the manifest. stress tries to allocate 200M of memory, but the limit is only 100Mi, so the Pod cannot run.

```
[root@server2 ~]# mkdir limit
[root@server2 ~]# cd limit/
[root@server2 limit]# vim pod.yaml
[root@server2 limit]# cat pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 100Mi
[root@server2 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@server2 limit]# kubectl get pod
NAME          READY   STATUS              RESTARTS   AGE
memory-demo   0/1     ContainerCreating   0          8s
mypod         1/1     Running             0          5h18m
[root@server2 limit]# kubectl get pod
NAME          READY   STATUS             RESTARTS   AGE
memory-demo   0/1     CrashLoopBackOff   2          52s
mypod         1/1     Terminating        0          5h19m
[root@server2 limit]# kubectl get pod
NAME          READY   STATUS             RESTARTS   AGE
memory-demo   0/1     CrashLoopBackOff   3          81s
mypod         0/1     Terminating        0          5h19m
```

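stress allocates 200M while the limit is 100Mi. The container is OOM-killed every time, so the Pod ends up in CrashLoopBackOff. Raise the limit to 300Mi and the Pod runs, because 200M (about 191Mi) now fits: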

```
[root@server2 limit]# vim pod.yaml
[root@server2 limit]# cat pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 300Mi
[root@server2 limit]# kubectl delete -f pod.yaml
pod "memory-demo" deleted
[root@server2 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@server2 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
memory-demo   1/1     Running   0          9s
```

CPU limits

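In the first attempt below, the Pod stays Pending. It requests 5 CPUs, and no schedulable node has that much allocatable CPU; the scheduler looks only at requests, not limits. The master node is also excluded by its taint, as the FailedScheduling event shows.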
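The scheduling decision can be sketched as a simple comparison. A node fits only if its allocatable CPU, minus the CPU already requested by Pods on it, is at least the new Pod's request. The limit plays no part. `fits_node` is a hypothetical helper, and the node sizes below are made-up examples, not values read from this cluster.

```bash
# Hypothetical helper: does a Pod's CPU request fit on a node?
# All arguments are in millicores:
#   $1 = Pod CPU request
#   $2 = node allocatable CPU
#   $3 = sum of CPU requests of Pods already on the node
fits_node() {
  local request=$1 allocatable=$2 requested=$3
  if (( request <= allocatable - requested )); then
    echo "fits"
  else
    echo "Insufficient cpu"
  fi
}

# Example: a hypothetical 2-core node with 300m already requested
fits_node 5000 2000 300   # request "5"   -> Insufficient cpu (Pod stays Pending)
fits_node 100  2000 300   # request "0.1" -> fits
```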

```
[root@server2 limit]# kubectl delete -f pod.yaml
pod "memory-demo" deleted
[root@server2 limit]# vim pod1.yaml
[root@server2 limit]# cat pod1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress
    resources:
      limits:
        cpu: "10"
      requests:
        cpu: "5"
    args:
    - -c
    - "2"
[root@server2 limit]# kubectl apply -f pod1.yaml
pod/cpu-demo created
[root@server2 limit]# kubectl get pod
NAME       READY   STATUS    RESTARTS   AGE
cpu-demo   0/1     Pending   0          9s
[root@server2 limit]# kubectl describe pod
Name:         cpu-demo
Namespace:    default
Priority:     0
Node:         <none>
Labels:       <none>
Annotations:  <none>
Status:       Pending
IP:
IPs:          <none>
Containers:
  cpu-demo:
    Image:      stress
    Port:       <none>
    Host Port:  <none>
    Args:
      -c
      2
    Limits:
      cpu:  10
    Requests:
      cpu:        5
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-kr2rc (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  kube-api-access-kr2rc:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age                 From               Message
  ----     ------            ----                ----               -------
  Warning  FailedScheduling  25s (x3 over 108s)  default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 Insufficient cpu.
```

Lower the request to 0.1 CPU (and the limit to 2). The Pod is then scheduled and runs:

```
[root@server2 limit]# vim pod1.yaml
[root@server2 limit]# cat pod1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress
    resources:
      limits:
        cpu: "2"
      requests:
        cpu: "0.1"
    args:
    - -c
    - "2"
[root@server2 limit]# kubectl delete -f pod1.yaml
pod "cpu-demo" deleted
[root@server2 limit]# kubectl apply -f pod1.yaml
pod/cpu-demo created
[root@server2 limit]# kubectl get pod
NAME       READY   STATUS    RESTARTS   AGE
cpu-demo   1/1     Running   0          12s
```

The Pod is running.

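Setting resource limits for a namespace

A LimitRange sets default requests and limits, plus minimum and maximum values, for each container in a namespace. In the example below, the nginx Pod declares no resources (its resources section is commented out), so it gets the defaults.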

```
[root@server2 limit]# cat pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    # resources:
    #   requests:
    #     memory: 50Mi
    #   limits:
    #     memory: 300Mi
[root@server2 limit]# vim limitrange.yaml
[root@server2 limit]# cat limitrange.yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
[root@server2 limit]# kubectl apply -f limitrange.yaml
limitrange/limitrange-memory created
[root@server2 limit]# kubectl describe limitranges
Name:       limitrange-memory
Namespace:  default
Type        Resource  Min    Max  Default Request  Default Limit  Max Limit/Request Ratio
----        --------  ---    ---  ---------------  -------------  -----------------------
Container   cpu       100m   1    100m             500m           -
Container   memory    100Mi  1Gi  256Mi            512Mi          -
```
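The bash sketch below models, for CPU, what this LimitRange does to a container at admission time. It is a simplified model that assumes the values of limitrange-memory above, in millicores. `limitrange_cpu` is a hypothetical helper. The real LimitRanger admission plugin also handles memory, limit/request ratios and other cases that are not shown here.

```bash
# Hypothetical, simplified model of LimitRange defaulting and validation for CPU.
# Arguments are millicores; pass "" for a value the Pod spec leaves unset.
limitrange_cpu() {
  local req=$1 lim=$2
  local def_req=100 def_lim=500 min=100 max=1000   # defaultRequest, default, min, max

  # A limit without a request: the request is set equal to the limit.
  if [[ -z $req && -n $lim ]]; then req=$lim; fi
  # Otherwise fall back to the LimitRange defaults.
  if [[ -z $req ]]; then req=$def_req; fi
  if [[ -z $lim ]]; then lim=$def_lim; fi

  if (( req > lim )); then echo "rejected: request ${req}m > limit ${lim}m"; return 1; fi
  if (( req < min )); then echo "rejected: request ${req}m < min ${min}m"; return 1; fi
  if (( lim > max )); then echo "rejected: limit ${lim}m > max ${max}m"; return 1; fi
  echo "request=${req}m limit=${lim}m"
}
```

The first case, `limitrange_cpu "" ""`, corresponds to the nginx Pod above: it declares no resources and ends up with a 100m request and a 500m limit. A request of 700m with no limit is rejected, because the default limit (500m) would then be smaller than the request.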

```
[root@server2 limit]# kubectl delete -f pod1.yaml
pod "cpu-demo" deleted
[root@server2 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@server2 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
memory-demo   1/1     Running   0          4s
```

The Pod runs, with the LimitRange defaults applied to its container.

Next, add a ResourceQuota to the same file. It caps the total requests and limits of all Pods in the namespace:

```
[root@server2 limit]# vim limitrange.yaml
[root@server2 limit]# cat limitrange.yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
[root@server2 limit]# kubectl apply -f limitrange.yaml
limitrange/limitrange-memory configured
resourcequota/mem-cpu-demo created
```

If the LimitRange is then deleted, the same Pod (which declares no resources) is rejected:

```
[root@server2 limit]# kubectl delete limitranges limitrange-memory
limitrange "limitrange-memory" deleted
[root@server2 limit]# kubectl get limitranges
No resources found in default namespace.
[root@server2 limit]# kubectl delete -f pod.yaml
pod "memory-demo" deleted
[root@server2 limit]# kubectl apply -f pod.yaml
Error from server (Forbidden): error when creating "pod.yaml": pods "memory-demo" is forbidden: failed quota: mem-cpu-demo: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
```

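The error arises because a ResourceQuota covers cpu and memory, so every new Pod must declare requests and limits for both. The LimitRange had been filling in those values as defaults; without it, the Pod has none.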
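The quota check has two parts: it rejects a Pod that leaves a quota-covered resource unset, and it rejects a Pod that would push the namespace total past a hard limit. The bash sketch below models both. `quota_check` is a hypothetical helper that works in a single unit (MiB here). It mirrors the idea, not the ResourceQuota admission code.

```bash
# Hypothetical model of a ResourceQuota check for one resource.
#   $1 = amount already used in the namespace
#   $2 = amount requested by the new Pod ("" if the Pod does not specify it)
#   $3 = hard limit from the ResourceQuota
quota_check() {
  local used=$1 new=$2 hard=$3
  if [[ -z $new ]]; then
    echo "forbidden: must specify"
    return 1
  fi
  if (( used + new > hard )); then
    echo "forbidden: exceeded quota"
    return 1
  fi
  echo "admitted"
}

# How many Pods with the LimitRange default request (256Mi) fit under
# requests.memory: 1Gi (1024Mi)?
used=0 count=0
while quota_check "$used" 256 1024 > /dev/null; do
  used=$(( used + 256 ))
  count=$(( count + 1 ))
done
echo "$count Pods admitted, ${used}Mi requested"   # 4 Pods admitted, 1024Mi requested
```

The same applies to limits: the default limit of 512Mi exhausts limits.memory: 2Gi after four Pods. With both objects in place, a fifth default-sized Pod would therefore be rejected.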

Kubernetes container resource monitoring

Deploying metrics-server

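Metrics-Server aggregates the cluster's core monitoring data and replaces the earlier Heapster.

Container metrics come mainly from cAdvisor, which is built into the kubelet. With Metrics-Server in place, users can read these metrics through the standard Kubernetes API.

The Metrics API returns only current measurements; it does not store history.

The Metrics API URI is /apis/metrics.k8s.io/, and the API is maintained in k8s.io/metrics.

The API is available only once metrics-server is deployed. metrics-server collects its data by calling the kubelet Summary API.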
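Raw Metrics API responses typically report CPU in nanocores (suffix `n`) and memory in KiB (suffix `Ki`). An example is the output of `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. `kubectl top`, by contrast, shows millicores and MiB. The bash sketch below does that conversion. `usage_cpu_m` and `usage_mem_mi` are hypothetical helpers, and the sample values in the comments are illustrative.

```bash
# Hypothetical helpers converting raw Metrics API usage values
# into the units displayed by "kubectl top" (both rounded down).

# "193456789n" (nanocores) -> 193 (millicores)
usage_cpu_m() {
  local v=$1
  case $v in
    *n) echo $(( ${v%n} / 1000000 )) ;;   # nanocores
    *u) echo $(( ${v%u} / 1000 )) ;;      # microcores
    *m) echo "${v%m}" ;;                  # already millicores
    *)  echo $(( v * 1000 )) ;;           # whole cores
  esac
}

# "1170432Ki" -> 1143 (MiB)
usage_mem_mi() {
  local v=$1
  case $v in
    *Ki) echo $(( ${v%Ki} / 1024 )) ;;
    *Mi) echo "${v%Mi}" ;;
    *)   echo $(( v / 1024 / 1024 )) ;;   # plain bytes
  esac
}
```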

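```
[root@server2 ~]# mkdir metrics-server
[root@server2 ~]# cd metrics-server/
[root@server2 metrics-server]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
```

In components.yaml, edit the following settings of the metrics-server Deployment:

```yaml
# components.yaml (excerpts)
- --secure-port=4443            # container argument
image: metrics-server:v0.5.0    # container image
- containerPort: 4443           # must match --secure-port
```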

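Enable TLS bootstrapping of kubelet serving certificates. metrics-server connects to each kubelet over HTTPS and verifies its certificate. With serverTLSBootstrap: true, the kubelet requests its serving certificate from the cluster CA through a CertificateSigningRequest (CSR) instead of using a self-signed one. These CSRs are not approved automatically.

On server2, server3 and server4, append serverTLSBootstrap: true to the end of /var/lib/kubelet/config.yaml. Then restart kubelet and approve the pending CSRs: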

```
[root@server2 metrics-server]# vim /var/lib/kubelet/config.yaml
[root@server3 ~]# vim /var/lib/kubelet/config.yaml
[root@server4 ~]# vim /var/lib/kubelet/config.yaml
serverTLSBootstrap: true        # line appended to each config.yaml
[root@server2 metrics-server]# systemctl restart kubelet
[root@server3 ~]# systemctl restart kubelet
[root@server4 ~]# systemctl restart kubelet
[root@server2 metrics-server]# kubectl get csr
NAME        AGE   SIGNERNAME                      REQUESTOR             CONDITION
csr-jtps7   48s   kubernetes.io/kubelet-serving   system:node:server3   Pending
csr-pp7hd   45s   kubernetes.io/kubelet-serving   system:node:server4   Pending
csr-z82vb   75s   kubernetes.io/kubelet-serving   system:node:server2   Pending
[root@server2 metrics-server]# kubectl certificate approve csr-jtps7
certificatesigningrequest.certificates.k8s.io/csr-jtps7 approved
[root@server2 metrics-server]# kubectl certificate approve csr-pp7hd
certificatesigningrequest.certificates.k8s.io/csr-pp7hd approved
[root@server2 metrics-server]# kubectl certificate approve csr-z82vb
certificatesigningrequest.certificates.k8s.io/csr-z82vb approved
```
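Approving each CSR by name becomes tedious on larger clusters. The bash sketch below extracts the Pending names from `kubectl get csr` output so they can be piped to `kubectl certificate approve`. `pending_csrs` is a hypothetical helper, and it assumes CONDITION is the last column, as in the output above.

```bash
# Hypothetical helper: read "kubectl get csr" output on stdin and print the
# names of requests whose CONDITION column is Pending (header line skipped).
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Possible usage: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`. Review the list first, because this approves every pending request regardless of who submitted it.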

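There is no internal DNS server, so metrics-server cannot resolve the node names. Work around this by editing the coredns ConfigMap and adding each node's hostname to a hosts block: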

```
[root@server2 metrics-server]# kubectl -n kube-system edit cm coredns
        hosts {
           172.25.12.2 server2
           172.25.12.3 server3
           172.25.12.4 server4
           fallthrough
        }
configmap/coredns edited
```

Apply the manifest:

```
[root@server2 metrics-server]# kubectl apply -f components.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
[root@server2 metrics-server]# kubectl -n kube-system get pod
NAME                              READY   STATUS    RESTARTS   AGE
metrics-server-86d6b8bbcc-75g7n   1/1     Running   0          116s
[root@server2 metrics-server]# kubectl -n kube-system describe svc metrics-server
Name:              metrics-server
Namespace:         kube-system
Labels:            k8s-app=metrics-server
Annotations:       <none>
Selector:          k8s-app=metrics-server
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.106.131.25
IPs:               10.106.131.25
Port:              https  443/TCP
TargetPort:        https/TCP
Endpoints:         10.244.5.84:4443
Session Affinity:  None
Events:            <none>
```

The deployment succeeded, and kubectl top now returns node metrics:

```
[root@server2 metrics-server]# kubectl top node
W0803 21:00:00.624096   16123 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME      CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
server2   193m         9%     1143Mi          60%
server3   57m          5%     539Mi           60%
server4   44m          4%     393Mi           44%
```

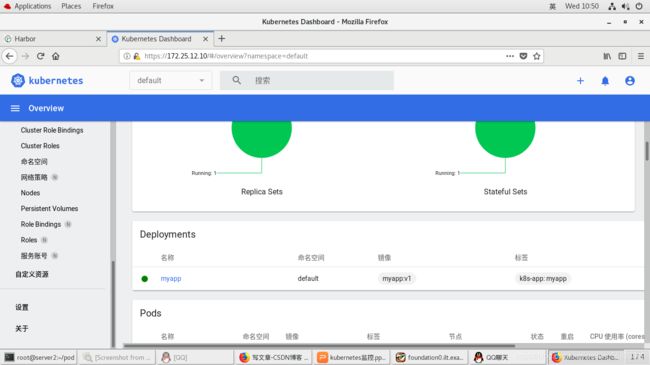

Deploying the Dashboard

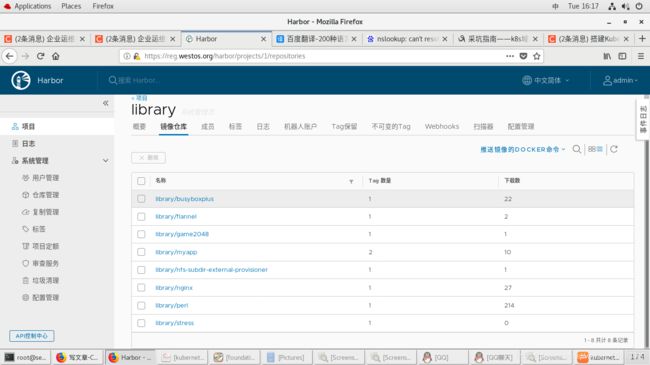

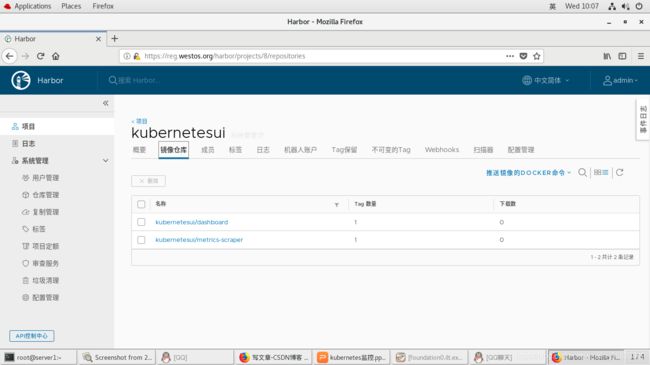

Prepare the images on server1 and push them to the local registry reg.westos.org:

```
[root@server1 ~]# docker pull kubernetesui/dashboard:v2.3.1
v2.3.1: Pulling from kubernetesui/dashboard
b82bd84ec244: Pull complete
21c9e94e8195: Pull complete
Digest: sha256:ec27f462cf1946220f5a9ace416a84a57c18f98c777876a8054405d1428cc92e
Status: Downloaded newer image for kubernetesui/dashboard:v2.3.1
docker.io/kubernetesui/dashboard:v2.3.1
[root@server1 ~]# docker pull kubernetesui/metrics-scraper
Using default tag: latest
latest: Pulling from kubernetesui/metrics-scraper
c3666b2c63e0: Pull complete
be16e025141c: Pull complete
Digest: sha256:5c37adf540819136b22e96148cf361403cff8ce93bacdbe9bd3dbe304da0cbac
Status: Downloaded newer image for kubernetesui/metrics-scraper:latest
docker.io/kubernetesui/metrics-scraper:latest
[root@server1 ~]# docker tag kubernetesui/dashboard:v2.3.1 reg.westos.org/kubernetesui/dashboard:v2.3.1
[root@server1 ~]# docker push reg.westos.org/kubernetesui/dashboard:v2.3.1
The push refers to repository [reg.westos.org/kubernetesui/dashboard]
c94f86b1c637: Pushed
8ca79a390046: Pushed
v2.3.1: digest: sha256:e5848489963be532ec39d454ce509f2300ed8d3470bdfb8419be5d3a982bb09a size: 736
[root@server1 ~]# docker tag kubernetesui/metrics-scraper:latest reg.westos.org/kubernetesui/metrics-scraper:latest
[root@server1 ~]# docker push reg.westos.org/kubernetesui/metrics-scraper:latest
The push refers to repository [reg.westos.org/kubernetesui/metrics-scraper]
1821304d60a6: Pushed
7d5c8ff74d44: Pushed
latest: digest: sha256:6842867c0c3cd24d17d9fe4359dab20a58e24bf6a6298189c2af4c5e3c0fa8b6 size: 736
```

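```
[root@server2 ~]# mkdir dashboard
[root@server2 ~]# cd dashboard/
[root@server2 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc5/aio/deploy/recommended.yaml
[root@server2 dashboard]# ls
recommended.yaml
[root@server2 dashboard]# vim recommended.yaml
```

In recommended.yaml, change the two image lines to the images pushed above:

```yaml
# recommended.yaml (excerpts)
image: kubernetesui/dashboard:v2.3.1          # kubernetes-dashboard container
image: kubernetesui/metrics-scraper:latest    # dashboard-metrics-scraper container
```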

Apply the manifest (kubectl apply -f recommended.yaml) and check the new namespace:

```
[root@server2 dashboard]# kubectl get ns
NAME                   STATUS   AGE
kubernetes-dashboard   Active   16s
[root@server2 dashboard]# kubectl -n kubernetes-dashboard get all
NAME                                            READY   STATUS         RESTARTS   AGE
pod/dashboard-metrics-scraper-997775586-jzrqm   0/1     ErrImagePull   0          2m11s
pod/kubernetes-dashboard-74f786f696-j7l5m       1/1     Running        0          2m11s

NAME                                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/dashboard-metrics-scraper   ClusterIP   10.98.78.156   <none>        8000/TCP   2m11s
service/kubernetes-dashboard        ClusterIP   10.96.235.7    <none>        443/TCP    2m12s

NAME                                        READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/dashboard-metrics-scraper   0/1     1            0           2m11s
deployment.apps/kubernetes-dashboard        1/1     1            1           2m11s

NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/dashboard-metrics-scraper-997775586   1         1         0       2m11s
replicaset.apps/kubernetes-dashboard-74f786f696       1         1         1       2m11s
```

Edit the kubernetes-dashboard Service and change its type to LoadBalancer so that it receives an external IP:

```
[root@server2 dashboard]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard
  type: LoadBalancer          # changed from ClusterIP in the editor
service/kubernetes-dashboard edited
[root@server2 dashboard]# kubectl -n kubernetes-dashboard get svc
NAME                        TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP      10.98.78.156   <none>         8000/TCP        4m39s
kubernetes-dashboard        LoadBalancer   10.96.235.7    172.25.12.10   443:30640/TCP   4m40s
```

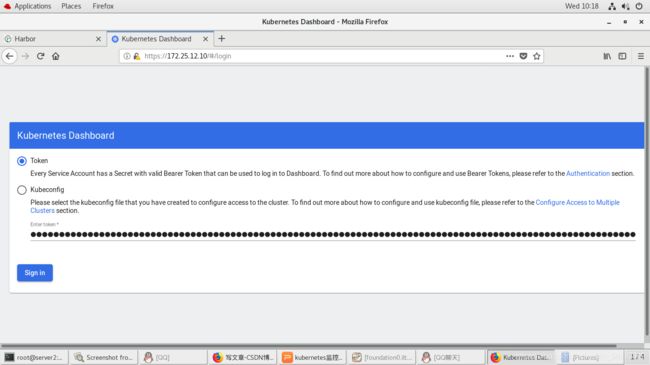

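Logging in to the Dashboard requires authentication with the token of the kubernetes-dashboard service account.

Get the token from the service account's token Secret: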

```
[root@server2 dashboard]# kubectl -n kubernetes-dashboard get secrets
NAME                               TYPE                                  DATA   AGE
default-token-s7bqf                kubernetes.io/service-account-token   3      6m37s
kubernetes-dashboard-certs         Opaque                                0      6m37s
kubernetes-dashboard-csrf          Opaque                                1      6m37s
kubernetes-dashboard-key-holder    Opaque                                2      6m37s
kubernetes-dashboard-token-9k9z6   kubernetes.io/service-account-token   3      6m37s
[root@server2 dashboard]# kubectl describe secrets kubernetes-dashboard-token-9k9z6 -n kubernetes-dashboard
Name:         kubernetes-dashboard-token-9k9z6
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
              kubernetes.io/service-account.uid: a790d5a7-13c8-483d-9698-79594d030ece

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1066 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6Il9mM2s4bzllWjVIX1Azd0lMWVp3aWJGRGZyWVlOajRuLXp1MDd4am9WVnMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05azl6NiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImE3OTBkNWE3LTEzYzgtNDgzZC05Njk4LTc5NTk0ZDAzMGVjZSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.KxqjDXaNSWVRO092ZP8m2m2xN1PqUqeNMeVu_kL2vllOw7D5YeIs6ALh0fV7HzM49guMz5oHSf5FBMP73AOzjxiH1FnDHxPclYqBzOoa9hlLy1GORyEUDxOFZWfsONOc423ZmsUKRCAill63iFETSbh1wBFQgVi1bKvNIbEMp9B--oEKDBFiBtmAyt7TYUwiXke780MhzD34sGAqVrc7Q-6Kg5t2_gKcFx9-WILMS0kHiVbXHiFjnGf3KCSsVNKWbgazJIrmAdXDBYjr1ZPuxlxo-597JFec-Z6htBvGi8HM5XIK8AhINOJ-SceUNuj7pdPmWfvTch-e94tiF3o7NQ
```
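The token is a JWT with three dot-separated parts. The middle part is base64url-encoded JSON that names the service account the token belongs to. The bash sketch below decodes it for inspection. `jwt_payload` is a hypothetical helper, and the sample token used to check it is made up.

```bash
# Hypothetical helper: print the decoded payload (middle segment) of a JWT.
jwt_payload() {
  local p
  # base64url uses '-' and '_' where standard base64 uses '+' and '/'
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the '=' padding that base64url strips
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

Run `jwt_payload` on the token above. The output should be JSON whose kubernetes.io/serviceaccount/service-account.name claim is kubernetes-dashboard. To fetch the token without reading describe output, `kubectl -n kubernetes-dashboard get secret kubernetes-dashboard-token-9k9z6 -o jsonpath='{.data.token}' | base64 -d` prints it directly, because Secret data is stored base64-encoded.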

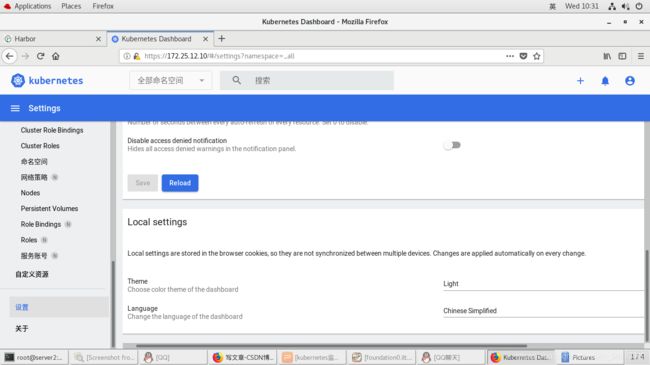

Log in to the Dashboard with this token:

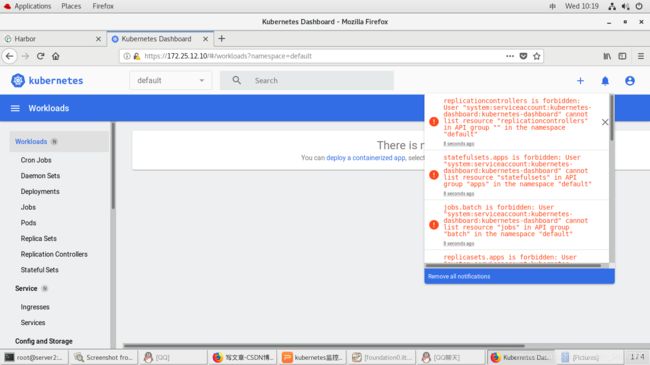

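By default, the kubernetes-dashboard service account has no permissions on cluster resources, so the Dashboard shows permission errors. Grant it access by binding it to the cluster-admin ClusterRole: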

```
[root@server2 dashboard]# vim rbac.yaml
[root@server2 dashboard]# cat rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
[root@server2 dashboard]# kubectl apply -f rbac.yaml
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created
```

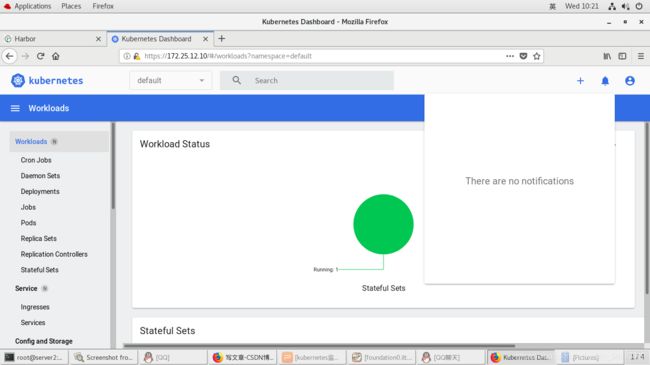

The errors are gone:

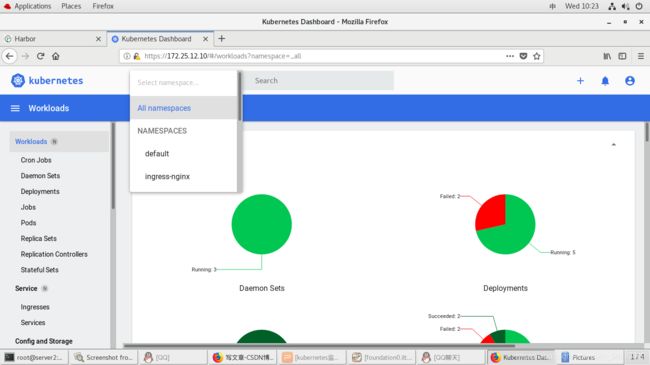

Select "All namespaces" to see resources in every namespace:

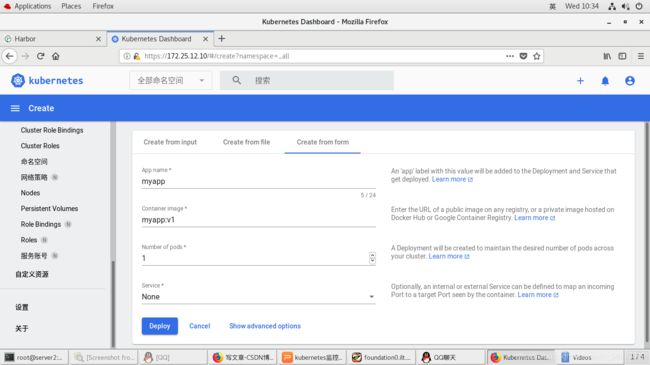

Click the + button to create resources.

Test:

Create an application (myapp) and confirm that its Pod is running:

```
[root@server2 dashboard]# kubectl get pod
NAME                    READY   STATUS    RESTARTS   AGE
myapp-7b679f4c6-znlf9   1/1     Running   0          82s
```

HPA

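How HPA scales:

- Collect the recent CPU utilization of all Pods controlled by the HPA.
- Compare it with the target CPU utilization recorded in the scaling condition.
- Adjust the number of replicas, always staying within the configured minimum and maximum.
- Repeat this check periodically: every 15 seconds by default (kube-controller-manager flag --horizontal-pod-autoscaler-sync-period); earlier releases used 30 seconds.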

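CPU utilization is computed as CPU usage (the average over roughly the last minute, available directly from metrics-server) divided by the CPU request (the CPU requested when the container was created). The result is the share of its requested CPU that each Pod is using on average. For example, a Pod with a 200m request that uses 100m is at 50%.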
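The bash sketch below computes the per-Pod utilization the HPA compares against its target. `cpu_utilization` is a hypothetical helper. The controller itself works with the Pods' summed usage and summed requests, which gives the same result when all Pods have the same request, as here.

```bash
# Hypothetical helper: CPU utilization (%) of one Pod, as the HPA defines it:
# current usage divided by the container's CPU request (rounded down).
#   $1 = usage in millicores (e.g. from "kubectl top pod", without the "m")
#   $2 = CPU request in millicores
cpu_utilization() {
  local usage=$1 request=$2
  echo $(( usage * 100 / request ))
}
```

The php-apache Pods in the walkthrough request 200m. Their first readings under load were 194m, 192m and 196m, which `cpu_utilization` turns into 97, 96 and 98 (%).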

HPA scaling algorithm:

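Formula: `TargetNumOfPods = ceil(sum(CurrentPodsCPUUtilization) / Target)`. This is equivalent to the form used in the Kubernetes documentation, `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`.

`ceil()` rounds up to the smallest integer greater than or equal to its argument.

Scaling is also damped over time. Older releases waited 3 minutes after a scale-up before scaling up again, and 5 minutes before a scale-down. Since Kubernetes 1.12, scale-ups are applied as soon as they are computed. Scale-downs use a 5-minute stabilization window (--horizontal-pod-autoscaler-downscale-stabilization), which autoscaling/v2beta2 can tune per HPA through spec.behavior.

When the current CPU utilization is close to the target, the HPA neither scales up nor down. Scaling is triggered only when:

`avg(CurrentPodsConsumption) / Target > 1.1` or `< 0.9` (the default tolerance of 0.1)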
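The bash sketch below combines the formula, the tolerance rule and the min/max bounds. `hpa_desired_replicas` is a hypothetical helper that takes per-Pod utilizations in percent. It does not model what the real controller also handles: missing metrics, not-ready Pods and the stabilization window.

```bash
# Hypothetical helper: desired replica count for a CPU-utilization HPA.
#   $1 = target utilization (%), $2 = minReplicas, $3 = maxReplicas
#   $4... = current CPU utilization (%) of each Pod
hpa_desired_replicas() {
  local target=$1 min=$2 max=$3
  shift 3
  local n=$# sum=0 u desired
  for u in "$@"; do
    sum=$(( sum + u ))
  done

  # Tolerance: if avg/target lies within [0.9, 1.1], keep the current count.
  # avg = sum / n, so compare sum * 10 with target * 9 * n and target * 11 * n.
  if (( sum * 10 >= target * 9 * n && sum * 10 <= target * 11 * n )); then
    desired=$n
  else
    # TargetNumOfPods = ceil(sum / target), using integer ceiling division
    desired=$(( (sum + target - 1) / target ))
  fi

  # Never go below minReplicas or above maxReplicas.
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

The walkthrough below provides test values. With utilizations of 97, 96 and 98% against a 50% target, the helper returns 6, the replica count the HPA reached. Six Pods at 51% stay at 6, because 51/50 is inside the tolerance. Six idle Pods fall back to minReplicas (1).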

Upload the image (on server1):

```
[root@server1 ~]# ls
1                    game2048.tar                           perl.tar
auth                 harbor-offline-installer-v1.10.1.tgz   registry2.tar
busybox.tar          hpa-example.tar                        rhel7.tar
calico-v3.19.1.tar   ingress-nginx-v0.48.1.tar              server5
certs                nfs-client-provisioner-v4.0.0.tar      server6
convoy               nfs-client-provisioner.yaml            stress.tar
convoy.tar.gz        nginx.tar
[root@server1 ~]# docker load -i hpa-example.tar
65f3b0435c42: Loading layer  131MB/131MB
5f70bf18a086: Loading layer  1.024kB/1.024kB
9d98bb4454a2: Loading layer  19.48MB/19.48MB
5e383449eb00: Loading layer  180.4MB/180.4MB
e2aa46f0ee7d: Loading layer  3.584kB/3.584kB
d04b57684734: Loading layer  7.853MB/7.853MB
f199d4d9572d: Loading layer  7.68kB/7.68kB
47f095836e2e: Loading layer  9.728kB/9.728kB
8e20d4eed21b: Loading layer  14.34kB/14.34kB
25f424a0d682: Loading layer  4.096kB/4.096kB
e1c68f832b01: Loading layer  22.53kB/22.53kB
3e2e187efcf8: Loading layer  166.7MB/166.7MB
b94d2422c40f: Loading layer  7.168kB/7.168kB
8418c7d5e2bf: Loading layer  3.584kB/3.584kB
74fb9c58ae65: Loading layer  3.584kB/3.584kB
be5062df19f5: Loading layer  3.584kB/3.584kB
Loaded image: mirrorgooglecontainers/hpa-example:latest
[root@server1 ~]# docker tag mirrorgooglecontainers/hpa-example:latest reg.westos.org/library/hpa-example:latest
[root@server1 ~]# docker push reg.westos.org/library/hpa-example:latest
The push refers to repository [reg.westos.org/library/hpa-example]
be5062df19f5: Pushed
74fb9c58ae65: Pushed
5f70bf18a086: Mounted from library/stress
8418c7d5e2bf: Pushed
b94d2422c40f: Pushed
3e2e187efcf8: Pushed
e1c68f832b01: Pushed
25f424a0d682: Pushed
8e20d4eed21b: Pushed
47f095836e2e: Pushed
f199d4d9572d: Pushed
d04b57684734: Pushed
e2aa46f0ee7d: Pushed
5e383449eb00: Pushed
9d98bb4454a2: Pushed
65f3b0435c42: Pushed
latest: digest: sha256:93c2a5cc37c3088c06f020fa65c05206970b32b49f10406756426ba7b1e54c87 size: 5302
```

Take the deployment manifest from the official walkthrough:

https://kubernetes.io/zh/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

```
[root@server2 ~]# mkdir hpa
[root@server2 ~]# cd hpa/
[root@server2 hpa]# vim deploy.yaml
[root@server2 hpa]# cat deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache
[root@server2 hpa]# kubectl apply -f deploy.yaml
deployment.apps/php-apache created
service/php-apache created
[root@server2 hpa]# kubectl get pod
NAME                          READY   STATUS    RESTARTS   AGE
myapp-7b679f4c6-znlf9         1/1     Running   0          58m
php-apache-6cc67f7957-lm29t   1/1     Running   0          47s
[root@server2 hpa]# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP   10d
php-apache   ClusterIP   10.99.36.15   <none>        80/TCP    83s
[root@server2 hpa]# kubectl describe svc php-apache
Name:              php-apache
Namespace:         default
Labels:            run=php-apache
Annotations:       <none>
Selector:          run=php-apache
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.99.36.15
IPs:               10.99.36.15
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.5.92:80
Session Affinity:  None
Events:            <none>
[root@server2 hpa]# curl 10.99.36.15
OK!
```

Create the HPA. It keeps the average CPU utilization at 50% of the request, with between 1 and 10 replicas:

```
[root@server2 hpa]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10
horizontalpodautoscaler.autoscaling/php-apache autoscaled
[root@server2 hpa]# kubectl get deployments.apps
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
php-apache   1/1     1            1           4m4s
[root@server2 hpa]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%    1         10        1          50s
[root@server2 hpa]# kubectl top pod
W0803 23:52:59.128673    6786 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
php-apache-6cc67f7957-lm29t   1m           13Mi
```

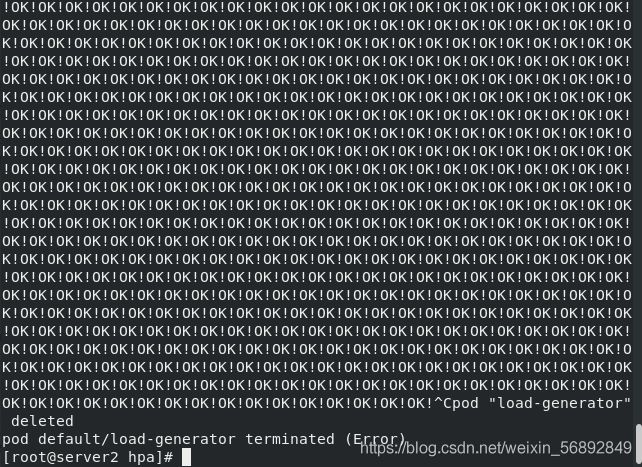

Generate load with a busybox Pod that requests the service in a loop:

```
[root@server2 hpa]# kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"
```

Open another shell and watch the replicas grow:

```
[kiosk@foundation12 ~]$ ssh root@172.25.12.2
root@172.25.12.2's password:
Last login: Tue Aug 3 06:38:11 2021 from foundation12.ilt.example.com
[root@server2 ~]# kubectl get pod
NAME                          READY   STATUS              RESTARTS   AGE
load-generator                1/1     Running             0          82s
php-apache-6cc67f7957-67729   1/1     Running             0          44s
php-apache-6cc67f7957-lm29t   1/1     Running             0          8m6s
php-apache-6cc67f7957-ph7qm   1/1     Running             0          44s
php-apache-6cc67f7957-wjltv   0/1     ContainerCreating   0          44s
php-apache-6cc67f7957-zgkx7   0/1     ContainerCreating   0          27s
[root@server2 ~]# kubectl top pod
W0803 23:56:56.338360    9861 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
load-generator                8m           0Mi
php-apache-6cc67f7957-67729   194m         13Mi
php-apache-6cc67f7957-lm29t   192m         9Mi
php-apache-6cc67f7957-ph7qm   196m         11Mi
[root@server2 ~]# kubectl top pod
W0803 23:57:10.888933    9991 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
load-generator                9m           0Mi
php-apache-6cc67f7957-67729   155m         13Mi
php-apache-6cc67f7957-lm29t   153m         9Mi
php-apache-6cc67f7957-ph7qm   122m         11Mi
php-apache-6cc67f7957-wjltv   110m         16Mi
php-apache-6cc67f7957-zgkx7   114m         12Mi
[root@server2 ~]# kubectl get hpa/
error: arguments in resource/name form must have a single resource and name
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   51%/50%   1         10        6          6m20s
[root@server2 ~]# kubectl top pod
W0803 23:58:18.885957   10751 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
load-generator                9m           0Mi
php-apache-6cc67f7957-67729   103m         13Mi
php-apache-6cc67f7957-8mnw4   101m         12Mi
php-apache-6cc67f7957-lm29t   101m         9Mi
php-apache-6cc67f7957-ph7qm   100m         11Mi
php-apache-6cc67f7957-wjltv   84m          16Mi
php-apache-6cc67f7957-zgkx7   110m         11Mi
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   50%/50%   1         10        6          6m52s
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   51%/50%   1         10        6          7m16s
```

Next, stop the load generator with Ctrl+C in the first shell; --rm then deletes its Pod. CPU usage falls to 1m per Pod. The replica count stays at 6 for a while because of the downscale stabilization window (5 minutes by default), and later returns to 1:

```
[root@server2 ~]# kubectl top pod
W0804 00:03:44.801948   14556 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
php-apache-6cc67f7957-67729   1m           10Mi
php-apache-6cc67f7957-8mnw4   1m           11Mi
php-apache-6cc67f7957-lm29t   1m           9Mi
php-apache-6cc67f7957-ph7qm   1m           9Mi
php-apache-6cc67f7957-wjltv   1m           15Mi
php-apache-6cc67f7957-zgkx7   1m           11Mi
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%    1         10        6          11m
[root@server2 ~]# kubectl top pod --use-protocol-buffers
NAME                          CPU(cores)   MEMORY(bytes)
php-apache-6cc67f7957-lm29t   1m           8Mi
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%    1         10        1          56m
```

Next, use autoscaling/v2beta2 (hpa-v2.yaml) to scale on both CPU and memory:

```
[root@server2 ~]# vim hpa-v2.yaml
[root@server2 ~]# cat hpa-v2.yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        averageUtilization: 60
        type: Utilization
  - type: Resource
    resource:
      name: memory
      target:
        averageValue: 50Mi
        type: AverageValue
[root@server2 ~]# kubectl apply -f hpa-v2.yaml
Warning: resource horizontalpodautoscalers/php-apache is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
horizontalpodautoscaler.autoscaling/php-apache configured
[root@server2 ~]# kubectl get hpa
NAME         REFERENCE               TARGETS                MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   9375744/50Mi, 0%/60%   1         10        1          64m
```
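An HPA with several metrics computes a replica proposal for each metric and uses the largest one. The bash sketch below shows that combination. `desired_for_metric` and `hpa_v2_desired` are hypothetical helpers; they ignore the tolerance and the stabilization window.

```bash
# Hypothetical sketch of how an autoscaling/v2beta2 HPA combines several metrics.

# Desired replicas for one metric: ceil(currentReplicas * current / target),
# with integer ceiling division. current and target must use the same unit
# (bytes for memory AverageValue, percent for CPU Utilization).
desired_for_metric() {
  local replicas=$1 current=$2 target=$3
  echo $(( (replicas * current + target - 1) / target ))
}

# The HPA takes the largest per-metric proposal, clamped to [min, max].
#   $1 = minReplicas, $2 = maxReplicas, $3... = per-metric proposals
hpa_v2_desired() {
  local min=$1 max=$2 best=0 d
  shift 2
  for d in "$@"; do
    if (( d > best )); then best=$d; fi
  done
  if (( best < min )); then best=$min; fi
  if (( best > max )); then best=$max; fi
  echo "$best"
}

# Values from the kubectl get hpa output above: 1 replica,
# memory 9375744 bytes vs 50Mi (52428800 bytes), CPU 0% vs 60%.
mem=$(desired_for_metric 1 9375744 52428800)
cpu=$(desired_for_metric 1 0 60)
hpa_v2_desired 1 10 "$mem" "$cpu"   # prints 1
```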

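The TARGETS column now shows both metrics. Memory appears as a raw byte count (9375744, about 8.9Mi) against the 50Mi target, and CPU at 0% against 60%. The warning from kubectl apply only means that the HPA was first created imperatively with kubectl autoscale; kubectl adds the missing annotation automatically.

```
[root@server2 ~]# kubectl top pod
W0804 00:56:42.700243   19260 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                          CPU(cores)   MEMORY(bytes)
php-apache-6cc67f7957-lm29t   1m           8Mi
[root@server2 ~]# kubectl top pod --use-protocol-buffers flag     # "flag" is taken as a Pod name
Error from server (NotFound): pods "flag" not found
[root@server2 ~]# kubectl top pod --use-protocol-buffers
NAME                          CPU(cores)   MEMORY(bytes)
php-apache-6cc67f7957-lm29t   1m           8Mi
```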

Helm

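Helm is the package manager for Kubernetes applications. It manages Charts, much as yum manages packages on Linux.

A Helm Chart is a set of YAML files that packages a native Kubernetes application. You can customize some of the application's metadata at deploy time, which makes the application easier to distribute.

For application publishers, Helm packages applications, manages their dependencies and versions, and publishes them to chart repositories.

For users, Helm removes the need to write complex deployment manifests. Applications can be searched for, installed, upgraded, rolled back and uninstalled on Kubernetes in a simple way.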

Installation

This walkthrough installs Helm v3.4.1 from the release tarball. Official documentation: https://helm.sh/docs/intro/

```
[root@server2 ~]# ls
auth                             limit
calico                           metallb
calico-v3.19.1.tar               metallb-v0.10.2.tar
certs                            metrics-server
configmap                        myapp.tar
dashboard                        nfs-client
helm-v3.4.1-linux-amd64.tar.gz   nfs-client-provisioner.yaml
hpa                              pod
hpa-v2.yaml                      psp
ingress-nginx                    roles
ingress-nginx-v0.48.1.tar        statefulset
k8s-1.21.3.tar.gz                volumes
kube-flannel.yml
[root@server2 ~]# mkdir helm
[root@server2 ~]# cd helm/
[root@server2 helm]# cp /root/helm-v3.4.1-linux-amd64.tar.gz .
[root@server2 helm]# ls
helm-v3.4.1-linux-amd64.tar.gz
[root@server2 helm]# tar zxf helm-v3.4.1-linux-amd64.tar.gz
[root@server2 helm]# ls
helm-v3.4.1-linux-amd64.tar.gz  linux-amd64
[root@server2 helm]# cd linux-amd64/
[root@server2 linux-amd64]# ls
helm  LICENSE  README.md
[root@server2 linux-amd64]# cp helm /usr/local/bin
```

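Enable command completion for helm. The completion script is loaded by new shells, or after source ~/.bashrc. In the current shell, as below, pressing Tab after helm still lists files: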

```
[root@server2 ~]# echo "source <(helm completion bash)" >> ~/.bashrc
[root@server2 ~]# helm
auth                             .kube/
.bash_history                    kube-flannel.yml
.bash_logout                     limit/
.bash_profile                    metallb/
.bashrc                          metallb-v0.10.2.tar
calico/                          metrics-server/
calico-v3.19.1.tar               myapp.tar
certs/                           nfs-client/
configmap/                       nfs-client-provisioner.yaml
.cshrc                           .pki/
dashboard/                       pod/
.docker/                         psp/
helm/                            .rnd
helm-v3.4.1-linux-amd64.tar.gz   roles/
hpa/                             .ssh/
hpa-v2.yaml                      statefulset/
ingress-nginx/                   .tcshrc
ingress-nginx-v0.48.1.tar        .viminfo
k8s-1.21.3.tar.gz                volumes/
```

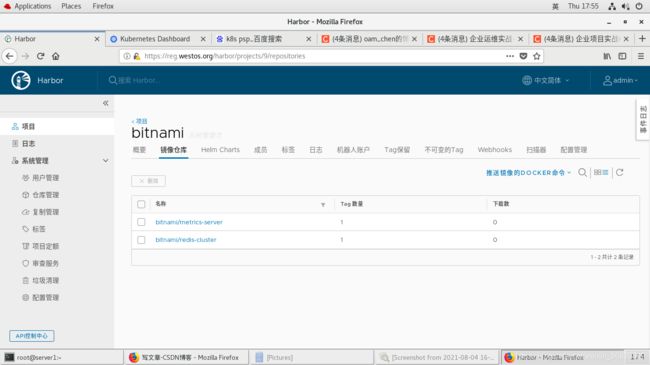

Adding a third-party chart repository to Helm

```
[root@server2 ~]# helm repo add bitnami https://charts.bitnami.com/bitnami
"bitnami" has been added to your repositories
[root@server2 ~]# helm repo list
NAME      URL
bitnami   https://charts.bitnami.com/bitnami
```

Pull a chart to the local machine and point it at the local registry:

```
[root@server2 ~]# helm search repo redis
NAME                    CHART VERSION   APP VERSION   DESCRIPTION
bitnami/redis           14.8.7          6.2.5         Open source, advanced key-value store. It is of...
bitnami/redis-cluster   6.3.2           6.2.5         Open source, advanced key-value store. It is of...
[root@server2 ~]# helm pull bitnami/redis-cluster
[root@server2 ~]# ls
auth                             limit
calico                           metallb
calico-v3.19.1.tar               metallb-v0.10.2.tar
certs                            metrics-server
configmap                        myapp.tar
dashboard                        nfs-client
helm                             nfs-client-provisioner.yaml
helm-v3.4.1-linux-amd64.tar.gz   pod
hpa                              psp
hpa-v2.yaml                      redis-cluster-6.3.2.tgz
ingress-nginx                    roles
ingress-nginx-v0.48.1.tar        statefulset
k8s-1.21.3.tar.gz                volumes
kube-flannel.yml
[root@server2 ~]# mv redis-cluster-6.3.2.tgz helm/
[root@server2 ~]# cd helm/
[root@server2 helm]# ls
helm-v3.4.1-linux-amd64.tar.gz  linux-amd64  redis-cluster-6.3.2.tgz
[root@server2 helm]# tar zxf redis-cluster-6.3.2.tgz
[root@server2 helm]# ls
helm-v3.4.1-linux-amd64.tar.gz  redis-cluster
linux-amd64                     redis-cluster-6.3.2.tgz
[root@server2 helm]# cd redis-cluster/
[root@server2 redis-cluster]# ls
Chart.lock  Chart.yaml  README.md  values.yaml
charts      img         templates
[root@server2 redis-cluster]# vim values.yaml
```

In values.yaml, set the global image registry to the local Harbor registry:

```yaml
global:
  imageRegistry: "reg.westos.org"
```

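Upload the image to the local registry. First find the image tag in values.yaml:

```
[root@server2 redis-cluster]# vim values.yaml
```

```yaml
image:
  tag: 6.2.5-debian-10-r0
```

Then, on server1, pull the image, retag it for reg.westos.org and push it: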

```
[root@server1 ~]# docker pull bitnami/redis-cluster:6.2.5-debian-10-r0
6.2.5-debian-10-r0: Pulling from bitnami/redis-cluster
86a19151e740: Already exists
57a1d2d33896: Pull complete
205fbdb2c4df: Pull complete
b50f4b53d89e: Pull complete
daec4ad90bad: Pull complete
3a2283fca554: Pull complete
795c1eb71802: Pull complete
4084d80f7f4f: Pull complete
Digest: sha256:0adf6a9c86f820646455894283af74205209ceaa1dd1c3751e811b4c8ef02652
Status: Downloaded newer image for bitnami/redis-cluster:6.2.5-debian-10-r0
docker.io/bitnami/redis-cluster:6.2.5-debian-10-r0
[root@server1 ~]# docker tag docker.io/bitnami/redis-cluster:6.2.5-debian-10-r0 reg.westos.org/bitnami/redis-cluster:6.2.5-debian-10-r0
[root@server1 ~]# docker push reg.westos.org/bitnami/redis-cluster:6.2.5-debian-10-r0
The push refers to repository [reg.westos.org/bitnami/redis-cluster]
265c6517575c: Pushed
27927d559e88: Pushed
f96e0118c822: Pushed
1615551dea1b: Pushed
c65559991f66: Pushed
8a9b93540aad: Pushed
566ccae6ccab: Pushed
3fa01eaf81a5: Mounted from library/metrics-server
6.2.5-debian-10-r0: digest: sha256:0adf6a9c86f820646455894283af74205209ceaa1dd1c3751e811b4c8ef02652 size: 1996
```

Building a Helm Chart

```
[root@server2 helm]# yum install -y tree
[root@server2 helm]# helm create mychart
Creating mychart
[root@server2 helm]# ls
helm-v3.4.1-linux-amd64.tar.gz  redis-cluster
linux-amd64                     redis-cluster-6.3.2.tgz
mychart
[root@server2 helm]# tree mychart
mychart
├── charts
├── Chart.yaml
├── templates
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── NOTES.txt
│   ├── serviceaccount.yaml
│   ├── service.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

3 directories, 10 files
[root@server2 helm]# cd mychart/
[root@server2 mychart]# ls
charts  Chart.yaml  templates  values.yaml
[root@server2 mychart]# vim Chart.yaml
[root@server2 mychart]# vim values.yaml
```

Set appVersion in Chart.yaml, and the image repository and tag in values.yaml, so that the chart deploys myapp:v1:

```yaml
# Chart.yaml
appVersion: v1
```

```yaml
# values.yaml (excerpt)
image:
  repository: myapp
  tag: "v1"
```

Check the chart:

```
[root@server2 mychart]# helm lint .
==> Linting .
[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed
```

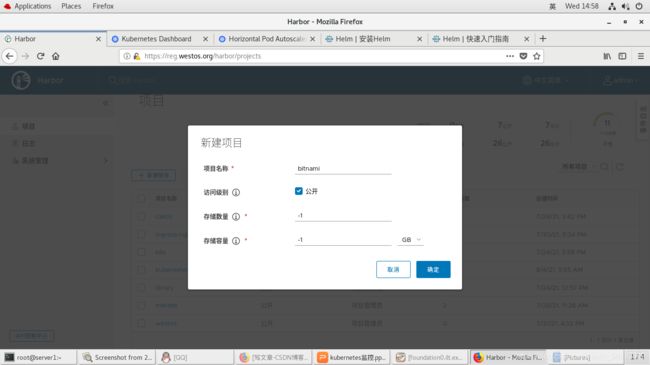

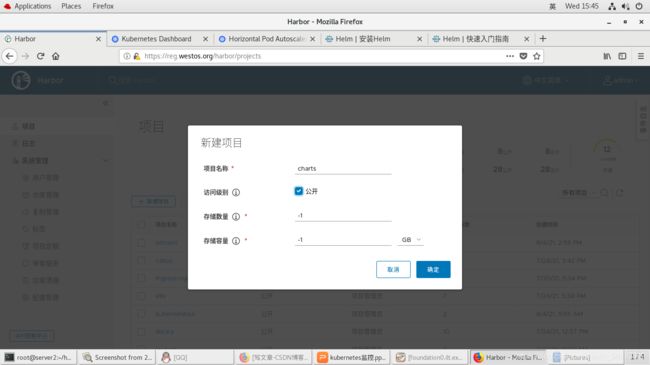

On server1, reinstall Harbor with ChartMuseum enabled so that it can also serve as a chart repository:

```
[root@server1 harbor]# docker-compose down
[root@server1 harbor]# sh install.sh --help

Note: Please set hostname and other necessary attributes in harbor.yml first. DO NOT use localhost or 127.0.0.1 for hostname, because Harbor needs to be accessed by external clients.
Please set --with-notary if needs enable Notary in Harbor, and set ui_url_protocol/ssl_cert/ssl_cert_key in harbor.yml bacause notary must run under https.
Please set --with-clair if needs enable Clair in Harbor
Please set --with-chartmuseum if needs enable Chartmuseum in Harbor
[root@server1 harbor]# sh install.sh --with-chartmuseum
```

Package the chart

[root@server2 mychart]# cd ..

[root@server2 helm]# helm package mychart/

Successfully packaged chart and saved it to: /root/helm/mychart-0.1.0.tgz
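helm package builds the archive name from the name and version fields in Chart.yaml. Here mychart plus 0.1.0 gives mychart-0.1.0.tgz. The hypothetical helper below predicts that file name, for example so a script can push the archive right after packaging. It assumes simple top-level key: value lines in Chart.yaml.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print <name>-<version>.tgz from the top-level
# name/version keys of <chart_dir>/Chart.yaml (surrounding quotes stripped).
chart_archive_name() {
  local chart_yaml=$1/Chart.yaml name version
  name=$(awk -F': *' '$1 == "name" {gsub(/"/, "", $2); print $2; exit}' "$chart_yaml")
  version=$(awk -F': *' '$1 == "version" {gsub(/"/, "", $2); print $2; exit}' "$chart_yaml")
  printf '%s-%s.tgz\n' "$name" "$version"
}
```

Example: helm package mychart/ && ls "$(chart_archive_name mychart)"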

[root@server2 helm]# ls

helm-v3.4.1-linux-amd64.tar.gz mychart-0.1.0.tgz

linux-amd64 redis-cluster

mychart redis-cluster-6.3.2.tgz

Set up a local chart repository

TLS repository: make server2 trust the Harbor CA certificate first

[root@server2 ~]# cd /etc/pki/ca-trust/source/anchors/

[root@server2 anchors]# ls

[root@server2 anchors]# cp /etc/docker/certs.d/reg.westos.org/ca.crt .

[root@server2 anchors]# ls

ca.crt

[root@server2 anchors]# update-ca-trust

[root@server2 helm]# helm repo add westos https://reg.westos.org/chartrepo/charts

"westos" has been added to your repositories

Install the helm-push plugin (helm-push_0.9.0_linux_amd64.tar.gz)

[root@server2 helm]# ls

bin mychart

helm-push_0.9.0_linux_amd64.tar.gz mychart-0.1.0.tgz

helm-v3.4.1-linux-amd64.tar.gz plugin.yaml

LICENSE redis-cluster

linux-amd64 redis-cluster-6.3.2.tgz

[root@server2 helm]# helm env

HELM_BIN="helm"

HELM_CACHE_HOME="/root/.cache/helm"

HELM_CONFIG_HOME="/root/.config/helm"

HELM_DATA_HOME="/root/.local/share/helm"

HELM_DEBUG="false"

HELM_KUBEAPISERVER=""

HELM_KUBEASGROUPS=""

HELM_KUBEASUSER=""

HELM_KUBECONTEXT=""

HELM_KUBETOKEN=""

HELM_MAX_HISTORY="10"

HELM_NAMESPACE="default"

HELM_PLUGINS="/root/.local/share/helm/plugins"

HELM_REGISTRY_CONFIG="/root/.config/helm/registry.json"

HELM_REPOSITORY_CACHE="/root/.cache/helm/repository"

HELM_REPOSITORY_CONFIG="/root/.config/helm/repositories.yaml"

[root@server2 helm]# mkdir -p ~/.local/share/helm/plugins/push

[root@server2 helm]# tar zxf helm-push_0.9.0_linux_amd64.tar.gz -C ~/.local/share/helm/plugins/push
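The manual steps above read the plugin directory from helm env and then unpack the tarball into a subdirectory of it. Below is a small sketch of the same steps. helm_env_value and install_plugin_tarball are hypothetical helper names. It assumes helm env prints KEY="value" lines, as shown above. Afterwards, helm plugin list should show the push plugin.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `helm env` output on stdin, print the value of one key.
helm_env_value() {
  local key=$1
  sed -n -E "s/^${key}=\"(.*)\"$/\1/p"
}

# Hypothetical helper: unpack a plugin tarball into <plugins_dir>/<plugin_name>,
# mirroring the mkdir + tar commands above.
install_plugin_tarball() {
  local tarball=$1 plugins_dir=$2 plugin_name=$3
  mkdir -p "${plugins_dir}/${plugin_name}"
  tar zxf "$tarball" -C "${plugins_dir}/${plugin_name}"
}
```

Example: install_plugin_tarball helm-push_0.9.0_linux_amd64.tar.gz "$(helm env | helm_env_value HELM_PLUGINS)" push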

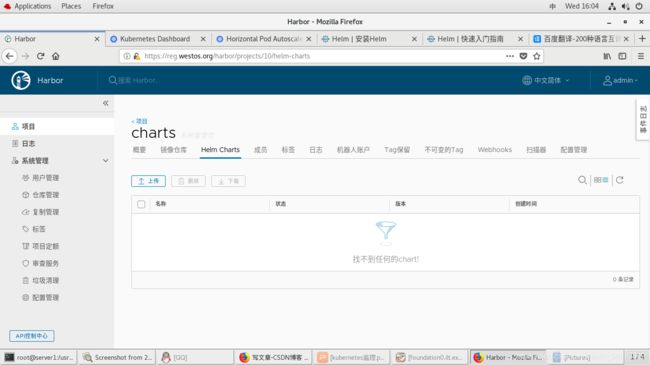

Push mychart to the repository

[root@server2 helm]# helm push mychart-0.1.0.tgz westos --insecure -u admin -p westos

Pushing mychart-0.1.0.tgz to westos...

Done.

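Check the uploaded chart. The first search finds nothing because Helm searches its locally cached repository index, which is only refreshed by helm repo update.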

[root@server2 helm]# helm search repo mychart

No results found

[root@server2 helm]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "westos" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@server2 helm]# helm search repo mychart

NAME CHART VERSION APP VERSION DESCRIPTION

westos/mychart 0.1.0 v1 A Helm chart for Kubernetes

Deploy mychart to the Kubernetes cluster

[root@server2 helm]# helm install mychart westos/mychart

NAME: mychart

LAST DEPLOYED: Wed Aug 4 05:17:06 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=mychart" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@server2 helm]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mychart ClusterIP 10.109.245.8 <none> 80/TCP 31s

[root@server2 helm]# curl 10.109.245.8

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Upgrade: switch the image tag to v2 and bump the chart to 0.2.0

[root@server2 mychart]# vim values.yaml

tag: "v2"   # line 11, image.tag

[root@server2 mychart]# vim Chart.yaml

version: 0.2.0   # line 18, chart version

appVersion: v2   # line 23
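Helm requires chart versions to be SemVer 2. Here the minor number is bumped (0.1.0 to 0.2.0) so the new package does not collide with the one already pushed. Below is a hypothetical helper that performs the same bump. It assumes a plain MAJOR.MINOR.PATCH value with no pre-release suffix, and GNU sed.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: bump the minor part of `version:` in <chart_dir>/Chart.yaml,
# reset patch to 0, write it back and print the new version.
# Pre-release/build suffixes (e.g. 1.0.0-rc1) are not handled.
bump_chart_minor() {
  local chart_yaml=$1/Chart.yaml cur next major minor
  cur=$(awk -F': *' '$1 == "version" {gsub(/"/, "", $2); print $2; exit}' "$chart_yaml")
  IFS=. read -r major minor _ <<< "$cur"
  next="${major}.$((minor + 1)).0"
  sed -i -E "s/^version:.*/version: ${next}/" "$chart_yaml"
  echo "$next"
}
```

Example: bump_chart_minor mychart prints 0.2.0. appVersion is left untouched, so it still has to be edited separately, as above.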

[root@server2 helm]# helm lint mychart

==> Linting mychart

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

[root@server2 helm]# helm package mychart

Successfully packaged chart and saved it to: /root/helm/mychart-0.2.0.tgz

[root@server2 helm]# ls

bin mychart-0.1.0.tgz

helm-push_0.9.0_linux_amd64.tar.gz mychart-0.2.0.tgz

helm-v3.4.1-linux-amd64.tar.gz plugin.yaml

LICENSE redis-cluster

linux-amd64 redis-cluster-6.3.2.tgz

mychart

[root@server2 helm]# helm push mychart-0.2.0.tgz westos --insecure -u admin -p westos

Pushing mychart-0.2.0.tgz to westos...

Done.

[root@server2 helm]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "westos" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@server2 helm]# helm search repo mychart

NAME CHART VERSION APP VERSION DESCRIPTION

westos/mychart 0.2.0 v2 A Helm chart for Kubernetes

[root@server2 helm]# helm search repo mychart -l

NAME CHART VERSION APP VERSION DESCRIPTION

westos/mychart 0.2.0 v2 A Helm chart for Kubernetes

westos/mychart 0.1.0 v1 A Helm chart for Kubernetes
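Without --version, helm upgrade takes the newest chart version in the repository index, which is why the upgrade below picks 0.2.0. To pin the version explicitly instead, a hypothetical helper can pull the highest version out of the helm search repo -l listing. It uses sort -V (GNU coreutils) so that 0.10.0 sorts after 0.2.0.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `helm search repo <chart> -l` output on stdin and
# print the highest CHART VERSION for the given repo/chart name.
latest_chart_version() {
  local chart=$1
  awk -v c="$chart" 'NR > 1 && $1 == c {print $2}' | sort -V | tail -n 1
}
```

Example: helm upgrade mychart westos/mychart --version "$(helm search repo mychart -l | latest_chart_version westos/mychart)"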

[root@server2 helm]# helm upgrade mychart westos/mychart

Release "mychart" has been upgraded. Happy Helming!

NAME: mychart

LAST DEPLOYED: Wed Aug 4 05:45:34 2021

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=mychart" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@server2 helm]# curl 10.109.245.8

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
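The Service keeps its ClusterIP across the upgrade, but the Deployment replaces its pods with a rolling update. A curl issued immediately after helm upgrade may therefore still reach a v1 pod. Below is a hedged polling sketch. It assumes the page contains "Version: vN", as in the output above. wait_for_version is a hypothetical helper name.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: run a command (e.g. curl against the Service IP) until the
# "Version: vN" field in its output equals the expected value, or give up.
# Usage: wait_for_version <expected> <attempts> <command...>
wait_for_version() {
  local want=$1 tries=$2 i got
  shift 2
  for ((i = 1; i <= tries; i++)); do
    # `|| true` keeps set -e/pipefail from aborting when the command fails
    got=$("$@" 2>/dev/null | sed -n -E 's/.*Version: ([^ |]+).*/\1/p' || true)
    if [[ "$got" == "$want" ]]; then
      echo "$got"
      return 0
    fi
    sleep 1
  done
  return 1
}
```

Example: wait_for_version v2 30 curl -s 10.109.245.8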

Roll back

[root@server2 helm]# helm history mychart

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Wed Aug 4 05:17:06 2021 superseded mychart-0.1.0 v1 Install complete

2 Wed Aug 4 05:45:34 2021 deployed mychart-0.2.0 v2 Upgrade complete

[root@server2 helm]# helm rollback mychart 1

Rollback was a success! Happy Helming!

[root@server2 helm]#

[root@server2 helm]# curl 10.109.245.8

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
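A rollback does not delete revision 2. It records a new revision, which is why helm list further down shows REVISION 3 for mychart with chart mychart-0.1.0. To roll back to the revision just before the newest one without reading the table by eye, the hypothetical helper below picks the second-highest revision number from helm history output.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `helm history <release>` output on stdin and print the
# second-highest REVISION (header skipped; order of rows does not matter).
previous_revision() {
  awk 'NR > 1 && $1 ~ /^[0-9]+$/ {
         r = $1 + 0
         if (r > max) { prev = max; max = r } else if (r > prev) { prev = r }
       }
       END { if (prev > 0) print prev }'
}
```

Example: helm rollback mychart "$(helm history mychart | previous_revision)"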

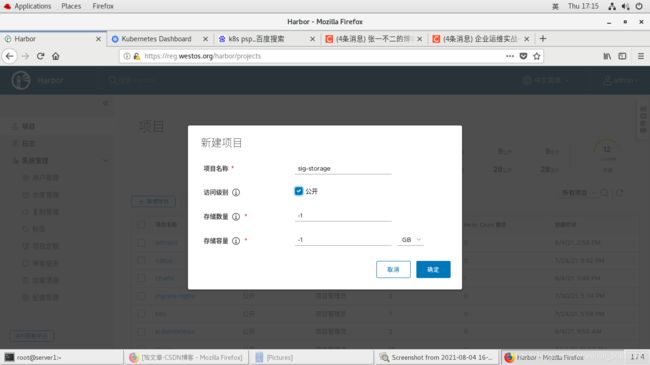

Deploy nfs-client-provisioner with Helm

[root@server1 ~]# ls

1 game2048.tar nginx.tar

auth harbor-offline-installer-v1.10.1.tgz perl.tar

busybox.tar hpa-example.tar registry2.tar

calico-v3.19.1.tar ingress-nginx-v0.48.1.tar rhel7.tar

certs nfs-client-provisioner-v4.0.0.tar server5

convoy nfs-client-provisioner.yaml server6

convoy.tar.gz nfs-provisioner-v4.0.2.tar stress.tar

[root@server1 ~]# docker load -i nfs-provisioner-v4.0.2.tar

1a5ede0c966b: Loading layer 3.052MB/3.052MB

ad321585b8f5: Loading layer 42.02MB/42.02MB

Loaded image: reg.westos.org/sig-storage/nfs-subdir-external-provisioner:v4.0.2

[root@server1 ~]# docker push reg.westos.org/sig-storage/nfs-subdir-external-provisioner:v4.0.2

The push refers to repository [reg.westos.org/sig-storage/nfs-subdir-external-provisioner]

ad321585b8f5: Pushed

1a5ede0c966b: Pushed

v4.0.2: digest: sha256:f741e403b3ca161e784163de3ebde9190905fdbf7dfaa463620ab8f16c0f6423 size: 739

Remove the nfs-client-provisioner deployed earlier from a plain manifest

[root@server2 ~]# cd nfs-client/

[root@server2 nfs-client]# ls

nfs-client-provisioner.yaml pod.yaml test-pvc.yaml

[root@server2 nfs-client]# kubectl delete -f nfs-client-provisioner.yaml

serviceaccount "nfs-client-provisioner" deleted

clusterrole.rbac.authorization.k8s.io "nfs-client-provisioner-runner" deleted

clusterrolebinding.rbac.authorization.k8s.io "run-nfs-client-provisioner" deleted

role.rbac.authorization.k8s.io "leader-locking-nfs-client-provisioner" deleted

rolebinding.rbac.authorization.k8s.io "leader-locking-nfs-client-provisioner" deleted

deployment.apps "nfs-client-provisioner" deleted

storageclass.storage.k8s.io "managed-nfs-storage" deleted

[root@server2 nfs-client]# kubectl get ns

NAME STATUS AGE

default Active 11d

ingress-nginx Active 5d8h

kube-node-lease Active 11d

kube-public Active 11d

kube-system Active 11d

kubernetes-dashboard Active 31h

metallb-system Active 6d2h

nfs-client-provisioner Active 4d1h

[root@server2 nfs-client]# kubectl -n nfs-client-provisioner get all

No resources found in nfs-client-provisioner namespace.

Uninstall the earlier Helm releases (mychart and redis-cluster)

[root@server2 nfs-subdir-external-provisioner]# helm list --all-namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

mychart default 3 2021-08-04 05:49:49.968314794 -0400 EDT deployed mychart-0.1.0 v1

redis-cluster default 1 2021-08-04 03:05:27.808555455 -0400 EDT deployed redis-cluster-6.3.2 6.2.5

[root@server2 nfs-subdir-external-provisioner]# helm uninstall mychart

release "mychart" uninstalled

[root@server2 nfs-subdir-external-provisioner]# helm uninstall redis-cluster

release "redis-cluster" uninstalled

[root@server2 nfs-subdir-external-provisioner]# helm list --all-namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

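Delete the leftover PVCs. helm uninstall does not remove PVCs created from a StatefulSet's volumeClaimTemplates (here the redis-cluster data claims), so delete them by hand.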

[root@server2 nfs-client]# kubectl delete pvc --all

persistentvolumeclaim "redis-data-redis-cluster-0" deleted

persistentvolumeclaim "redis-data-redis-cluster-1" deleted

persistentvolumeclaim "redis-data-redis-cluster-2" deleted

persistentvolumeclaim "redis-data-redis-cluster-3" deleted

persistentvolumeclaim "redis-data-redis-cluster-4" deleted

persistentvolumeclaim "redis-data-redis-cluster-5" deleted

[root@server2 nfs-client]# kubectl get pvc

No resources found in default namespace.

Add the nfs-subdir-external-provisioner chart repository

[root@server2 nfs]# helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

"nfs-subdir-external-provisioner" has been added to your repositories

[root@server2 nfs]# helm repo list

NAME URL

bitnami https://charts.bitnami.com/bitnami

harbor https://helm.goharbor.io

westos https://reg.westos.org/chartrepo/charts

nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

Pull nfs-subdir-external-provisioner-4.0.13.tgz and extract it

[root@server2 nfs]# helm pull nfs-subdir-external-provisioner/nfs-subdir-external-provisioner

[root@server2 nfs]# ls

nfs-subdir-external-provisioner-4.0.13.tgz

[root@server2 nfs]# tar zxf nfs-subdir-external-provisioner-4.0.13.tgz

[root@server2 nfs]# ls

nfs-subdir-external-provisioner nfs-subdir-external-provisioner-4.0.13.tgz

[root@server2 nfs]# cd nfs-subdir-external-provisioner/

[root@server2 nfs-subdir-external-provisioner]# ls

Chart.yaml ci README.md templates values.yaml

Edit values.yaml

[root@server2 nfs-subdir-external-provisioner]# vim values.yaml

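repository: sig-storage/nfs-subdir-external-provisioner   # line 5, image.repository

server: 172.25.12.1   # line 11, nfs.server (NFS server address)

path: /mnt/nfs   # line 12, nfs.path (exported directory)

defaultClass: true   # line 25, storageClass.defaultClass (make nfs-client the default StorageClass)

archiveOnDelete: false   # line 38, storageClass.archiveOnDelete (do not keep an archived copy of the data when a PVC is deleted)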
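Instead of editing the chart's own values.yaml, you can keep the same settings in a separate override file and pass it with -f. Values given that way are merged over the chart defaults, so the chart can be re-pulled without losing the changes. Below is a sketch. The key paths (image.repository, nfs.server, nfs.path, storageClass.defaultClass, storageClass.archiveOnDelete) are assumed from the lines edited above, and write_nfs_values is a hypothetical helper name.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write a values override file with the same settings
# as the values.yaml edits above.
write_nfs_values() {
  local out=$1 server=$2 path=$3
  cat > "$out" <<EOF
image:
  repository: sig-storage/nfs-subdir-external-provisioner
nfs:
  server: ${server}
  path: ${path}
storageClass:
  defaultClass: true
  archiveOnDelete: false
EOF
}
```

Example: write_nfs_values nfs-values.yaml 172.25.12.1 /mnt/nfs, then helm install nfs-client-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner -n nfs-client-provisioner -f nfs-values.yaml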

Install the NFS provisioner

[root@server2 nfs-subdir-external-provisioner]# helm install nfs-client-provisioner . -n nfs-client-provisioner

NAME: nfs-client-provisioner

LAST DEPLOYED: Thu Aug 5 05:33:53 2021

NAMESPACE: nfs-client-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None

Check the release

[root@server2 nfs-subdir-external-provisioner]# helm list --all-namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nfs-client-provisioner nfs-client-provisioner 1 2021-08-05 05:33:53.641904397 -0400 EDT deployed nfs-subdir-external-provisioner-4.0.13 4.0.2

Confirm that the StorageClass was created and marked as default

[root@server2 nfs-subdir-external-provisioner]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-client-provisioner-nfs-subdir-external-provisioner Delete Immediate true 93s

[root@server2 nfs-subdir-external-provisioner]#

Test: create a PVC that does not name a storage class

[root@server2 nfs]# cat test-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-pv1
  annotations:
    #volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

[root@server2 nfs]# kubectl apply -f test-pvc.yaml

persistentvolumeclaim/nfs-pv1 created

[root@server2 nfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nfs-pv1 Bound pvc-6ad80a92-6fc0-40da-8d03-6439be154890 100Mi RWX nfs-client 89s
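The storage-class annotation in test-pvc.yaml is commented out and no storageClassName is set. The claim therefore falls back to the default StorageClass, and the STORAGECLASS column confirms that it got nfs-client. To check the result in a script, the hypothetical helpers below read the STATUS column from kubectl get pvc output. kubectl get pvc nfs-pv1 -o jsonpath='{.status.phase}' prints the same phase directly.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `kubectl get pvc` output on stdin and print the
# STATUS column for one claim (header line skipped).
pvc_phase() {
  awk -v n="$1" 'NR > 1 && $1 == n {print $2}'
}

# Hypothetical helper: succeed only if the claim is Bound.
pvc_is_bound() {
  [[ "$(pvc_phase "$1")" == "Bound" ]]
}
```

Example: kubectl get pvc | pvc_is_bound nfs-pv1 && echo ok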

Deployment succeeded: the claim is Bound to a dynamically provisioned volume.

[root@server2 nfs]# kubectl delete -f test-pvc.yaml

persistentvolumeclaim "nfs-pv1" deleted

[root@server2 nfs]# kubectl get pvc

No resources found in default namespace.

Test finished, PVC deleted.

Deploy metrics-server monitoring with Helm

Remove the metrics-server deployed earlier from components.yaml

[root@server2 ~]# cd metrics-server/

[root@server2 metrics-server]# ls

components.yaml recommended.yaml

[root@server2 metrics-server]# kubectl delete -f components.yaml

serviceaccount "metrics-server" deleted

clusterrole.rbac.authorization.k8s.io "system:aggregated-metrics-reader" deleted

clusterrole.rbac.authorization.k8s.io "system:metrics-server" deleted

rolebinding.rbac.authorization.k8s.io "metrics-server-auth-reader" deleted

clusterrolebinding.rbac.authorization.k8s.io "metrics-server:system:auth-delegator" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:metrics-server" deleted

service "metrics-server" deleted

deployment.apps "metrics-server" deleted

apiservice.apiregistration.k8s.io "v1beta1.metrics.k8s.io" deleted

[root@server2 metrics-server]# kubectl top node

W0805 05:49:55.494682 16272 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

error: Metrics API not available

[root@server2 metrics-server]# kubectl top node --use-protocol-buffers

error: Metrics API not available

Push the metrics-server image to Harbor

[root@server1 ~]# docker pull bitnami/metrics-server:0.5.0-debian-10-r59

0.5.0-debian-10-r59: Pulling from bitnami/metrics-server

2e4c6c15aa52: Pull complete

a894671f5dca: Pull complete

049ba86a208b: Pull complete

5d7966168fc3: Pull complete

9804d459b822: Pull complete

a416b953cdc3: Pull complete

Digest: sha256:118158c95578aa18b42d49d60290328c23cbdb8252b812d4a7c142d46ecabcf6

Status: Downloaded newer image for bitnami/metrics-server:0.5.0-debian-10-r59

docker.io/bitnami/metrics-server:0.5.0-debian-10-r59

[root@server1 ~]#

[root@server1 ~]# docker tag bitnami/metrics-server:0.5.0-debian-10-r59 reg.westos.org/bitnami/metrics-server:0.5.0-debian-10-r59

[root@server1 ~]# docker push reg.westos.org/bitnami/metrics-server:0.5.0-debian-10-r59

The push refers to repository [reg.westos.org/bitnami/metrics-server]

c30a7821a361: Pushed

5ecebc0f05f5: Pushed

6e8fc12b0093: Pushed

7f6d39b64fd8: Pushed

ade06b968aa1: Pushed

209a01d06165: Pushed

0.5.0-debian-10-r59: digest: sha256:118158c95578aa18b42d49d60290328c23cbdb8252b812d4a7c142d46ecabcf6 size: 1578

[root@server2 ~]# kubectl create namespace metrics-server

namespace/metrics-server created

Pull the metrics-server chart and extract it

[root@server2 ~]# helm pull bitnami/metrics-server

[root@server2 ~]# ls

auth limit

calico metallb

calico-v3.19.1.tar metallb-v0.10.2.tar

certs metrics-server

configmap metrics-server-5.9.2.tgz

dashboard myapp.tar

helm nfs-client

helm-v3.4.1-linux-amd64.tar.gz nfs-client-provisioner.yaml

hpa pod

hpa-v2.yaml psp

ingress-nginx roles

ingress-nginx-v0.48.1.tar schedu

k8s-1.21.3.tar.gz statefulset

kube-flannel.yml volumes

[root@server2 ~]# tar zxf metrics-server-5.9.2.tgz -C /root/helm

[root@server2 ~]# cd helm/

[root@server2 helm]# ls

bin mychart-0.1.0.tgz

helm-push_0.9.0_linux_amd64.tar.gz mychart-0.2.0.tgz

helm-v3.4.1-linux-amd64.tar.gz nfs

LICENSE plugin.yaml

linux-amd64 redis-cluster

metrics-server redis-cluster-6.3.2.tgz

mychart

[root@server2 helm]# cd metrics-server/

[root@server2 metrics-server]# ls

Chart.lock charts Chart.yaml ci README.md templates values.yaml

Edit values.yaml

[root@server2 metrics-server]# vim values.yaml

imageRegistry: "reg.westos.org"   # line 10, global.imageRegistry
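The first helm install below reports that the metrics.k8s.io/v1beta1 API service is not enabled, and a second helm upgrade with apiService.create=true fixes it. That upgrade also points at bitnami/metrics-server from the repository rather than at the locally edited chart in the current directory. As a result, the edited imageRegistry default in the local values.yaml probably did not carry over to revision 2. A hedged alternative is to put both settings in one override file and pass it to every install and upgrade. This assumes the bitnami chart keys global.imageRegistry and apiService.create (the latter is named in the chart's own NOTES). write_metrics_values is a hypothetical helper name.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: one override file for the bitnami metrics-server chart that
# sets the private registry and enables creation of the metrics API service.
write_metrics_values() {
  local out=$1 registry=$2
  cat > "$out" <<EOF
global:
  imageRegistry: "${registry}"
apiService:
  create: true
EOF
}
```

Example: write_metrics_values metrics-values.yaml reg.westos.org, then helm install metrics-server . -n metrics-server -f metrics-values.yaml (or helm upgrade with the same -f file for an existing release).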

Install, then fix the error reported in the NOTES as they suggest

[root@server2 metrics-server]# helm install metrics-server . -n metrics-server

NAME: metrics-server

LAST DEPLOYED: Thu Aug 5 05:59:55 2021

NAMESPACE: metrics-server

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

The metric server has been deployed.

########################################################################################

### ERROR: The metrics.k8s.io/v1beta1 API service is not enabled in the cluster ###

########################################################################################

You have disabled the API service creation for this release. As the Kubernetes version in the cluster

does not have metrics.k8s.io/v1beta1, the metrics API will not work with this release unless:

Option A:

You complete your metrics-server release by running:

helm upgrade --namespace metrics-server metrics-server bitnami/metrics-server \

--set apiService.create=true

Option B:

You configure the metrics API service outside of this Helm chart

[root@server2 metrics-server]# helm upgrade --namespace metrics-server metrics-server bitnami/metrics-server --set apiService.create=true

Release "metrics-server" has been upgraded. Happy Helming!

NAME: metrics-server

LAST DEPLOYED: Thu Aug 5 06:01:57 2021

NAMESPACE: metrics-server

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

The metric server has been deployed.

In a few minutes you should be able to list metrics using the following

command:

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"

[root@server2 metrics-server]# kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"

{"kind":"NodeMetricsList","apiVersion":"metrics.k8s.io/v1beta1","metadata":{},"items":[{"metadata":{"name":"server2","creationTimestamp":"2021-08-05T10:02:19Z","labels":{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","disktype":"ssd","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"server2","kubernetes.io/os":"linux","node-role.kubernetes.io/control-plane":"","node-role.kubernetes.io/master":"","node.kubernetes.io/exclude-from-external-load-balancers":""}},"timestamp":"2021-08-05T10:02:09Z","window":"1m1s","usage":{"cpu":"370313132n","memory":"1354776Ki"}},{"metadata":{"name":"server3","creationTimestamp":"2021-08-05T10:02:19Z","labels":{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","disktype":"ssd","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"server3","kubernetes.io/os":"linux","roles":"master"}},"timestamp":"2021-08-05T10:02:11Z","window":"1m0s","usage":{"cpu":"43978624n","memory":"470224Ki"}},{"metadata":{"name":"server4","creationTimestamp":"2021-08-05T10:02:19Z","labels":{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"server4","kubernetes.io/os":"linux"}},"timestamp":"2021-08-05T10:02:15Z","window":"1m1s","usage":{"cpu":"142302214n","memory":"585064Ki"}}]}

Check the resources in the metrics-server namespace

[root@server2 metrics-server]# kubectl -n metrics-server get all

NAME READY STATUS RESTARTS AGE

pod/metrics-server-849cc8b4c7-h7qxc 1/1 Running 0 77s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/metrics-server ClusterIP 10.99.32.7 <none> 443/TCP 3m19s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/metrics-server 1/1 1 1 3m19s

NAME DESIRED CURRENT READY AGE

replicaset.apps/metrics-server-6b4db5d56b 0 0 0 3m19s

replicaset.apps/metrics-server-849cc8b4c7 1 1 1 77s

Test

[root@server2 metrics-server]# kubectl top node

W0805 06:03:48.166085 26188 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

server2 282m 14% 1343Mi 70%

server3 36m 3% 458Mi 51%

server4 146m 14% 577Mi 64%

Deployment succeeded: kubectl top node now returns node metrics.
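Once kubectl top node works, its output is easy to post-process. The W0805 line is a klog warning written to stderr, so it should not reach a pipe. Below is a hedged sketch that lists nodes at or above a memory threshold. nodes_over_mem is a hypothetical helper name, and it assumes the five-column layout shown above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `kubectl top node` output on stdin (header skipped) and
# print "<node> <MEMORY%>" for every node at or above the given percentage.
nodes_over_mem() {
  awk -v t="$1" 'NR > 1 && NF >= 5 {
    m = $5; sub(/%$/, "", m)
    if (m + 0 >= t + 0) print $1, $5
  }'
}
```

Example: kubectl top node --use-protocol-buffers | nodes_over_mem 60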