基于MATLAB的车道线识别、自动驾驶识别

使用单目相机的视觉感知

这个例子展示了如何构建一个能够进行车道边界和车辆检测的单眼摄像机传感器仿真。该传感器将在车辆坐标系中报告这些检测结果。在本例中,您将了解自动驾驶工具箱™使用的坐标系统,以及设计单目相机传感器设计所涉及的计算机视觉技术。

概述

包含ADAS功能或被设计为全自动驾驶的车辆依赖于多个传感器。这些传感器可以包括声纳、雷达、激光雷达和照相机。这个例子说明了单眼相机系统设计中所涉及的一些概念。这样的传感器可以完成许多任务,包括:

车道边界检测

检测车辆、人和其他物体

从自我车辆到障碍物的距离估计

随后,由单眼摄像机传感器返回的读数可用于发出车道偏离警告、碰撞警告,或设计一个车道保持辅助控制系统。与其他传感器一起,它还可以用于实现紧急制动系统和其他安全关键功能。

该例子实现了在一个完全开发的单眼相机系统上发现的特征子集。它检测车道边界和车辆背面,并报告它们在车辆坐标系中的位置。

定义相机配置

了解相机的内在和外在校准参数对于像素和车辆坐标之间的精确转换至关重要。

首先要定义照相机的内在参数。以下参数是在之前使用棋盘校准模式的相机校准程序确定的。你可以使用相机校准器应用程序来获取你的相机。

focalLength = [309.4362, 344.2161]; % [fx, fy] in pixel units %[fx,fy],以像素为单位表示

principalPoint = [318.9034, 257.5352]; % [cx, cy] optical center in pixel coordinates %[cx,cy]光学中心的像素坐标

imageSize = [480, 640]; % [nrows, mcols]请注意,透镜失真系数被忽略了,因为数据中失真很小。这些参数存储在一个摄影机初始化对象中。

camIntrinsics = cameraIntrinsics(focalLength, principalPoint, imageSize);

接下来,定义相对于车辆底盘的摄像头方向。您将使用此信息建立相机外部,定义三维摄像机坐标系相对于车辆坐标系的位置。

height = 2.1798; % mounting height in meters from the ground 安装高度%,以米为单位

pitch = 14; % pitch of the camera in degrees 摄像机的角度

上述量可以由外函函数返回的旋转和平移矩阵得到。节距指定照相机从水平位置开始的倾斜度。对于本例中使用的照相机,传感器的滚转和偏航都为零。定义内部信息和外部信息的整个配置都存储在单相机对象中。

sensor = monoCamera(camIntrinsics, height, 'Pitch', pitch);

请注意,单摄像头对象设置了一个非常特定的车辆坐标系,其中x轴指向车辆向前,y轴指向车辆的左侧,z轴指向地面向上。

默认情况下,坐标系的原点在地面上,直接在由相机的焦点定义的相机中心的下方。可以通过使用单个照相机对象的传感器位置属性来移动原点。此外,单相机提供图像到车辆和车辆到图像方法之间的图像和车辆坐标系统之间的转换。

注:坐标系之间的转换假设平坦道路。它是基于建立一个同源性矩阵,将成像平面上的位置映射到路面上的位置。非平坦的道路会在距离计算中引入误差,特别是在远离车辆的位置。

加载视频的一帧

在处理整个视频之前,处理单个视频帧,以说明单目相机传感器设计所涉及的概念。

首先,请创建一个可打开视频文件的视频阅读器对象。为了提高内存效率,视频阅读器一次加载一个视频帧。

videoName = 'caltech_cordova1.avi';

videoReader = VideoReader(videoName);

阅读一个包含车道标记和车辆的有趣的框架。

timeStamp = 0.06667; % time from the beginning of the video 从视频一开始开始的时间

videoReader.CurrentTime = timeStamp; % point to the chosen frame 指向所选的框架

frame = readFrame(videoReader); % read frame at timeStamp seconds 读取帧

imshow(frame) % display frame 显示帧

注:本例忽略了镜头失真。如果您担心由镜头失真引起的距离测量误差,在这一点上,您将使用非失真图像函数来消除镜头失真。

创建鸟瞰图像

有许多方法来分割和检测车道标记。其中一种方法是使用鸟瞰图的图像变换。尽管它会产生计算成本,但这种转换提供了一个主要的优势。鸟瞰视图中的车道标记厚度均匀,从而简化了分割过程。属于同一车道的车道标记也变得平行,从而使进一步的分析更加容易。

给定相机设置,鸟瞰对象将原始图像转换为鸟瞰图。此对象允许您指定要使用车辆坐标进行变换的区域。请注意,当摄像机安装高度以米为单位指定时,车辆坐标单位是由单体摄像机对象建立的。例如,如果高度以毫米为单位指定,那么模拟的其余部分将使用毫米。

% Using vehicle coordinates, define area to transform %使用车辆坐标,定义要变换的区域

distAheadOfSensor = 30; % in meters, as previously specified in monoCamera height input

spaceToOneSide = 6; % all other distance quantities are also in meters %所有其他距离量也以米为单位

bottomOffset = 3;

outView = [bottomOffset, distAheadOfSensor, -spaceToOneSide, spaceToOneSide]; % [xmin, xmax, ymin, ymax]

imageSize = [NaN, 250]; % output image width in pixels; height is chosen automatically to preserve units per pixel ratio %输出图像宽度为像素;自动选择高度以保持每像素比的单位

birdsEyeConfig = birdsEyeView(sensor, outView, imageSize);.

生成鸟瞰图。

birdsEyeImage = transformImage(birdsEyeConfig, frame);

figure

imshow(birdsEyeImage

远离传感器的区域更模糊,因为有更少的像素,因此需要更多的插值。

请注意,只要您可以在车辆坐标中定位车道边界候选像素,您就可以在不使用鸟瞰视图的情况下完成后一个处理步骤。

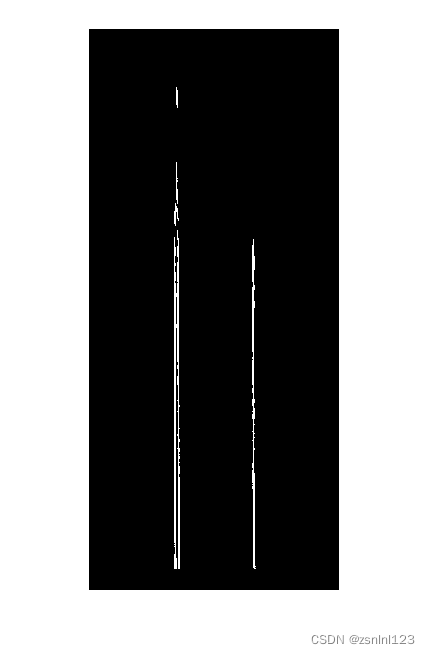

在车辆坐标中找到车道标记

拥有鸟视图图像,您现在可以使用分段车道标记岭功能从路面上分离车道标记候选像素。选择这种技术是因为其简单性和相对有效性。现有的替代分割技术包括语义分割(深度学习)和可操纵过滤器。您可以使用下面的这些技术来获得下一阶段所需的二进制掩码。

下面函数的大多数输入参数都是用世界单位指定的,例如,输入分段车道标记岭的车道标记宽度。世界单位的使用允许您轻松地尝试新的传感器,即使当输入图像大小的变化。这对于使设计更加健壮和灵活,在改变的相机硬件和处理不同的标准在许多国家。

% Convert to grayscale %转换为灰度

birdsEyeImage = rgb2gray(birdsEyeImage);

% Lane marker segmentation ROI in world units %车道标记分割ROI在世界单位

vehicleROI = outView - [-1, 2, -3, 3]; % look 3 meters to left and right, and 4 meters ahead of the sensor %看左右3米,领先传感器4米

approxLaneMarkerWidthVehicle = 0.25; % 25 centimeters 25厘米

% Detect lane features 检测车道特征

laneSensitivity = 0.25;

birdsEyeViewBW = segmentLaneMarkerRidge(birdsEyeImage, birdsEyeConfig, approxLaneMarkerWidthVehicle,...

'ROI', vehicleROI, 'Sensitivity', laneSensitivity);

figure

imshow(birdsEyeViewBW)

定位各个车道标记发生在固定在摄像头传感器上的车辆坐标中。本示例使用一个抛物线车道边界模型,ax^2+bx+c,来表示车道标记。其他的表示形式,如三次多项式或样条曲线,也是可能的。转换到车辆坐标是必要的,否则车道标记曲率不能正确地用抛物线表示,而它受到透视扭曲的影响。

车道模型适用于车辆路径上的车道标记。道路上的车道标志或涂在沥青上的路标将被拒绝。

% Obtain lane candidate points in vehicle coordinates %获取车辆坐标中的车道候选点

[imageX, imageY] = find(birdsEyeViewBW);

xyBoundaryPoints = imageToVehicle(birdsEyeConfig, [imageY, imageX]);

由于分割后的点包含许多不属于实际车道标记的异常值,因此使用基于随机样本共识(RANSAC)的鲁棒曲线拟合算法。

返回抛物线线边界对象、边界数组中的边界及其抛物线参数(a、b、c)。

maxLanes = 2; % look for maximum of two lane markers %查找最多的两个车道标记

boundaryWidth = 3*approxLaneMarkerWidthVehicle; % expand boundary width 扩展边界宽度的%

[boundaries, boundaryPoints] = findParabolicLaneBoundaries(xyBoundaryPoints,boundaryWidth, ...

'MaxNumBoundaries', maxLanes, 'validateBoundaryFcn', @validateBoundaryFcn);

请注意,查找抛物线车道边界需要一个函数句柄,验证边界和fcn。本示例函数列在本示例函数的最后。使用此附加输入,您可以根据a、b、c参数的值拒绝一些曲线。它还可以通过约束基于前一视频帧的未来a,b,c值来利用一系列帧上的时间信息。

在上一步中发现的一些曲线可能仍然无效。例如,当一条曲线符合人行横道标记时。使用额外的启发式方法来拒绝许多这样的曲线。

% Establish criteria for rejecting boundaries based on their length %根据边界的长度建立拒绝边界的标准

maxPossibleXLength = diff(vehicleROI(1:2));

minXLength = maxPossibleXLength * 0.60; % establish a threshold %建立阈值

% Reject short boundaries 拒绝短边界的比例

isOfMinLength = arrayfun(@(b)diff(b.XExtent) > minXLength, boundaries);

boundaries = boundaries(isOfMinLength);

根据查找抛物线车道边界函数计算的强度度量去除额外的边界。根据ROI和图像大小设置车道强度阈值。

% To compute the maximum strength, assume all image pixels within the ROI

% are lane candidate points

%要计算最大强度,请假设ROI内的所有图像像素

%是车道候选点

birdsImageROI = vehicleToImageROI(birdsEyeConfig, vehicleROI);

[laneImageX,laneImageY] = meshgrid(birdsImageROI(1):birdsImageROI(2),birdsImageROI(3):birdsImageROI(4));

% Convert the image points to vehicle points%将图像点转换为车辆点

vehiclePoints = imageToVehicle(birdsEyeConfig,[laneImageX(:),laneImageY(:)]);

% Find the maximum number of unique x-axis locations possible for any lane

% boundary

%查找任何车道可能存在的唯一x轴位置的最大数目

边界百分比

maxPointsInOneLane = numel(unique(vehiclePoints(:,1)));

% Set the maximum length of a lane boundary to the ROI length

%将车道边界的最大长度设置为ROI长度

maxLaneLength = diff(vehicleROI(1:2));

% Compute the maximum possible lane strength for this image size/ROI size

% specification

%计算此图像大小/ROI大小的最大可能的车道强度

%规格

maxStrength = maxPointsInOneLane/maxLaneLength;

% Reject weak boundaries

拒绝弱边界的比例

isStrong = [boundaries.Strength] > 0.4*maxStrength;

boundaries = boundaries(isStrong);

将车道标记类型分类为实线/虚线的启发式方法包含在本示例底部列出的辅助函数中。了解车道标记的类型对于自动转向车辆至关重要。例如,禁止穿过实体标记。

boundaries = classifyLaneTypes(boundaries, boundaryPoints);

% Locate two ego lanes if they are present

如果两个自我通道,定位它们存在

xOffset = 0; % 0 meters from the sensor 距离传感器%0米

distanceToBoundaries = boundaries.computeBoundaryModel(xOffset);

% Find candidate ego boundaries

%找到候选人的自我边界

leftEgoBoundaryIndex = [];

rightEgoBoundaryIndex = [];

minLDistance = min(distanceToBoundaries(distanceToBoundaries>0));

minRDistance = max(distanceToBoundaries(distanceToBoundaries<=0));

if ~isempty(minLDistance)

leftEgoBoundaryIndex = distanceToBoundaries == minLDistance;

end

if ~isempty(minRDistance)

rightEgoBoundaryIndex = distanceToBoundaries == minRDistance;

end

leftEgoBoundary = boundaries(leftEgoBoundaryIndex);

rightEgoBoundary = boundaries(rightEgoBoundaryIndex);

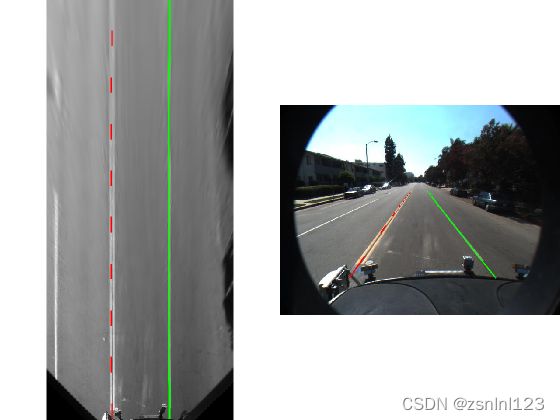

在鸟瞰图图像和常规视图中显示检测到的车道标记。

xVehiclePoints = bottomOffset:distAheadOfSensor;

birdsEyeWithEgoLane = insertLaneBoundary(birdsEyeImage, leftEgoBoundary , birdsEyeConfig, xVehiclePoints, 'Color','Red');

birdsEyeWithEgoLane = insertLaneBoundary(birdsEyeWithEgoLane, rightEgoBoundary, birdsEyeConfig, xVehiclePoints, 'Color','Green');

frameWithEgoLane = insertLaneBoundary(frame, leftEgoBoundary, sensor, xVehiclePoints, 'Color','Red');

frameWithEgoLane = insertLaneBoundary(frameWithEgoLane, rightEgoBoundary, sensor, xVehiclePoints, 'Color','Green');

figure

subplot('Position', [0, 0, 0.5, 1.0]) % [left, bottom, width, height] in normalized units

imshow(birdsEyeWithEgoLane)

subplot('Position', [0.5, 0, 0.5, 1.0])

imshow(frameWithEgoLane)

在车辆坐标中定位车辆

检测和跟踪车辆在前碰撞警告(FCW)和自动紧急制动(AEB)系统中至关重要。

装载一个聚合通道特征(ACF)检测器,通过预先训练来检测车辆的前后部。像这样的检测器可以处理发出碰撞警告很重要的场景。例如,这对于检测一辆在自我车辆前面穿过道路的车辆是不够的。

detector = vehicleDetectorACF();

% Width of a common vehicle is between 1.5 to 2.5 meters

%普通车辆的宽度在1.5米到2.5米之间

vehicleWidth = [1.5, 2.5];

使用configureDetectorMonoCamera功能来专门化通用的ACF检测器,以考虑到典型的汽车应用程序的几何形状。通过这种摄像头配置,这个新的探测器只搜索路面上的车辆,因为没有点搜索消失点上方的车辆。这节省了计算时间,减少了假报的数量。

monoDetector = configureDetectorMonoCamera(detector, sensor, vehicleWidth);

[bboxes, scores] = detect(monoDetector, frame);

因为此示例展示了如何为了演示目的而只处理单个帧,因此不能在原始检测之上应用跟踪。跟踪的添加使返回车辆位置的结果更加稳健,因为即使车辆部分遮挡,跟踪器继续返回车辆的位置。有关更多信息,请参见“使用摄像头跟踪多辆车辆”示例。

接下来,将车辆检测结果转换为车辆坐标。本示例末尾包含的计算车辆定位函数,计算车辆在车辆坐标中的位置,给出一个由检测算法在图像坐标中返回的边界框。它在车辆坐标中返回边界框底部的中心位置。因为我们使用的是一个单眼相机传感器和一个简单的同质成像,只有沿路面的距离才能得到准确的计算。在三维空间中计算任意位置需要使用立体相机或其他能够进行三角测量的传感器。

locations = computeVehicleLocations(bboxes, sensor);

% Overlay the detections on the video frame

%覆盖视频帧上的检测结果

imgOut = insertVehicleDetections(frame, locations, bboxes);

figure;

imshow(imgOut);

模拟一个完整的传感器与视频输入

现在您已经了解了各个步骤的内部工作原理,让我们把它们放在一起,并将它们应用到一个视频序列中,在那里我们也可以利用时间信息。

将视频回放到开始,然后处理视频。下面的代码被缩短了,因为所有的关键参数都在前面的步骤中定义了。在这里,使用的参数没有进一步的解释。

videoReader.CurrentTime = 0;

isPlayerOpen = true;

snapshot = [];

while hasFrame(videoReader) && isPlayerOpen

% Grab a frame of video %获取视频的一帧

frame = readFrame(videoReader);

% Compute birdsEyeView image %计算鸟目视图图像

birdsEyeImage = transformImage(birdsEyeConfig, frame);

birdsEyeImage = rgb2gray(birdsEyeImage);

% Detect lane boundary features %检测车道边界特征

birdsEyeViewBW = segmentLaneMarkerRidge(birdsEyeImage, birdsEyeConfig, ...

approxLaneMarkerWidthVehicle, 'ROI', vehicleROI, ...

'Sensitivity', laneSensitivity);

% Obtain lane candidate points in vehicle coordinates

%获取车辆坐标中的车道候选点

[imageX, imageY] = find(birdsEyeViewBW);

xyBoundaryPoints = imageToVehicle(birdsEyeConfig, [imageY, imageX]);

% Find lane boundary candidates %查找车道边界候选人

[boundaries, boundaryPoints] = findParabolicLaneBoundaries(xyBoundaryPoints,boundaryWidth, ...

'MaxNumBoundaries', maxLanes, 'validateBoundaryFcn', @validateBoundaryFcn);

% Reject boundaries based on their length and strength

%根据边界的长度和强度拒绝边界

isOfMinLength = arrayfun(@(b)diff(b.XExtent) > minXLength, boundaries);

boundaries = boundaries(isOfMinLength);

isStrong = [boundaries.Strength] > 0.2*maxStrength;

boundaries = boundaries(isStrong);

% Classify lane marker type %分类车道标记类型

boundaries = classifyLaneTypes(boundaries, boundaryPoints);

% Find ego lanes %查找自我通道

xOffset = 0; % 0 meters from the sensor 距离传感器%0米

distanceToBoundaries = boundaries.computeBoundaryModel(xOffset);

% Find candidate ego boundaries

%找到候选人的自我边界

leftEgoBoundaryIndex = [];

rightEgoBoundaryIndex = [];

minLDistance = min(distanceToBoundaries(distanceToBoundaries>0));

minRDistance = max(distanceToBoundaries(distanceToBoundaries<=0));

if ~isempty(minLDistance)

leftEgoBoundaryIndex = distanceToBoundaries == minLDistance;

end

if ~isempty(minRDistance)

rightEgoBoundaryIndex = distanceToBoundaries == minRDistance;

end

leftEgoBoundary = boundaries(leftEgoBoundaryIndex);

rightEgoBoundary = boundaries(rightEgoBoundaryIndex);

% Detect vehicles

%检测车辆

[bboxes, scores] = detect(monoDetector, frame);

locations = computeVehicleLocations(bboxes, sensor);

% Visualize sensor outputs and intermediate results. Pack the core

% sensor outputs into a struct.

%可视化传感器输出和中间结果。堆芯

%的传感器输出到一个结构体中。

sensorOut.leftEgoBoundary = leftEgoBoundary;

sensorOut.rightEgoBoundary = rightEgoBoundary;

sensorOut.vehicleLocations = locations;

sensorOut.xVehiclePoints = bottomOffset:distAheadOfSensor;

sensorOut.vehicleBoxes = bboxes;

% Pack additional visualization data, including intermediate results

%打包其他可视化数据,包括中间结果

intOut.birdsEyeImage = birdsEyeImage;

intOut.birdsEyeConfig = birdsEyeConfig;

intOut.vehicleScores = scores;

intOut.vehicleROI = vehicleROI;

intOut.birdsEyeBW = birdsEyeViewBW;

closePlayers = ~hasFrame(videoReader);

isPlayerOpen = visualizeSensorResults(frame, sensor, sensorOut, ...

intOut, closePlayers);

timeStamp = 7.5333; % take snapshot for publishing at timeStamp seconds

%获取快照以便发布时间戳秒

if abs(videoReader.CurrentTime - timeStamp) < 0.01

snapshot = takeSnapshot(frame, sensor, sensorOut);

end

end

显示视频帧。快照是在时间戳秒时拍摄的。

if ~isempty(snapshot)

figure

imshow(snapshot)

end

在不同的视频上尝试传感器设计

螺旋单传感器类将设置和所有必要的步骤,以模拟单目相机传感器组装成一个完整的包,可以应用于任何视频。由于传感器设计中使用的大多数参数都是基于世界单位的,因此该设计对相机参数的变化是稳健的,包括图像大小。请注意,螺旋单传感器类中的代码与上一节中的循环不同,上一节中的循环用于说明基本概念。

除了提供一个新的视频外,您还必须提供与该视频对应的摄像机配置。这个过程显示在这里。在你自己的视频中试试。

% Sensor configuration %传感器配置

focalLength = [309.4362, 344.2161];

principalPoint = [318.9034, 257.5352];

imageSize = [480, 640];

height = 2.1798; % mounting height in meters from the ground

安装高度%,以米为单位

pitch = 14; % pitch of the camera in degrees 摄像机的角度

camIntrinsics = cameraIntrinsics(focalLength, principalPoint, imageSize);

sensor = monoCamera(camIntrinsics, height, 'Pitch', pitch);

videoReader = VideoReader('caltech_washington1.avi');

Create the helperMonoSensor object and apply it to the video.

创建螺旋单传感器对象,并将其应用于视频。

monoSensor = helperMonoSensor(sensor);

monoSensor.LaneXExtentThreshold = 0.5;

% To remove false detections from shadows in this video, we only return

% vehicle detections with higher scores.

%要删除此视频中阴影中的错误检测,我们只返回

分数较高的车辆检测率为%。

monoSensor.VehicleDetectionThreshold = 20;

isPlayerOpen = true;

snapshot = [];

while hasFrame(videoReader) && isPlayerOpen

frame = readFrame(videoReader); % get a frame 得到一个框架

sensorOut = processFrame(monoSensor, frame);

closePlayers = ~hasFrame(videoReader);

isPlayerOpen = displaySensorOutputs(monoSensor, frame, sensorOut, closePlayers);

timeStamp = 11.1333; % take snapshot for publishing at timeStamp seconds

%获取快照以便发布时间戳秒

if abs(videoReader.CurrentTime - timeStamp) < 0.01

snapshot = takeSnapshot(frame, sensor, sensorOut);

end

end

Display the video frame. Snapshot is taken at timeStamp seconds.

显示视频帧。快照是在时间戳秒时拍摄的。

if ~isempty(snapshot)

figure

imshow(snapshot)

end

支持功能

可视化传感器结果显示了来自单眼相机传感器模拟的核心信息和中间结果。

function isPlayerOpen = visualizeSensorResults(frame, sensor, sensorOut,...

intOut, closePlayers)

% Unpack the main inputs%打开主要输入数据

leftEgoBoundary = sensorOut.leftEgoBoundary;

rightEgoBoundary = sensorOut.rightEgoBoundary;

locations = sensorOut.vehicleLocations;

xVehiclePoints = sensorOut.xVehiclePoints;

bboxes = sensorOut.vehicleBoxes;

% Unpack additional intermediate data %卸载其他中间数据

birdsEyeViewImage = intOut.birdsEyeImage;

birdsEyeConfig = intOut.birdsEyeConfig;

vehicleROI = intOut.vehicleROI;

birdsEyeViewBW = intOut.birdsEyeBW;

% Visualize left and right ego-lane boundaries in bird's-eye view

%在鸟瞰图中可视化左右自我通道的边界

birdsEyeWithOverlays = insertLaneBoundary(birdsEyeViewImage, leftEgoBoundary , birdsEyeConfig, xVehiclePoints, 'Color','Red');

birdsEyeWithOverlays = insertLaneBoundary(birdsEyeWithOverlays, rightEgoBoundary, birdsEyeConfig, xVehiclePoints, 'Color','Green');

% Visualize ego-lane boundaries in camera view

%在摄像头视图中可视化自我车道边界

frameWithOverlays = insertLaneBoundary(frame, leftEgoBoundary, sensor, xVehiclePoints, 'Color','Red');

frameWithOverlays = insertLaneBoundary(frameWithOverlays, rightEgoBoundary, sensor, xVehiclePoints, 'Color','Green');

frameWithOverlays = insertVehicleDetections(frameWithOverlays, locations, bboxes);

imageROI = vehicleToImageROI(birdsEyeConfig, vehicleROI);

ROI = [imageROI(1) imageROI(3) imageROI(2)-imageROI(1) imageROI(4)-imageROI(3)];

% Highlight candidate lane points that include outliers

%突出显示包括异常值的候选车道点

birdsEyeViewImage = insertShape(birdsEyeViewImage, 'rectangle', ROI); % show detection ROI 显示检测ROI

birdsEyeViewImage = imoverlay(birdsEyeViewImage, birdsEyeViewBW, 'blue');

% Display the results %显示结果

frames = {frameWithOverlays, birdsEyeViewImage, birdsEyeWithOverlays};

persistent players;

if isempty(players)

frameNames = {'Lane marker and vehicle detections', 'Raw segmentation', 'Lane marker detections'};

players = helperVideoPlayerSet(frames, frameNames);

end

update(players, frames);

% Terminate the loop when the first player is closed %在第一个播放器关闭时终止该循环

isPlayerOpen = isOpen(players, 1);

if (~isPlayerOpen || closePlayers) % close down the other players

%关闭其他玩家

clear players;

end

end

计算车辆位置计算车辆在车辆坐标中的位置,给定一个由图像坐标中的检测算法返回的边界框。它在车辆坐标中返回边界框底部的中心位置。由于使用了单眼相机传感器和简单的同质图,因此只能计算沿路面的距离。在三维空间中计算任意位置需要使用立体摄像机或另一个能够进行三角测量的传感器。

function locations = computeVehicleLocations(bboxes, sensor)

locations = zeros(size(bboxes,1),2);

for i = 1:size(bboxes, 1)

bbox = bboxes(i, :);

% Get [x,y] location of the center of the lower portion of the

% detection bounding box in meters. bbox is [x, y, width, height] in

% image coordinates, where [x,y] represents upper-left corner.

的下部中心的位置

%检测边界框,以米为单位。bbox为[x、y、宽度、高度]在

%图像坐标,其中[x,y]表示左上角。

yBottom = bbox(2) + bbox(4) - 1;

xCenter = bbox(1) + (bbox(3)-1)/2; % approximate center

locations(i,:) = imageToVehicle(sensor, [xCenter, yBottom]);

end

end

insertVehicleDetections inserts bounding boxes and displays [x,y] locations corresponding to returned vehicle detections.

插入车辆检测:插入边界框,并显示与返回的车辆检测对应的[x,y]位置。

function imgOut = insertVehicleDetections(imgIn, locations, bboxes)

imgOut = imgIn;

for i = 1:size(locations, 1)

location = locations(i, :);

bbox = bboxes(i, :);

label = sprintf('X=%0.2f, Y=%0.2f', location(1), location(2));

imgOut = insertObjectAnnotation(imgOut, ...

'rectangle', bbox, label, 'Color','g');

end

end

vehicleToImageROI converts ROI in vehicle coordinates to image coordinates in bird's-eye-view image.

车辆图像投资回报率将车辆坐标中的ROI转换为鸟视图图像中的图像坐标。

function imageROI = vehicleToImageROI(birdsEyeConfig, vehicleROI)

vehicleROI = double(vehicleROI);

loc2 = abs(vehicleToImage(birdsEyeConfig, [vehicleROI(2) vehicleROI(4)]));

loc1 = abs(vehicleToImage(birdsEyeConfig, [vehicleROI(1) vehicleROI(4)]));

loc4 = vehicleToImage(birdsEyeConfig, [vehicleROI(1) vehicleROI(4)]);

loc3 = vehicleToImage(birdsEyeConfig, [vehicleROI(1) vehicleROI(3)]);

[minRoiX, maxRoiX, minRoiY, maxRoiY] = deal(loc4(1), loc3(1), loc2(2), loc1(2));

imageROI = round([minRoiX, maxRoiX, minRoiY, maxRoiY]);

end

validateBoundaryFcn rejects some of the lane boundary curves computed using the RANSAC algorithm.

验证边界算法拒绝使用RANSAC算法计算的一些车道边界曲线。

function isGood = validateBoundaryFcn(params)

if ~isempty(params)

a = params(1);

% Reject any curve with a small 'a' coefficient, which makes it highly

% curved.

%拒绝任何具有较小的“a”系数的曲线,这使它具有很高的系数

曲线百分比。

isGood = abs(a) < 0.003; % a from ax^2+bx+c a来自ax^2+bx+c

else

isGood = false;

end

end

classifyLaneTypes determines lane marker types as solid, dashed, etc.

分类:车道类型将车道标记类型确定为实线、虚线等。

function boundaries = classifyLaneTypes(boundaries, boundaryPoints)

for bInd = 1 : numel(boundaries)

vehiclePoints = boundaryPoints{bInd};

% Sort by x 按x排序

vehiclePoints = sortrows(vehiclePoints, 1);

xVehicle = vehiclePoints(:,1);

xVehicleUnique = unique(xVehicle);

% Dashed vs solid

xdiff = diff(xVehicleUnique);

% Sufficiently large threshold to remove spaces between points of a

% solid line, but not large enough to remove spaces between dashes

%有足够大的阈值,以删除a的点之间的空格

%实线,但没有大到足以删除破折号之间的空格

xdifft = mean(xdiff) + 3*std(xdiff);

largeGaps = xdiff(xdiff > xdifft);

% Safe default %安全默认值

boundaries(bInd).BoundaryType= LaneBoundaryType.Solid;

if largeGaps>2

% Ideally, these gaps should be consistent, but you cannot rely

% on that unless you know that the ROI extent includes at least 3 dashes.

%理想情况下,这些差距应该是一致的,但您不能依赖

除非你知道ROI范围内至少包含3个破折号。

boundaries(bInd).BoundaryType = LaneBoundaryType.Dashed;

end

end

end

takeSnapshot captures the output for the HTML publishing report.

获取快照捕获HTML发布报告的输出。

function I = takeSnapshot(frame, sensor, sensorOut)

% Unpack the inputs %解压缩输入

leftEgoBoundary = sensorOut.leftEgoBoundary;

rightEgoBoundary = sensorOut.rightEgoBoundary;

locations = sensorOut.vehicleLocations;

xVehiclePoints = sensorOut.xVehiclePoints;

bboxes = sensorOut.vehicleBoxes;

frameWithOverlays = insertLaneBoundary(frame, leftEgoBoundary, sensor, xVehiclePoints, 'Color','Red');

frameWithOverlays = insertLaneBoundary(frameWithOverlays, rightEgoBoundary, sensor, xVehiclePoints, 'Color','Green');

frameWithOverlays = insertVehicleDetections(frameWithOverlays, locations, bboxes);

I = frameWithOverlays;

end

远离传感器的区域更模糊,因为有更少的像素,因此需要更多的插值。

请注意,只要您可以在车辆坐标中定位车道边界候选像素,您就可以在不使用鸟瞰视图的情况下完成后一个处理步骤。