2022-5-2至2022-5-8周报

文章目录

- 摘要

- 基础知识

-

- 错误记录--AttributeError

-

- AttributeError: module 'tensorflow' has no attribute 'reset_default_graph'

- AttributeError: module 'tensorflow.compat.v1' has no attribute 'contrib'

- 命令pip和conda

- GAN代码详细分析

摘要

There are three portions in the artical. Some errors that encountered in the process of running through the source code of a paper and the solutions are recorded in the first part. Some commands for Python package management, as well as an understanding of the most common pip and conda commands are recorded in the second part. The implementation code of GAN in detail is analyzed int the third part, starting from the optimization formula of the original paper, setting the pseudo code of the realization formula, and splitting the code into: generating real data of Gaussian random distribution, defining linear operations, and defining the neural network architecture of the generator and the discriminator, the creation of the GAN model, the training of the model, and the main program as the running entry.

文章记录了一周内所学的基础知识。第一部分记录了跑通一篇论文的源码的过程中遇到的一些问题错误以及解决办法。第二部分记录了Python包管理的一些命令,以及最常见的pip和conda命令的理解。第三部分详细分析了一遍GAN的实现代码,从原论文中的优化公式入手,设定实现公式的伪代码,并将代码拆分成:生成高斯随机分布的真实数据、定义线性运算、定义生成器和判别器的神经网络架构、创建GAN模型、训练模型和作为运行入口的主程序。

基础知识

错误记录–AttributeError

关于TensorFlow框架,近几年有1.x更新成2.x的大变动。如果想跑TensorFlow 1.x Python 脚本,而环境中的TensorFlow版本为2.x,则会产生AttributeError

AttributeError: module ‘tensorflow’ has no attribute ‘reset_default_graph’

解决方法:

- 手工或使用tf_upgrade_v2升级代码

在引入处,将

import tensorflow as tf

改为

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

使用了在2.x中提供的兼容性模块。某些 API 符号无法通过简单的字符串替换进行升级。为了确保代码在 TensorFlow 2.0 中仍受支持,升级脚本包含了一个 compat.v1 模块。该模块可将 TF 1.x 符号替换为等效的 tf.compat.v1.foo 引用。

使用自动升级,参考自动将代码升级到TensorFlow 2

- 使用1.x环境

当运行环境中存在1.x版本的TensorFlow,在运行import tensorflow前,运行%tensorflow_version 1.x

AttributeError: module ‘tensorflow.compat.v1’ has no attribute ‘contrib’

由于 TensorFlow 2.x 模块弃用(例如,tf.flags 和 tf.contrib),切换到 compat.v1 无法解决某些更改。

解决方法:使用1.x环境

命令pip和conda

两者均为包管理器

pip是最为广泛使用的Python包管理器,可以帮助我们获得最新的Python包并进行管理。常用命令如下:

pip install [package-name] # 安装名为[package-name]的包

pip install [package-name]==X.X # 安装名为[package-name]的包并指定版本X.X

pip install [package-name] --proxy=代理服务器IP:端口号 # 使用代理服务器安装

pip install [package-name] --upgrade # 更新名为[package-name]的包

pip uninstall [package-name] # 删除名为[package-name]的包

pip list # 列出当前环境下已安装的所有包

conda包管理器是Anaconda自带的包管理器,可以帮助我们在 conda 环境下轻松地安装各种包。相较于 pip 而言,conda 的通用性更强(不仅是 Python 包,其他包如 CUDA Toolkit 和 cuDNN 也可以安装),但 conda 源的版本更新往往较慢。常用命令如下:

conda install [package-name] # 安装名为[package-name]的包

conda install [package-name]=X.X # 安装名为[package-name]的包并指定版本X.X

conda update [package-name] # 更新名为[package-name]的包

conda remove [package-name] # 删除名为[package-name]的包

conda list # 列出当前环境下已安装的所有包

conda search [package-name] # 列出名为[package-name]的包在conda源中的所有可用版本

conda虚拟环境常用命令:

conda create --name [env-name] # 建立名为[env-name]的Conda虚拟环境

conda activate [env-name] # 进入名为[env-name]的Conda虚拟环境

conda deactivate # 退出当前的Conda虚拟环境

conda env remove --name [env-name] # 删除名为[env-name]的Conda虚拟环境

conda env list # 列出所有Conda虚拟环境

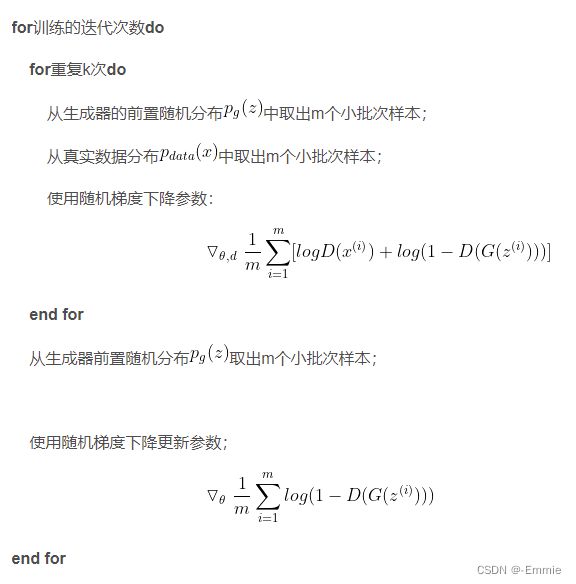

GAN代码详细分析

需要做以下这个式子的优化

![]()

相加的这两个期望在数值上等于真实分布积分+生成数据分布积分。

那么在前置的随机分布 p g ( z ) p_g(z) pg(z)中取出m个随机数,从真实数据 p d a t a ( x ) p_{data}(x) pdata(x)中取出m个真实样本,再使用平均值代替上面的期望。公式改写成: V = V= V=

伪代码:

为随机生成的真实高斯分布数据,用GAN来生成数据

先导入相关包,并设置随机参数

import argparse

import numpy as np

from scipy.stats import norm

import tensorflow as tf

import matplotlib.pyplot as plt

from matplotlib import animation

import seaborn as sns

sns.set(color_codes=True)

seed = 42

np.random.seed(seed)

tf.set_random_seed(seed)

设置真实数据的分布、生成器的初始化分布

class DataDistribution(object):

def __init__(self):

self.mu = 3 #均值为3

self.sigma = 0.5 #方差为0.5

def sample(self, N):

samples = np.random.normal(self.mu, self.sigma, N)

samples.sort()

return samples

#生成器初始分布为平均分布

class GeneratorDistribution(object):

def __init__(self, range):

self.range = range

def sample(self, N):

return np.linspace(-self.range, self.range, N) + \

np.random.random(N) * 0.01

设置线性运算,并用于生成器和判别器

def linear(input, output_dim, scope=None, stddev=1.0):

norm = tf.random_normal_initializer(stddev=stddev)

const = tf.constant_initializer(0.0)

with tf.variable_scope(scope or 'linear'):

w = tf.get_variable('w', [input.get_shape()[1], output_dim], initializer=norm)

b = tf.get_variable('b', [output_dim], initializer=const)

return tf.matmul(input, w) + b

def generator(input, h_dim):

h0 = tf.nn.softplus(linear(input, h_dim, 'g0'))

h1 = linear(h0, 1, 'g1')

return h1

def discriminator(input, h_dim):

h0 = tf.tanh(linear(input, h_dim * 2, 'd0')) #线性函数结果再输入激活函数

h1 = tf.tanh(linear(h0, h_dim * 2, 'd1'))

h2 = tf.tanh(linear(h1, h_dim * 2, 'd2'))

h3 = tf.sigmoid(linear(h2, 1, 'd3'))

return h3

设置优化器,使用学习率衰减的梯度下降方法

def optimizer(loss, var_list, initial_learning_rate):

decay = 0.95

num_decay_steps = 150

batch = tf.Variable(0)

learning_rate = tf.train.exponential_decay(

initial_learning_rate,

batch,

num_decay_steps,

decay,

staircase=True

)

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(

loss,

global_step=batch,

var_list=var_list

)

return optimizer

搭建GAN模型

class GAN(object):

def __init__(self, data, gen, num_steps, batch_size, log_every):

self.data = data

self.gen = gen

self.num_steps = num_steps

self.batch_size = batch_size

self.log_every = log_every

self.mlp_hidden_size = 4

self.learning_rate = 0.03

self._create_model()

def _create_model(self):

......

def train(self):

......

创建模型:创建预训练判别器D_pre、生成器Generator和判别器Discriminator

- 创建预训练判别器D_pre

def _create_model(self):

with tf.variable_scope('D_pre'):

self.pre_input = tf.placeholder(tf.float32, shape=(self.batch_size, 1))

self.pre_labels = tf.placeholder(tf.float32, shape=(self.batch_size, 1))

D_pre = discriminator(self.pre_input, self.mlp_hidden_size)

self.pre_loss = tf.reduce_mean(tf.square(D_pre - self.pre_labels))

self.pre_opt = optimizer(self.pre_loss, None, self.learning_rate)

- 创建生成器Generator

with tf.variable_scope('Generator'):

self.z = tf.placeholder(tf.float32, shape=(self.batch_size, 1))

self.G = generator(self.z, self.mlp_hidden_size)

- 创建判别器Discriminator

with tf.variable_scope('Discriminator') as scope:

self.x = tf.placeholder(tf.float32, shape=(self.batch_size, 1))

self.D1 = discriminator(self.x, self.mlp_hidden_size)

scope.reuse_variables()

self.D2 = discriminator(self.G, self.mlp_hidden_size)

- 分别计算生成器和判别器的损失loss_g和loss_d,并进行优化

self.loss_d = tf.reduce_mean(-tf.log(self.D1) - tf.log(1 - self.D2))

self.loss_g = tf.reduce_mean(-tf.log(self.D2))

self.d_pre_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='D_pre')

self.d_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='Discriminator')

self.g_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='Generator')

self.opt_d = optimizer(self.loss_d, self.d_params, self.learning_rate)

self.opt_g = optimizer(self.loss_g, self.g_params, self.learning_rate)

进行训练:预训练判别器D_pre,然后将训练后的参数共享给判别器Discriminator

- 预训练判别器D_pre

def train(self):

with tf.Session() as session:

tf.global_variables_initializer().run()

# pretraining discriminator

num_pretrain_steps = 1000

for step in range(num_pretrain_steps):

d = (np.random.random(self.batch_size) - 0.5) * 10.0

labels = norm.pdf(d, loc=self.data.mu, scale=self.data.sigma)

pretrain_loss, _ = session.run([self.pre_loss, self.pre_opt], {

self.pre_input: np.reshape(d, (self.batch_size, 1)),

self.pre_labels: np.reshape(labels, (self.batch_size, 1))

})

self.weightsD = session.run(self.d_pre_params)

# copy weights from pre-training over to new D network

for i, v in enumerate(self.d_params):

session.run(v.assign(self.weightsD[i]))

for step in range(self.num_steps):

# update discriminator

x = self.data.sample(self.batch_size)

z = self.gen.sample(self.batch_size)

loss_d, _ = session.run([self.loss_d, self.opt_d], {

self.x: np.reshape(x, (self.batch_size, 1)),

self.z: np.reshape(z, (self.batch_size, 1))

})

- 将训练后的参数共享给判别器Discriminator

z = self.gen.sample(self.batch_size)

loss_g, _ = session.run([self.loss_g, self.opt_g], {

self.z: np.reshape(z, (self.batch_size, 1))

})

if step % self.log_every == 0:

print('{}: {}\t{}'.format(step, loss_d, loss_g))

if step % 100 == 0 or step==0 or step == self.num_steps -1 :

self._plot_distributions(session)

采样以展示生成数据和真实数据的分布

def _samples(self, session, num_points=10000, num_bins=100):

xs = np.linspace(-self.gen.range, self.gen.range, num_points)

bins = np.linspace(-self.gen.range, self.gen.range, num_points)

d = self.data.sample(num_points)

pd, _ = np.histogram(d, bins=bins, density=True)

zs = np.linspace(-self.gen.range, self.gen.range, num_points)

g = np.zeros((num_points, 1))

for i in range(num_points // self.batch_size):

g[self.batch_size * i:self.batch_size * (i + 1)] = session.run(self.G, {

self.z: np.reshape(

zs[self.batch_size * i:self.batch_size * (i + 1)],

(self.batch_size, 1)

)

})

pg, _ = np.histogram(g, bins=bins, density=True)

return pd, pg

可视化

def _plot_distributions(self, session):

pd, pg = self._samples(session)

p_x = np.linspace(-self.gen.range, self.gen.range, len(pd))

f, ax = plt.subplots(1)

ax.set_ylim(0, 1)

plt.plot(p_x, pd, label='Real Data')

plt.plot(p_x, pg, label='Generated Data')

plt.title('GAN Visualization')

plt.xlabel('Value')

plt.ylabel('Probability Density')

plt.legend()

plt.show()

运行主程序

def main(args):

model = GAN(

DataDistribution(),

GeneratorDistribution(range=8),

args.num_steps,

args.batch_size,

args.log_every,

)

model.train()

def parse_args():

parser = argparse.ArgumentParser()

parser.add_argument('--num-steps', type=int, default=1200,

help='the number of training steps to take')

parser.add_argument('--batch-size', type=int, default=12,

help='the batch size')

parser.add_argument('--log-every', type=int, default=10,

help='print loss after this many steps')

parses = parser.parse_args(args=[])#添加args=[]

return parses

if __name__ == '__main__':

main(parse_args())