20221114-20221120工作总结

这里写目录标题

- 一、U2Net提高检测精度

- 二、图像增强

-

- 1.图像增强的目的

- 2.分类

- 3.基于空间域:直接作用于像素点灰度值

-

- 3.1灰度变换

-

- 3.1.1 线性灰度变换

- 3.1.2 分段线性灰度变换

- 3.1.3 非线性变换

- 3.2 直方图修正

-

- 3.2.1 直方图均衡化

- 3.2.2 直方图规定化

- 4.基于照明-反射的同态滤波法

-

- 4.1 原理

- 5. Retinex增强方法

-

- 5.1 Retinex理论

- 5.2 发展历程

- 5.3 SSR算法

-

- 5.3.1 算法原理

- 5.3.2 算法实现

- 5.4 MSR算法

-

- 5.4.1 算法原理

- 结语

-

- 总结

- 目标

- 参考

一、U2Net提高检测精度

通过修改代码,实现用评价指标阈值控制训练次数(训练何时停止),设置阈值如下。

mae_threshold, f1_threshold = 0.04, 0.85 #结束训练的阈值

针对磁瓦缺陷检测数据集进行训练。

Epoch: [1380] [ 0/80] eta: 0:02:19 lr: 0.000310 loss: 0.0066 (0.0066) time: 1.7469 data: 1.4153 max mem: 2102

Epoch: [1380] [10/80] eta: 0:00:22 lr: 0.000309 loss: 0.0113 (0.0266) time: 0.3217 data: 0.1287 max mem: 2102

Epoch: [1380] [20/80] eta: 0:00:15 lr: 0.000309 loss: 0.0522 (0.0263) time: 0.1772 data: 0.0001 max mem: 2102

Epoch: [1380] [30/80] eta: 0:00:11 lr: 0.000308 loss: 0.0241 (0.0297) time: 0.1764 data: 0.0002 max mem: 2102

Epoch: [1380] [40/80] eta: 0:00:08 lr: 0.000307 loss: 0.0142 (0.0271) time: 0.1766 data: 0.0002 max mem: 2102

Epoch: [1380] [50/80] eta: 0:00:06 lr: 0.000307 loss: 0.0138 (0.0292) time: 0.1765 data: 0.0001 max mem: 2102

Epoch: [1380] [60/80] eta: 0:00:04 lr: 0.000306 loss: 0.0185 (0.0292) time: 0.1774 data: 0.0000 max mem: 2102

Epoch: [1380] [70/80] eta: 0:00:01 lr: 0.000306 loss: 0.0531 (0.0307) time: 0.1781 data: 0.0002 max mem: 2102

Epoch: [1380] Total time: 0:00:15

Test: [ 0/44] eta: 0:00:37 time: 0.8410 data: 0.8080 max mem: 2102

Test: Total time: 0:00:02

[epoch: 1380] val_MAE: 0.005 val_maxF1: 0.853

training time 7:34:28

Process finished with exit code 0

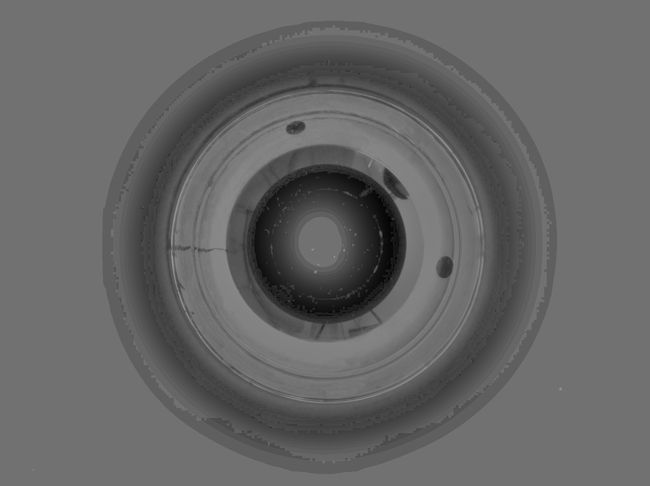

从上到下依次为原图、gt、MAE: 0.007 maxF1: 0.713 、val_MAE: 0.005 val_maxF1: 0.853的结果

数据集扩充:水平翻转、垂直翻转、旋转、横向错切、纵向错切

数据集扩充后

train:1020

test:204

mae_threshold, f1_threshold = 0.04, 0.80 #结束训练的阈值

[epoch: 0] train_loss: 1.9787 lr: 0.000500 MAE: 0.266 maxF1: 0.076

[epoch: 10] train_loss: 0.6683 lr: 0.000998 MAE: 0.073 maxF1: 0.200

[epoch: 20] train_loss: 0.4979 lr: 0.000990 MAE: 0.025 maxF1: 0.314

[epoch: 30] train_loss: 0.2092 lr: 0.000977 MAE: 0.019 maxF1: 0.510

[epoch: 40] train_loss: 0.1087 lr: 0.000958 MAE: 0.008 maxF1: 0.593

[epoch: 50] train_loss: 0.0821 lr: 0.000935 MAE: 0.007 maxF1: 0.734

[epoch: 60] train_loss: 0.0719 lr: 0.000906 MAE: 0.005 maxF1: 0.751

[epoch: 70] train_loss: 0.0751 lr: 0.000873 MAE: 0.005 maxF1: 0.711

[epoch: 80] train_loss: 0.1524 lr: 0.000836 MAE: 0.006 maxF1: 0.751

[epoch: 90] train_loss: 0.0540 lr: 0.000796 MAE: 0.004 maxF1: 0.820

效果一般,很多小缺陷检不出来。

mae_threshold, f1_threshold = 0.04, 0.90 #结束训练的阈值

[epoch: 0] train_loss: 1.9603 lr: 0.000500 MAE: 0.105 maxF1: 0.069

[epoch: 10] train_loss: 0.5043 lr: 0.000998 MAE: 0.022 maxF1: 0.376

[epoch: 20] train_loss: 0.1880 lr: 0.000990 MAE: 0.009 maxF1: 0.581

[epoch: 30] train_loss: 0.1643 lr: 0.000977 MAE: 0.009 maxF1: 0.654

[epoch: 40] train_loss: 0.1356 lr: 0.000958 MAE: 0.006 maxF1: 0.705

[epoch: 50] train_loss: 0.0785 lr: 0.000935 MAE: 0.005 maxF1: 0.767

[epoch: 60] train_loss: 0.0692 lr: 0.000906 MAE: 0.009 maxF1: 0.690

[epoch: 70] train_loss: 0.0568 lr: 0.000873 MAE: 0.005 maxF1: 0.788

[epoch: 80] train_loss: 0.0518 lr: 0.000836 MAE: 0.004 maxF1: 0.820

[epoch: 90] train_loss: 0.0487 lr: 0.000796 MAE: 0.004 maxF1: 0.837

[epoch: 100] train_loss: 0.0478 lr: 0.000752 MAE: 0.004 maxF1: 0.857

[epoch: 110] train_loss: 0.0477 lr: 0.000705 MAE: 0.004 maxF1: 0.852

[epoch: 120] train_loss: 0.0446 lr: 0.000656 MAE: 0.003 maxF1: 0.865

[epoch: 130] train_loss: 0.0452 lr: 0.000605 MAE: 0.003 maxF1: 0.841

[epoch: 140] train_loss: 0.0418 lr: 0.000553 MAE: 0.003 maxF1: 0.864

[epoch: 150] train_loss: 0.0412 lr: 0.000500 MAE: 0.003 maxF1: 0.866

[epoch: 160] train_loss: 0.0403 lr: 0.000447 MAE: 0.003 maxF1: 0.880

[epoch: 170] train_loss: 0.0394 lr: 0.000395 MAE: 0.003 maxF1: 0.885

[epoch: 180] train_loss: 0.0381 lr: 0.000344 MAE: 0.003 maxF1: 0.891

[epoch: 190] train_loss: 0.0368 lr: 0.000295 MAE: 0.003 maxF1: 0.893

[epoch: 200] train_loss: 0.0364 lr: 0.000248 MAE: 0.003 maxF1: 0.893

[epoch: 210] train_loss: 0.0347 lr: 0.000204 MAE: 0.003 maxF1: 0.888

[epoch: 220] train_loss: 0.0341 lr: 0.000164 MAE: 0.002 maxF1: 0.894

[epoch: 230] train_loss: 0.0342 lr: 0.000127 MAE: 0.002 maxF1: 0.898

[epoch: 240] train_loss: 0.0334 lr: 0.000094 MAE: 0.002 maxF1: 0.898

[epoch: 250] train_loss: 0.0329 lr: 0.000065 MAE: 0.002 maxF1: 0.899

[epoch: 260] train_loss: 0.0323 lr: 0.000042 MAE: 0.002 maxF1: 0.896

[epoch: 270] train_loss: 0.0328 lr: 0.000023 MAE: 0.002 maxF1: 0.900

[epoch: 280] train_loss: 0.0325 lr: 0.000010 MAE: 0.002 maxF1: 0.902

mae_threshold, f1_threshold = 0.04, 0.95 #结束训练的阈值

[epoch: 0] train_loss: 1.5706 lr: 0.000500 MAE: 0.205 maxF1: 0.083

[epoch: 10] train_loss: 0.4908 lr: 0.000998 MAE: 0.048 maxF1: 0.249

[epoch: 20] train_loss: 0.2974 lr: 0.000990 MAE: 0.013 maxF1: 0.512

[epoch: 30] train_loss: 0.0992 lr: 0.000977 MAE: 0.008 maxF1: 0.652

[epoch: 40] train_loss: 0.0787 lr: 0.000958 MAE: 0.006 maxF1: 0.728

[epoch: 50] train_loss: 0.1033 lr: 0.000935 MAE: 0.006 maxF1: 0.708

[epoch: 60] train_loss: 0.0613 lr: 0.000906 MAE: 0.004 maxF1: 0.779

[epoch: 70] train_loss: 0.1120 lr: 0.000873 MAE: 0.005 maxF1: 0.789

[epoch: 80] train_loss: 0.0530 lr: 0.000836 MAE: 0.004 maxF1: 0.842

[epoch: 90] train_loss: 0.0505 lr: 0.000796 MAE: 0.004 maxF1: 0.831

[epoch: 100] train_loss: 0.0485 lr: 0.000752 MAE: 0.004 maxF1: 0.838

[epoch: 110] train_loss: 0.0481 lr: 0.000705 MAE: 0.004 maxF1: 0.863

[epoch: 120] train_loss: 0.0463 lr: 0.000656 MAE: 0.004 maxF1: 0.867

[epoch: 130] train_loss: 0.0452 lr: 0.000605 MAE: 0.003 maxF1: 0.870

[epoch: 140] train_loss: 0.0424 lr: 0.000553 MAE: 0.003 maxF1: 0.881

[epoch: 150] train_loss: 0.0423 lr: 0.000500 MAE: 0.003 maxF1: 0.885

[epoch: 160] train_loss: 0.0408 lr: 0.000447 MAE: 0.003 maxF1: 0.884

[epoch: 170] train_loss: 0.0388 lr: 0.000395 MAE: 0.003 maxF1: 0.885

[epoch: 180] train_loss: 0.0375 lr: 0.000344 MAE: 0.003 maxF1: 0.889

[epoch: 190] train_loss: 0.0364 lr: 0.000295 MAE: 0.003 maxF1: 0.893

[epoch: 200] train_loss: 0.0364 lr: 0.000248 MAE: 0.003 maxF1: 0.890

[epoch: 210] train_loss: 0.0346 lr: 0.000204 MAE: 0.002 maxF1: 0.892

[epoch: 220] train_loss: 0.0337 lr: 0.000164 MAE: 0.002 maxF1: 0.892

[epoch: 230] train_loss: 0.0336 lr: 0.000127 MAE: 0.002 maxF1: 0.891

[epoch: 240] train_loss: 0.0329 lr: 0.000094 MAE: 0.002 maxF1: 0.895

[epoch: 250] train_loss: 0.0324 lr: 0.000065 MAE: 0.002 maxF1: 0.896

[epoch: 260] train_loss: 0.0322 lr: 0.000042 MAE: 0.002 maxF1: 0.895

[epoch: 270] train_loss: 0.0322 lr: 0.000023 MAE: 0.002 maxF1: 0.898

[epoch: 280] train_loss: 0.0316 lr: 0.000010 MAE: 0.002 maxF1: 0.896

[epoch: 290] train_loss: 0.0316 lr: 0.000002 MAE: 0.002 maxF1: 0.899

[epoch: 299] train_loss: 0.0315 lr: 0.000000 MAE: 0.002 maxF1: 0.898

[epoch: 300] train_loss: 0.0320 lr: 0.000000 MAE: 0.002 maxF1: 0.898

[epoch: 310] train_loss: 0.0318 lr: 0.000003 MAE: 0.002 maxF1: 0.898

[epoch: 320] train_loss: 0.0314 lr: 0.000012 MAE: 0.002 maxF1: 0.898

[epoch: 330] train_loss: 0.0315 lr: 0.000026 MAE: 0.002 maxF1: 0.899

[epoch: 340] train_loss: 0.0312 lr: 0.000046 MAE: 0.002 maxF1: 0.900

[epoch: 350] train_loss: 0.0320 lr: 0.000071 MAE: 0.002 maxF1: 0.900

[epoch: 360] train_loss: 0.0312 lr: 0.000100 MAE: 0.002 maxF1: 0.900

[epoch: 370] train_loss: 0.0316 lr: 0.000134 MAE: 0.002 maxF1: 0.898

[epoch: 380] train_loss: 0.0317 lr: 0.000171 MAE: 0.002 maxF1: 0.900

[epoch: 390] train_loss: 0.0316 lr: 0.000213 MAE: 0.002 maxF1: 0.897

[epoch: 400] train_loss: 0.0318 lr: 0.000258 MAE: 0.002 maxF1: 0.901

[epoch: 410] train_loss: 0.0321 lr: 0.000305 MAE: 0.002 maxF1: 0.900

[epoch: 420] train_loss: 0.0332 lr: 0.000355 MAE: 0.002 maxF1: 0.902

[epoch: 430] train_loss: 0.0334 lr: 0.000406 MAE: 0.002 maxF1: 0.895

[epoch: 440] train_loss: 0.0330 lr: 0.000458 MAE: 0.002 maxF1: 0.899

[epoch: 450] train_loss: 0.0364 lr: 0.000511 MAE: 0.002 maxF1: 0.899

[epoch: 460] train_loss: 0.0328 lr: 0.000563 MAE: 0.002 maxF1: 0.900

[epoch: 470] train_loss: 0.0340 lr: 0.000615 MAE: 0.002 maxF1: 0.901

[epoch: 480] train_loss: 0.0323 lr: 0.000665 MAE: 0.002 maxF1: 0.903

[epoch: 490] train_loss: 0.0332 lr: 0.000714 MAE: 0.002 maxF1: 0.899

[epoch: 500] train_loss: 0.0333 lr: 0.000761 MAE: 0.002 maxF1: 0.902

[epoch: 510] train_loss: 0.0334 lr: 0.000804 MAE: 0.002 maxF1: 0.906

[epoch: 520] train_loss: 0.0589 lr: 0.000844 MAE: 0.004 maxF1: 0.855

[epoch: 530] train_loss: 0.0324 lr: 0.000880 MAE: 0.002 maxF1: 0.904

[epoch: 540] train_loss: 0.0343 lr: 0.000912 MAE: 0.002 maxF1: 0.902

[epoch: 550] train_loss: 0.0330 lr: 0.000940 MAE: 0.002 maxF1: 0.900

[epoch: 560] train_loss: 0.0323 lr: 0.000962 MAE: 0.002 maxF1: 0.904

[epoch: 570] train_loss: 0.0310 lr: 0.000980 MAE: 0.002 maxF1: 0.902

[epoch: 580] train_loss: 0.0318 lr: 0.000992 MAE: 0.002 maxF1: 0.907

[epoch: 590] train_loss: 0.0317 lr: 0.000999 MAE: 0.002 maxF1: 0.908

[epoch: 600] train_loss: 0.0316 lr: 0.001000 MAE: 0.002 maxF1: 0.909

[epoch: 610] train_loss: 0.0340 lr: 0.000995 MAE: 0.002 maxF1: 0.904

[epoch: 620] train_loss: 0.0312 lr: 0.000985 MAE: 0.002 maxF1: 0.907

[epoch: 630] train_loss: 0.0297 lr: 0.000970 MAE: 0.002 maxF1: 0.910

[epoch: 640] train_loss: 0.0293 lr: 0.000949 MAE: 0.002 maxF1: 0.916

[epoch: 650] train_loss: 0.0291 lr: 0.000924 MAE: 0.002 maxF1: 0.921

[epoch: 660] train_loss: 0.0281 lr: 0.000894 MAE: 0.002 maxF1: 0.919

[epoch: 670] train_loss: 0.0285 lr: 0.000859 MAE: 0.002 maxF1: 0.925

[epoch: 680] train_loss: 0.0274 lr: 0.000820 MAE: 0.002 maxF1: 0.928

[epoch: 690] train_loss: 0.0277 lr: 0.000778 MAE: 0.002 maxF1: 0.926

[epoch: 700] train_loss: 0.0265 lr: 0.000733 MAE: 0.001 maxF1: 0.928

[epoch: 710] train_loss: 0.0268 lr: 0.000685 MAE: 0.001 maxF1: 0.929

[epoch: 720] train_loss: 0.0264 lr: 0.000635 MAE: 0.001 maxF1: 0.930

[epoch: 730] train_loss: 0.0253 lr: 0.000584 MAE: 0.001 maxF1: 0.930

[epoch: 740] train_loss: 0.0252 lr: 0.000532 MAE: 0.001 maxF1: 0.939

[epoch: 750] train_loss: 0.0252 lr: 0.000479 MAE: 0.001 maxF1: 0.934

[epoch: 760] train_loss: 0.0241 lr: 0.000426 MAE: 0.001 maxF1: 0.942

[epoch: 770] train_loss: 0.0246 lr: 0.000375 MAE: 0.001 maxF1: 0.942

[epoch: 780] train_loss: 0.0239 lr: 0.000325 MAE: 0.001 maxF1: 0.944

[epoch: 790] train_loss: 0.0237 lr: 0.000276 MAE: 0.001 maxF1: 0.944

[epoch: 800] train_loss: 0.0232 lr: 0.000230 MAE: 0.001 maxF1: 0.945

[epoch: 810] train_loss: 0.0233 lr: 0.000188 MAE: 0.001 maxF1: 0.948

[epoch: 820] train_loss: 0.0231 lr: 0.000148 MAE: 0.001 maxF1: 0.948

[epoch: 830] train_loss: 0.0230 lr: 0.000113 MAE: 0.001 maxF1: 0.947

[epoch: 840] train_loss: 0.0228 lr: 0.000082 MAE: 0.001 maxF1: 0.948

[epoch: 850] train_loss: 0.0231 lr: 0.000055 MAE: 0.001 maxF1: 0.948

[epoch: 860] train_loss: 0.0227 lr: 0.000034 MAE: 0.001 maxF1: 0.948

[epoch: 870] train_loss: 0.0227 lr: 0.000017 MAE: 0.001 maxF1: 0.949

[epoch: 880] train_loss: 0.0229 lr: 0.000006 MAE: 0.001 maxF1: 0.948

[epoch: 890] train_loss: 0.0228 lr: 0.000001 MAE: 0.001 maxF1: 0.949

[epoch: 900] train_loss: 0.0230 lr: 0.000001 MAE: 0.001 maxF1: 0.949

[epoch: 910] train_loss: 0.0228 lr: 0.000006 MAE: 0.001 maxF1: 0.949

[epoch: 920] train_loss: 0.0227 lr: 0.000017 MAE: 0.001 maxF1: 0.950

[epoch: 930] train_loss: 0.0226 lr: 0.000034 MAE: 0.001 maxF1: 0.949

[epoch: 940] train_loss: 0.0225 lr: 0.000055 MAE: 0.001 maxF1: 0.950

二、图像增强

1.图像增强的目的

一、为了改善图像的视觉效果,提高图像的清晰度;

二、针对给定图像的应用场合,突出某些感兴趣的特征,抑制不感兴趣的特征,以扩大图像中不同物体特征之间的差别,满足某些特殊分析的需要。

2.分类

基于空间域和基于频率域

3.基于空间域:直接作用于像素点灰度值

基于空间域的增强算法包括点运算和局部运算

点运算又包括灰度变换和直方图修正

直方图修正包括均衡化和规定化

3.1灰度变换

灰度变换主要针对独立的像素点进行处理,由输入像素点的灰度值决定相应的输出像素点的灰度值,通过改变原始图像数据所占的灰度范围而使图像在视觉上得到改善。

3.1.1 线性灰度变换

通过线性规则从原图到增强图做映射,将灰度范围拓展或压缩。

假设一幅图像f(x,y)变换前的的灰度范围是[a,b],希望变换后g(x,y)灰度范围拓展或者压缩至[c,d]。

原理图:

公式:

通过调整a,b,c,d,的值可以控制线性变换函数的斜率,从而达到灰度范围的拓展或压缩。

3.1.2 分段线性灰度变换

突出感兴趣的灰度范围

如下图所示:突出中间灰度值的灰度范围,达到增强效果

3.1.3 非线性变换

对数变换

暗区拉伸,亮区压缩

指数变换

暗区压缩,亮区拉伸

伽马变换

c=1时:

γ<1 暗的部分增强,图片整体变亮

γ=1 不变

γ>1 亮的部分被抑制,图片整体变暗

3.2 直方图修正

3.2.1 直方图均衡化

直方图均衡化的意思就是对灰度图像进行灰度变换,使其变换后的灰度概率密度函数恒为1(灰度值归一化到0~1)。

设原图灰度值为r,均衡化后的灰度值为s,0

这里省略推到过程,直接给出T(r)的格式,pr表示原图的灰度值概率密度函数:

由于灰度值是离散的,在进行变换时使用上式的离散格式:

nj表示灰度值为j的像素点个数,n表示所有像素点个数。

注:离散化使得直方图均衡化得到的直方图并不是严格均衡化的(概率密度不是严格为1)。

3.2.2 直方图规定化

所谓“规定”,就是对变换后的图像的灰度值概率密度函数进行规定,即将图像变换为符合给定概率密度函数的图像,而不仅限于目标图像的概率密度为1(均衡化)。

直方图规定化求解思路

对原图r和目标概率密度的图z都进行均衡化,即:

有s=v(这点很关键,均衡化后的结果肯定是相等的)

所以有:

4.基于照明-反射的同态滤波法

4.1 原理

同态滤波通过对数运算将图像的照明部分和反射部分分开,通常来讲,图像的照明部分是慢变的部分,集中在低频部分,而反射部分属于快变部分,倾向于集中在高频部分,可以根据这个性质,对图像进行频域滤波

其中

f(x,y)为图像(看或者拍摄到的);

i(x,y)为照射分量,描述景物照明,变化缓慢,属于低频分量;

r(x,y)为反射分量,描述景物细节,变化较快,属于高频分量。

观察到在原图中低频分量和高频分量是乘积关系,无法将我们所需要的高频分量(细节、纹理信息)提取出来,所以要先对f(x,y)取对数,再滤波。(这就是同态滤波的基本思想)

为了避免f(x,y)取0时对数无意义,一般有:

![]()

可以看到此时低频分量i和高频分量r已经可以通过滤波器区分开来了。

再进行傅里叶变换:

![]()

滤波:

再将滤波后的频域信号变为空域信号(傅里叶反变换,反对数运算):

我们的目的是增强图像高频的细节纹理信息,因此滤波器H(u,v)是高通的,其形式为:

同态滤波法存在以下问题:

- 局部特点描述能力差;

- 空域频域相互转换费时。

针对局部特点描述能力差的问题可以将傅里叶变换改为小波变换;针对转换处理域费时的问题提出了一种基于空域的同态滤波法,其基本思路为:对数变换——>低通滤波——>估计照明分量(难点)——>对照明分量和反射分量分别进行处理。

5. Retinex增强方法

5.1 Retinex理论

数学模型

Retinex理论将一张图片分解为照射分量和反射分量相乘(同4.1)。

由Retinex引发的现有图像增强技术的基本思路:

- 直接将反射分量作为增强结果

反射分量往往会损失一部分信息,图像会变得非常不真实 - 对亮度信息进行处理,再与反射分量重新组合(主流)

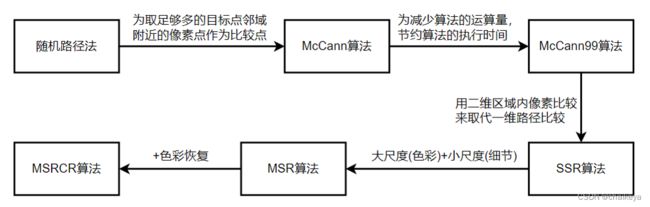

5.2 发展历程

- 基于迭代的Retinex算法

- Frankle-McCann Retinex 算法

- McCann99 Retinex 算法

- 基于中心环绕的Retinex算法

- SSR算法(单尺度)

- MSR算法(多尺度)

- MSRCR算法

5.3 SSR算法

5.3.1 算法原理

算法流程

一般把照射图像假设为空间平滑图像,若原始图像为S(x,y),增强(反射)图像为R(x,y),光照入射分量为L(x,y),可以得到SSR算法公式:

- 标准差σ是尺度参数,σ的大小对图像增强的效果有着显著的影响。当σ较小,也就是小尺度情况下,能够保持较好的边缘细节,动态范围压缩大,但是色彩较差;当σ较大时,也就是大尺度情况下,能够保持较好的色彩平衡,但是图像动态范围压缩小,细节较差。在SSR算法中σ一般取80-100。

SSR的算法可以概括为以下几步:

- 读取原图像数据S(x,y),并将整形转换为double型;

- 确定尺度参数σ的大小,确定满足条件∬G(x,y)dxdy=1的λ值;

- 根据公式r(x,y)=logR(x,y)=logS(x,y)-log[G(x,y)*S(x,y)]求r(x,y)的值;将r(x,y)值由对数域转换到实数域R(x,y);

- 对R(x,y)进行线性拉伸处理并输出显示。

对于灰度图像来说,直接对灰度值做上述步骤处理即可。对于彩色图像来说,在这里同样可以将图像分解为R、G、B三幅灰度图分别进行以上步骤处理再合成彩色图像。

5.3.2 算法实现

import cv2

import numpy as np

#np.nonzero函数是numpy中用于得到数组array中非零元素的位置(数组索引)的函数。

def replaceZeroes(data):

min_nonzero = min(data[np.nonzero(data)])

data[data == 0] = min_nonzero

return data

# retinex SSR

def SSR(src_img, size):

L_blur = cv2.GaussianBlur(src_img, (size, size), 80)

img = replaceZeroes(src_img)

L_blur = replaceZeroes(L_blur)

dst_Img = cv2.log(img / 255.0)

dst_Lblur = cv2.log(L_blur / 255.0)

log_R = cv2.subtract(dst_Img, dst_Lblur)

dst_R = cv2.normalize(log_R, None, 0, 255, cv2.NORM_MINMAX)

log_uint8 = cv2.convertScaleAbs(dst_R)

return log_uint8

def SSR_image(image):

size = 0

b_gray, g_gray, r_gray = cv2.split(image)

b_gray = SSR(b_gray, size)

g_gray = SSR(g_gray, size)

r_gray = SSR(r_gray, size)

result = cv2.merge([b_gray, g_gray, r_gray])

return result

def cv_show(name,img):

cv2.imshow(name,img)

cv2.waitKey(0)

cv2.destroyAllWindows()

if __name__ == "__main__":

image = cv2.imread('./SSR/5.jpg')

image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

image_ssr = SSR_image(image)

cv2.imwrite('5.png',image_ssr)

5.4 MSR算法

5.4.1 算法原理

采用几个 (一般3个) 大小不同的尺度参数σ对图像分别进行滤波后再线性加权归一化得到最终增强结果。

一般K=3,qi=1/3。

3尺度MSR算法流程

- 读取原图像数据S(x,y),并将整型转换为double型;

- 分别确定3个尺寸σ1、σ2、σ3的大小,确定满足条件∫∫G(x,y)dxdy=1的λ1、λ2、λ3的值;

- 根据公式求r(x,y);

- 将r(x,y)从从对数域转换到实数域R(x,y);

- 对R(x,y)做线性拉伸处理,并输出显示。

对于灰度图像来说,直接对灰度值做上述步骤处理即可。对于彩色图像来说,在这里同样可以将图像分解为R、G、B三幅灰度图分别进行以上步骤处理再合成彩色图像。

结语

总结

- 修改和调试U2Net网络,显著提高了训练精度和速度;

- 对几种增强算法的理论进行整理,python实现了SSR算法。

目标

- 代码实现同态滤波、MSR和梯度域图像增强法;

- 对有关增强算法的改进和应用的文献进行阅读和整理。

参考

[1] https://zhuanlan.zhihu.com/p/332905808

[2] 梁琳,何卫平,雷蕾,张维,王红霄.光照不均图像增强方法综述[J].计算机应用研究,2010,27(05):1625-1628.

[3] https://blog.csdn.net/qq_42666791/article/details/123213215

[4] https://blog.csdn.net/qq_39751352/article/details/124653791