[Machine Learning] Handwritten Digit Recognition on the MNIST Dataset

Contents

- Challenge 1: Create a training-sample batch generator

- Challenge 2: Create a convolutional neural network

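Notes on the machine learning assignment published on EduCoder (头歌). The dataReader function has been updated! Remember that it must end with `return batch_reader`, without parentheses; otherwise you will get an `'XXX' object is not callable` error.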
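To see why the parentheses matter, here is a minimal sketch in which a hypothetical `make_reader` function stands in for dataReader and a plain list stands in for the (image, label) pairs. The outer function returns the inner generator function itself, so every call to it starts a fresh pass over the data; returning `batch_reader()` would instead hand back a single generator object, and a caller that then tries to call it gets the "not callable" error.

```python
def make_reader(data, batch_size):
    """Hypothetical stand-in for dataReader: returns a reader *function*."""
    def batch_reader():
        # Each call creates a new generator that walks the data from the start
        for start in range(0, len(data), batch_size):
            yield data[start:start + batch_size]
    return batch_reader  # the function object, no parentheses

reader = make_reader(list(range(5)), 2)
print(list(reader()))  # [[0, 1], [2, 3], [4]]
print(list(reader()))  # a second pass works too: [[0, 1], [2, 3], [4]]

# Returning batch_reader() would give the caller a generator object instead
gen = make_reader(list(range(5)), 2)()
try:
    gen()
except TypeError as err:
    print(err)  # 'generator' object is not callable
```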

Challenge 1: Create a training-sample batch generator

Task description:

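Complete the function: following the task's generator-creation code, supply batches of samples to the classifier, where each sample is an (image, label) pair.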

1️⃣ Input: `def dataReader(imgfile, labelfile, batch_size, drop_last)`

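- imgfile: the IDX image file;
- labelfile: the IDX label file, with labels in the same order as the images;
- batch_size: the number of samples in each batch produced by the generator;
- drop_last: whether to discard the leftover samples that do not fill a complete batch.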

2️⃣ Output: lists of length batch_size whose elements are (image, label) pairs.

In the form: [(image1, label1), (image2, label2), (image3, label3), …]

❗ Note: during training, the sample order must be shuffled so that samples are drawn at random.

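Each image element in the list is a 28×28 matrix, and each label element is a scalar. With the readMatrix function used here, these come out as a NumPy `ndarray` (dtype `uint8`) and a NumPy `uint8` scalar respectively.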
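The shuffle-then-batch behavior and the meaning of drop_last can be checked on a toy list. This is a minimal sketch, assuming a hypothetical helper `make_batches` and small tuples as stand-ins for the (image, label) pairs: with 10 samples and a batch size of 4 there are two full batches plus a leftover of 2, and drop_last decides whether that leftover is kept.

```python
import random

def make_batches(pairs, batch_size, drop_last, seed=None):
    """Hypothetical helper: shuffle a copy of pairs and split it into batches."""
    pairs = list(pairs)
    random.Random(seed).shuffle(pairs)  # random order, as the task requires
    full = len(pairs) // batch_size     # number of complete batches
    batches = [pairs[i * batch_size:(i + 1) * batch_size] for i in range(full)]
    # Keep the incomplete final batch only when drop_last is False
    if not drop_last and len(pairs) % batch_size != 0:
        batches.append(pairs[full * batch_size:])
    return batches

pairs = [(i, i % 10) for i in range(10)]  # stand-ins for (image, label)
kept = make_batches(pairs, 4, drop_last=False, seed=0)
dropped = make_batches(pairs, 4, drop_last=True, seed=0)
print([len(b) for b in kept])     # [4, 4, 2]
print([len(b) for b in dropped])  # [4, 4]
```

Using the same seed for both calls makes the two shuffles identical, so the only difference is whether the final partial batch survives.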

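```python
######## 1. Load the data
import struct, random
import numpy as np

# IDX data-type codes mapped to struct / NumPy type characters
code2type = {0x08: 'B', 0x09: 'b', 0x0B: 'h', 0x0C: 'i', 0x0D: 'f', 0x0E: 'd'}

def readMatrix(filename):
    with open(filename, 'rb') as f:
        buff = f.read()
    offset = 0
    # Magic number: '>' = big-endian, H = 2-byte unsigned (always 0),
    # B = 1-byte data-type code, B = 1-byte number of dimensions
    fmt = '>HBB'
    _, dcode, dimslen = struct.unpack_from(fmt, buff, offset)
    offset += struct.calcsize(fmt)
    # One big-endian 4-byte unsigned integer (I) per dimension
    fmt = '>{}I'.format(dimslen)
    shapes = struct.unpack_from(fmt, buff, offset)
    offset += struct.calcsize(fmt)
    # The data itself: prod(shapes) values of the type given by dcode
    fmt = '>' + str(int(np.prod(shapes))) + code2type[dcode]
    matrix = struct.unpack_from(fmt, buff, offset)
    matrix = np.reshape(matrix, shapes).astype(code2type[dcode])
    return matrix

def dataReader(imgfile, labelfile, batch_size, drop_last):
    images = readMatrix(imgfile)
    labels = readMatrix(labelfile)
    buff = list(zip(images, labels))    # [(28x28 image, label), ...]
    batchnum = len(buff) // batch_size  # number of complete batches

    def batch_reader():
        random.shuffle(buff)  # reshuffle on every pass over the data
        for i in range(batchnum):
            yield buff[i * batch_size:(i + 1) * batch_size]
        # Yield the leftover partial batch only when it is NOT being dropped
        if not drop_last and len(buff) % batch_size != 0:
            yield buff[batchnum * batch_size:]

    return batch_reader  # return the function itself, without ()
```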
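The header layout that readMatrix parses can be verified on a tiny synthetic IDX file built in memory. The magic number is two zero bytes, a type code (0x08 = unsigned byte, as in the MNIST files), and the number of dimensions, followed by one big-endian 32-bit size per dimension; the real MNIST magic numbers are 0x00000803 for the image files (3 dimensions) and 0x00000801 for the label files (1 dimension). This sketch uses only the standard library, and `parse_idx` is a hypothetical name that returns the shape plus a flat tuple rather than a NumPy array.

```python
import struct

def parse_idx(buff):
    """Hypothetical stdlib-only IDX parser: returns (shape, flat values)."""
    code2type = {0x08: 'B', 0x09: 'b', 0x0B: 'h', 0x0C: 'i', 0x0D: 'f', 0x0E: 'd'}
    zero, dcode, ndims = struct.unpack_from('>HBB', buff, 0)
    assert zero == 0, 'IDX files start with two zero bytes'
    shape = struct.unpack_from('>{}I'.format(ndims), buff, 4)
    count = 1
    for dim in shape:
        count *= dim
    offset = 4 + 4 * ndims  # magic number + one 4-byte size per dimension
    values = struct.unpack_from('>{}{}'.format(count, code2type[dcode]), buff, offset)
    return shape, values

# A fake "image file": 2 images of 2x3 unsigned bytes (type code 0x08, 3 dims)
header = struct.pack('>HBB', 0, 0x08, 3) + struct.pack('>3I', 2, 2, 3)
data = bytes(range(12))
shape, values = parse_idx(header + data)
print(shape)       # (2, 2, 3)
print(values[:4])  # (0, 1, 2, 3)
```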

Challenge 2: Create a convolutional neural network

Task description:

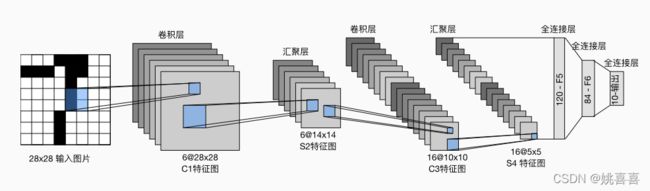

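Use the PyTorch framework to build a convolutional network for handwritten digit recognition, creating the convolution + fully connected + classifier network shown in the figure. You can try different activation functions; use pooling layers for downsampling, and check the prediction results after running 300 batches of samples. The network structure is as follows: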
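The `16*5*5` input size of `fc1` in MnistNet follows from the standard output-size formula for convolution and pooling layers, out = floor((in + 2·padding − kernel) / stride) + 1. A short standard-library check, with a hypothetical helper `out_size`, traces a 28×28 input through the layers:

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

s = 28
s = out_size(s, kernel=5, stride=1, padding=2)  # conv1: 28 -> 28
s = out_size(s, kernel=2, stride=2)             # pool:  28 -> 14
s = out_size(s, kernel=5)                       # conv2: 14 -> 10
s = out_size(s, kernel=2, stride=2)             # pool:  10 -> 5
print(s, 16 * s * s)  # 5 400
```

With 16 output channels from conv2, the flattened feature vector therefore has 400 elements, which is what `x.view(-1, 16*5*5)` relies on.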

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from loader import dataReader  # the dataReader from Challenge 1

######### 2. Define the convolutional neural network
class MnistNet(nn.Module):
    def __init__(self):
        super(MnistNet, self).__init__()
        # 1x28x28 -> 6x28x28 (padding=2 keeps the spatial size)
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5, stride=1, padding=2)
        # 2x2 max pooling halves height and width
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
        # 6x14x14 -> 16x10x10
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)  # 10 classes: digits 0-9

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # -> 6x14x14
        x = self.pool(F.relu(self.conv2(x)))  # -> 16x5x5
        x = x.view(-1, 16 * 5 * 5)  # view works like NumPy's reshape; -1 means "infer this dimension"
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)  # raw logits; CrossEntropyLoss applies log-softmax internally
        return x

# 3. Train the network
def train(loader):
    model = MnistNet()
    # Categorical cross-entropy loss and SGD with momentum
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

    for epoch in range(1):
        running_loss = 0.0
        for i, data in enumerate(loader()):  # loader is a function; call it to get a generator
            inputs, labels = zip(*data)
            inputs = np.array(inputs).astype('float32') / 255.0  # scale pixels to [0, 1]
            labels = np.array(labels).astype('int64')
            inputs = torch.from_numpy(inputs).unsqueeze(1)  # add the channel dimension: NCHW
            labels = torch.from_numpy(labels)

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            if i % 100 == 99:
                last_loss = running_loss / 100  # average loss per batch over the last 100 batches
                print(' batch {} loss: {}'.format(i + 1, last_loss))
                running_loss = 0.0
            if i == 199:  # stop after 200 batches
                break

    print('Finished Training')
    return model

# 4. Test the network
def test(PATH, loader):
    # Reload the saved model
    model = MnistNet()
    model.load_state_dict(torch.load(PATH))
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for data in loader():
            images, labels = zip(*data)
            images = np.array(images).astype('float32') / 255.0  # same scaling as in training
            labels = np.array(labels).astype('int64')
            images = torch.from_numpy(images).unsqueeze(1)
            labels = torch.from_numpy(labels)
            outputs = model(images)
            _, predicted = torch.max(outputs, 1)  # index of the largest logit, like torch.argmax(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    print('Accuracy of the network on the {:d} test images: {:f}%'.format(total, 100 * correct / total))
    return model

if __name__ == '__main__':
    BATCH_SIZE = 16
    PATH = '/data/workspace/myshixun/mnist/model/mnist_model.pth'
    train_loader = dataReader('/data/workspace/myshixun/mnist/data/train-images-idx3-ubyte', '/data/workspace/myshixun/mnist/data/train-labels-idx1-ubyte', BATCH_SIZE, True)
    test_loader = dataReader('/data/workspace/myshixun/mnist/data/t10k-images-idx3-ubyte', '/data/workspace/myshixun/mnist/data/t10k-labels-idx1-ubyte', BATCH_SIZE, False)
    model = train(train_loader)
    # Quickly save the trained weights so test() can reload them
    torch.save(model.state_dict(), PATH)
    test(PATH, test_loader)
```
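`nn.CrossEntropyLoss` takes the raw logits returned by `forward` and combines log-softmax with negative log-likelihood, averaging over the batch by default; the test loop then counts a prediction as correct when the index of the largest logit equals the label. A pure-Python sketch of both computations on made-up logits (not outputs of the trained model); `cross_entropy` and `accuracy` are hypothetical helper names:

```python
import math

def cross_entropy(logits, label):
    """-log softmax(logits)[label], computed stably by subtracting the max."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]

def accuracy(batch_logits, labels):
    """Fraction of rows whose argmax equals the label (like torch.max(outputs, 1))."""
    predicted = [max(range(len(row)), key=row.__getitem__) for row in batch_logits]
    correct = sum(p == y for p, y in zip(predicted, labels))
    return correct / len(labels)

# Made-up logits for a batch of 3 samples and 3 classes
batch_logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0], [1.0, 1.5, 0.0]]
labels = [0, 2, 0]
losses = [cross_entropy(row, y) for row, y in zip(batch_logits, labels)]
mean_loss = sum(losses) / len(losses)  # matches CrossEntropyLoss's default 'mean' reduction
print(mean_loss)
print(accuracy(batch_logits, labels))  # 0.6666666666666666
```

With equal logits over 10 classes the loss is log(10) ≈ 2.30, which is a useful reference point when reading the batch losses printed during training.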

Feel free to point out any problems in my code; let's discuss and learn together!