BiLSTM-CRF学习笔记

LSTM和GRU https://blog.csdn.net/IOT_victor/article/details/88934316

1、模型详解

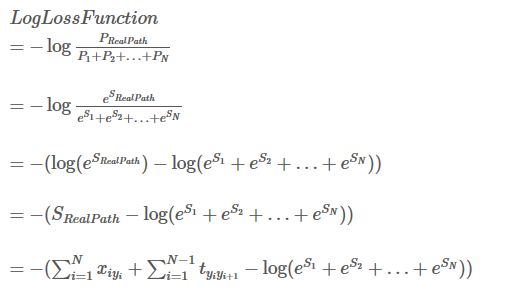

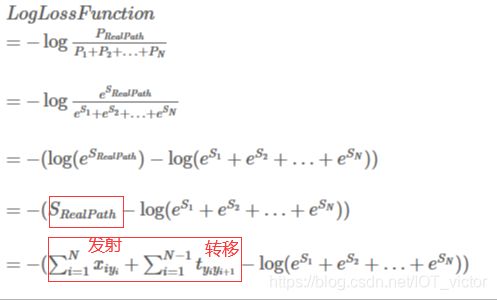

路径分数=发射分数+转移分数

CRF损失函数:p = 真实路径分数(最高)/ 所有路径的总分数。求-logp

真实路径的分数应该是所有路径中分数最高的。使得真实路径所占的比值越来越大。

bilstm-CRF理论 https://zhuanlan.zhihu.com/p/44042528

- BIO体系(约束条件)句子的开头应该是“B-”或“O”,而不是“I-”。

- “B-label1 I-label2 I-label3…”,在该模式中,类别1,2,3应该是同一种实体类别。比如,“B-Person I-Person” 是正确的,而“B-Person I-Organization”则是错误的。

- “O I-label”是错误的,命名实体的开头应该是“B-”而不是“I-”

CRF和HMM

https://www.jianshu.com/p/55755fc649b1

1.HMM是生成模型,CRF是判别模型 2.HMM是概率有向图,CRF是概率无向图

3.HMM求解过程可能是局部最优,CRF可以全局最优 4.CRF概率归一化较合理,HMM则会导致label bias 问题

判别vs生成

在机器学习中任务是从属性X预测标记Y,从本质上来说:

- 判别模型之所以称为“判别”模型,是因为其根据X“判别”Y;求的是后验概率P(Y|X)

- 生成模型之所以称为“生成”模型,是因为其预测的根据是联合概率P(X,Y),而联合概率可以理解为“生成”(X,Y)样本的概率分布(或称为 依据);具体来说,机器学习已知X,从Y的候选集合中选出一个来,可能的样本有(X,Y_1), (X,Y_2), (X,Y_3),…,(X,Y_n),实际数据是如何“生成”的依赖于P(X,Y),那么最后的预测结果选哪一个Y呢?那就选“生成”概率最大的那个吧。

2、代码

(TF):https://zhuanlan.zhihu.com/p/47722475

https://github.com/Determined22/zh-NER-TF

(torch): https://github.com/luopeixiang/named_entity_recognition

# embedding层

def embedding_layer(self, char_inputs):

u"""

:param char_inputs: one-hot encoding of sentence

:return: [1, num_steps, embedding size],

"""

with tf.variable_scope(u"char_embedding"):

embed = tf.nn.embedding_lookup(self.word_embedding, char_inputs)

return embed# bilstm层

def bilstm_layer(self, lstm_inputs, lstm_dim, lengths):

"""

:param lstm_inputs: [batch_size, num_steps, emb_size]

:return: [batch_size, num_steps, 2*lstm_dim]

"""

with tf.variable_scope(u"BiLSTM"):

lstm_cell_forward = tf.nn.rnn_cell.LSTMCell(lstm_dim,

use_peepholes=True,

initializer=self.initializer,

state_is_tuple=True)

lstm_cell_backward = tf.nn.rnn_cell.LSTMCell(lstm_dim,

use_peepholes=True,

initializer=self.initializer,

state_is_tuple=True)

(output_fw_seq, output_bw_seq), final_states = tf.nn.bidirectional_dynamic_rnn(

lstm_cell_forward,

lstm_cell_backward,

lstm_inputs,

dtype=tf.float32,

sequence_length=lengths)

return tf.concat([output_fw_seq, output_bw_seq], axis=2) # 将每一步的输出concat一起作为特征;注分类任务用final_states# 全连接层

def project_layer(self, lstm_outputs):

u"""

hidden layer between lstm layer and logits

:param lstm_outputs: [batch_size, num_steps, emb_size]

:return: [batch_size, num_steps, num_tags]

"""

with tf.variable_scope(u"project"):

with tf.variable_scope(u"hidden"):

W = tf.get_variable(u"W",

shape=[self.lstm_dim*2, self.hidden_dim],

dtype=tf.float32,

initializer=self.initializer)

b = tf.get_variable(u"b",

shape=[self.hidden_dim],

dtype=tf.float32,

initializer=tf.zeros_initializer())

output = tf.reshape(lstm_outputs, shape=[-1, self.lstm_dim*2])

hidden = tf.tanh(tf.nn.xw_plus_b(output, W, b))

# project to score of tags

with tf.variable_scope(u"logits"):

W = tf.get_variable(u"W",

shape=[self.hidden_dim, self.tags_num],

dtype=tf.float32,

initializer=self.initializer)

b = tf.get_variable(u"b",

shape=[self.tags_num],

dtype=tf.float32,

initializer=tf.zeros_initializer())

pred = tf.nn.xw_plus_b(hidden, W, b)

logit = tf.reshape(pred, [-1, self.num_steps, self.tags_num])

return logit全流程和计算loss crf_log_likelihood

# 全流程搭建

def inference(self, char_inputs):

embedding = self.embedding_layer(char_inputs)

# apply dropout before feed to lstm layer

model_inputs = tf.nn.dropout(embedding, self.dropout)

# bi-directional lstm layer

model_outputs = self.bilstm_layer(model_inputs, self.lstm_dim, self.lengths)

# logits for tags

logits = self.project_layer(model_outputs)

return logits

# 计算loss

def loss_layer(self, logits):

log_likelihood, transition_params = crf_log_likelihood(inputs=logits,

tag_indices=self.targets,

sequence_lengths=self.lengths)

loss = -tf.reduce_mean(log_likelihood)

return loss, transition_params解码:预测最佳路径 viterbi_decode

def decode(self, logits, lengths, transition_params):

u"""

:param logits: [batch_size, num_steps, num_tags]float32, logits

:param lengths: [batch_size]int32, real length of each sequence

:param transition_params: transaction matrix for inference

:return:

"""

# inference final labels usa viterbi Algorithm

label_list = []

for logit, seq_len in zip(logits, lengths):

viterbi_seq, _ = viterbi_decode(logit[:seq_len], transition_params) # 维特比解码

label_list.append(viterbi_seq)

return label_list # 预测最优路径evaluate

def evaluate_line(self, sess, inputs, id_to_tag): # 单条数据

trans = self.transition_params.eval(sess)

lengths, scores = self.run_step(sess, False, inputs)

batch_paths = self.decode(scores, lengths, trans) # 维特比解码得到最优路径

tags = [id_to_tag[int(idx)] for idx in batch_paths[0]] # 只能做预测,不知道真实标签

return tags代码2:https://github.com/Determined22/zh-NER-TF

embedding

def lookup_layer_op(self):

with tf.variable_scope("words"):

_word_embeddings = tf.Variable(self.embeddings,

dtype=tf.float32,

trainable=self.update_embedding,

name="_word_embeddings")

word_embeddings = tf.nn.embedding_lookup(params=_word_embeddings,

ids=self.word_ids,

name="word_embeddings")

self.word_embeddings = tf.nn.dropout(word_embeddings, self.dropout_pl)bilstm+project

def biLSTM_layer_op(self):

with tf.variable_scope("bi-lstm"):

cell_fw = LSTMCell(self.hidden_dim)

cell_bw = LSTMCell(self.hidden_dim)

(output_fw_seq, output_bw_seq), _ = tf.nn.bidirectional_dynamic_rnn(

cell_fw=cell_fw,

cell_bw=cell_bw,

inputs=self.word_embeddings,

sequence_length=self.sequence_lengths,

dtype=tf.float32)

output = tf.concat([output_fw_seq, output_bw_seq], axis=-1)

output = tf.nn.dropout(output, self.dropout_pl)

with tf.variable_scope("proj"):

W = tf.get_variable(name="W",

shape=[2 * self.hidden_dim, self.num_tags],

initializer=tf.contrib.layers.xavier_initializer(),

dtype=tf.float32)

b = tf.get_variable(name="b",

shape=[self.num_tags],

initializer=tf.zeros_initializer(),

dtype=tf.float32)

s = tf.shape(output)

output = tf.reshape(output, [-1, 2*self.hidden_dim])

pred = tf.matmul(output, W) + b

self.logits = tf.reshape(pred, [-1, s[1], self.num_tags])loss+op

def loss_op(self):

if self.CRF:

log_likelihood, self.transition_params = crf_log_likelihood(inputs=self.logits,

tag_indices=self.labels,

sequence_lengths=self.sequence_lengths)

self.loss = -tf.reduce_mean(log_likelihood)

else:

losses = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=self.logits,

labels=self.labels)

mask = tf.sequence_mask(self.sequence_lengths)

losses = tf.boolean_mask(losses, mask)

self.loss = tf.reduce_mean(losses)

tf.summary.scalar("loss", self.loss)

def softmax_pred_op(self):

if not self.CRF:

self.labels_softmax_ = tf.argmax(self.logits, axis=-1)

self.labels_softmax_ = tf.cast(self.labels_softmax_, tf.int32)

def trainstep_op(self):

with tf.variable_scope("train_step"):

self.global_step = tf.Variable(0, name="global_step", trainable=False)

if self.optimizer == 'Adam':

optim = tf.train.AdamOptimizer(learning_rate=self.lr_pl)

elif self.optimizer == 'Adadelta':

optim = tf.train.AdadeltaOptimizer(learning_rate=self.lr_pl)

elif self.optimizer == 'Adagrad':

optim = tf.train.AdagradOptimizer(learning_rate=self.lr_pl)

elif self.optimizer == 'RMSProp':

optim = tf.train.RMSPropOptimizer(learning_rate=self.lr_pl)

elif self.optimizer == 'Momentum':

optim = tf.train.MomentumOptimizer(learning_rate=self.lr_pl, momentum=0.9)

elif self.optimizer == 'SGD':

optim = tf.train.GradientDescentOptimizer(learning_rate=self.lr_pl)

else:

optim = tf.train.GradientDescentOptimizer(learning_rate=self.lr_pl)

grads_and_vars = optim.compute_gradients(self.loss)

grads_and_vars_clip = [[tf.clip_by_value(g, -self.clip_grad, self.clip_grad), v] for g, v in grads_and_vars]

self.train_op = optim.apply_gradients(grads_and_vars_clip, global_step=self.global_step)

def init_op(self):

self.init_op = tf.global_variables_initializer()