Visualizing Feature Maps in Convolutional Neural Networks and Why It Matters

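Contents

- Feature map visualization methods

  - 1. tensor -> numpy -> plt.savefig

  - 2. Capturing feature maps with register_forward_hook

  - 3. Deconvolution-based visualization

- Why feature map visualization matters

  - 1. Improving the network architecture

  - 2. Removing redundant filters to compress the model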

Feature map visualization methods

1. tensor -> numpy -> plt.savefig

The example visualizes a VGG network. The reference code is available at the linked source.

- Comparing feature maps across layers

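```python
import matplotlib.pyplot as plt
import numpy as np
import torch
from torchvision import models

# Pretrained VGG16 (torchvision >= 0.13 weights API)
vgg = models.vgg16(weights=models.VGG16_Weights.IMAGENET1K_V1).eval()
# modules() yields the Sequential container first, hence modulelist[1:] below
modulelist = list(vgg.features.modules())


def to_grayscale(image):
    # average over channels: (C, H, W) -> (H, W)
    return torch.mean(image, dim=0)


@torch.no_grad()
def layer_outputs(image):
    output_im = []
    names = []
    for layer in modulelist[1:]:
        # chain the layers: each one consumes the previous layer's output
        image = layer(image)
        # convert right away, because VGG's in-place ReLU would overwrite a stored conv output
        output_im.append(to_grayscale(image.squeeze(0)).cpu().numpy())
        names.append(str(layer))

    # set the size on the figure itself; changing rcParams after plt.figure() does not resize it
    fig = plt.figure(figsize=(30, 50))
    for i in range(len(output_im)):
        a = fig.add_subplot(8, 4, i + 1)  # an 8 x 4 grid fits VGG16's 31 feature layers
        plt.imshow(output_im[i])
        plt.axis('off')
        a.set_title(names[i].partition('(')[0], fontsize=30)
    plt.savefig('layer_outputs.jpg', bbox_inches='tight')
```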
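torchvision's VGG builds its feature extractor with nn.ReLU(inplace=True). As a result, a conv output that has been stored for plotting gets rectified in place as soon as the next ReLU runs. The small sketch below uses a hypothetical one-channel conv to demonstrate the pitfall:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv = nn.Conv2d(1, 1, kernel_size=3, padding=1)
relu = nn.ReLU(inplace=True)  # the ReLU variant torchvision's VGG uses

x = torch.randn(1, 1, 8, 8)
with torch.no_grad():
    conv_out = conv(x)                          # the "stored" conv output
    had_negatives = bool((conv_out < 0).any())  # a raw conv output normally has negative values
    relu_out = relu(conv_out)                   # rectifies conv_out in place

print(had_negatives)                    # True
print(bool((conv_out < 0).any()))       # False: the stored conv output was overwritten
print(torch.equal(conv_out, relu_out))  # True
```

To keep per-layer maps distinct, convert or clone each output before the next layer runs.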

- Comparing the channels of a single layer

```python
@torch.no_grad()
def filter_outputs(image, layer_to_visualize):
    num_layers = len(modulelist) - 1  # 31 for VGG16
    if layer_to_visualize < 0:
        layer_to_visualize += num_layers

    output = None
    name = None
    for count, layer in enumerate(modulelist[1:]):
        image = layer(image)
        if count == layer_to_visualize:
            output = image
            name = str(layer)
            break  # stop here so a following in-place ReLU cannot alter output

    # (1, C, H, W) -> C separate (H, W) maps
    output = output.squeeze(0)
    filters = [output[i, :, :] for i in range(output.shape[0])]

    # plot the largest n x n block of channels; add_subplot needs integer grid sizes
    n = int(np.sqrt(len(filters)))
    fig = plt.figure(figsize=(10, 10))
    for i in range(n * n):
        fig.add_subplot(n, n, i + 1)
        plt.imshow(filters[i].cpu())
        plt.axis('off')
    fig.suptitle(name.partition('(')[0])
    plt.savefig('filter_outputs.jpg', bbox_inches='tight')
```

2. Capturing feature maps with register_forward_hook

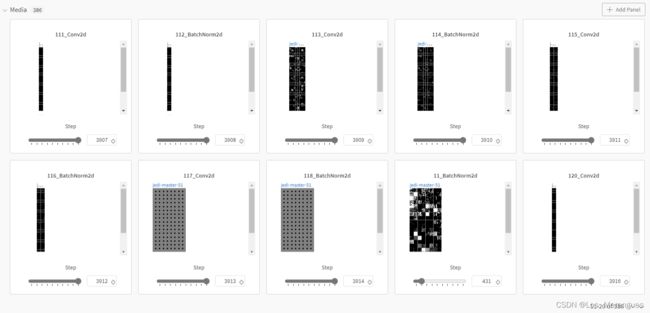

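Feature maps are captured with register_forward_hook(hook). Its argument is a function you write yourself, and that function's signature is fixed: hook(module, input, output). Here module is the layer the hook is attached to (the module object, not its name), input is a tuple of the tensors passed to the layer's forward, and output is the tensor the layer returns. register_forward_pre_hook is different: its hook runs before forward and receives only module and input, so it cannot see the output. The captured tensor is then tiled with torchvision.utils.make_grid, converted to a PIL.Image, and saved as a png; torchvision.utils.save_image combines these steps. Each tile in the saved image keeps the spatial size (H×W) of its feature map. See the referenced blog post for a detailed explanation of these functions.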
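The following is a minimal, self-contained sketch of the same hook API. Instead of logging feature maps, it collects the Conv2d outputs into a dict. The small Sequential model and the make_hook helper are hypothetical. register_forward_hook and the remove() method of the handle it returns are standard PyTorch:

```python
import torch
import torch.nn as nn

# Small stand-in network (hypothetical); any nn.Module works the same way
model = nn.Sequential(
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.MaxPool2d(2),
    nn.Conv2d(8, 16, 3, padding=1), nn.ReLU(),
).eval()

features = {}


def make_hook(name):
    # forward hooks are called as hook(module, input, output) after forward() returns
    def hook(module, input, output):
        features[name] = output.detach().clone()
    return hook


handles = [m.register_forward_hook(make_hook(n))
           for n, m in model.named_modules() if isinstance(m, nn.Conv2d)]

with torch.no_grad():
    model(torch.randn(1, 3, 32, 32))

for h in handles:
    h.remove()  # detach the hooks once the maps are captured

for name, fmap in features.items():
    print(name, tuple(fmap.shape))
# 0 (1, 8, 32, 32)
# 3 (1, 16, 16, 16)
```

Binding each layer name in a closure is an alternative to the global COUNT counter used in the implementation below. Cloning the output also protects it from a later in-place activation.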

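Because the hook's signature is fixed and cannot carry extra arguments, a helper function, get_image_name_for_hook, names each feature map. A global variable, COUNT, records the map's position in the forward pass. The implementation is as follows.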

```python
import os
import shutil

import torch
import wandb
from PIL import Image
from torchvision.utils import make_grid

COUNT = 0  # global counter: position of each feature map in the forward pass
IMAGE_FOLDER = './save_image'
INSTANCE_FOLDER = None  # per-image output folder, set in the main loop


def hook_func(module, input, output):
    image_name = get_image_name_for_hook(module)
    # (N, C, H, W) -> (C, N, H, W) so each channel becomes one grid tile (assumes batch size 1)
    data = output.clone().detach().permute(1, 0, 2, 3)
    # torchvision.utils.save_image(data, image_name, pad_value=0.5)  # one-step alternative
    # normalize/scale_each rescale every map to [0, 1] before the uint8 conversion;
    # the old `range` argument was renamed `value_range` in torchvision
    grid = make_grid(data, nrow=8, padding=2, pad_value=0.5, normalize=True, scale_each=True)
    ndarr = grid.mul_(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).to('cpu', torch.uint8).numpy()
    im = Image.fromarray(ndarr)
    # log the image to wandb
    wandb.log({image_name: wandb.Image(im)})
    # or save locally instead
    # im.save(image_name)


def get_image_name_for_hook(module):
    global COUNT
    os.makedirs(INSTANCE_FOLDER, exist_ok=True)
    base_name = str(module).split('(')[0]
    image_name = '.'  # '.' always exists, so the loop body runs at least once
    # advance COUNT until the name is unused (matters when images are saved locally)
    while os.path.exists(image_name):
        COUNT += 1
        image_name = os.path.join(INSTANCE_FOLDER, '%d_%s.png' % (COUNT, base_name))
    return image_name


if __name__ == '__main__':
    # clear the output folder
    if os.path.exists(IMAGE_FOLDER):
        shutil.rmtree(IMAGE_FOLDER)

    # TODO: wandb & model initialization (defines model and val_loader)
    model.eval()

    # layer types whose outputs are logged
    modules_for_plot = (torch.nn.LeakyReLU, torch.nn.BatchNorm2d, torch.nn.Conv2d)
    for name, module in model.named_modules():
        if isinstance(module, modules_for_plot):
            module.register_forward_hook(hook_func)

    with torch.no_grad():
        for idx, batch in enumerate(val_loader):
            # module-level assignments, so they rebind the globals the hooks read
            COUNT = 0
            INSTANCE_FOLDER = os.path.join(IMAGE_FOLDER, f'{idx + 1}_pic')
            # forward pass; each hooked layer logs its feature map
            images_val = torch.as_tensor(batch[0], dtype=torch.float32).cuda()
            outputs = model(images_val)
```

3. Deconvolution-based visualization

Reference: Zeiler and Fergus, Visualizing and Understanding Convolutional Networks (ECCV 2014)

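Following the paper, a feature-map tensor is projected back to pixel space by repeating three steps at each layer: unpooling, rectification and deconvolution, in that order. The result is a reconstruction the same size as the input image.

- Deconvolution convolves with the transposed filters of the forward layer, so it is actually a transposed convolution;

- Rectification is the same operation as the forward activation: the signal is passed through ReLU again, which simply keeps it non-negative;

- Unpooling restores the pre-pooling size using the positions of the maxima recorded during pooling (the "switches") and sets every other position to zero.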
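The sketch below implements one step of the deconvnet described above, in PyTorch, for a single conv -> ReLU -> max-pool stage. The layer sizes and the chosen channel are hypothetical. MaxPool2d(return_indices=True) records the switches, and MaxUnpool2d uses them. F.conv_transpose2d, called with the forward conv's own weights, performs the transposed convolution:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical forward stage: conv -> ReLU -> max-pool (switches recorded)
conv = nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False)
pool = nn.MaxPool2d(kernel_size=2, stride=2, return_indices=True)
unpool = nn.MaxUnpool2d(kernel_size=2, stride=2)

x = torch.randn(1, 3, 32, 32)
with torch.no_grad():
    act = F.relu(conv(x))
    pooled, switches = pool(act)  # switches: location of the max inside each window

    # Keep one channel to visualize and zero all other activations in the layer
    channel = 5
    selected = torch.zeros_like(pooled)
    selected[:, channel] = pooled[:, channel]

    # Deconvnet step: unpool -> rectify -> transposed conv with the same filters
    unpooled = unpool(selected, switches, output_size=act.shape)
    rectified = F.relu(unpooled)
    recon = F.conv_transpose2d(rectified, conv.weight, padding=1)

print(recon.shape)  # torch.Size([1, 3, 32, 32])
```

A full reconstruction repeats this unpool -> rectify -> transposed-conv step for every stage down to the input layer.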

Why feature map visualization matters

1. Improving the network architecture

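In the figure below, panel (b) shows the first-layer features of the original network (Krizhevsky et al.'s AlexNet). These features mix extremely high- and low-frequency information but contain little mid-frequency information. Panel (d) shows the network's second-layer features, which contain clear aliasing artifacts; the paper attributes these to the large first-layer stride of 4. The network was therefore modified as follows:

- Reduce the first-layer filter size from 11×11 to 7×7

- Reduce the first-layer convolution stride from 4 to 2

Panels (c) and (e) show the corresponding layers after these changes. The features are more distinctive, there are fewer dead feature maps, and the maps are cleaner, with less aliasing.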
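One way to compare the two first-layer configurations is by the resolution of the maps they produce. This sketch assumes a 224×224 RGB input, 96 filters and no padding. These values are illustrative assumptions, not the paper's full configuration:

```python
import torch
import torch.nn as nn

x = torch.randn(1, 3, 224, 224)  # assumed 224x224 RGB input

# First conv layer before and after the change (96 filters, no padding: assumptions)
conv_before = nn.Conv2d(3, 96, kernel_size=11, stride=4)
conv_after = nn.Conv2d(3, 96, kernel_size=7, stride=2)

with torch.no_grad():
    print(conv_before(x).shape)  # torch.Size([1, 96, 54, 54])
    print(conv_after(x).shape)   # torch.Size([1, 96, 109, 109])
```

Halving the stride doubles the sampling density in each direction, which is the motivation for using the smaller stride against the aliasing seen in panel (d).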

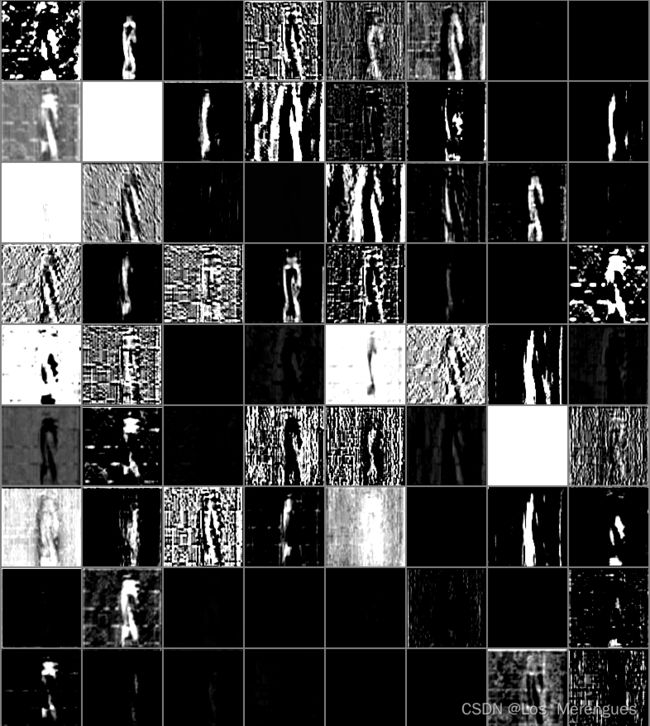

2. Removing redundant filters to compress the model

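Some feature maps in the visualization are completely black (outlined in red in the figure below). These are the so-called dead feature maps. For a given conv layer, the dead feature maps appear in the same channels regardless of the input. They carry no useful information, and because their positions are fixed, the corresponding filters can be removed from the network to compress the model.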
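The following is a minimal pruning sketch. It assumes that a "dead" channel is one whose post-ReLU output is zero for every sampled input, and that conv1 feeds conv2 directly, with no BatchNorm in between. The network, the forced-dead channels and the helper names find_dead_channels and prune are hypothetical:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical two-layer block: conv1 -> ReLU -> conv2
conv1 = nn.Conv2d(3, 8, kernel_size=3, padding=1)
conv2 = nn.Conv2d(8, 16, kernel_size=3, padding=1)

# Simulate two dead filters: a large negative bias keeps their ReLU output at 0
with torch.no_grad():
    conv1.bias[[2, 6]] = -1e3


def find_dead_channels(conv, inputs, eps=1e-6):
    """Indices of channels whose post-ReLU output is ~0 for every input."""
    with torch.no_grad():
        act = torch.relu(conv(inputs))  # (N, C, H, W)
        peak = act.amax(dim=(0, 2, 3))  # max over batch and space, per channel
    return torch.nonzero(peak <= eps).flatten().tolist()


def prune(conv_a, conv_b, dead):
    """Drop `dead` output channels of conv_a and the matching input channels of conv_b."""
    keep = [c for c in range(conv_a.out_channels) if c not in dead]
    new_a = nn.Conv2d(conv_a.in_channels, len(keep), conv_a.kernel_size,
                      conv_a.stride, conv_a.padding)
    new_b = nn.Conv2d(len(keep), conv_b.out_channels, conv_b.kernel_size,
                      conv_b.stride, conv_b.padding)
    with torch.no_grad():
        new_a.weight.copy_(conv_a.weight[keep])
        new_a.bias.copy_(conv_a.bias[keep])
        new_b.weight.copy_(conv_b.weight[:, keep])
        new_b.bias.copy_(conv_b.bias)
    return new_a, new_b


inputs = torch.randn(32, 3, 16, 16)
dead = find_dead_channels(conv1, inputs)
print(dead)  # [2, 6]
p1, p2 = prune(conv1, conv2, dead)

with torch.no_grad():
    before = conv2(torch.relu(conv1(inputs)))
    after = p2(torch.relu(p1(inputs)))
print(torch.allclose(before, after, atol=1e-5))  # True
```

The removed channels are always zero after ReLU, so on these inputs the pruned pair produces the same output with fewer parameters. If a BatchNorm layer sat between the convs, its per-channel parameters would need the same slicing.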