TensorFlow + Faster R-CNN + Linux + your own dataset

I. Get the code

Code

II. Set the compute architecture that matches your GPU

Edit line 130 of tf-faster-rcnn/lib/setup.py. For an RTX 2080 Ti the value is sm_75. For another card, look up its CUDA compute capability.
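The sm_XX value is just the compute capability with the dot removed. A minimal sketch of that mapping follows; the helper name `arch_flag` is hypothetical and is not part of setup.py.

```python
def arch_flag(compute_capability):
    """Turn a compute capability such as '7.5' into an arch value such as 'sm_75'."""
    major, minor = compute_capability.strip().split(".")
    return "sm_{}{}".format(int(major), int(minor))


# The RTX 2080 Ti has compute capability 7.5.
print(arch_flag("7.5"))
```

Whatever value this gives for your card is what goes on the line mentioned above.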

III. Compile the Cython modules

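Still under lib, compile the Cython modules. Make sure easydict is installed first (if not, run conda install easydict). Whenever a library turns out to be missing, install it with conda install.

Cython uses a source-to-source compiler to translate Python code into equivalent C. The result runs inside the CPython runtime (the main Python implementation, itself written in C), but it gains the execution speed of compiled C and can call C libraries directly. It also keeps a Python-level interface, so Cython modules can be used directly from Python code.

Go to the lib folder, open a terminal there, and enter: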

make clean

make

cd ..

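Here:

make clean only removes the output of the previous build: the object files (files ending in ".o") and the executables produced by the last make.

make does the compiling. It reads its instructions from the Makefile and then compiles, that is, it turns the C code into machine instructions the CPU can execute. Each .c file produces one .o object file.

cd .. goes back to the Faster R-CNN root directory.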

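IV. Install the COCO API

The project needs to access the COCO dataset, so install the Python COCO API. Download the archive and extract it into the 2_bolt_tf-faster-rcnn-master folder.

Go to /home/dlut/网络/2_bolt_tf-faster-rcnn-master/cocoapi-master/PythonAPI, open a terminal there, and run make to compile.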

V. Run the demo with a pretrained model and test

1. Download the data

cd data

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar

tar xvf VOCtrainval_06-Nov-2007.tar

tar xvf VOCtest_06-Nov-2007.tar

tar xvf VOCdevkit_08-Jun-2007.tar

ln -s VOCdevkit VOCdevkit2007

cd ..

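What the commands above do: go to the data directory, download and extract the dataset, then create a symbolic link.

Understanding symbolic links:

Suppose the temp directory under /usr/local/inet-tomcat on the root filesystem produces a large number of files every day, but the root disk is short on space. A symbolic link can redirect that directory to one under home, which is mounted on a separate, larger disk.

The command is:

ln -s /home/temp /usr/local/inet-tomcat

This is like creating a shortcut named temp inside /usr/local/inet-tomcat.

I had already downloaded the VOC dataset and did not want to download it again, so I copied the VOCdevkit folder directly into tf-faster-rcnn/data and renamed it VOCdevkit2007, without creating the symbolic link to VOCdevkit.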
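To see what the `ln -s VOCdevkit VOCdevkit2007` step amounts to, here is a small sketch using Python's standard `os.symlink`. It works on a throwaway temporary directory rather than the real data folder, and the helper name `link_devkit` is made up for illustration.

```python
import os
import tempfile


def link_devkit(data_dir):
    """Create data_dir/VOCdevkit2007 as a symlink to the sibling folder VOCdevkit."""
    link = os.path.join(data_dir, "VOCdevkit2007")
    if not os.path.lexists(link):
        # Relative target, resolved from the link's own directory,
        # the same as running `ln -s VOCdevkit VOCdevkit2007` inside data_dir.
        os.symlink("VOCdevkit", link)
    return link


with tempfile.TemporaryDirectory() as data_dir:
    os.makedirs(os.path.join(data_dir, "VOCdevkit", "VOC2007"))
    link = link_devkit(data_dir)
    # The link is a separate name, but listing it shows the real folder's contents.
    print(os.path.islink(link), os.listdir(link))
```

Copying and renaming the folder, as described above, gives the code the same VOCdevkit2007 path without a link.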

2. Download the pretrained model

Pretrained model

3. Create the folder and put the model in it

Create output/res101/voc_2007_trainval+voc_2012_trainval/default yourself and put the downloaded model in it.

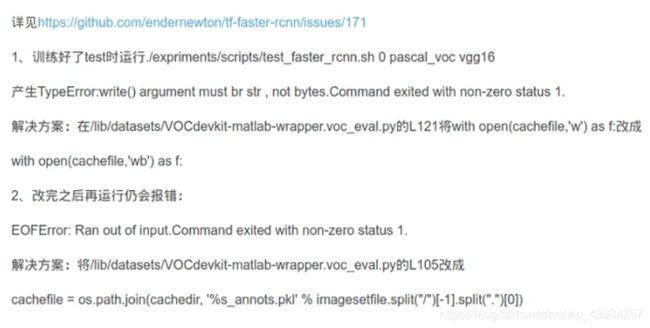
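The demo script looks for the checkpoint under exactly this folder layout and checks for a `.meta` file next to it. The sketch below builds the same path and performs the same check. The helper names `checkpoint_prefix` and `has_checkpoint` are hypothetical, and the defaults simply mirror the names used in this post.

```python
import os
import tempfile


def checkpoint_prefix(root, net="res101",
                      train_set="voc_2007_trainval+voc_2012_trainval",
                      iters=110000, tag="default"):
    """Build the checkpoint prefix under root/output/<net>/<train_set>/<tag>/."""
    name = "{}_faster_rcnn_iter_{}.ckpt".format(net, iters)
    return os.path.join(root, "output", net, train_set, tag, name)


def has_checkpoint(prefix):
    """Return True when the '<prefix>.meta' file exists."""
    return os.path.isfile(prefix + ".meta")


with tempfile.TemporaryDirectory() as root:
    prefix = checkpoint_prefix(root)
    os.makedirs(os.path.dirname(prefix))      # create the nested folders
    print(has_checkpoint(prefix))             # nothing has been copied in yet
    open(prefix + ".meta", "w").close()       # stand-in for the downloaded file
    print(has_checkpoint(prefix))
```

Running a check like this before the demo tells you whether the model files landed in the right place.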

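4. Make one small code change

In tf-faster-rcnn/lib/datasets/voc_eval.py, line 121, change with open(cachefile,'w') as f to with open(cachefile,'wb') as f. Under Python 3, pickle writes bytes, so the cache file has to be opened in binary mode.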
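A small sketch of why the file mode matters, using only the standard `pickle` module. The `save_cache` and `load_cache` helpers and the sample record are invented for illustration and are not the functions in voc_eval.py.

```python
import os
import pickle
import tempfile


def save_cache(path, recs):
    """Write recs with pickle; the file must be opened in binary mode."""
    with open(path, "wb") as f:
        pickle.dump(recs, f)


def load_cache(path):
    """Read the cache back, again in binary mode."""
    with open(path, "rb") as f:
        return pickle.load(f)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "annots.pkl")
    recs = {"000001": [{"name": "dog", "bbox": [48, 240, 195, 371]}]}
    save_cache(path, recs)
    print(load_cache(path) == recs)

    # Text mode fails on Python 3 because pickle produces bytes, not str.
    try:
        with open(path, "w") as f:
            pickle.dump(recs, f)
    except TypeError as err:
        print("text mode failed:", err)
```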

5. Run the demo test code

GPU_ID=0

CUDA_VISIBLE_DEVICES=$GPU_ID ./tools/demo.py

Q1:

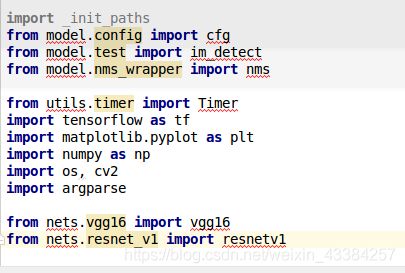

If import _init_paths is reported as an error:

Fix (in PyCharm): select the tools folder, right-click, then choose Mark Directory as > Sources Root.

Q2:

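A strange problem: in the demo code the import lines are marked in red, yet the script runs without errors.

If I edit the code and prefix each red import with lib., the red marks disappear, but running the code then fails.

The reason is the import _init_paths line at the top of the script. That path-initialization module contains lib_path = osp.join(this_dir, ‘…’, ‘lib’), which puts the lib folder on the module search path, so the imports that follow are resolved from inside lib and work at run time.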
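The mechanism can be reproduced in isolation. The sketch below builds a throwaway `lib` folder containing a toy package and puts that folder on `sys.path`, which is the same idea `_init_paths` relies on. The names `add_path` (as written here) and `toy_model` are made up for the example.

```python
import importlib
import os
import sys
import tempfile


def add_path(path):
    """Put path at the front of sys.path unless it is already there."""
    if path not in sys.path:
        sys.path.insert(0, path)


with tempfile.TemporaryDirectory() as root:
    pkg = os.path.join(root, "lib", "toy_model")
    os.makedirs(pkg)
    open(os.path.join(pkg, "__init__.py"), "w").close()
    with open(os.path.join(pkg, "config.py"), "w") as f:
        f.write("cfg = {'name': 'toy'}\n")

    # With lib on the search path, 'toy_model.config' resolves without a
    # 'lib.' prefix, just like 'from model.config import cfg' in the demo.
    add_path(os.path.join(root, "lib"))
    importlib.invalidate_caches()
    config = importlib.import_module("toy_model.config")
    print(config.cfg["name"])
```

An IDE that does not know about this run-time path change flags the imports, which is why marking the folder as a source root removes the red marks.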

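I found that each figure showed only one target, so I modified the demo code to draw all targets on the same image (following a reference blog post). My modified code is below, saved as demo_many.py. To run it, just click Run in PyCharm.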

#!/usr/bin/env python

# --------------------------------------------------------

# Tensorflow Faster R-CNN

# Licensed under The MIT License [see LICENSE for details]

# Written by Xinlei Chen, based on code from Ross Girshick

# --------------------------------------------------------

"""

Demo script showing detections in sample images.

See README.md for installation instructions before running.

"""

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import _init_paths

from model.config import cfg

from model.test import im_detect

from model.nms_wrapper import nms

from utils.timer import Timer

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import os, cv2

import argparse

from nets.vgg16 import vgg16

from nets.resnet_v1 import resnetv1

CLASSES = ('__background__',

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

NETS = {'vgg16': ('vgg16_faster_rcnn_iter_70000.ckpt',),'res101': ('res101_faster_rcnn_iter_110000.ckpt',)}

DATASETS= {'pascal_voc': ('voc_2007_trainval',),'pascal_voc_0712': ('voc_2007_trainval+voc_2012_trainval',)}

def vis_detections(im, class_name, dets, ax, thresh=0.5):
    """Draw detected bounding boxes."""
    inds = np.where(dets[:, -1] >= thresh)[0]
    if len(inds) == 0:
        return

    # im = im[:, :, (2, 1, 0)]
    # fig, ax = plt.subplots(figsize=(12, 12))
    # ax.imshow(im, aspect='equal')
    for i in inds:
        bbox = dets[i, :4]
        score = dets[i, -1]

        ax.add_patch(
            plt.Rectangle((bbox[0], bbox[1]),
                          bbox[2] - bbox[0],
                          bbox[3] - bbox[1], fill=False,
                          edgecolor='red', linewidth=3.5)
        )
        ax.text(bbox[0], bbox[1] - 2,
                '{:s} {:.3f}'.format(class_name, score),
                bbox=dict(facecolor='blue', alpha=0.5),
                fontsize=14, color='white')

    ax.set_title(('{} detections with '
                  'p({} | box) >= {:.1f}').format(class_name, class_name,
                                                  thresh),
                 fontsize=14)
    # plt.axis('off')
    # plt.tight_layout()
    # plt.draw()

def demo(sess, net, image_name):
    """Detect object classes in an image using pre-computed object proposals."""

    # Load the demo image
    im_file = os.path.join(cfg.DATA_DIR, 'demo', image_name)
    im = cv2.imread(im_file)

    # Detect all object classes and regress object bounds
    timer = Timer()
    timer.tic()
    scores, boxes = im_detect(sess, net, im)
    timer.toc()
    print('Detection took {:.3f}s for {:d} object proposals'.format(timer.total_time, boxes.shape[0]))

    # Visualize detections for each class
    CONF_THRESH = 0.8
    NMS_THRESH = 0.3
    im = im[:, :, (2, 1, 0)]
    fig, ax = plt.subplots(figsize=(12, 12))
    ax.imshow(im, aspect='equal')
    for cls_ind, cls in enumerate(CLASSES[1:]):
        cls_ind += 1  # because we skipped background
        cls_boxes = boxes[:, 4*cls_ind:4*(cls_ind + 1)]
        cls_scores = scores[:, cls_ind]
        dets = np.hstack((cls_boxes,
                          cls_scores[:, np.newaxis])).astype(np.float32)
        keep = nms(dets, NMS_THRESH)
        dets = dets[keep, :]
        vis_detections(im, cls, dets, ax, thresh=CONF_THRESH)
    plt.axis('off')
    plt.tight_layout()
    plt.draw()

def parse_args():
    """Parse input arguments."""
    parser = argparse.ArgumentParser(description='Tensorflow Faster R-CNN demo')
    parser.add_argument('--net', dest='demo_net', help='Network to use [vgg16 res101]',
                        choices=NETS.keys(), default='res101')
    parser.add_argument('--dataset', dest='dataset', help='Trained dataset [pascal_voc pascal_voc_0712]',
                        choices=DATASETS.keys(), default='pascal_voc_0712')
    args = parser.parse_args()

    return args

if __name__ == '__main__':
    cfg.TEST.HAS_RPN = True  # Use RPN for proposals
    args = parse_args()

    # model path
    demonet = args.demo_net
    dataset = args.dataset
    tfmodel = os.path.join('output', demonet, DATASETS[dataset][0], 'default',
                           NETS[demonet][0])
    # I could not get the relative path to work, so an absolute path is used here.
    # Change it to the absolute path of your own weights.
    tfmodel = '/home/dlut/网络/2_bolt_tf-faster-rcnn-master/output/res101/voc_2007_trainval+voc_2012_trainval/default' \
              '/res101_faster_rcnn_iter_110000.ckpt'

    if not os.path.isfile(tfmodel + '.meta'):
        raise IOError(('{:s} not found.\nDid you download the proper networks from '
                       'our server and place them properly?').format(tfmodel + '.meta'))

    # set config
    tfconfig = tf.ConfigProto(allow_soft_placement=True)
    tfconfig.gpu_options.allow_growth = True

    # init session
    sess = tf.Session(config=tfconfig)
    # load network
    if demonet == 'vgg16':
        net = vgg16()
    elif demonet == 'res101':
        net = resnetv1(num_layers=101)
    else:
        raise NotImplementedError
    net.create_architecture("TEST", 21,
                            tag='default', anchor_scales=[8, 16, 32])
    saver = tf.train.Saver()
    saver.restore(sess, tfmodel)

    print('Loaded network {:s}'.format(tfmodel))

    im_names = ['000456.jpg', '000542.jpg', '001150.jpg',
                '001763.jpg', '004545.jpg']
    for im_name in im_names:
        print('~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~')
        print('Demo for data/demo/{}'.format(im_name))
        demo(sess, net, im_name)

    plt.show()

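One more change to demo.py is worth making: the original code only pops up all the results on screen and cannot save them to a folder. The code can be modified further:

First put the images you want to test in the data/demo folder, then change the end of the demo script as follows. The modified script can be saved as demo_batch.py.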

    # Note: these are absolute paths; change them to suit your own setup.
    im_names = os.listdir('/home/dlut/网络/2_bolt_tf-faster-rcnn-master/data/demo')
    for im_name in im_names:
        print('~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~')
        print('Demo for data/demo/{}'.format(im_name))
        demo(sess, net, im_name)
        # Note: this is an absolute path too; change it to suit your own setup.
        plt.savefig("/home/dlut/网络/2_bolt_tf-faster-rcnn-master/data/demotest/" + im_name)

    # plt.show()

At the end of this post I include my complete modified demo_batch.py.
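When looping over a whole folder like this, it can help to skip anything that is not an image and to make sure the output folder exists before saving. The sketch below is one possible way to do that. The helpers `list_images` and `output_path` and the extension list are my own assumptions and are not part of demo_batch.py.

```python
import os
import tempfile

IMAGE_EXTS = (".jpg", ".jpeg", ".png", ".bmp")  # assumed set of image extensions


def list_images(demo_dir):
    """Return the image file names in demo_dir, sorted, skipping other files."""
    return sorted(name for name in os.listdir(demo_dir)
                  if name.lower().endswith(IMAGE_EXTS))


def output_path(out_dir, im_name):
    """Create out_dir if needed and return where the result for im_name goes."""
    os.makedirs(out_dir, exist_ok=True)
    return os.path.join(out_dir, im_name)


with tempfile.TemporaryDirectory() as root:
    demo_dir = os.path.join(root, "demo")
    os.makedirs(demo_dir)
    for name in ("000456.jpg", "notes.txt", "000542.JPG"):
        open(os.path.join(demo_dir, name), "w").close()
    for im_name in list_images(demo_dir):
        print(output_path(os.path.join(root, "demotest"), im_name))
```

In the real loop, the path returned by `output_path` would be passed to `plt.savefig`.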

If the results above display correctly, that only shows the environment is set up properly. Next, try training a model yourself.

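VI. Train the model

- Modify the code

  - To save training time, reduce the number of training iterations to 60. In experiments/scripts/train_faster_rcnn.sh and experiments/scripts/test_faster_rcnn.sh, change line 21 from ITERS=70000 to ITERS=60. Note that ITERS must be the same in the test and train scripts.

  - To save testing time, keep only the first 50 of the 4000+ lines in data/VOCdevkit2007/VOC2007/ImageSets/Main/test.txt.

- Delete the previous models

  - Delete output.

  - If a cache was generated under data, delete data/cache/voc_2007_test_gt_roidb.pkl and data/cache/voc_2007_trainval_gt_roidb.pkl.

  - Delete data/VOCdevkit2007/VOC2007/ImageSets/Main/test.txt_annots.pkl.

- Train the model

  - From the tf-faster-rcnn directory, run: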

./experiments/scripts/train_faster_rcnn.sh 0 pascal_voc res101
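The preparation steps before this training run (shortening test.txt and deleting stale cache files) can also be scripted. Below is a minimal sketch that demonstrates the idea on a temporary directory. The helper names `keep_first_lines` and `remove_if_present` are hypothetical, and on the real project you would pass the paths listed above.

```python
import os
import tempfile


def keep_first_lines(path, n=50):
    """Truncate a text file to its first n lines and return how many remain."""
    with open(path) as f:
        lines = f.readlines()
    kept = lines[:n]
    with open(path, "w") as f:
        f.writelines(kept)
    return len(kept)


def remove_if_present(paths):
    """Delete each path that exists as a file; return the ones actually removed."""
    removed = []
    for path in paths:
        if os.path.isfile(path):
            os.remove(path)
            removed.append(path)
    return removed


# Demonstration on throwaway files, not on the real data folder.
with tempfile.TemporaryDirectory() as root:
    test_txt = os.path.join(root, "test.txt")
    with open(test_txt, "w") as f:
        f.write("".join("{:06d}\n".format(i) for i in range(1, 201)))
    cache = os.path.join(root, "voc_2007_test_gt_roidb.pkl")
    open(cache, "wb").close()

    print(keep_first_lines(test_txt))                          # image ids kept
    print(len(remove_if_present([cache, cache + ".missing"])))  # files removed
```

Keeping a backup copy of the original test.txt is a good idea, since the truncation overwrites the file.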

This run failed with an error.

The cause was that res101.ckpt was missing from 2_bolt_tf-faster-rcnn-master/data/imagenet_weights. Download it yourself and put it in that folder (see the referenced blog post).

Then another error appeared.

Fix: under /home/dlut/网络/2_bolt_tf-faster-rcnn-master/data/VOCdevkit2007/results/VOC2007/Main, create a text file named comp4_9816294d-8cd0af8-b66f-29f985ad1155_det_test_aeroplane.txt

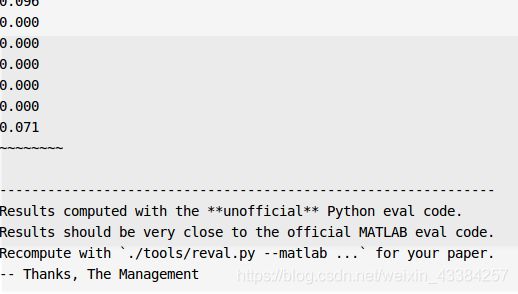

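Then the results finally came out. Testing runs automatically after training, so there is no need to run the test script separately.

Of course, the final results are very poor, because training ran for only 60 iterations.

At this point we can train a model ourselves. What we actually need is to train our own model on our own dataset.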

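VII. Train on your own dataset

- Prepare the dataset: replace the contents of VOC2007 with your own data: Annotations, ImageSets, JPEGImages

- Modify the code

- Edit lib/datasets/pascal_voc.py

On line 36, change self._classes to your own classes. Leave __background__ unchanged: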

self._classes = ('__background__', # always index 0

'normal_bolt', 'defect_bolt')

- Edit tf-faster-rcnn1/tools/demo.py

(because the demo is needed later to run tests)

On line 35, change CLASSES to your own classes, the same as in pascal_voc.py

CLASSES = ('__background__',

'normal_bolt', 'defect_bolt')

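- On line 38, in NETS, the number in res101_faster_rcnn_iter_12000 must match your iteration count. For example, if you trained for only 100 iterations, change it to res101_faster_rcnn_iter_100

(None of the blog posts I read mentioned this, but without the change demo.py failed partway through because it could not find the file.)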

NETS = {'vgg16': ('vgg16_faster_rcnn_iter_70000.ckpt',),'res101': ('res101_faster_rcnn_iter_110000.ckpt',)}

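- On line 156, change the second argument to 3, that is, the number of your own classes + 1 (two bolt classes plus the background)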

net.create_architecture("TEST", 3,

tag='default', anchor_scales=[8, 16, 32])

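- With everything ready, remember to delete the previous models

- Delete output

- If a cache was generated under data, delete data/cache/voc_2007_test_gt_roidb.pkl and data/cache/voc_2007_trainval_gt_roidb.pkl

- Delete data/VOCdevkit2007/VOC2007/ImageSets/Main/test.txt_annots.pkl

- To keep the TensorBoard curves from getting mixed up, you can also delete the files in experiments/logs

- Adjust the iteration count and batch_size as you like. batch_size, the learning rate, and other parameters are set in 2_bolt_tf-faster-rcnn-master/lib/model/config. For the meaning of the parameters see reference blog 1, and for changing them see reference blog 2

- Then train the model

./experiments/scripts/train_faster_rcnn.sh 0 pascal_voc res101

- After training, the results can be displayed with demo, demo_many, or demo_batch.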
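For the class count passed to `net.create_architecture` earlier in this section, one way to avoid a mismatch is to derive it from the CLASSES tuple rather than typing it by hand. This is only a sketch: `NUM_CLASSES` and `box_columns` are names I made up. The column arithmetic mirrors the `boxes[:, 4*cls_ind:4*(cls_ind + 1)]` slice in the demo code.

```python
CLASSES = ('__background__',
           'normal_bolt', 'defect_bolt')

# Background counts as a class, so two bolt classes give 3 in total.
NUM_CLASSES = len(CLASSES)


def box_columns(cls_ind):
    """Columns of the boxes array that hold the 4 coordinates for one class."""
    return slice(4 * cls_ind, 4 * (cls_ind + 1))


print(NUM_CLASSES)
for cls_ind, cls in enumerate(CLASSES[1:], start=1):
    cols = box_columns(cls_ind)
    print(cls, cols.start, cols.stop)
```

With this, `NUM_CLASSES` could be passed as the second argument instead of a literal number.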

Here is the demo_batch code. Put the images to test in the data/demo folder, and remember to change the paths to your own.

#!/usr/bin/env python

# --------------------------------------------------------

# Tensorflow Faster R-CNN

# Licensed under The MIT License [see LICENSE for details]

# Written by Xinlei Chen, based on code from Ross Girshick

# --------------------------------------------------------

"""

Demo script showing detections in sample images.

See README.md for installation instructions before running.

"""

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import _init_paths

from model.config import cfg

from model.test import im_detect

from model.nms_wrapper import nms

from utils.timer import Timer

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import os, cv2

import argparse

from nets.vgg16 import vgg16

from nets.resnet_v1 import resnetv1

CLASSES = ('__background__',

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

NETS = {'vgg16': ('vgg16_faster_rcnn_iter_70000.ckpt',),'res101': ('res101_faster_rcnn_iter_110000.ckpt',)}

DATASETS= {'pascal_voc': ('voc_2007_trainval',),'pascal_voc_0712': ('voc_2007_trainval+voc_2012_trainval',)}

def vis_detections(im, class_name, dets, ax, thresh=0.5):
    """Draw detected bounding boxes."""
    inds = np.where(dets[:, -1] >= thresh)[0]
    if len(inds) == 0:
        return

    # im = im[:, :, (2, 1, 0)]
    # fig, ax = plt.subplots(figsize=(12, 12))
    # ax.imshow(im, aspect='equal')
    for i in inds:
        bbox = dets[i, :4]
        score = dets[i, -1]

        ax.add_patch(
            plt.Rectangle((bbox[0], bbox[1]),
                          bbox[2] - bbox[0],
                          bbox[3] - bbox[1], fill=False,
                          edgecolor='red', linewidth=3.5)
        )
        ax.text(bbox[0], bbox[1] - 2,
                '{:s} {:.3f}'.format(class_name, score),
                bbox=dict(facecolor='blue', alpha=0.5),
                fontsize=14, color='white')

    ax.set_title(('{} detections with '
                  'p({} | box) >= {:.1f}').format(class_name, class_name,
                                                  thresh),
                 fontsize=14)
    # plt.axis('off')
    # plt.tight_layout()
    # plt.draw()

def demo(sess, net, image_name):
    """Detect object classes in an image using pre-computed object proposals."""

    # Load the demo image
    im_file = os.path.join(cfg.DATA_DIR, 'demo', image_name)
    im = cv2.imread(im_file)

    # Detect all object classes and regress object bounds
    timer = Timer()
    timer.tic()
    scores, boxes = im_detect(sess, net, im)
    timer.toc()
    print('Detection took {:.3f}s for {:d} object proposals'.format(timer.total_time, boxes.shape[0]))

    # Visualize detections for each class
    CONF_THRESH = 0.8
    NMS_THRESH = 0.3
    im = im[:, :, (2, 1, 0)]
    fig, ax = plt.subplots(figsize=(12, 12))
    ax.imshow(im, aspect='equal')
    for cls_ind, cls in enumerate(CLASSES[1:]):
        cls_ind += 1  # because we skipped background
        cls_boxes = boxes[:, 4*cls_ind:4*(cls_ind + 1)]
        cls_scores = scores[:, cls_ind]
        dets = np.hstack((cls_boxes,
                          cls_scores[:, np.newaxis])).astype(np.float32)
        keep = nms(dets, NMS_THRESH)
        dets = dets[keep, :]
        vis_detections(im, cls, dets, ax, thresh=CONF_THRESH)
    plt.axis('off')
    plt.tight_layout()
    plt.draw()

def parse_args():
    """Parse input arguments."""
    parser = argparse.ArgumentParser(description='Tensorflow Faster R-CNN demo')
    parser.add_argument('--net', dest='demo_net', help='Network to use [vgg16 res101]',
                        choices=NETS.keys(), default='res101')
    parser.add_argument('--dataset', dest='dataset', help='Trained dataset [pascal_voc pascal_voc_0712]',
                        choices=DATASETS.keys(), default='pascal_voc_0712')
    args = parser.parse_args()

    return args

if __name__ == '__main__':
    cfg.TEST.HAS_RPN = True  # Use RPN for proposals
    args = parse_args()

    # model path
    demonet = args.demo_net
    dataset = args.dataset
    tfmodel = os.path.join('output', demonet, DATASETS[dataset][0], 'default',
                           NETS[demonet][0])
    tfmodel = '/home/dlut/网络/2_bolt_tf-faster-rcnn-master/output/res101/voc_2007_trainval+voc_2012_trainval/default' \
              '/res101_faster_rcnn_iter_110000.ckpt'

    if not os.path.isfile(tfmodel + '.meta'):
        raise IOError(('{:s} not found.\nDid you download the proper networks from '
                       'our server and place them properly?').format(tfmodel + '.meta'))

    # set config
    tfconfig = tf.ConfigProto(allow_soft_placement=True)
    tfconfig.gpu_options.allow_growth = True

    # init session
    sess = tf.Session(config=tfconfig)
    # load network
    if demonet == 'vgg16':
        net = vgg16()
    elif demonet == 'res101':
        net = resnetv1(num_layers=101)
    else:
        raise NotImplementedError
    net.create_architecture("TEST", 21,
                            tag='default', anchor_scales=[8, 16, 32])
    saver = tf.train.Saver()
    saver.restore(sess, tfmodel)

    print('Loaded network {:s}'.format(tfmodel))

    im_names = ['000456.jpg', '000542.jpg', '001150.jpg',
                '001763.jpg', '004545.jpg']
    # for im_name in im_names:
    #     print('~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~')
    #     print('Demo for data/demo/{}'.format(im_name))
    #     demo(sess, net, im_name)
    #
    # plt.show()

    im_names = os.listdir('/home/dlut/网络/2_bolt_tf-faster-rcnn-master/data/demo')
    for im_name in im_names:
        print('~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~')
        print('Demo for data/demo/{}'.format(im_name))
        demo(sess, net, im_name)
        plt.savefig("/home/dlut/网络/2_bolt_tf-faster-rcnn-master/data/demotest/" + im_name)

    # plt.show()

For batch testing, the images have to be placed under data/demo.

- You can also evaluate a saved trained model on its own. In a terminal or in PyCharm, run:

./experiments/scripts/test_faster_rcnn.sh 0 pascal_voc res101

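For example, my config has __C.TRAIN.SNAPSHOT_ITERS = 5000, which saves a model every 5000 iterations. I trained for 12000 iterations in total, so the default folder holds three models: 5000, 10000, and 12000.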
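The arithmetic behind those three snapshots can be written down as a short sketch. It assumes, as observed in this run, that a snapshot is written at every multiple of the interval and once more at the final iteration. The function name `snapshot_iters` is hypothetical and is not taken from the training code.

```python
def snapshot_iters(max_iters, snapshot_every):
    """Iterations at which a checkpoint is expected to exist after training."""
    iters = list(range(snapshot_every, max_iters + 1, snapshot_every))
    # Assumption: the last iteration is always saved, even off the interval.
    if not iters or iters[-1] != max_iters:
        iters.append(max_iters)
    return iters


for it in snapshot_iters(12000, 5000):
    print("res101_faster_rcnn_iter_{}.ckpt".format(it))
```

Any of the listed iteration numbers is a valid value for ITERS when evaluating a saved model.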

If I want the mAP of the 5000-iteration model, I need to edit test_faster_rcnn.sh in /2_bolt_tf-faster-rcnn-master/experiments/scripts:

case ${DATASET} in

pascal_voc)

TRAIN_IMDB="voc_2007_trainval"

TEST_IMDB="voc_2007_test"

ITERS=5000

ANCHORS="[8,16,32]"

RATIOS="[0.5,1,2]"

;;

Here ITERS=12000 was changed to ITERS=5000.

Also, if you want to rename the output folder, say to output_1, the path in the script has to change as well for testing to work.

set +x

if [[ ! -z ${EXTRA_ARGS_SLUG} ]]; then

NET_FINAL=output_1/${NET}/${TRAIN_IMDB}/${EXTRA_ARGS_SLUG}/${NET}_faster_rcnn_iter_${ITERS}.ckpt

else

NET_FINAL=output_1/${NET}/${TRAIN_IMDB}/default/${NET}_faster_rcnn_iter_${ITERS}.ckpt

fi

set -x

Here output was changed to output_1.

Then run ./experiments/scripts/test_faster_rcnn.sh 0 pascal_voc res101 to get the mAP of any saved model.

- The original evaluation code does not display precision-recall curves. To plot them, see: Plotting the precision-recall curve

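VIII. Some problems encountered

Supplement

Everything above only describes how I got Faster R-CNN running and the small problems I hit along the way, without going into anything beyond that. As I read more of the code, though, I found a lot to think about, so here is a supplement.

- I had run the yolov3 code before (see the referenced blog post). During eval, yolov3 prints precision and recall, whereas Faster R-CNN prints only the AP values. I wanted Faster R-CNN to print recall and precision as well, but I failed. Thinking through the reasons afterwards, the first thing to understand is how these values are computed (see: object detection evaluation metrics Precision, Recall, AP, mAP). Compared with yolov3, the difference is that yolov3 is given a confidence threshold, score_thresh, during eval, so it can report precision and recall at that threshold. Faster R-CNN's eval only aims to compute AP and is given no confidence threshold, so it can only report AP.

- At test time, however, a confidence threshold has to be given, and the demo code does set one: CONF_THRESH = 0.8. But with Faster R-CNN there is no way to test which threshold value works best, so for now I am leaving this threshold alone.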
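To make the role of the threshold concrete, here is a minimal sketch of precision and recall at a single confidence threshold. It assumes each detection has already been marked as a true or false positive (for example by IoU matching against the ground truth), which is the part an evaluation script normally does. The function name and the sample numbers are invented for illustration.

```python
def precision_recall(scores, is_tp, num_gt, thresh):
    """Precision and recall when only detections with score >= thresh count."""
    kept = [tp for score, tp in zip(scores, is_tp) if score >= thresh]
    tp = sum(1 for flag in kept if flag)
    fp = len(kept) - tp
    # Convention chosen here: precision is 0.0 when nothing passes the threshold.
    precision = tp / (tp + fp) if kept else 0.0
    recall = tp / num_gt if num_gt else 0.0
    return precision, recall


# Four detections for one class, and four ground-truth boxes in total.
scores = [0.95, 0.90, 0.60, 0.30]
is_tp = [True, False, True, True]

for thresh in (0.8, 0.5):
    print(thresh, precision_recall(scores, is_tp, num_gt=4, thresh=thresh))
```

Sweeping the threshold over all score values gives the precision-recall curve, and AP summarizes that curve, which is why AP can be computed without fixing one threshold.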

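References:

Training tf-faster-rcnn on the VOC dataset or other datasets

python3 + Tensorflow + Faster R-CNN: training on your own data

Reference blog post on changing parameters

For my own reference: this ran successfully in my py36 environment. conda list shows the following: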

packages in environment at /home/dlut/anaconda3/envs/py36:

Name Version Build Channel

_libgcc_mutex 0.1 main

absl-py 0.7.1 pypi_0 pypi

astor 0.8.0 pypi_0 pypi

backcall 0.1.0 pypi_0 pypi

bleach 1.5.0 pypi_0 pypi

ca-certificates 2019.10.16 0

certifi 2019.9.11 py36_0

cffi 1.12.3 pypi_0 pypi

chardet 3.0.4 pypi_0 pypi

cycler 0.10.0 pypi_0 pypi

decorator 4.4.0 pypi_0 pypi

easydict 1.9 pypi_0 pypi

gast 0.2.2 pypi_0 pypi

google-pasta 0.1.7 pypi_0 pypi

grpcio 1.22.0 pypi_0 pypi

h5py 2.9.0 pypi_0 pypi

html5lib 0.9999999 pypi_0 pypi

idna 2.8 pypi_0 pypi

imageio 2.5.0 pypi_0 pypi

imgcat 0.3.0 pypi_0 pypi

imutils 0.5.3 pypi_0 pypi

ipdb 0.12 pypi_0 pypi

ipython 7.6.1 pypi_0 pypi

ipython-genutils 0.2.0 pypi_0 pypi

jedi 0.14.1 pypi_0 pypi

joblib 0.13.2 pypi_0 pypi

keras 2.2.4 pypi_0 pypi

keras-applications 1.0.8 pypi_0 pypi

keras-preprocessing 1.1.0 pypi_0 pypi

kiwisolver 1.1.0 pypi_0 pypi

libedit 3.1 heed3624_0

libffi 3.2.1 hd88cf55_4

libgcc-ng 9.1.0 hdf63c60_0

libstdcxx-ng 9.1.0 hdf63c60_0

lime 0.1.1.36 pypi_0 pypi

markdown 3.1.1 pypi_0 pypi

matplotlib 3.0.3 pypi_0 pypi

ncurses 6.0 h9df7e31_2

netron 3.5.6 pypi_0 pypi

networkx 2.3 pypi_0 pypi

numpy 1.15.1 pypi_0 pypi

olefile 0.46 pypi_0 pypi

opencv-python 4.1.1.26 pypi_0 pypi

openssl 1.0.2t h7b6447c_1

pandas 0.25.1 pypi_0 pypi

parso 0.5.1 pypi_0 pypi

patsy 0.5.1 pypi_0 pypi

pexpect 4.7.0 pypi_0 pypi

pickleshare 0.7.5 pypi_0 pypi

pillow 5.3.0 pypi_0 pypi

pip 19.3.1 py36_0

plotly 4.1.1 pypi_0 pypi

progressbar 2.5 pypi_0 pypi

prompt-toolkit 2.0.9 pypi_0 pypi

protobuf 3.6.1 pypi_0 pypi

psutil 5.6.3 pypi_0 pypi

ptyprocess 0.6.0 pypi_0 pypi

pycparser 2.19 pypi_0 pypi

pygments 2.4.2 pypi_0 pypi

pyparsing 2.4.2 pypi_0 pypi

pyqt5 5.13.0 pypi_0 pypi

pyqt5-sip 4.19.18 pypi_0 pypi

python 3.6.2 hca45abc_19

python-dateutil 2.8.0 pypi_0 pypi

pytorch-msssim 0.1.3 pypi_0 pypi

pytz 2019.2 pypi_0 pypi

pywavelets 1.0.3 pypi_0 pypi

pyyaml 5.1.1 pypi_0 pypi

pyzmq 18.0.2 pypi_0 pypi

readline 7.0 ha6073c6_4

requests 2.22.0 pypi_0 pypi

retrying 1.3.3 pypi_0 pypi

scikit-image 0.15.0 pypi_0 pypi

scikit-learn 0.21.2 pypi_0 pypi

scipy 1.1.0 pypi_0 pypi

seaborn 0.9.0 pypi_0 pypi

setuptools 41.6.0 py36_0

six 1.12.0 pypi_0 pypi

sklearn 0.0 pypi_0 pypi

sqlite 3.23.1 he433501_0

statsmodels 0.10.1 pypi_0 pypi

tensorboard 1.12.2 pypi_0 pypi

tensorflow-estimator 2.0.1 pypi_0 pypi

tensorflow-gpu 1.12.0 pypi_0 pypi

termcolor 1.1.0 pypi_0 pypi

tk 8.6.8 hbc83047_0

torch 1.1.0 pypi_0 pypi

torchfile 0.1.0 pypi_0 pypi

torchvision 0.3.0 pypi_0 pypi

tornado 6.0.3 pypi_0 pypi

tqdm 4.32.2 pypi_0 pypi

traitlets 4.3.2 pypi_0 pypi

urllib3 1.25.3 pypi_0 pypi

visdom 0.1.8.8 pypi_0 pypi

wcwidth 0.1.7 pypi_0 pypi

websocket-client 0.56.0 pypi_0 pypi

werkzeug 0.15.5 pypi_0 pypi

wget 3.2 pypi_0 pypi

wheel 0.33.6 py36_0

wrapt 1.11.2 pypi_0 pypi

xz 5.2.4 h14c3975_4

zlib 1.2.11 h7b6447c_3