(Android-RTC-1) First Steps with Android WebRTC

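Before we begin: the project is at https://github.com/MrZhaozhirong/AppWebRTC, help yourself. The build environment is Gradle 4.0.x + AndroidX. It is a hand-made re-fork of the whole WebRTC demo; the official source is the Android example in the WebRTC repository.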

Preface

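Now we officially start on Android WebRTC. What you find online is mostly re-posts of the WebRTC codelab, with demos that are only code fragments, or else veterans who simply stand up Nginx + coturn + the WebRTC JavaScript API. None of this felt comprehensive to me, and none of it gave a clear picture of the overall architecture, so I decided to dig in step by step, from the shallow end to the deep end. Even now I wouldn't claim to fully understand everything in WebRTC, but at least I have a clear mental map. WebRTC is not just a set of APIs or an SDK; it is a set of protocol standards (or perhaps a solution) for RTC (Real-Time Communications) in the web browser. Since it works in browsers, other platforms naturally have their own implementations.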

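So what is the difference between RTC and WebRTC? In fact, the two are not the same thing. Functionally, RTC spans many stages: capture, encoding, pre- and post-processing, transport, decoding, buffering, rendering and more, and each stage breaks down into finer technical modules. Pre- and post-processing, for example, includes beautification, filters, acoustic echo cancellation and noise suppression; capture includes microphone arrays; codecs include VP8, VP9, H.264, H.265 and so on. WebRTC is one part of RTC: an open-source project started by Google, together with the corresponding W3C/IETF standards, aimed specifically at real-time communication in web pages. It provides only the basic client-side functionality, such as encoding/decoding and jitter buffering. To build a commercial product on WebRTC, developers must implement and deploy the servers themselves, choose, implement and deploy the signaling front and back ends, adapt to a wide range of phones, and handle many other concrete tasks. Beyond that, a great deal of improvement and polishing is needed for availability and quality, which sets a very high bar for the team's own engineering capability. Besides covering the stages above, a professional RTC service also needs a dedicated network that copes with the instability of the Internet, plus audio/video signal-processing algorithms that tolerate poor Internet channels. The usual cloud-service concerns (high availability, quality-of-service guarantees, monitoring and maintenance tools) are merely baseline modules for a professional provider. So WebRTC is only a combination of a few narrow technologies within the RTC stack, not a full-stack solution.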

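Once these relationships are clear, theory is indispensable. I strongly recommend that beginners first learn the theory behind WebRTC (the two long theory articles I reposted earlier are very good, go read them now) and only then start reading code. Theory, practice, more theory, more optimization: that is the one and only shortcut to becoming a technical expert.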

Where to start?

By technical area, RTC can be roughly divided into the following domains:

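- Peer-to-peer connection (signaling, STUN hole punching, TURN relaying);

- Video capture / encoding and decoding / filter processing;

- Audio capture / encoding and decoding / echo cancellation and noise suppression;

- Real-time transport / QoE (quality of experience) assurance;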

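This article starts with the first area (peer-to-peer connection), analyzes how the Android WebRTC demo is put together, and extends briefly into reading the official library's API.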

The Android WebRTC demo project has two dependencies: the official package, and libs/autobanh.jar, which mainly handles WebSocket communication.

dependencies {

implementation fileTree(dir: "libs", include: ["*.jar"]) // libs/autobanh.jar

implementation 'androidx.appcompat:appcompat:1.1.0'

implementation 'org.webrtc:google-webrtc:1.0.32006'

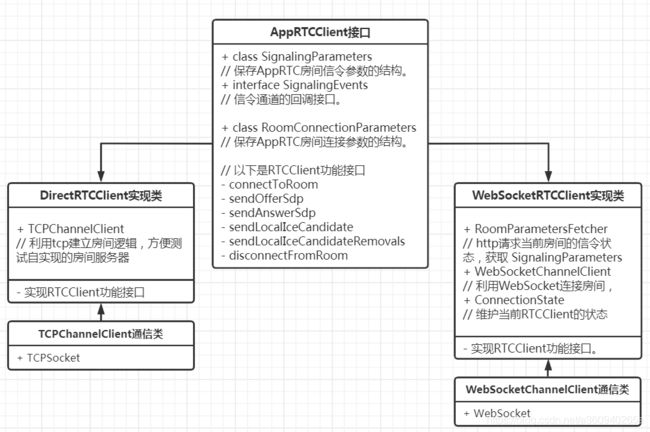

}文件组成及其主要功能,按继承关系可以如下划分。(暂不显示org.webrtc:google-webrtc包里面的类)

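The core of the demo is two components: AppRTCClient and PeerConnectionClient. CallActivity is the host that carries their interaction. Everything else configures additional parameters around these pieces.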
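To make the "CallActivity as host" idea concrete, here is a minimal pure-Java sketch of the callback wiring. `SignalingEvents` and `PeerConnectionEvents` are named after the demo's AppRTCClient.SignalingEvents and PeerConnectionClient.PeerConnectionEvents, but the method signatures here are simplified stand-ins, and `CallHost` is a hypothetical class that plays CallActivity's role without any Android or WebRTC dependency; it just records the calls it would make.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified stand-in for AppRTCClient.SignalingEvents (hypothetical signatures).
interface SignalingEvents {
    void onConnectedToRoom(boolean initiator);
    void onRemoteDescription(String type, String sdp);
}

// Simplified stand-in for PeerConnectionClient.PeerConnectionEvents (hypothetical signatures).
interface PeerConnectionEvents {
    void onLocalDescription(String type, String sdp);
}

// Hypothetical host in CallActivity's role: it receives callbacks from both the
// signaling client and the peer connection client and forwards between them.
class CallHost implements SignalingEvents, PeerConnectionEvents {
    final List<String> log = new ArrayList<>();
    private boolean initiator;

    @Override
    public void onConnectedToRoom(boolean initiator) {
        this.initiator = initiator;
        // Signaling parameters arrived: build the peer connection first.
        log.add("createPeerConnection");
        if (initiator) {
            log.add("createOffer");
        }
    }

    @Override
    public void onRemoteDescription(String type, String sdp) {
        // Remote SDP from signaling goes to the peer connection; an offer must be answered.
        log.add("setRemoteDescription:" + type);
        if (type.equals("offer")) {
            log.add("createAnswer");
        }
    }

    @Override
    public void onLocalDescription(String type, String sdp) {
        // Local SDP from the peer connection goes back out through signaling.
        log.add((initiator ? "sendOfferSdp:" : "sendAnswerSdp:") + type);
    }
}
```

The host is the only object that knows both sides, which is why AppRTCClient and PeerConnectionClient never reference each other directly.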

Next, the logic of a one-to-one video call test, and how to understand signaling.

What is signaling?

First, how to test with the demo:

1. Go to https://appr.tc from any browser and create any room number: this generates a room ID and puts you in the room.

2. Start the Android app, enter the same room number, and press call. The video call should start.

After steps 1 and 2, ConnectActivity launches CallActivity, whose onCreate contains the following snippet:

```java
peerConnectionParameters =
    new PeerConnectionClient.PeerConnectionParameters(
        intent.getBooleanExtra(EXTRA_VIDEO_CALL, true),
        loopback, tracing, videoWidth, videoHeight,
        intent.getIntExtra(EXTRA_VIDEO_FPS, 0),
        intent.getIntExtra(EXTRA_VIDEO_BITRATE, 0),
        intent.getStringExtra(EXTRA_VIDEOCODEC),
        intent.getBooleanExtra(EXTRA_HWCODEC_ENABLED, true),
        intent.getBooleanExtra(EXTRA_FLEXFEC_ENABLED, false),
        intent.getIntExtra(EXTRA_AUDIO_BITRATE, 0),
        intent.getStringExtra(EXTRA_AUDIOCODEC),
        intent.getBooleanExtra(EXTRA_NOAUDIOPROCESSING_ENABLED, false),
        intent.getBooleanExtra(EXTRA_AECDUMP_ENABLED, false),
        intent.getBooleanExtra(EXTRA_SAVE_INPUT_AUDIO_TO_FILE_ENABLED, false),
        intent.getBooleanExtra(EXTRA_OPENSLES_ENABLED, false),
        intent.getBooleanExtra(EXTRA_DISABLE_BUILT_IN_AEC, false),
        intent.getBooleanExtra(EXTRA_DISABLE_BUILT_IN_AGC, false),
        intent.getBooleanExtra(EXTRA_DISABLE_BUILT_IN_NS, false),
        intent.getBooleanExtra(EXTRA_DISABLE_WEBRTC_AGC_AND_HPF, false),
        intent.getBooleanExtra(EXTRA_ENABLE_RTCEVENTLOG, false),
        dataChannelParameters);

Uri roomUri = intent.getData();                      // defaults to https://appr.tc
String roomId = intent.getStringExtra(EXTRA_ROOMID); // the room number
String urlParameters = intent.getStringExtra(EXTRA_URLPARAMETERS);
roomConnectionParameters = new AppRTCClient.RoomConnectionParameters(
    roomUri.toString(), roomId, loopback, urlParameters);
```

From the names and parameter lists, PeerConnectionParameters is clearly the configuration for the audio and video modules, while RoomConnectionParameters is simpler: just the room URL and the room ID. Next, AppRTCClient and PeerConnectionClient are instantiated:

```java
// Create connection client. Use DirectRTCClient if room name is an IP,
// otherwise use the standard WebSocketRTCClient.
if (loopback || !DirectRTCClient.IP_PATTERN.matcher(roomId).matches()) {
  appRtcClient = new WebSocketRTCClient(this); // this = AppRTCClient.SignalingEvents callback
} else {
  Log.i(TAG, "Using DirectRTCClient because room name looks like an IP.");
  appRtcClient = new DirectRTCClient(this);
}

// Create peer connection client.
peerConnectionClient = new PeerConnectionClient(
    getApplicationContext(), eglBase, peerConnectionParameters, CallActivity.this);
PeerConnectionFactory.Options options = new PeerConnectionFactory.Options();
if (loopback) {
  options.networkIgnoreMask = 0;
}
peerConnectionClient.createPeerConnectionFactory(options);

if (screencaptureEnabled) {
  startScreenCapture();
} else {
  startCall();
}
```

1. As the comment says, when the room name is an IP address (and this is not a loopback test), a DirectRTCClient is instantiated; in every other case a WebSocketRTCClient is used.

2. PeerConnectionClient is the class that wraps PeerConnection. Anyone familiar with WebRTC fundamentals knows that WebRTC implements the following three APIs:

- MediaStream (also known as getUserMedia)

- RTCPeerConnection

- RTCDataChannel

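Of these, PeerConnection is the core of WebRTC's network connectivity. The Android API configures and creates the PeerConnection through a factory (PeerConnectionFactory); this is analyzed in detail below.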

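3. A third detail worth mentioning: WebRTC also uses an EGL context, which indicates that video capture and rendering rely on OpenGL ES underneath. This too is left for a later analysis.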

4. Finally, the Android build of WebRTC also supports screen capture on Android L (5.0) and above (nice!). Next, let's analyze startCall:

```java
private void startCall() {
  if (appRtcClient == null) {
    Log.e(TAG, "AppRTC client is not allocated for a call.");
    return;
  }
  callStartedTimeMs = System.currentTimeMillis();

  // Start room connection.
  logAndToast(getString(R.string.connecting_to, roomConnectionParameters.roomUrl));
  appRtcClient.connectToRoom(roomConnectionParameters); // <- this is the key line

  // Create an audio manager that will take care of audio routing,
  // audio modes, audio device enumeration etc.
  audioManager = AppRTCAudioManager.create(getApplicationContext());
  // Store existing audio settings and change audio mode to
  // MODE_IN_COMMUNICATION for best possible VoIP performance.
  // This callback is invoked each time the number of available audio devices changes.
  audioManager.start((device, availableDevices) -> {
    // onAudioManagerDevicesChanged(device, availableDevices);
    Log.d(TAG, "onAudioManagerDevicesChanged: " + availableDevices + ", selected: " + device);
    // TODO: add callback handler.
  });
}
```

That looks simple enough. AppRTCAudioManager creates and manages the audio devices and takes care of audio routing, audio modes, device enumeration and so on. It is quite feature-rich, covering the proximity sensor, Bluetooth headsets, traditional wired headsets, etc. It is not the focus of this article, though; if you're interested, bookmark it for later study.

The key line is appRtcClient.connectToRoom(roomConnectionParameters). From the analysis above, the AppRTCClient instance at this point is a WebSocketRTCClient, so let's jump into the corresponding code:

```java
// Connects to room - function runs on a local looper thread.
private void connectToRoomInternal() {
  String connectionUrl = getConnectionUrl(connectionParameters);
  // connectionUrl = https://appr.tc/join/roomId
  wsClient = new WebSocketChannelClient(handler, this);

  RoomParametersFetcher.RoomParametersFetcherEvents callbacks =
      new RoomParametersFetcher.RoomParametersFetcherEvents() {
        @Override
        public void onSignalingParametersReady(final SignalingParameters params) {
          WebSocketRTCClient.this.handler.post(new Runnable() {
            @Override
            public void run() {
              WebSocketRTCClient.this.signalingParametersReady(params);
            }
          });
        }

        @Override
        public void onSignalingParametersError(String description) {
          WebSocketRTCClient.this.reportError(description);
        }
      };

  new RoomParametersFetcher(connectionUrl, null, callbacks).makeRequest();
}

// Callback issued when room parameters are extracted. Runs on local looper thread.
private void signalingParametersReady(final SignalingParameters signalingParameters) {
  Log.d(TAG, "Room connection completed.");
  // These checks apply to loopback calls only. Without the loopback guard, any
  // non-initiator (e.g. the Android client joining a room the browser created)
  // would be rejected as "busy".
  if (connectionParameters.loopback
      && (!signalingParameters.initiator || signalingParameters.offerSdp != null)) {
    reportError("Loopback room is busy.");
    return;
  }
  if (!connectionParameters.loopback && !signalingParameters.initiator
      && signalingParameters.offerSdp == null) {
    Log.w(TAG, "No offer SDP in room response.");
  }
  initiator = signalingParameters.initiator;
  messageUrl = getMessageUrl(connectionParameters, signalingParameters);
  // https://appr.tc/message/roomId/clientId
  leaveUrl = getLeaveUrl(connectionParameters, signalingParameters);
  // https://appr.tc/leave/roomId/clientId

  events.onConnectedToRoom(signalingParameters);

  wsClient.connect(signalingParameters.wssUrl, signalingParameters.wssPostUrl);
  wsClient.register(connectionParameters.roomId, signalingParameters.clientId);
}
```

1. Request https://appr.tc/join/roomId to join the room identified by roomId; the response carries the room's current signaling parameters, signalingParameters.

2. From the returned signalingParameters, derive the two room-event URLs (message and leave) and read the two WebSocket-related URLs. The log looks like this:

```
com.zzrblog.appwebrtc D/RoomRTCClient: RoomId: 028711912. ClientId: 74648260
com.zzrblog.appwebrtc D/RoomRTCClient: Initiator: false
com.zzrblog.appwebrtc D/RoomRTCClient: WSS url: wss://apprtc-ws.webrtc.org:443/ws
com.zzrblog.appwebrtc D/RoomRTCClient: WSS POST url: https://apprtc-ws.webrtc.org:443
com.zzrblog.appwebrtc D/RoomRTCClient: Request TURN from: https://appr.tc/v1alpha/iceconfig?key=
com.zzrblog.appwebrtc D/WebSocketRTCClient: Room connection completed.
com.zzrblog.appwebrtc D/WebSocketRTCClient: Message URL: https://appr.tc/message/028711912/74648260
com.zzrblog.appwebrtc D/WebSocketRTCClient: Leave URL: https://appr.tc/leave/028711912/74648260
```

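3. Of the four URLs, only signalingParameters.wssUrl is an actual WebSocket URL; the others are plain HTTPS requests. A reasonable guess is that wssUrl carries the signaling exchanged between the participants in a room, while the others maintain the room's state.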

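So signaling is essentially the set of logical parameters that clients exchange through a room to prepare a peer-to-peer call before the media connection exists. That is why WebRTC does not (and realistically cannot) define or implement a signaling API: signaling is tightly coupled to the application's business logic, the kinds of parameters change with each use case, and unifying them into one standard protocol would be impractical.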

4. Looking further at connect and register on wsClient (WebSocketChannelClient) confirms this: wssUrl is used to send commands and exchange signaling, while wssPostUrl is only requested when leaving the room.

5. Having covered this logic, don't forget events.onConnectedToRoom(signalingParameters), which hands the signaling parameters back to the host, CallActivity:

```java
private void onConnectedToRoomInternal(final AppRTCClient.SignalingParameters params) {
  signalingParameters = params;
  VideoCapturer videoCapturer = null;
  if (peerConnectionParameters.videoCallEnabled) {
    videoCapturer = createVideoCapturer();
  }
  peerConnectionClient.createPeerConnection(
      localProxyVideoSink, remoteSinks, videoCapturer, signalingParameters);

  if (signalingParameters.initiator) {
    // The first participant in the room (the initiator) takes this branch.
    peerConnectionClient.createOffer();
  } else {
    if (params.offerSdp != null) {
      // Not the first participant: check whether the signaling parameters already
      // carry an offer SDP. If so, someone in the room has sent an offer, so set it
      // as the remote SDP and answer it.
      peerConnectionClient.setRemoteDescription(params.offerSdp);
      peerConnectionClient.createAnswer();
    }
    if (params.iceCandidates != null) {
      // Add remote ICE candidates from room.
      for (IceCandidate iceCandidate : params.iceCandidates) {
        peerConnectionClient.addRemoteIceCandidate(iceCandidate);
      }
    }
  }
}
```

With the comments, this part of CallActivity.onConnectedToRoom should be easy to follow. In the normal test flow the Android client takes the createAnswer branch after createPeerConnection: the browser that opened https://appr.tc created the room and is its first participant (the initiator), so the Android client joins second and finds the browser's offer in the room response. Following the flow, let's first analyze how the PeerConnection is created.
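The branching in onConnectedToRoomInternal boils down to a pure function of the room response. A minimal sketch, assuming the response is summarized by the `initiator` flag, a nullable offer and a count of remote candidates; `RoleDecision` and the action strings are hypothetical and only mirror the order of calls in the snippet above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical pure-Java model of the branching in onConnectedToRoomInternal.
final class RoleDecision {
    private RoleDecision() {}

    /**
     * Returns the PeerConnectionClient calls the demo would make, in order.
     *
     * @param initiator        signalingParameters.initiator
     * @param offerSdp         signalingParameters.offerSdp (null if the room response had none)
     * @param remoteCandidates number of entries in signalingParameters.iceCandidates
     */
    static List<String> actions(boolean initiator, String offerSdp, int remoteCandidates) {
        List<String> calls = new ArrayList<>();
        calls.add("createPeerConnection");
        if (initiator) {
            // First participant in the room: it makes the offer.
            calls.add("createOffer");
            return calls;
        }
        if (offerSdp != null) {
            // The initiator's offer is already known: apply it, then answer it.
            calls.add("setRemoteDescription");
            calls.add("createAnswer");
        }
        // Candidates delivered with the room response are added on the answering side.
        for (int i = 0; i < remoteCandidates; i++) {
            calls.add("addRemoteIceCandidate");
        }
        return calls;
    }
}
```

In the appr.tc test the browser is the initiator, so the Android side follows the second path: `actions(false, offer, n)`.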

Creating the PeerConnection

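Now let's look at how PeerConnectionClient creates the PeerConnection object. So far PeerConnectionClient has gone through three steps: 1) the PeerConnectionClient constructor; 2) createPeerConnectionFactory; 3) createPeerConnection. Here is the detailed code for each, in that order.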

1. The PeerConnectionClient constructor

```java
public PeerConnectionClient(Context appContext, EglBase eglBase,
                            PeerConnectionParameters peerConnectionParameters,
                            PeerConnectionEvents events) {
  this.rootEglBase = eglBase;
  this.appContext = appContext;
  this.events = events;
  this.peerConnectionParameters = peerConnectionParameters;
  this.dataChannelEnabled = peerConnectionParameters.dataChannelParameters != null;

  final String fieldTrials = getFieldTrials(peerConnectionParameters);
  executor.execute(() -> {
    Log.d(TAG, "Initialize WebRTC. Field trials: " + fieldTrials);
    PeerConnectionFactory.initialize(
        PeerConnectionFactory.InitializationOptions.builder(appContext)
            .setFieldTrials(fieldTrials)
            .setEnableInternalTracer(true)
            .createInitializationOptions());
  });
}
```

WebRTC API code:

```java
// Nested class PeerConnectionFactory.InitializationOptions.Builder
public static class Builder {
  private final Context applicationContext;
  private String fieldTrials = "";
  private boolean enableInternalTracer;
  private NativeLibraryLoader nativeLibraryLoader = new DefaultLoader();
  private String nativeLibraryName = "jingle_peerconnection_so";
  @Nullable private Loggable loggable;
  @Nullable private Severity loggableSeverity;
  // ... ...
}
```

PeerConnectionFactory is initialized through this builder-style configuration. Two things caught my eye: fieldTrials, and nativeLibraryName = "jingle_peerconnection_so". I'll leave them as a teaser and explain them in detail later, in the deep dive into the source.
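As a first peek at fieldTrials: it is one string made of `Name/Group/` pairs concatenated together. A minimal sketch of building one from an ordered map; the two trial names in the usage below are what I believe the demo enables when FlexFEC is switched on, so treat them as assumptions, and `FieldTrials.build` is a hypothetical helper, not part of the WebRTC API.

```java
import java.util.Map;

// Hypothetical helper: concatenates trials into WebRTC's "Name/Group/" field-trial format.
final class FieldTrials {
    private FieldTrials() {}

    static String build(Map<String, String> trials) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : trials.entrySet()) {
            String name = e.getKey();
            String group = e.getValue();
            // '/' is the separator, so it must not appear inside a name or a group.
            if (name.contains("/") || group.contains("/")) {
                throw new IllegalArgumentException("'/' not allowed: " + name + "=" + group);
            }
            sb.append(name).append('/').append(group).append('/');
        }
        return sb.toString();
    }
}
```

Passing a LinkedHashMap with `WebRTC-FlexFEC-03-Advertised` → `Enabled` and `WebRTC-FlexFEC-03` → `Enabled` yields `WebRTC-FlexFEC-03-Advertised/Enabled/WebRTC-FlexFEC-03/Enabled/`, the kind of string that ends up in setFieldTrials(...).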

2. createPeerConnectionFactory

```java
private void createPeerConnectionFactoryInternal(PeerConnectionFactory.Options options) {
  // Check if ISAC is used by default.
  preferIsac = peerConnectionParameters.audioCodec != null
      && peerConnectionParameters.audioCodec.equals(AUDIO_CODEC_ISAC);

  // Create peer connection factory.
  final boolean enableH264HighProfile =
      VIDEO_CODEC_H264_HIGH.equals(peerConnectionParameters.videoCodec);
  final VideoEncoderFactory encoderFactory;
  final VideoDecoderFactory decoderFactory;
  if (peerConnectionParameters.videoCodecHwAcceleration) {
    encoderFactory = new DefaultVideoEncoderFactory(
        rootEglBase.getEglBaseContext(), true /* enableIntelVp8Encoder */, enableH264HighProfile);
    decoderFactory = new DefaultVideoDecoderFactory(rootEglBase.getEglBaseContext());
  } else {
    encoderFactory = new SoftwareVideoEncoderFactory();
    decoderFactory = new SoftwareVideoDecoderFactory();
  }

  final AudioDeviceModule adm = createJavaAudioDevice();
  factory = PeerConnectionFactory.builder()
      .setOptions(options)
      .setAudioDeviceModule(adm)
      .setVideoEncoderFactory(encoderFactory)
      .setVideoDecoderFactory(decoderFactory)
      .createPeerConnectionFactory();
  Log.d(TAG, "Peer connection factory created.");
  adm.release();
  // Abridged: only the key code is shown.
}

AudioDeviceModule createJavaAudioDevice() {
  if (!peerConnectionParameters.useOpenSLES) {
    Log.w(TAG, "External OpenSLES ADM not implemented yet.");
    // TODO: Add support for external OpenSLES ADM.
  }
  // Set audio record error callbacks.
  JavaAudioDeviceModule.AudioRecordErrorCallback audioRecordErrorCallback;
  // Set audio track error callbacks.
  JavaAudioDeviceModule.AudioTrackErrorCallback audioTrackErrorCallback;
  // Set audio record state callbacks.
  JavaAudioDeviceModule.AudioRecordStateCallback audioRecordStateCallback;
  // Set audio track state callbacks.
  JavaAudioDeviceModule.AudioTrackStateCallback audioTrackStateCallback;
  // Abridged: the callback implementations assigned to the variables above are omitted.

  return JavaAudioDeviceModule.builder(appContext)
      .setSamplesReadyCallback(saveRecordedAudioToFile)
      .setUseHardwareAcousticEchoCanceler(!peerConnectionParameters.disableBuiltInAEC)
      .setUseHardwareNoiseSuppressor(!peerConnectionParameters.disableBuiltInNS)
      .setAudioRecordErrorCallback(audioRecordErrorCallback)
      .setAudioTrackErrorCallback(audioTrackErrorCallback)
      .setAudioRecordStateCallback(audioRecordStateCallback)
      .setAudioTrackStateCallback(audioTrackStateCallback)
      .createAudioDeviceModule();
}
```

A few points are worth marking here. First, the VideoEncoderFactory/VideoDecoderFactory pair is chosen according to whether hardware acceleration is enabled. Second, AudioDeviceModule is the interface through which WebRTC's Java layer represents the audio module. The demo only implements the Java-based ADM (JavaAudioDeviceModule, built on Android's AudioRecord/AudioTrack); as the warning in createJavaAudioDevice says, an external OpenSL ES ADM is not implemented yet. Two settings I care a lot about are setUseHardwareAcousticEchoCanceler and setUseHardwareNoiseSuppressor. Audio processing was the best-known strength of GIPS, the company Google acquired and whose engine it open-sourced as WebRTC, so this area will definitely get its own deep-dive article later.
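Several of these switches are simple mappings or negations of PeerConnectionParameters fields. A minimal pure-Java sketch of that mapping, assuming a simplified parameter class; `FactoryParams` and `FactoryPlan` are hypothetical names, the strings only name the WebRTC classes the demo picks (nothing is instantiated), and `"H264 High"` is my assumption of the value held by VIDEO_CODEC_H264_HIGH.

```java
// Hypothetical, simplified subset of PeerConnectionParameters.
final class FactoryParams {
    final boolean videoCodecHwAcceleration;
    final boolean disableBuiltInAEC;
    final boolean disableBuiltInNS;
    final String videoCodec;

    FactoryParams(boolean hw, boolean disableAec, boolean disableNs, String videoCodec) {
        this.videoCodecHwAcceleration = hw;
        this.disableBuiltInAEC = disableAec;
        this.disableBuiltInNS = disableNs;
        this.videoCodec = videoCodec;
    }
}

// Hypothetical summary of the choices made in createPeerConnectionFactoryInternal
// and createJavaAudioDevice.
final class FactoryPlan {
    final String encoderFactory;
    final String decoderFactory;
    final boolean enableH264HighProfile;
    final boolean useHardwareAec;
    final boolean useHardwareNs;

    FactoryPlan(FactoryParams p) {
        // Hardware acceleration selects the Default* factories; otherwise software-only codecs.
        encoderFactory = p.videoCodecHwAcceleration
                ? "DefaultVideoEncoderFactory" : "SoftwareVideoEncoderFactory";
        decoderFactory = p.videoCodecHwAcceleration
                ? "DefaultVideoDecoderFactory" : "SoftwareVideoDecoderFactory";
        // Assumed value of VIDEO_CODEC_H264_HIGH.
        enableH264HighProfile = "H264 High".equals(p.videoCodec);
        // The ADM builder asks "use?", the parameters store "disable?": hence the negation.
        useHardwareAec = !p.disableBuiltInAEC;
        useHardwareNs = !p.disableBuiltInNS;
    }
}
```

Keeping the "disable" flags in the parameters and negating them at the builder means the defaults (all false) leave the built-in AEC and NS on.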

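The composition of PeerConnectionFactory, in brief: the Options, the AudioDeviceModule, and the video encoder/decoder factories configured above.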

3. createPeerConnection

At last we reach the place where the PeerConnection is actually created. Enough talk; here's the code.

```java
public void createPeerConnection(final VideoSink localRender,
                                 final List<VideoSink> remoteSinks,
                                 final VideoCapturer videoCapturer,
                                 final AppRTCClient.SignalingParameters signalingParameters) {
  this.localRender = localRender;     // VideoSink that renders the local video
  this.remoteSinks = remoteSinks;     // VideoSinks for the remote video; may be several, hence a List
  this.videoCapturer = videoCapturer; // the local video source
  this.signalingParameters = signalingParameters;
  executor.execute(() -> {
    createMediaConstraintsInternal();
    createPeerConnectionInternal();
    maybeCreateAndStartRtcEventLog();
  });
}

private void createMediaConstraintsInternal() {
  // Create video constraints if video call is enabled.
  if (isVideoCallEnabled()) {
    videoWidth = peerConnectionParameters.videoWidth;
    videoHeight = peerConnectionParameters.videoHeight;
    videoFps = peerConnectionParameters.videoFps;
  }
  // Create audio constraints.
  audioConstraints = new MediaConstraints();
  // added for audio performance measurements
  if (peerConnectionParameters.noAudioProcessing) {
    Log.d(TAG, "Audio constraints disable audio processing");
    audioConstraints.mandatory.add(
        new MediaConstraints.KeyValuePair(AUDIO_ECHO_CANCELLATION_CONSTRAINT, "false"));
    audioConstraints.mandatory.add(
        new MediaConstraints.KeyValuePair(AUDIO_AUTO_GAIN_CONTROL_CONSTRAINT, "false"));
    audioConstraints.mandatory.add(
        new MediaConstraints.KeyValuePair(AUDIO_HIGH_PASS_FILTER_CONSTRAINT, "false"));
    audioConstraints.mandatory.add(
        new MediaConstraints.KeyValuePair(AUDIO_NOISE_SUPPRESSION_CONSTRAINT, "false"));
  }
  // Create SDP constraints.
  sdpMediaConstraints = new MediaConstraints();
  sdpMediaConstraints.mandatory.add(
      new MediaConstraints.KeyValuePair("OfferToReceiveAudio", "true"));
  sdpMediaConstraints.mandatory.add(new MediaConstraints.KeyValuePair(
      "OfferToReceiveVideo", Boolean.toString(isVideoCallEnabled())));
}

private void createPeerConnectionInternal() {
  PeerConnection.RTCConfiguration rtcConfig =
      new PeerConnection.RTCConfiguration(signalingParameters.iceServers);
  // TCP candidates are only useful when connecting to a server that supports ICE-TCP.
  rtcConfig.tcpCandidatePolicy = PeerConnection.TcpCandidatePolicy.DISABLED;
  rtcConfig.bundlePolicy = PeerConnection.BundlePolicy.MAXBUNDLE;
  rtcConfig.rtcpMuxPolicy = PeerConnection.RtcpMuxPolicy.REQUIRE;
  rtcConfig.continualGatheringPolicy = PeerConnection.ContinualGatheringPolicy.GATHER_CONTINUALLY;
  // Use an ECDSA key for the DTLS certificate.
  rtcConfig.keyType = PeerConnection.KeyType.ECDSA;
  // Enable DTLS for normal calls and disable for loopback calls.
  rtcConfig.enableDtlsSrtp = !peerConnectionParameters.loopback;
  rtcConfig.sdpSemantics = PeerConnection.SdpSemantics.UNIFIED_PLAN;

  // Note this line: this is where the PeerConnection is actually created.
  peerConnection = factory.createPeerConnection(rtcConfig, pcObserver);

  List<String> mediaStreamLabels = Collections.singletonList("ARDAMS");
  if (isVideoCallEnabled()) {
    peerConnection.addTrack(createVideoTrack(videoCapturer), mediaStreamLabels);
    // We can add the renderers right away because we don't need to wait for an
    // answer to get the remote track.
    remoteVideoTrack = getRemoteVideoTrack();
    if (remoteVideoTrack != null) {
      remoteVideoTrack.setEnabled(renderVideo);
      for (VideoSink remoteSink : remoteSinks) {
        remoteVideoTrack.addSink(remoteSink);
      }
    }
  }
  peerConnection.addTrack(createAudioTrack(), mediaStreamLabels);
  if (isVideoCallEnabled()) {
    for (RtpSender sender : peerConnection.getSenders()) {
      if (sender.track() != null) {
        String trackType = sender.track().kind();
        if (trackType.equals(VIDEO_TRACK_TYPE)) {
          Log.d(TAG, "Found video sender.");
          localVideoSender = sender;
        }
      }
    }
  }

  if (peerConnectionParameters.aecDump) {
    try {
      // Storage path prefix and open-mode flags are elided in this excerpt.
      ParcelFileDescriptor aecDumpFileDescriptor =
          ParcelFileDescriptor.open(new File("Download/audio.aecdump"), ...);
      factory.startAecDump(aecDumpFileDescriptor.detachFd(), -1);
    } catch (IOException e) {
      Log.e(TAG, "Can not open aecdump file", e);
    }
  }
}

private void maybeCreateAndStartRtcEventLog() {
  // Only runs when RTC event logging was requested (EXTRA_ENABLE_RTCEVENTLOG).
  if (!peerConnectionParameters.enableRtcEventLog) {
    return;
  }
  rtcEventLog = new RtcEventLog(peerConnection);
  rtcEventLog.start(createRtcEventLogOutputFile());
}
```

The code is long even after trimming it down to the useful parts. If you have some WebRTC background, createMediaConstraintsInternal should be easy to follow: its audio constraints correspond to the constraints passed to getUserMedia(mediaStreamConstraints) on the web, while sdpMediaConstraints controls what the offer/answer asks to receive.
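MediaConstraints is essentially a list of string key-value pairs. A pure-Java sketch of what createMediaConstraintsInternal produces, using a plain map instead of org.webrtc.MediaConstraints. The `goog*` strings are my assumption of the values behind the demo's AUDIO_*_CONSTRAINT constants, so treat them as such; `ConstraintsSketch` is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for the pairs held in MediaConstraints.mandatory.
final class ConstraintsSketch {
    private ConstraintsSketch() {}

    // Assumed values of the demo's AUDIO_*_CONSTRAINT constants.
    static final String ECHO_CANCELLATION = "googEchoCancellation";
    static final String AUTO_GAIN_CONTROL = "googAutoGainControl";
    static final String HIGH_PASS_FILTER = "googHighpassFilter";
    static final String NOISE_SUPPRESSION = "googNoiseSuppression";

    static Map<String, String> audioMandatory(boolean noAudioProcessing) {
        Map<String, String> m = new LinkedHashMap<>();
        if (noAudioProcessing) {
            // Switch off every software audio-processing stage.
            m.put(ECHO_CANCELLATION, "false");
            m.put(AUTO_GAIN_CONTROL, "false");
            m.put(HIGH_PASS_FILTER, "false");
            m.put(NOISE_SUPPRESSION, "false");
        }
        return m;
    }

    static Map<String, String> sdpMandatory(boolean videoCallEnabled) {
        Map<String, String> m = new LinkedHashMap<>();
        // Always ask to receive audio; ask for video only in video calls.
        m.put("OfferToReceiveAudio", "true");
        m.put("OfferToReceiveVideo", Boolean.toString(videoCallEnabled));
        return m;
    }
}
```

With default parameters the audio constraints are empty, so WebRTC's own audio processing stays on; only the measurement switch `noAudioProcessing` turns it off.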

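Now look carefully at createPeerConnectionInternal. It sets up PeerConnection.RTCConfiguration, and the key part is iceServers from the signaling parameters (SignalingParameters): they hold the URLs of the STUN server (for NAT hole punching) and the TURN server (for relaying) provided by the application's backend, that is, by us developers. It then calls PeerConnectionFactory.createPeerConnection(rtcConfig, pcObserver) to create the PeerConnection, and pcObserver receives the various state callbacks. For space reasons I won't paste that code; follow it yourself and just remember the ICE-candidate and connection-state callbacks (such as onIceCandidate and onIceConnectionChange).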
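The iceServers entries are ultimately just STUN/TURN URIs (plus credentials for TURN). A small pure-Java sketch that classifies such URIs by scheme, following the `stun:`/`stuns:` and `turn:`/`turns:` schemes of RFC 7064 and RFC 7065; `IceUrl` is a hypothetical helper and the hostnames in the usage are made up.

```java
import java.util.Locale;

// Hypothetical helper that classifies STUN/TURN URIs (RFC 7064 / RFC 7065 schemes).
final class IceUrl {
    final boolean relay;    // true for TURN (relays media), false for STUN (address discovery only)
    final boolean secure;   // true for the TLS variants "stuns:" and "turns:"
    final String transport; // value of "?transport=", or "default" when absent

    private IceUrl(boolean relay, boolean secure, String transport) {
        this.relay = relay;
        this.secure = secure;
        this.transport = transport;
    }

    static IceUrl parse(String uri) {
        int colon = uri.indexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("missing scheme: " + uri);
        }
        String scheme = uri.substring(0, colon).toLowerCase(Locale.ROOT);
        boolean relay;
        switch (scheme) {
            case "stun": case "stuns": relay = false; break;
            case "turn": case "turns": relay = true; break;
            default: throw new IllegalArgumentException("not a STUN/TURN URI: " + uri);
        }
        boolean secure = scheme.endsWith("s");
        String transport = "default";
        int q = uri.indexOf("?transport=");
        if (q >= 0) {
            transport = uri.substring(q + "?transport=".length());
        }
        return new IceUrl(relay, secure, transport);
    }
}
```

For example, `stun:stun.example.org:3478` is a plain STUN server, while `turns:turn.example.org:443?transport=tcp` is a TLS TURN relay over TCP. Only TURN entries can yield relay candidates, which is what keeps a call alive when hole punching fails.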

Next come the two addTrack calls on the PeerConnection. Let's go through them together:

```java
// In createPeerConnectionInternal():
List<String> mediaStreamLabels = Collections.singletonList("ARDAMS");
peerConnection.addTrack(createVideoTrack(videoCapturer), mediaStreamLabels);
peerConnection.addTrack(createAudioTrack(), mediaStreamLabels);

private @Nullable AudioTrack createAudioTrack() {
  audioSource = factory.createAudioSource(audioConstraints);
  localAudioTrack = factory.createAudioTrack(AUDIO_TRACK_ID, audioSource);
  localAudioTrack.setEnabled(enableAudio);
  return localAudioTrack;
}

private @Nullable VideoTrack createVideoTrack(VideoCapturer capturer) {
  surfaceTextureHelper =
      SurfaceTextureHelper.create("CaptureThread", rootEglBase.getEglBaseContext());
  videoSource = factory.createVideoSource(capturer.isScreencast());
  capturer.initialize(surfaceTextureHelper, appContext, videoSource.getCapturerObserver());
  capturer.startCapture(videoWidth, videoHeight, videoFps);

  localVideoTrack = factory.createVideoTrack(VIDEO_TRACK_ID, videoSource);
  localVideoTrack.setEnabled(renderVideo);
  localVideoTrack.addSink(localRender);
  return localVideoTrack;
}
```

Inside org.webrtc.PeerConnection:

```java
public RtpSender addTrack(MediaStreamTrack track, List<String> streamIds) {
  if (track != null && streamIds != null) {
    RtpSender newSender = this.nativeAddTrack(track.getNativeMediaStreamTrack(), streamIds);
    if (newSender == null) {
      throw new IllegalStateException("C++ addTrack failed.");
    } else {
      this.senders.add(newSender);
      return newSender;
    }
  } else {
    throw new NullPointerException("No MediaStreamTrack specified in addTrack.");
  }
}
```

The first key point: inside PeerConnection.addTrack, nativeAddTrack returns an RtpSender object, which is then kept in a list on the Java side.

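Curious readers may also notice that besides RtpSender there are RtpReceiver and RtpTransceiver, where RtpTransceiver = RtpSender + RtpReceiver. It looks a bit messy; for now just get acquainted with them (digging a hole) and I'll come back to analyze them in depth later (filling it in).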
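A toy model of that pairing, and of the lookup getRemoteVideoTrack performs over the transceivers (its code appears a little further down). Plain Java with no WebRTC dependency; `ToyTrack`, `ToySender`, `ToyReceiver`, `ToyTransceiver` and `ToyPeerConnection` are hypothetical simplifications, and modeling addTrack as "always create a new transceiver" is an assumption that matches a fresh Unified Plan connection like the one in the demo.

```java
import java.util.ArrayList;
import java.util.List;

// A track here only carries its kind ("audio" or "video") and an id.
final class ToyTrack {
    final String kind;
    final String id;
    ToyTrack(String kind, String id) { this.kind = kind; this.id = id; }
}

final class ToySender { final ToyTrack track; ToySender(ToyTrack t) { track = t; } }

final class ToyReceiver { final ToyTrack track; ToyReceiver(ToyTrack t) { track = t; } }

// A transceiver pairs exactly one sender with one receiver (one m= section in Unified Plan).
final class ToyTransceiver {
    final ToySender sender;
    final ToyReceiver receiver;
    ToyTransceiver(ToySender s, ToyReceiver r) { sender = s; receiver = r; }
}

final class ToyPeerConnection {
    final List<ToyTransceiver> transceivers = new ArrayList<>();

    // addTrack creates a transceiver whose receiver already owns a remote track of the
    // same kind, so renderers can be attached before any answer arrives.
    ToySender addTrack(ToyTrack local) {
        ToySender sender = new ToySender(local);
        ToyReceiver receiver = new ToyReceiver(new ToyTrack(local.kind, "remote-" + local.id));
        transceivers.add(new ToyTransceiver(sender, receiver));
        return sender;
    }

    // Same idea as getRemoteVideoTrack(): the first receiver track that is video.
    ToyTrack remoteVideoTrack() {
        for (ToyTransceiver t : transceivers) {
            if (t.receiver.track != null && t.receiver.track.kind.equals("video")) {
                return t.receiver.track;
            }
        }
        return null;
    }
}
```

This is why the demo can call getRemoteVideoTrack() right after adding the local video track: the receiving half already exists.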

```java
public class PeerConnection {
  private final List<MediaStream> localStreams;
  private final long nativePeerConnection;
  private List<RtpSender> senders;
  private List<RtpReceiver> receivers;
  private List<RtpTransceiver> transceivers;
  // ... ...
}

public class RtpTransceiver {
  private long nativeRtpTransceiver;
  private RtpSender cachedSender;
  private RtpReceiver cachedReceiver;
  // ... ...
}

public class RtpReceiver {
  private long nativeRtpReceiver;
  private long nativeObserver;
  @Nullable private MediaStreamTrack cachedTrack;
  // ... ...
}

public class RtpSender {
  private long nativeRtpSender;
  @Nullable private MediaStreamTrack cachedTrack;
  @Nullable private final DtmfSender dtmfSender;
  // ... ...
}
```

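The second key point: after the local video track is added to the PeerConnection, the client tries to get the remote track, remoteVideoTrack, from the PeerConnection's RtpTransceivers, and attaches to it the VideoSinks that render the remote video. (After the audio track is added, it likewise picks the local video RtpSender, localVideoSender, out of getSenders().) The relevant snippet: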

```java
remoteVideoTrack = getRemoteVideoTrack();
if (remoteVideoTrack != null) {
  for (VideoSink remoteSink : remoteSinks) {
    remoteVideoTrack.addSink(remoteSink);
  }
}

// Returns the remote VideoTrack, assuming there is only one.
private @Nullable VideoTrack getRemoteVideoTrack() {
  for (RtpTransceiver transceiver : peerConnection.getTransceivers()) {
    MediaStreamTrack track = transceiver.getReceiver().track();
    if (track instanceof VideoTrack) {
      return (VideoTrack) track;
    }
  }
  return null;
}
```

That more or less completes createPeerConnection. Two takeaways:

1. How should we understand the relationship among Track, Source and Sink, and how are they connected?

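Answer: the names say it all. A source wraps where the data comes from, i.e. the input; for video it is usually a camera or a file. The source feeds a track, the conveyor that bridges source and sink, and the sink is the end of the conveyor, like the basin a channel drains into. One source can feed several tracks, and one track can flow into several sinks, each of which processes and outputs the data in its own way. In WebRTC's code, a VideoSink is typically an Android rendering surface such as a SurfaceView, but it could equally be a writer to a local file. Keeping this abstract relationship in mind will help when digging into the code later.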

2. The composition of PeerConnection builds directly on the PeerConnectionFactory described above.
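To make takeaway 1 concrete, a toy pure-Java model of the Source → Track → Sink plumbing: a source pushes each frame to every track created from it, and each track forwards it to all of its sinks. `FrameSource`, `FrameTrack` and `FrameSink` are hypothetical; in org.webrtc the roles are played by VideoSource, VideoTrack and VideoSink, and frames are VideoFrame objects rather than strings.

```java
import java.util.ArrayList;
import java.util.List;

// Anything that consumes frames: a renderer view, a file writer, an encoder...
interface FrameSink {
    void onFrame(String frame);
}

// A track connects one source to any number of sinks and can be switched off.
final class FrameTrack {
    private final List<FrameSink> sinks = new ArrayList<>();
    private boolean enabled = true;

    void addSink(FrameSink sink) { sinks.add(sink); }

    void setEnabled(boolean enabled) { this.enabled = enabled; }

    void deliver(String frame) {
        // In this toy a disabled track just drops frames (real tracks may emit black frames).
        if (!enabled) return;
        for (FrameSink sink : sinks) sink.onFrame(frame);
    }
}

// The source (camera, screen, file) pushes every frame to all tracks created from it.
final class FrameSource {
    private final List<FrameTrack> tracks = new ArrayList<>();

    FrameTrack createTrack() {
        FrameTrack track = new FrameTrack();
        tracks.add(track);
        return track;
    }

    void capture(String frame) {
        for (FrameTrack t : tracks) t.deliver(frame);
    }
}
```

Usage mirrors createVideoTrack: create the source, create a track from it, then `addSink(localRender)`; attaching a second sink (say, a file writer) requires no change to the source.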

onConnectedToRoom

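Remember where the PeerConnection creation was triggered? (See the onConnectedToRoomInternal snippet.) It happens in the SignalingEvents.onConnectedToRoom callback, after the client has requested https://appr.tc/join/roomid and received the signaling parameters. Let's return there. In the usual test, the browser that opened https://appr.tc with a randomly generated room ID becomes the room's first participant, so the Android client, joining second, normally takes the createAnswer branch after createPeerConnection. The code calls createOffer / createAnswer / setRemoteDescription, but setLocalDescription is nowhere to be seen. With that question in mind, let's continue through PeerConnectionClient's flow.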

```java
public void createAnswer() {
  executor.execute(() -> {
    if (peerConnection != null && !isError) {
      isInitiator = false;
      peerConnection.createAnswer(sdpObserver, sdpMediaConstraints);
    }
  });
}

public void createOffer() {
  executor.execute(() -> {
    if (peerConnection != null && !isError) {
      isInitiator = true;
      peerConnection.createOffer(sdpObserver, sdpMediaConstraints);
    }
  });
}

public void setRemoteDescription(final SessionDescription sdp) {
  executor.execute(() -> {
    if (peerConnection == null || isError) {
      return;
    }
    String sdpDescription = sdp.description;
    // Modify the remote SDP here as needed.
    SessionDescription sdpRemote = new SessionDescription(sdp.type, sdpDescription);
    peerConnection.setRemoteDescription(sdpObserver, sdpRemote);
  });
}

private class SDPObserver implements SdpObserver {
  @Override
  public void onCreateSuccess(SessionDescription sdp) {
    if (localSdp != null) {
      reportError("LocalSdp has created.");
      return;
    }
    String sdpDescription = sdp.description;
    if (preferIsac) {
      sdpDescription = preferCodec(sdpDescription, AUDIO_CODEC_ISAC, true);
    }
    if (isVideoCallEnabled()) {
      sdpDescription =
          preferCodec(sdpDescription, getSdpVideoCodecName(peerConnectionParameters), false);
    }
    final SessionDescription renewSdp = new SessionDescription(sdp.type, sdpDescription);
    localSdp = renewSdp;
    executor.execute(() -> {
      if (peerConnection != null && !isError) {
        Log.d(TAG, "Set local SDP from " + sdp.type);
        // Apply the codec-adjusted SDP, the same one that is later sent to the peer.
        peerConnection.setLocalDescription(sdpObserver, renewSdp);
      }
    });
  }

  @Override
  public void onSetSuccess() {
    executor.execute(() -> {
      if (peerConnection == null || isError) {
        return;
      }
      if (isInitiator) {
        // For offering peer connection we first create offer and set
        // local SDP, then after receiving answer set remote SDP.
        if (peerConnection.getRemoteDescription() == null) {
          // We've just set our local SDP so time to send it.
          Log.d(TAG, "Local SDP set successfully");
          events.onLocalDescription(localSdp);
        } else {
          // We've just set remote description,
          // so drain remote and send local ICE candidates.
          Log.d(TAG, "Remote SDP set successfully");
          drainCandidates();
        }
      } else {
        // For answering peer connection we set remote SDP and then
        // create answer and set local SDP.
        if (peerConnection.getLocalDescription() != null) {
          // We've just set our local SDP so time to send it, drain
          // remote and send local ICE candidates.
          Log.d(TAG, "Local SDP set successfully");
          events.onLocalDescription(localSdp);
          drainCandidates();
        } else {
          Log.d(TAG, "Remote SDP set successfully");
        }
      }
    });
  }

  @Override
  public void onCreateFailure(String error) {
    reportError("createSDP error: " + error);
  }

  @Override
  public void onSetFailure(String error) {
    reportError("setSDP error: " + error);
  }
}
```

As the implementation shows, the results of PeerConnection's createOffer/createAnswer and setLocalDescription/setRemoteDescription are all handled by one SDPObserver. It has two success callbacks, onCreateSuccess and onSetSuccess; as the names suggest, onCreateSuccess fires after createOffer/createAnswer succeeds, and onSetSuccess fires after setLocalDescription/setRemoteDescription succeeds.
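The onSetSuccess branching, together with the candidate handling behind drainCandidates(), can be modeled as a small state machine. A pure-Java sketch under an assumption: drainCandidates' body is not shown above, so "queue remote candidates until both descriptions are set, then add them and stop queuing" is my assumption of what it does. `SdpFlow` and its event strings are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of SDPObserver.onSetSuccess plus remote-candidate queuing.
final class SdpFlow {
    final boolean isInitiator;
    boolean hasLocal;
    boolean hasRemote;
    // Remote candidates wait here until drainCandidates() runs (assumed behavior).
    private List<String> queuedRemoteCandidates = new ArrayList<>();
    final List<String> events = new ArrayList<>();

    SdpFlow(boolean isInitiator) { this.isInitiator = isInitiator; }

    void addRemoteIceCandidate(String candidate) {
        if (queuedRemoteCandidates != null) {
            queuedRemoteCandidates.add(candidate); // too early: buffer it
        } else {
            events.add("addIceCandidate:" + candidate);
        }
    }

    void setLocalDescription() { hasLocal = true; onSetSuccess(); }

    void setRemoteDescription() { hasRemote = true; onSetSuccess(); }

    // Mirrors the branches of SDPObserver.onSetSuccess in the listing above.
    private void onSetSuccess() {
        if (isInitiator) {
            if (!hasRemote) {
                events.add("onLocalDescription"); // offer set locally: send it
            } else {
                drainCandidates();                // answer applied
            }
        } else {
            if (hasLocal) {
                events.add("onLocalDescription"); // answer set locally: send it
                drainCandidates();
            } else {
                events.add("remoteSet");          // offer applied; createAnswer comes next
            }
        }
    }

    private void drainCandidates() {
        if (queuedRemoteCandidates == null) return;
        for (String c : queuedRemoteCandidates) events.add("addIceCandidate:" + c);
        queuedRemoteCandidates = null;            // later candidates are added directly
    }
}
```

For the answerer in the appr.tc test, the sequence is setRemoteDescription (offer) → createAnswer → setLocalDescription, so the model emits `remoteSet`, then `onLocalDescription`, then any buffered candidates.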

Walking through the normal flow:

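1. After createPeerConnection, createAnswer is called. (The offer from the room response has already been applied with setRemoteDescription; that onSetSuccess only logs "Remote SDP set successfully", because no local SDP exists yet.) createAnswer triggers SDPObserver.onCreateSuccess; at this point the member field localSdp is still null, so localSdp is created and setLocalDescription is called.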

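2. Once localSdp is created and setLocalDescription is called, SDPObserver.onSetSuccess fires. Because this client is not the room's first participant, it takes the isInitiator == false branch; and because setLocalDescription ran in step 1, PeerConnection.getLocalDescription() != null, so PeerConnectionEvents.onLocalDescription(localSdp) is called back into CallActivity: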

```java
// CallActivity implements PeerConnectionClient.PeerConnectionEvents
@Override
public void onLocalDescription(SessionDescription sdp) {
  runOnUiThread(() -> {
    if (appRtcClient != null) {
      if (signalingParameters != null && signalingParameters.initiator) {
        appRtcClient.sendOfferSdp(sdp);
      } else {
        appRtcClient.sendAnswerSdp(sdp);
      }
      // ... ...
    }
  });
}
```

3. Because this client is not the room's first participant, signalingParameters.initiator is false, so sendAnswerSdp is called on the AppRTCClient instance, WebSocketRTCClient:

```java
@Override
public void sendAnswerSdp(final SessionDescription sdp) {
  handler.post(new Runnable() {
    @Override
    public void run() {
      JSONObject json = new JSONObject();
      jsonPut(json, "sdp", sdp.description);
      jsonPut(json, "type", "answer");
      wsClient.send(json.toString());
    }
  });
}

@Override
public void onWebSocketMessage(String message) {
  try {
    JSONObject json = new JSONObject(message);
    String msgText = json.getString("msg");
    String errorText = json.optString("error");
    if (msgText.length() > 0) {
      // The "msg" payload is itself a JSON string, so parse it again.
      json = new JSONObject(msgText);
      String type = json.optString("type");
      if (type.equals("candidate")) {
        events.onRemoteIceCandidate(toJavaCandidate(json));
      } else if (type.equals("remove-candidates")) {
        JSONArray candidateArray = json.getJSONArray("candidates");
        IceCandidate[] candidates = new IceCandidate[candidateArray.length()];
        for (int i = 0; i < candidateArray.length(); ++i) {
          candidates[i] = toJavaCandidate(candidateArray.getJSONObject(i));
        }
        events.onRemoteIceCandidatesRemoved(candidates);
      } else if (type.equals("answer")) {
        if (initiator) {
          SessionDescription sdp = new SessionDescription(
              SessionDescription.Type.fromCanonicalForm(type), json.getString("sdp"));
          events.onRemoteDescription(sdp);
        } else {
          reportError("Received answer for call initiator: " + message);
        }
      } else if (type.equals("offer")) {
        if (!initiator) {
          SessionDescription sdp = new SessionDescription(
              SessionDescription.Type.fromCanonicalForm(type), json.getString("sdp"));
          events.onRemoteDescription(sdp);
        } else {
          reportError("Received offer for call receiver: " + message);
        }
      } else if (type.equals("bye")) {
        events.onChannelClose();
      } else {
        reportError("Unexpected WebSocket message: " + message);
      }
    } else {
      if (errorText.length() > 0) {
        reportError("WebSocket error message: " + errorText);
      } else {
        reportError("Unexpected WebSocket message: " + message);
      }
    }
  } catch (JSONException e) {
    // The org.json constructors and getters above throw the checked JSONException.
    reportError("WebSocket message JSON parsing error: " + e.toString());
  }
}
```

4. wsClient is the communication object that connects to wssUrl over a WebSocket; as analyzed earlier, the service behind wssUrl exchanges signaling parameters between the participants in a room. onWebSocketMessage handles each message type and dispatches the matching callback. Here, however, the message received is not of type "answer": answers only go to the initiator, and this client is the answerer. If you're interested, print every message this callback receives; it helps a lot in understanding the whole signaling exchange.

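I'll give the answer directly: it may be a "candidate" or an "offer" message, or possibly no message at all. "candidate" messages are the most likely case. An "offer", if one arrives, usually comes right after the WebSocket connection to wssUrl is established; it is passed to CallActivity.onRemoteDescription, which calls PeerConnectionClient.createAnswer() again. Some readers may be puzzled: didn't step 1 already call createAnswer? It did, but this time, when SDPObserver.onCreateSuccess fires, localSdp != null, so the guard reports an error and returns without sending anything. At this point both the local and the remote SDP have been set successfully.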
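To see what actually travels over wssUrl, here is a pure-Java sketch of the JSON involved. The answer payload mirrors sendAnswerSdp above ("sdp" and "type"). The outgoing "register" and "send" envelopes ("cmd", "roomid", "clientid", "msg") reflect my reading of WebSocketChannelClient and should be treated as assumptions. `SignalMessages` is hypothetical and builds JSON by hand, with minimal escaping, only to stay dependency-free; the demo itself uses org.json.

```java
// Hypothetical, dependency-free builders for AppRTC-style signaling JSON.
final class SignalMessages {
    private SignalMessages() {}

    // Minimal JSON string quoting: quotes, backslashes and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Payload built by sendAnswerSdp: {"sdp": ..., "type": "answer"}.
    static String answer(String sdp) {
        return "{\"sdp\":" + quote(sdp) + ",\"type\":\"answer\"}";
    }

    // Assumed registration command sent once the WebSocket is open.
    static String register(String roomId, String clientId) {
        return "{\"cmd\":\"register\",\"roomid\":" + quote(roomId)
                + ",\"clientid\":" + quote(clientId) + "}";
    }

    // Assumed envelope: the payload travels as a string inside "msg".
    static String send(String payload) {
        return "{\"cmd\":\"send\",\"msg\":" + quote(payload) + "}";
    }
}
```

Because the payload is wrapped as a string inside "msg" (SDP line breaks become `\r\n` escapes), the receiver has to parse twice, which is exactly what onWebSocketMessage does with `new JSONObject(msgText)`.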

Is that the end?

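This article ends here, but Android WebRTC still has a great deal worth digging into. A series of follow-up articles will record my learning process, focusing on network connectivity and transport, video encoding and decoding, and audio processing (echo cancellation and noise suppression).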

The next article will most likely cover the WebRTC Java API and open the door to the jingle_peerconnection_so source code.

That is all.