Why does my convolutional neural network's prediction function return the same value for every sample?

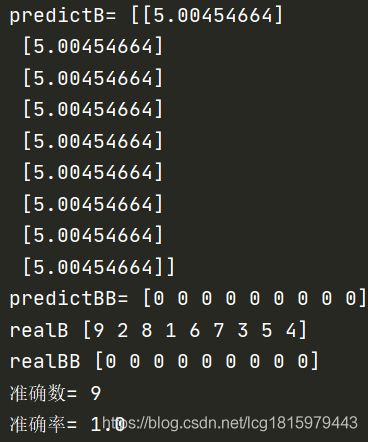

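Every value in the predict column is identical. Is this overfitting or underfitting? I'm a complete beginner and have been stuck on this for a month, so any guidance would be appreciated.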

1. Test code:

    import joblib
    import numpy as np
    from sklearn.metrics import accuracy_score

    from com.lcg.version11 import model
    from com.lcg.version11 import Loading_pictures as lp

    # Load the trained model
    # dnn = joblib.load("D:/python/data/model/model1.model")
    # dnn = joblib.load("D:/python/data/model/model2.model")
    dnn = joblib.load("D:/python/data/model/model3.model")

    # Load the test data set
    A, B = lp.loaddata("D:/python/data/testdata")

    predictB = dnn.BatchPredict(A)
    print("predictB=", predictB)

    # Convert each output vector to a class index
    predictBB = np.array([np.argmax(one_hot) for one_hot in predictB])
    print("predictBB=", predictBB)

    realB = B
    print("realB", realB)
    realBB = np.array([np.argmax(one_hot) for one_hot in realB])
    print("realBB", realBB)

    # accuracy_score expects (y_true, y_pred)
    print("correct count =", accuracy_score(realBB, predictBB, normalize=False))
    print("accuracy =", accuracy_score(realBB, predictBB))
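One thing that may be worth checking: the training script ends the network with `md.DDense(1)`, so each row of `predictB` probably holds a single value. If so, `np.argmax` returns 0 for every row no matter what the network outputs, which on its own would make `predictBB` constant. The sketch below uses made-up numbers, not the real model output, and the 0.5 threshold is only an assumption that fits binary 0/1 labels.

```python
import numpy as np

# Hypothetical stand-in for predictB: 4 samples, one output value each,
# as a final single-unit dense layer would produce.
predictB = np.array([[0.12], [0.87], [0.45], [0.99]])

# argmax over a length-1 vector can only ever return index 0.
predictBB = np.array([np.argmax(row) for row in predictB])
print(predictBB)  # [0 0 0 0]

# For a single-output model, thresholding is one way to get class labels
# (assumes binary labels encoded as 0 and 1).
labels = (predictB.ravel() > 0.5).astype(int)
print(labels)  # [0 1 0 1]
```

Printing `predictB.shape` in the test script would show whether this applies to the real model.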

2. Training code:

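    import joblib
    # Assumed import: the test script imports this module as `model`,
    # and `md` is taken to be its alias here.
    from com.lcg.version11 import model as md

    # X, Y: training images and labels, loaded beforehand (loading code not shown).

    # --------- Training run 1 ---------
    dnn = md.DNN()
    # dnn.Add(CNN2D(3,3,2,10))
    # Convolution
    dnn.Add(md.CNN2D_MultiLayer(4, 4, 2, 10))
    # Pooling
    dnn.Add(md.DMaxPooling2D(2, 2))
    yy = dnn.Forward(X[0])   # forward pass on one sample through the layers added so far
    dnn.Add(md.CNN2D_MultiLayer(3, 3, 1, 5))
    yy2 = dnn.Forward(X[0])
    dnn.Add(md.DFlatten())
    # yy=dnn.Forward(X[0])
    dnn.Add(md.DDense(10, 'relu', bFixRange=False))
    dnn.Add(md.DDense(1))
    # dnn.Add(DDense(1,'relu'))
    # yy=dnn.BatchPredict(X)
    dnn.Compile()            # lossMethod defaults to 'MSE'
    # ratio=dnn.AdjustWeightByComputingRatio(X,Y)
    # print(ratio)
    dnn.Fit(X, Y, 50)

    # Persist the trained model
    joblib.dump(dnn, "D:/python/data/model/model3.model")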
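A second thing to rule out is a shape mismatch between the network output and the labels. The test script applies `np.argmax` to `realB`, which suggests one-hot labels, while the last layer here is `md.DDense(1)`. Whether the training `Y` is one-hot as well is not shown, so this is only an assumption. The sketch below, with hypothetical arrays, shows that NumPy broadcasts a length-1 output against a longer label vector without any error, so a squared-error loss would not complain. `check_shapes` is a helper invented for this example, not part of the original code.

```python
import numpy as np

# Hypothetical shapes: a single-output network and a 3-class one-hot label.
y_pred = np.array([0.4])            # shape (1,), like a single-unit output layer
y_true = np.array([0.0, 1.0, 0.0])  # shape (3,), one-hot

# NumPy broadcasts (1,) against (3,) silently instead of raising an error.
squared_error = (y_pred - y_true) ** 2
print(squared_error.shape)  # (3,)


def check_shapes(pred, target):
    """Raise early if the model output and the label differ in shape."""
    pred, target = np.asarray(pred), np.asarray(target)
    if pred.shape != target.shape:
        raise ValueError(f"output shape {pred.shape} != label shape {target.shape}")
```

Calling something like `check_shapes(dnn.Forward(X[0]), Y[0])` before `dnn.Fit` would surface such a mismatch immediately.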

3. The DNN model class, which contains the prediction function:

    import numpy as np

    # myDebug and the loss classes MSE, CrossEntropy and SoftmaxCrossEntropy
    # are defined elsewhere in the module (not shown).

    class DNN:
        def __init__(self):
            self.layers = []

        # Append a layer after the previous one
        def Add(self, layer):
            self.layers.append(layer)

        def Forward(self, X):
            if myDebug == 1:
                print('dnn forward', X.shape)
            nL = len(self.layers)
            y = X
            for i in range(nL):
                y = self.layers[i].Forward(y)
            return y

        # Batch prediction: run Forward on each sample in turn
        def BatchPredict(self, X):
            self.predictY = []
            for k in range(X.shape[0]):
                self.predictY.append(self.Forward(X[k]))
            # print(np.array(self.predictY))
            self.predictY = np.array(self.predictY)
            return self.predictY

        def Compile(self, lossMethod='MSE'):
            # self.lossModel=Entropy()
            if lossMethod == 'MSE':
                self.lossModel = MSE()
            if lossMethod == 'CrossEntropy':
                self.lossModel = CrossEntropy()
            if lossMethod == 'SoftmaxCrossEntropy':
                self.lossModel = SoftmaxCrossEntropy()

        def FitOneRound(self, X, Y, iRound, epochs):
            loss = 0
            nL = len(self.layers)
            for k in range(X.shape[0]):
                y = self.Forward(X[k])
                loss += self.lossModel.loss(nx=y, ny=Y[k])
                dy = self.lossModel.backward()
                # print('k=',k,'y=',y,'realY=',Y[k],'dy=',dy)
                # dy=np.min(-1,np.max(dy,1))
                # For a single-output network, replace a large gradient by +1 or -1
                if (y.shape[0] == 1) and (np.linalg.norm(dy, 1) > 1):
                    if y > Y[k]:
                        dy = 1
                    else:
                        dy = -1
                # Linearly decaying step size; it is not used in the code shown here
                step = 0.75 * (epochs - iRound) / epochs + 0.01
                # Backpropagate from the last layer to the first, updating each layer
                for i in range(nL):
                    dy = self.layers[nL - i - 1].Backward(dy)
                    self.layers[nL - i - 1].Learn()
            # Report the loss about ten times per run and on the final round;
            # max(1, ...) avoids a zero modulus when epochs < 10
            if (iRound % max(1, epochs // 10) == 0) or (iRound == epochs - 1):
                print('round=', iRound, 'loss=', loss)
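The branch in `FitOneRound` that overwrites `dy` with `1` or `-1` swaps a NumPy array for a plain Python integer. The commented-out `np.min(-1, np.max(dy, 1))` would not clamp the value either, because the second positional argument of `np.min` and `np.max` is `axis`, not a bound. If the intent is to bound the gradient, `np.clip` does that while keeping the array shape. This is a minimal sketch, and `clip_gradient` and `limit` are names invented here, not part of the original model.

```python
import numpy as np


def clip_gradient(dy, limit=1.0):
    """Clamp each gradient component to [-limit, limit], keeping the array shape."""
    return np.clip(np.asarray(dy, dtype=float), -limit, limit)


print(clip_gradient(np.array([3.2])))   # [1.]
print(clip_gradient(np.array([-0.4])))  # [-0.4]
```

Small gradients pass through unchanged and the result stays an array, so each layer's `Backward` always receives the same kind of input.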