[NLP] Tianchi Learning Competition: News Text Classification with Machine Learning

目录

1、读取数据

查看句子长度

2、可视化

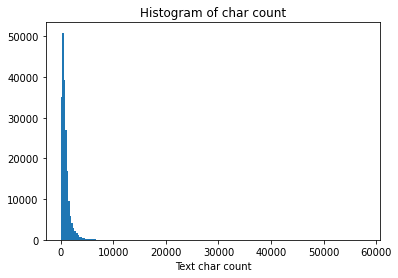

2.1、新闻的字数分布

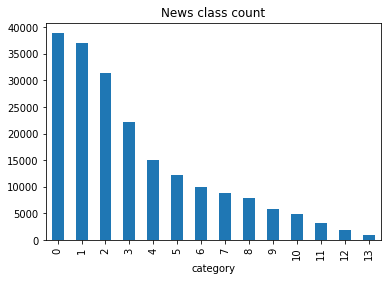

2.2、新闻文本类别统计

3、数据分析

3.1、统计每个字符出现的次数

3.2、统计不同字符在句子中出现的次数

4、文本特征提取

4.1、CountVectors+RidgeClassifier

4.2、TF-IDF + RidgeClassifier

4.3、MultinomialNB +CountVectors

4.4、MultinomialNB +TF-IDF

4.5、 绘图

1. Reading the data

import pandas as pd

import seaborn as sns

# Pass nrows=100 to read_csv to load only the first 100 rows; here the full file is read

train_df = pd.read_csv('新建文件夹/天池—新闻文本分类/train_set.csv', sep='\t')

print(train_df.head())

   label                                               text
0      2  2967 6758 339 2021 1854 3731 4109 3792 4149 15...
1     11  4464 486 6352 5619 2465 4802 1452 3137 5778 54...
2      3  7346 4068 5074 3747 5681 6093 1777 2226 7354 6...
3      2  7159 948 4866 2109 5520 2490 211 3956 5520 549...
4      3  3646 3055 3055 2490 4659 6065 3370 5814 2465 5...
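For quick experiments it helps to load only part of the file. The sketch below shows how `sep` and `nrows` behave on a small in-memory stand-in for `train_set.csv`; the `raw` string and the name `sample_df` are made up for illustration and are not part of the dataset.

```python
import io

import pandas as pd

# Hypothetical three-row file in the same layout: a tab-separated label and text column.
raw = "label\ttext\n2\t2967 6758 339\n11\t4464 486 6352\n3\t7346 4068 5074\n"

# sep='\t' splits columns on tabs; nrows=2 stops after the first two data rows.
sample_df = pd.read_csv(io.StringIO(raw), sep='\t', nrows=2)

print(sample_df.shape)
print(sample_df['label'].tolist())
```

The later experiments use the same idea with `nrows = 15000` to keep vectorization fast.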

Checking sentence length

# Sentence length analysis

train_df['text_len'] = train_df['text'].apply(lambda x: len(x.split(' ')))

print(train_df['text_len'].describe())

# The mean length is about 907 characters

count    200000.000000
mean        907.207110
std         996.029036
min           2.000000
25%         374.000000
50%         676.000000
75%        1131.000000
max       57921.000000
Name: text_len, dtype: float64
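Since the maximum length (57,921) is far above the 75th percentile (1,131), upper quantiles are a more useful guide than the mean when deciding how much text to keep per article. The following is a minimal sketch on made-up token strings; `texts` is an illustrative stand-in for `train_df['text']`, not real data.

```python
import pandas as pd

# Toy stand-in for train_df['text']: space-separated character IDs.
texts = pd.Series(["1 2 3", "4 5", "6 7 8 9 10", "11", "12 13 14 15"])

# Same length definition as above: number of space-separated tokens.
text_len = texts.str.split(' ').str.len()

# Upper quantiles show how long a cutoff must be to cover most articles.
quantiles = text_len.quantile([0.5, 0.9, 0.95])
print(quantiles)
```

On the real data the same call would be `train_df['text_len'].quantile([0.5, 0.9, 0.95])`.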

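2. Visualization

2.1 Distribution of article length

The histogram shows that articles longer than 10,000 characters are very rare; most are shorter than 5,000.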

import matplotlib.pyplot as plt

_ = plt.hist(train_df['text_len'], bins=200)

plt.xlabel('Text char count')

plt.title("Histogram of char count")

plt.show()

2.2 News category counts

train_df['label'].value_counts().plot(kind = 'bar')

plt.title('News class count')

plt.xlabel('category')

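plt.show()

The labels in the dataset map to categories as follows: {'Technology': 0, 'Stocks': 1, 'Sports': 2, 'Entertainment': 3, 'Politics': 4, 'Society': 5, 'Education': 6, 'Finance': 7, 'Home': 8, 'Games': 9, 'Real estate': 10, 'Fashion': 11, 'Lottery': 12, 'Horoscope': 13}

- The chart shows that technology, stocks, and sports account for the largest share of articles.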
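A bar chart of raw counts shows the imbalance, but proportions make it easier to quantify. The sketch below uses `value_counts(normalize=True)` on a made-up label column; `labels` and `imbalance_ratio` are illustrative names, and the values are not taken from the dataset.

```python
import pandas as pd

# Toy labels standing in for train_df['label'].
labels = pd.Series([0, 0, 0, 0, 1, 1, 1, 2, 2, 13])

# normalize=True returns each class's share of the rows instead of its count.
shares = labels.value_counts(normalize=True)

# Ratio between the largest and smallest class, a rough measure of imbalance.
imbalance_ratio = shares.max() / shares.min()

print(shares)
print(imbalance_ratio)
```

A large ratio is one reason to evaluate with a metric that treats all classes equally, as the macro-averaged F1 used later does.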

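3. Data analysis

3.1 Counting occurrences of each character

- `Counter` is a class in `collections` that counts how many times each distinct element occurs in a list, string, or other iterable. Its result can be read as a dictionary: {"character": number of occurrences}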
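A small illustration of how `Counter` behaves on space-separated character IDs. The `text` value is made up; `most_common` is a built-in `Counter` method that gives the same descending order as sorting the items by count.

```python
from collections import Counter

# Toy article made of space-separated character IDs.
text = "3750 648 900 3750 3750 648"

# Split on spaces and count each distinct ID.
counts = Counter(text.split(' '))

print(counts['3750'])
# most_common(n) returns the n most frequent (element, count) pairs.
print(counts.most_common(2))
```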

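# Count how many times each character occurs

from collections import Counter

# Join all articles into one space-separated string

all_lines = ' '.join(list(train_df['text']))

# Split on spaces and count the characters

# Counter returns a dict-like object: keys are elements, values are their counts

word_count = Counter(all_lines.split(' '))

# Sort by count in descending order

word_count = sorted(word_count.items(), key=lambda d: d[1], reverse=True)

# Print the number of distinct characters

print('len(word_count) : ', len(word_count))

# Print the most frequent character and its count

print('word_count[0]:', word_count[0])

# Print the least frequent character and its count

print('word_count[-1]:', word_count[-1])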

'''

len(word_count) : 6869

word_count[0]: ('3750', 7482224)

word_count[-1]: ('3133', 1)'''

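# The training set contains 6,869 distinct characters. Character 3750 occurs most often, and character 3133 occurs least often (once).

3.2 Counting how many articles each character appears in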

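- `list(set(...))` removes duplicates from the original list. The resulting order is arbitrary; a set does not sort its elements.

- Each text is split on spaces, deduplicated into an unordered list, and joined back with spaces, so every character counts at most once per article.

- Characters that appear in nearly every article are probably punctuation. Characters 3750, 900, and 648 appear in about 99%, 99%, and 96% of the 200,000 articles respectively, so they are very likely punctuation marks.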
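The difference between total occurrences and per-article coverage can be sketched on toy data. The `docs` list below is made up, and `term_freq`, `doc_freq`, and `coverage` are illustrative names rather than variables from the original notebook.

```python
from collections import Counter

# Three toy articles of space-separated character IDs.
docs = ["3750 900 3750 12", "900 648 3750", "12 12 12"]

# Term frequency: every occurrence counts.
term_freq = Counter(tok for doc in docs for tok in doc.split(' '))

# Document frequency: set() keeps each character at most once per article.
doc_freq = Counter(tok for doc in docs for tok in set(doc.split(' ')))

# Coverage: fraction of articles that contain the character.
coverage = {tok: n / len(docs) for tok, n in doc_freq.items()}

print(term_freq['12'], doc_freq['12'])
print(coverage['3750'])
```

Character `12` occurs four times in total but only in two articles, which is why coverage, not raw frequency, is the better signal for punctuation-like characters.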

# Count how many articles each character appears in

train_df['text_unique'] = train_df['text'].apply(lambda x : ' '.join(list(set(x.split(' ')))))

all_lines = ' '.join(list(train_df['text_unique']))

word_count = Counter(all_lines.split(' '))

# Sort by count in descending order

word_count = sorted(word_count.items(),key = lambda d :int(d[1]),reverse = True)

# Print the three characters that appear in the most articles

print('word_count[0]:',word_count[0])

print('word_count[1]:',word_count[1])

print('word_count[2]:',word_count[2])

'''word_count[0]: ('3750', 197997)

word_count[1]: ('900', 197653)

word_count[2]: ('648', 191975)'''

4. Text feature extraction

4.1 CountVectors + RidgeClassifier

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer(max_features = 3000,ngram_range=(1,3))

train_text = vectorizer.fit_transform(train_df['text'])

#CountVectors+RidgeClassifier

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import RidgeClassifier

from sklearn.metrics import f1_score

from sklearn.model_selection import train_test_split

df = pd.read_csv('新建文件夹/天池—新闻文本分类/train_set.csv', sep='\t',nrows = 15000)

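# Count how often each token occurs and represent each text as a bag-of-words count vector (the feature set)

# max_features=3000: keep only the 3,000 most frequent terms in the corpus

# ngram_range=(1, 3): count unigrams, bigrams, and trigrams

vectorizer = CountVectorizer(max_features = 3000,ngram_range=(1,3))

# Vectorize df (the 15,000 rows that supply the labels), not train_df, so features and labels have the same length

train_text = vectorizer.fit_transform(df['text'])

X_train,X_val,y_train,y_val = train_test_split(train_text,df.label,test_size = 0.3)

# Fit a ridge classifier on the training split (text features and labels)

clf = RidgeClassifier()

clf.fit(X_train,y_train)

val_pred = clf.predict(X_val)

f1_score_cv = f1_score(y_val,val_pred,average = 'macro')

print(f1_score_cv)

4.2 TF-IDF + RidgeClassifier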

#TF-IDF + RidgeClassifier

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import RidgeClassifier

from sklearn.metrics import f1_score

df = pd.read_csv('新建文件夹/天池—新闻文本分类/train_set.csv', sep='\t',nrows = 15000)

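# TF-IDF features over unigrams, bigrams, and trigrams; the name must match the train_text used in the split below

train_text = TfidfVectorizer(ngram_range=(1,3),max_features = 3000).fit_transform(df.text)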

X_train,X_val,y_train,y_val = train_test_split(train_text,df.label,test_size = 0.3)

clf = RidgeClassifier()

clf.fit(X_train,y_train)

val_pred = clf.predict(X_val)

f1_score_tfidf = f1_score(y_val,val_pred,average = 'macro')

print(f1_score_tfidf)

4.3 MultinomialNB + CountVectors
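`MultinomialNB` models each class as a distribution over token counts and, by default, applies Laplace smoothing (`alpha=1.0`) so unseen tokens do not zero out a class. A minimal sketch on made-up documents, with illustrative labels and names, shows the fit and predict flow on count features:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Toy corpus: class 0 uses IDs 100/200, class 1 uses IDs 300/400.
docs = ["100 200 100", "200 100 100", "300 400 300", "400 300 300"]
labels = [0, 0, 1, 1]

# Turn the texts into token-count vectors.
vec = CountVectorizer()
X = vec.fit_transform(docs)

# alpha=1.0 (the default) adds one pseudo-count per token and class.
clf = MultinomialNB()
clf.fit(X, labels)

# New texts must be transformed with the already fitted vectorizer.
pred = clf.predict(vec.transform(["100 100 200", "300 300 400"]))
print(pred)
```

Note that new text goes through `vec.transform`, not `fit_transform`, so the feature columns line up with those seen during training.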

from sklearn.naive_bayes import MultinomialNB

df = pd.read_csv('新建文件夹/天池—新闻文本分类/train_set.csv', sep='\t',nrows = 15000)

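# Count how often each token occurs and represent each text as a bag-of-words count vector (the feature set)

# max_features=3000: keep only the 3,000 most frequent terms in the corpus

# ngram_range=(1, 3): count unigrams, bigrams, and trigrams

vectorizer = CountVectorizer(max_features = 3000,ngram_range=(1,3))

# Vectorize df (the 15,000 rows that supply the labels), not train_df, so features and labels have the same length

train_text = vectorizer.fit_transform(df['text'])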

X_train,X_val,y_train,y_val = train_test_split(train_text,df.label,test_size = 0.3)

clf = MultinomialNB()

clf.fit(X_train,y_train)

val_pre_CountVec_NBC = clf.predict(X_val)

score_f1_CountVec_NBC = f1_score(y_val,val_pre_CountVec_NBC,average='macro')

print('CountVec + MultinomialNB : %.4f' %score_f1_CountVec_NBC )

4.4 MultinomialNB + TF-IDF

df = pd.read_csv('新建文件夹/天池—新闻文本分类/train_set.csv', sep='\t',nrows = 15000)

# TF-IDF features; the name must match the train_text used in the split below

train_text = TfidfVectorizer(ngram_range=(1,3),max_features = 3000).fit_transform(df.text)

X_train,X_val,y_train,y_val = train_test_split(train_text,df.label,test_size = 0.3)

clf = MultinomialNB()

clf.fit(X_train,y_train)

val_pre_tfidf_NBC = clf.predict(X_val)

score_f1_tfidf_NBC = f1_score(y_val,val_pre_tfidf_NBC,average='macro')

print('TF-IDF + MultinomialNB : %.4f' %score_f1_tfidf_NBC )

4.5 Plotting

import matplotlib.pyplot as plt

import numpy as np

%matplotlib inline

scores = [f1_score_cv , f1_score_tfidf , score_f1_CountVec_NBC , score_f1_tfidf_NBC]

x_ticks = np.arange(4)

x_ticks_label = ['CountVec_RidgeClassifier','tfidf_RidgeClassifier','CountVec_NBC','tfidf_NBC']

plt.plot(x_ticks,scores)

plt.xticks(x_ticks, x_ticks_label, fontsize=8) # set the tick label font size

plt.ylabel('F1_score')

plt.show()
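All four scores compared above use `average='macro'`, which computes F1 separately for each class and then takes the unweighted mean, so small classes count as much as large ones. The sketch below works through this on made-up predictions; `y_true` and `y_pred` are illustrative and unrelated to the competition results.

```python
from sklearn.metrics import f1_score

# Toy ground truth and predictions: class 0 is twice as common as class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]

# average=None returns one F1 value per class.
per_class = f1_score(y_true, y_pred, average=None)

# Macro: unweighted mean of the per-class F1 values.
macro = f1_score(y_true, y_pred, average='macro')

# Micro: computed from global counts; equals accuracy for single-label data.
micro = f1_score(y_true, y_pred, average='micro')

print(per_class, macro, micro)
```

Here class 0 has precision and recall of 3/4 and class 1 has 1/2, so the macro score is (0.75 + 0.5) / 2 = 0.625, while the micro score equals the accuracy of 4/6.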