ResNet应用——猫十二分类

残差思想

修改输入比重构整个输出更容易(锦上添花 比 雪中送炭 容易太多)

注意

本文展现的是做此题的大概流程,准确率并不高。为提高准确率使用了迁移学习 + ResNet。此文章目前准确率能达到93.4328%

题目出自Paddle猫十二分类,但实在不习惯Paddle,故用Pytorch重新写一遍。

0. 库函数

# 路径操作

import os

# 文件操作

import shutil

# torch相关

import torch

import torch.nn as nn

import torchvision

from torchvision import transforms

from torch.autograd import Variable

# 日志

import logging

import random

import numpy as np

import matplotlib.pyplot as plt

import cv2

import math

from PIL import Image, ImageEnhance

1. 数据部分

1.1 获得数据

由于数据是paddle的需要现下载。数据

# 获取预训练模型

!wget http://paddle-imagenet-models-name.bj.bcebos.com/ResNet101_pretrained.tar

!tar -xvf ResNet101_pretrained.tar

# 解压数据集图片至当前文件夹

!unzip data/cat_12_train.zip

!unzip data/cat_12_test.zip

- 统一配置超参数,待用

train_core1 = {

# 输入大小,因各图片尺寸不一,最好能保持一致

"input_size" : [3, 224, 224],

# 分类数

"class_dim" : 12,

# 学习率

"lr":0.0002,

# 使用GPU

"use_gpu": True,

# 前期的训练轮数

"num_epochs": 5,

# 当达到想要的准确率就立刻保存下来当时的模型

"last_acc":0.4

}

1.2 初始化日志

用于记录和调试

# 设置log全局变量

global logger

logger = logging.getLogger()

logger.setLevel(logging.INFO) # 定义日志的级别。Info显示当程序运行时期望的一些信息

# 生成log文件夹

log_path = os.path.join(os.getcwd(), 'logs')

if not os.path.exists(log_path):

os.makedirs(log_path)

# 生成log文件

log_name = os.path.join(log_path, 'train_log') # log文件名称定义为 train_log

fh = logging.FileHandler(log_name, mode='w') # file handler 以 写 模式将文件分配至正确的目的地

fh.setLevel(logging.DEBUG)

# 声明日志格式

formatter = logging.Formatter("%(asctime)s - %(filename)s[line:%(lineno)d] - %(levelname)s: %(message)s") # levelname指前面添加的'INFO',message指下方添加的train_core1

fh.setFormatter(formatter)

logger.addHandler(fh)

# 记录此次运行的超参,方便日后做记录进行比对

logger.info(train_core1)

2022-02-28 10:59:26,853 - 2874012559.py[line:23] - INFO: {‘input_size’: [3, 224, 224], ‘class_dim’: 12, ‘lr’: 0.0002, ‘use_gpu’: True, ‘num_epochs’: 5, ‘last_acc’: 0.4}

日志内容不显示只保存在log文件中

1.3 分割数据集

train_ratio = 0.7

train = open('./data/train_split_list.txt', 'w')

val = open('./data/val_split_list.txt', 'w')

with open("./data/train_list.txt") as f:

for line in f.readlines():

if random.uniform(0, 1) <= train_ratio:

train.write(line)

else:

val.write(line)

train.close()

val.close()

1.4 图像处理部分

差不多是手写了一遍transforms。

random.seed(0)

np.random.seed(0)

DATA_DIM = 224

THREAD = 8

BUF_SIZE = 102400

# 为什么0-255的像素值的mean和std在(0, 1)---->见157行

img_mean = np.array([0.485, 0.456, 0.406]).reshape((3, 1, 1))

img_std = np.array([0.229, 0.224, 0.225]).reshape((3, 1, 1))

'''

[[[0.485]],

[[0.456]],

[[0.406]]]

'''

1.4.1 放缩

# 简单放缩

def resize_short(img, target_size):

'''

根据输入的img和target_size, 返回经过多相位图象插值算法处理过后的放大或缩小的图片

'''

percent = float(target_size) / min(img.size[0], img.size[1])

resized_width = int(round(img.size[0] * percent))

resized_height = int(round(img.size[1] * percent))

img = img.resize((resized_width, resized_height), Image.LANCZOS) # LANCZOS 多相位图象插值算法

return img

1.4.2 裁剪

# 裁剪

def crop_image(img, target_size, center):

'''

center表示中心,否则随机裁剪

target表示裁剪后的尺寸

'''

width, height = img.size

size = target_size

if center == True:

w_start = (width - size) / 2

h_start = (height - size) / 2

else:

w_start = np.random.randint(0, width - size + 1)

h_start = np.random.randint(0, height - size + 1)

w_end = w_start + size

h_end = h_start + size

img = img.crop((w_start, h_start, w_end, h_end))

return img

1.4.3 随机裁剪

# 随机裁剪

def random_crop(img, size, scale = [0.08, 1.0], ratio = [3. /4., 4. / 3.]):

'''

通过一系列骚操作,确定随即裁剪的起始点和裁剪长和裁剪宽

返回裁剪区域经过resize的统一尺寸图像

'''

aspect_ratio = math.sqrt(

np.random.uniform(*ratio) # 在ratio = [0.75, 1.333]之间随机生成浮点数。*是解引用、等同于(ratio[0], ratio[1])

) # 0.86 -- 1.15

w = 1.0 * aspect_ratio

h = 1.0 / aspect_ratio

bound = min(

float(img.size[0] / img.size[1]) / (w ** 2),

float(img.size[1] / img.size[0]) / (h ** 2),

)

scale_max = min(scale[1], bound)

scale_min = min(scale[0], bound)

# 确定裁剪区域

target_area = img.size[0] * img.size[1] * np.random.uniform(scale_min, scale_max)

# 确定裁剪大小

target_size = math.sqrt(target_area)

w = int(target_size * w) # 裁剪的宽

h = int(target_size * h) # 裁剪的高

i = np.random.randint(0, img.size[0] - w + 1)

j = np.random.randint(0, img.size[1] - h + 1)

img = img.crop((i, j, i+w, j+h))

img = img.resize((size, size), Image.LANCZOS)

return img

1.4.4 旋转

# 旋转角度

def rotate_image(img):

angle = np.random.randint(-10, 11)

img = img.rotate(angle)

return img

1.4.5 颜色增强

# 颜色增强

def distort_color(img):

'''

数据增强

随机改变图片颜色的参数,如曝光度,对比度,颜色

'''

def random_brightness(img, lower = 0.5, upper = 1.5):

e =np.random.uniform(lower, upper) # 增强幅度

return ImageEnhance.Brightness(img).enhance(e)

def random_contrast(img, lower = 0.5, upper = 1.5):

e =np.random.uniform(lower, upper) # 增强幅度

return ImageEnhance.Contrast(img).enhance(e)

def random_color(img, lower = 0.5, upper = 1.5):

e =np.random.uniform(lower, upper) # 增强幅度

return ImageEnhance.Color(img).enhance(e)

# 随机选择一种增强顺序

ops = [random_brightness, random_contrast, random_color]

np.random.shuffle(ops)

img = ops[0](img)

img = ops[1](img)

img = ops[2](img)

return img

1.4.6 综合处理

# 处理图片

def process_image(sample, mode, color_jitter, rotate):

'''

: params

sample: (图片地址, label)

mode: train or val or test

color_jitter: 颜色增强(0 or 1)

rotate: 0 or 1

: return

train和val模式返回: img, label

test: img

'''

img_path = sample[0]

img = Image.open(img_path)

# 图像增强

if mode == 'train':

if rotate:

img = rotate_image(img)

img = random_crop(img, DATA_DIM)

else:

# val and test

img = resize_short(img, target_size=256)

img = crop_image(img, target_size=DATA_DIM, center=True)

if mode == 'train':

if color_jitter:

img = distort_color(img)

if np.random.randint(0, 2) == 1:

# 以百分之五十的概率镜像图片

img = img.transpose(Image.FLIP_LEFT_RIGHT)

# 非三通道转换为三通道

if img.mode != 'RGB':

img = img.convert('RGB')

# 将图像从(w, h, c) 变为 (c, w, h),并缩放到0 1区间

img = np.array(img).astype('float32').transpose((2, 0, 1)) / 255

# 0,1区间内像素值标准化

img -= img_mean

img /= img_std

if mode == 'train' or mode == 'val':

return img, int(sample[1])

elif mode == 'test':

return [img]

1.5 生成数据集

def load_data(mode = 'train', shuffle = False, color_jitter = False, rotate = False):

'''

:return : img, label

img: (channel, w, h)

'''

filelist = './data/%s_split_list.txt' % mode

imgs = []

labels = []

data_dir = os.getcwd()

if mode == 'train' or mode == 'val':

with open(filelist) as flist:

lines = [line.strip() for line in flist]

if shuffle:

np.random.shuffle(lines)

for line in lines:

img_path, label = line.split('\t')

img_path = img_path.replace('JEPG', 'jepg')

img_path = os.path.join(data_dir, img_path)

try:

img, label = process_image((img_path, label), mode, color_jitter, rotate)

imgs.append(img)

labels.append(label)

except:

print(img_path)

continue

return imgs, labels

elif mode == 'test':

full_lines = os.listdir('./cat_12_test')

lines = [line.strip() for line in full_lines]

for img_path in lines:

img_path = img_path.replace('JEPG', 'jepg')

img_path = os.path.join(data_dir, "cat_12_test/",img_path)

# try:

# img= process_image((img_path, label), mode, color_jitter, rotate)

# imgs.append(img)

# except:

# print(img_path)

img= process_image((img_path, 0), mode, color_jitter, rotate)

imgs.append(img)

return imgs

# 训练集

trainset = load_data(mode='train', shuffle=True, color_jitter=True, rotate=True)

# 验证集

valset = load_data(mode='val', shuffle=False, color_jitter=False, rotate=False)

# 测试集

testset = load_data(mode='test', shuffle=False, color_jitter=False, rotate=False)

2. 模型部分

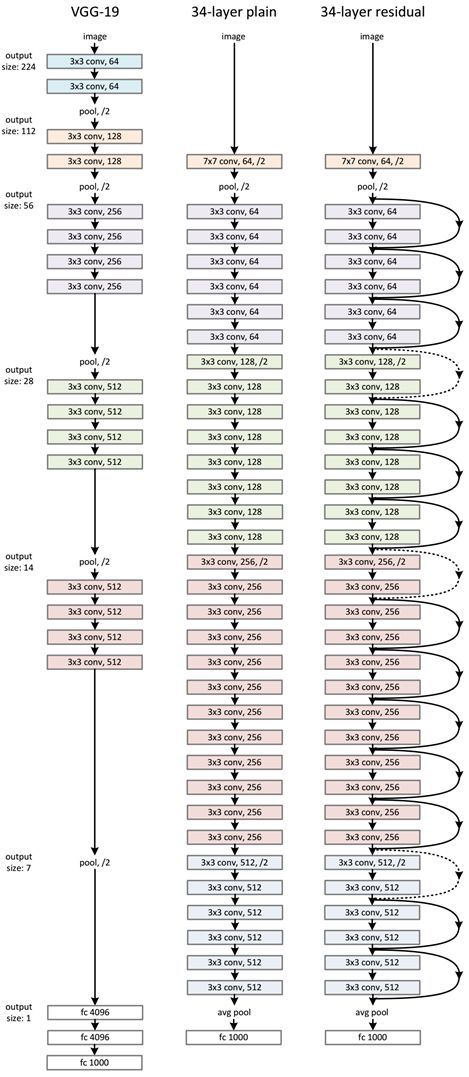

使用残差神经网络ResNet50进行预测。ResNet解决了CNN模型深度较大时难训练的问题。

2.1 退化问题

从经验来看,网络的深度对模型的性能至关重要,当增加网络层数后,模型理论上可以取得更好的结果,但实际上,网络深度增加时,网络准确度出现饱和,甚至出现下降,这就是退化问题。而且这不同于过拟合,因为它的训练误差同样很高

2.2 残差学习

假设对于一个问题,你通过堆积层数来建立深层网络。极端一点,后续的网络不进行学习,只复制浅层网络的特征(称为恒等映射),那么它的性能至少不能低于浅层网络,即不能出现退化现象。如此一来,不得不承认是目前训练深层网络的方法有问题。

对于一个堆积层结构(几层堆积而成)当输入为 x x x 时其学习到的特征(结果)记为 H ( x ) H(x) H(x) ,现在我们希望其可以学习到残差 F ( x ) = H ( x ) − x F(x) = H(x) - x F(x)=H(x)−x ,这样其实原始的学习特征是 H ( x ) = F ( x ) + x H(x) = F(x) + x H(x)=F(x)+x ,即目标为 F ( x ) + x F(x) + x F(x)+x

之所以这样设计是因为,当残差( F ( x ) F(x) F(x))等于零时,堆积层仅仅相当于做了恒等映射(近学习到 x x x, 而 x x x是堆积层的输入的同时,又是浅层网络学习的结果,即啥也没学),这保证了网络的性能至少不会下降。甚至实际上残差不会为0,即会一直学习到新的特征。

称上图中间两个weight layer为堆积层。

2.3 网络结构

ResNet的一个重要设计原则是:为了保持网络层的复杂度, 当feature map大小降低一半时,feature map的数量需要增加一倍(通过改变卷积核个数实现)。

ResNet相比普通网络每两层间增加了短路机制(短路的部分称作shortcut),这就形成了残差学习,其中虚线表示通道数量发生了改变(例如第一条虚线的起点channel = 64, 而终点为128)。下图中的右侧虚线框中的虚线部分解释了如何通过卷积核改变channel数。

2.4 下面实现简单的ResNet

2.4.1 定义模型

class myRes(nn.Module):

def __init__(self):

super(myRes, self).__init__()

self.resnet = torchvision.models.resnet50(pretrained=False)

def forward(self, x):

x = self.resnet(x)

return x

resnet的调用实现很简单,具体原理跳转,讲的很详细

2.4.2 调用Cuda

将模型放入gpu中。

# 判断cuda是否可用

cuda_available = torch.cuda.is_available()

if cuda_available:

device = torch.device('cuda')

model = myRes().to(device)

else:

model = myRes()

2.4.3 定义损失函数和优化器

criterion = nn.CrossEntropyLoss()

optim = torch.optim.SGD(model.parameters(), lr=0.5, momentum=0.5)

ps: 学习率和动量不是实验后得出的最优结果

2.4.4 训练与预测

accu = []

def train(epoch):

model.train()

correct = 0

sum_loss = 0.0

for (data, target) in train_loader:

data, target = Variable(data).cuda(), Variable(target).cuda()

optim.zero_grad()

pred = model(data)

loss = criterion(pred, target)

loss.backward()

optim.step()

_, id = torch.max(pred.data, 1)

sum_loss += loss.data

correct += torch.sum(id == target.data)

# 尝试释放显存

data = data.cpu()

target = target.cpu()

torch.cuda.empty_cache()

# 变为cpu数据后并不会丢失

# print(data.data)

# print(correct.data)

print('[epoch %d] loss:%.03f' % (epoch + 1, sum_loss.data / len(train_loader)))

print(' correct:%.03f%%' % (100 * correct.data / len(trainset)))

accu.append((correct.data / len(trainset)).data)

def test():

model.eval()

with torch.no_grad():

correct = 0

for (data, target) in val_loader:

data, target = Variable(data).cuda(), Variable(target).cuda()

pred = model(data)

_, id = torch.max(pred.data, 1)

correct += torch.sum(id == target.data)

print("test accu: %.03f%%" % (100 * correct / len(valset)))

2.4.5 保存与读取模型

if __name__ == '__main__':

epochs = 5

for epoch in range(epochs):

train(epoch)

model_name = "round %d.pkl" % epochs

# 保存模型

torch.save(model, model_name)

test()

pytorch封装了保存模型的功能。

- 保存

torch.save(model, 'model.pkl') - 读取

model = torch.load('model.pkl).cuda()'

2.4.6 结果可视化

accu = [np.array(x.cpu()) for x in accu]

plt.plot(np.array(list(range(5))), accu)

plt.show()

因为accu的元素是tensor类型,需要先放入cpu再转换成np类型。

3. Dataset定义

# Dataset

import os

import cv2

import torch

from torchvision import models, transforms

from torch.utils.data import DataLoader, Dataset

import numpy as np

import random

from PIL import Image

class myData(Dataset):

def __init__(self, kind):

super(myData, self).__init__()

self.mode = kind

# self.transform = transforms.ToTensor()

self.transform = transforms.Compose([

transforms.ToTensor(),

transforms.Resize((224, 224)),

transforms.Normalize((0.5,0.5,0.5),(0.5,0.5,0.5)),

])

if kind == 'test':

self.imgs = self.load_origin_data()

elif kind == 'train':

self.imgs, self.labels = self.load_origin_data()

# self.imgs, self.labels = self.enlarge_dataset(kind, self.imgs, self.labels, 0.5)

print('train size:')

print(len(self.imgs))

else:

self.imgs, self.labels = self.load_origin_data()

def __getitem__(self, index):

if self.mode == 'test':

sample = self.transform(self.imgs[index])

return sample

else:

sample = self.transform(self.imgs[index])

return sample, torch.tensor(self.labels[index])

def __len__(self):

return len(self.imgs)

def load_origin_data(self):

filelist = './data/%s_split_list.txt' % self.mode

imgs = []

labels = []

data_dir = os.getcwd()

if self.mode == 'train' or self.mode == 'val':

with open(filelist) as flist:

lines = [line.strip() for line in flist]

if self.mode == 'train':

np.random.shuffle(lines)

for line in lines:

img_path, label = line.split('&')

img_path = os.path.join(data_dir, img_path)

try:

# img, label = process_image((img_path, label), mode, color_jitter, rotate)

img = Image.fromarray(cv2.imdecode(np.fromfile(img_path, dtype=np.float32), 1))

imgs.append(img)

labels.append(int(label))

except:

print(img_path)

continue

return imgs, labels

elif self.mode == 'test':

full_lines = os.listdir('data/test/')

lines = [line.strip() for line in full_lines]

for img_path in lines:

img_path = os.path.join(data_dir, "data/test/", img_path)

# try:

# img= process_image((img_path, label), mode, color_jitter, rotate)

# imgs.append(img)

# except:

# print(img_path)

# img = Image.open(img_path)

img = Image.fromarray(cv2.imdecode(np.fromfile(img_path, dtype=np.float32), 1))

imgs.append(img)

return imgs

def load_data(self, mode, shuffle, color_jitter, rotate):

'''

:return : img, label

img: (channel, w, h)

'''

filelist = './data/%s_split_list.txt' % mode

imgs = []

labels = []

data_dir = os.getcwd()

if mode == 'train' or mode == 'val':

with open(filelist) as flist:

lines = [line.strip() for line in flist]

if shuffle:

np.random.shuffle(lines)

for line in lines:

img_path, label = line.split('&')

img_path = os.path.join(data_dir, img_path)

try:

img, label = process_image((img_path, label), mode, color_jitter, rotate)

imgs.append(img)

labels.append(label)

except:

# print(img_path)

continue

return imgs, labels

elif mode == 'test':

full_lines = os.listdir('data/test/')

lines = [line.strip() for line in full_lines]

for img_path in lines:

img_path = os.path.join(data_dir, "data/test/", img_path)

# try:

# img= process_image((img_path, label), mode, color_jitter, rotate)

# imgs.append(img)

# except:

# print(img_path)

img = process_image((img_path, 0), mode, color_jitter, rotate)

imgs.append(img)

return imgs

# dataset

# img_datasets = {x: myData(x) for x in ['train', 'val']}

# dataset_sizes = {x: len(img_datasets[x]) for x in ['train', 'val']}

# test_datasets = {'test': myData('test')}

# test_size = {'test': len(test_datasets)}