Audio and Video Learning (15): Video Playback with FFmpeg and SDL

Audio/Video Playback Flow

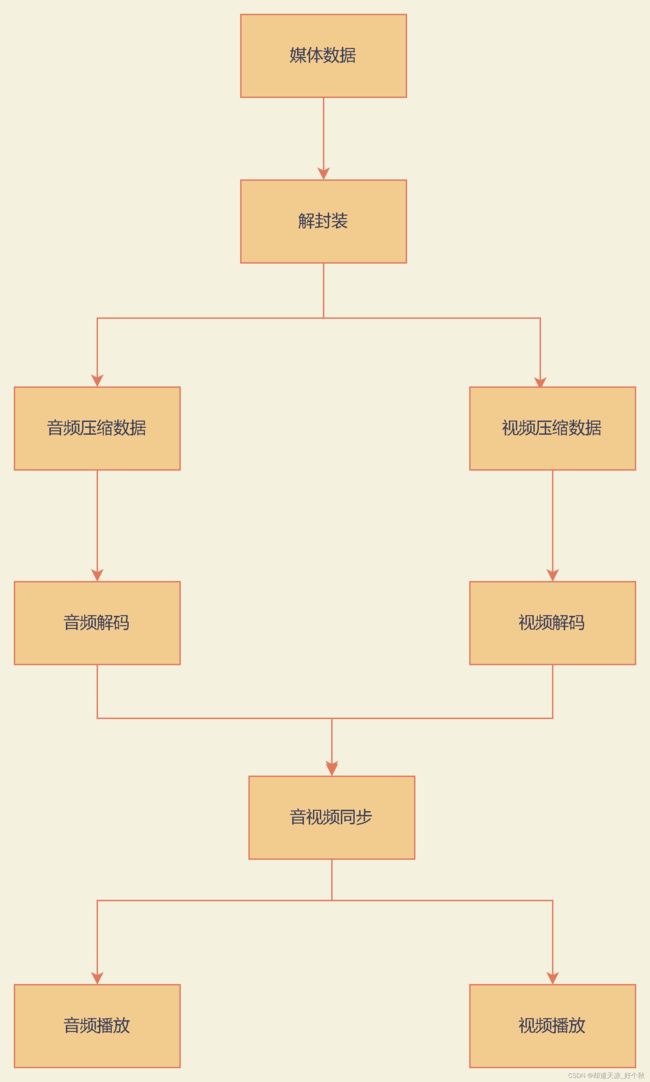

Playing audio/video data mainly involves the following steps:

- Demuxing

- Decoding

- Resampling and synchronization
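Synchronization relies on timestamps. Each decoded frame carries a pts counted in units of its stream's time_base, and converting it to seconds lets it be compared with a playback clock. The sketch below is a minimal illustration that assumes a hypothetical `Rational` struct in place of FFmpeg's `AVRational`. In FFmpeg code, `pts * av_q2d(stream->time_base)` performs the same conversion. MPEG-PS input such as the `.ps` file used later typically has a 1/90000 time base.

```cpp
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational so the sketch builds without FFmpeg.
struct Rational {
    int num;
    int den;
};

// Convert a timestamp expressed in time_base units into seconds.
double ptsToSeconds(std::int64_t pts, Rational timeBase) {
    return static_cast<double>(pts) * timeBase.num / timeBase.den;
}

// Simplified sync check (hypothetical helper): a positive result means the
// frame is early and the player should wait; a negative one means it is late.
double secondsUntilDue(std::int64_t pts, Rational timeBase, double clockSeconds) {
    return ptsToSeconds(pts, timeBase) - clockSeconds;
}
```

With a 1/90000 time base, a pts of 90000 is exactly one second into the stream.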

The processing flow is shown in the figure.

FFmpeg video playback flow

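- Register container formats and codecs: av_register_all() (deprecated since FFmpeg 4.0 and no longer needed)

- Open the input file and demux it: av_open_input_file() (old API; current code uses avformat_open_input())

- Read the audio/video stream information from the file: av_find_stream_info() (now avformat_find_stream_info())

- Get the codec context of the video stream: AVCodecContext (now allocated with avcodec_alloc_context3() and filled from the stream's AVCodecParameters with avcodec_parameters_to_context())

- Find the decoder matching the codec ID in the codec context: avcodec_find_decoder()

- Open the decoder: avcodec_open2()

- Read frame data (packets) from the stream: av_read_frame()

- Decode video frames: avcodec_decode_video2() (deprecated; now avcodec_send_packet() followed by avcodec_receive_frame())

- Convert the pixel format and resolution of the decoded data: sws_scale()

- Release the decoder: avcodec_close() (avcodec_free_context() both closes and frees the context)

- Close the input file: av_close_input_file() (now avformat_close_input())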
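The conversion step targets YUV420P, a planar format in which the U and V planes are subsampled by 2 both horizontally and vertically. The sketch below computes the plane offsets and total size. It assumes a tightly packed buffer (alignment 1, the value passed to `av_image_get_buffer_size()` and `av_image_fill_arrays()` in the code example). `Yuv420pLayout` and `yuv420pLayout()` are hypothetical names, not FFmpeg API.

```cpp
#include <cstddef>

// Hypothetical description of a tightly packed (alignment 1) YUV420P buffer,
// intended to mirror what av_image_fill_arrays(..., AV_PIX_FMT_YUV420P, w, h, 1) sets up.
struct Yuv420pLayout {
    std::size_t offset[3];   // byte offset of the Y, U and V planes in the buffer
    int linesize[3];         // bytes per row of each plane
    std::size_t totalSize;   // expected to match av_image_get_buffer_size(..., 1)
};

Yuv420pLayout yuv420pLayout(int width, int height) {
    // Chroma planes are half size in each direction, rounded up for odd sizes.
    const int chromaW = (width + 1) / 2;
    const int chromaH = (height + 1) / 2;
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    const std::size_t cSize = static_cast<std::size_t>(chromaW) * chromaH;

    Yuv420pLayout l{};
    l.offset[0] = 0;               // Y plane starts the buffer
    l.offset[1] = ySize;           // U plane follows Y
    l.offset[2] = ySize + cSize;   // V plane follows U
    l.linesize[0] = width;
    l.linesize[1] = chromaW;
    l.linesize[2] = chromaW;
    l.totalSize = ySize + 2 * cSize;
    return l;
}
```

For a 1920x1080 frame this gives a 2,073,600-byte Y plane followed by two 518,400-byte chroma planes, 3,110,400 bytes in total (1.5 bytes per pixel).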

SDL

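Introduction

SDL (Simple DirectMedia Layer) is a cross-platform multimedia development library that provides access to audio, video, keyboard, mouse, joystick and other devices. Here, SDL is used to play back the audio and video data decoded by FFmpeg.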

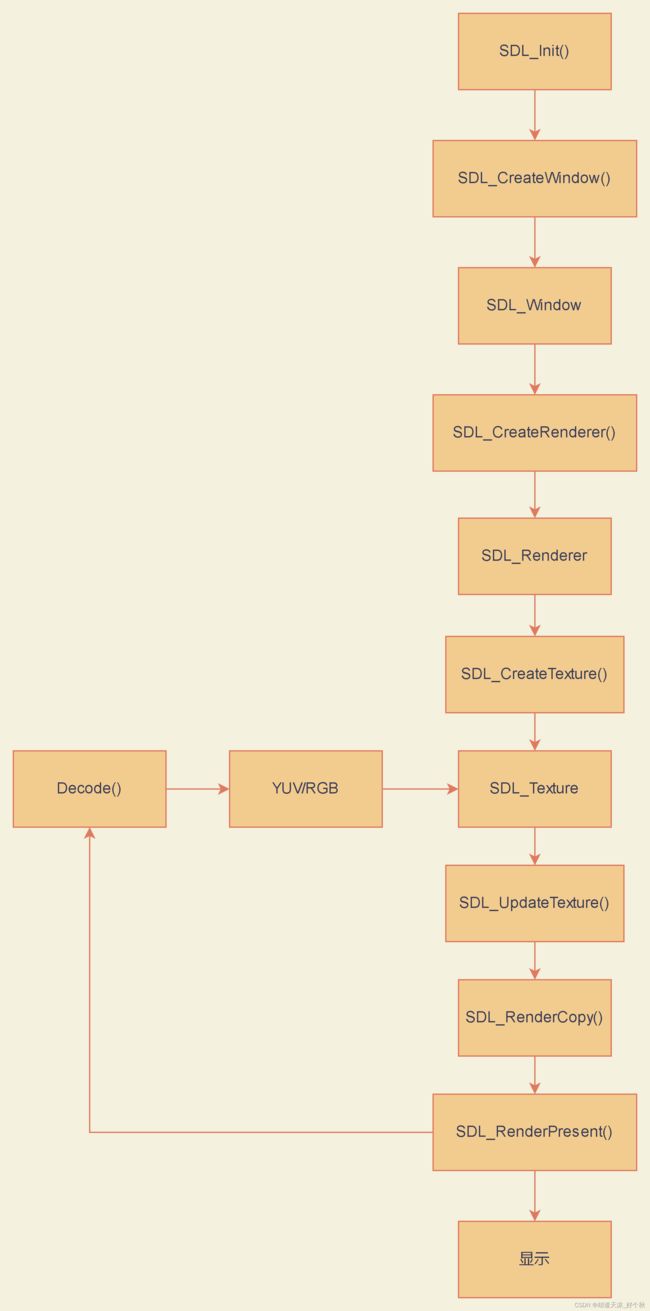

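Workflow

- SDL_Init(): initialize the SDL subsystems;

- SDL_CreateWindow(): create the playback window;

- SDL_CreateRenderer(): create a renderer for the window;

- SDL_CreateTexture(): create a texture;

- SDL_UpdateTexture(): fill the texture with new pixel data (for planar YUV data, SDL_UpdateYUVTexture());

- SDL_RenderClear(): clear the previously rendered frame;

- SDL_RenderCopy(): copy the texture to the renderer;

- SDL_RenderPresent(): show the rendered result on screen.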
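`SDL_RenderCopy()` with a null destination rectangle stretches the texture over the whole render target, so resizing the window distorts the picture. One way to keep the aspect ratio is to compute the largest centered rectangle with the video's aspect ratio and pass it as the destination `SDL_Rect`. The sketch below does that. `Rect` is a hypothetical stand-in with the same fields as `SDL_Rect`, so the code builds without SDL, and `fitRect()` is likewise a hypothetical helper.

```cpp
// Hypothetical stand-in with the same fields as SDL_Rect.
struct Rect {
    int x, y, w, h;
};

// Largest rectangle with the video's aspect ratio that fits inside the
// window, centered (letterbox or pillarbox).
Rect fitRect(int videoW, int videoH, int winW, int winH) {
    Rect r{0, 0, winW, winH};
    if (videoW <= 0 || videoH <= 0)
        return r;  // unknown video size: fall back to the full window
    // Compare videoW/videoH with winW/winH using integer cross-multiplication.
    if (static_cast<long long>(videoW) * winH > static_cast<long long>(winW) * videoH) {
        // Video is wider than the window: use the full width, reduce the height.
        r.h = static_cast<int>(static_cast<long long>(winW) * videoH / videoW);
        r.y = (winH - r.h) / 2;
    } else {
        // Video is taller (or equal): use the full height, reduce the width.
        r.w = static_cast<int>(static_cast<long long>(winH) * videoW / videoH);
        r.x = (winW - r.w) / 2;
    }
    return r;
}
```

In the render loop, the current size could be queried with `SDL_GetRendererOutputSize(render, &w, &h)`. The result would then be passed as `SDL_Rect dst{r.x, r.y, r.w, r.h}` to `SDL_RenderCopy(render, texture, nullptr, &dst)`.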

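The main processing flow is shown in the figure.

Notes:

SDL_Window: the playback window that appears when SDL is used.

SDL_Texture: the texture that holds the YUV data to be displayed. One SDL_Texture corresponds to one frame of YUV data.

SDL_Renderer: renders the SDL_Texture into the SDL_Window playback window.

SDL_Rect: specifies the area in which the SDL_Texture is displayed. Note that one SDL_Texture can be drawn into several different SDL_Rect areas, so the same content can appear at different positions in the SDL_Window (by calling SDL_RenderCopy() once per area).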
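Drawing one `SDL_Texture` into several `SDL_Rect` areas can be illustrated with a small helper that splits the window into a grid. Copying the same texture into each cell then shows the same frame several times. `gridRects()` and the `Rect` stand-in (same fields as `SDL_Rect`) are hypothetical.

```cpp
#include <vector>

// Hypothetical stand-in with the same fields as SDL_Rect.
struct Rect {
    int x, y, w, h;
};

// Split a winW x winH area into cols x rows equal cells, row by row.
std::vector<Rect> gridRects(int winW, int winH, int cols, int rows) {
    std::vector<Rect> rects;
    if (cols <= 0 || rows <= 0)
        return rects;  // nothing to lay out
    const int cellW = winW / cols;
    const int cellH = winH / rows;
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            rects.push_back(Rect{c * cellW, r * cellH, cellW, cellH});
    return rects;
}
```

Each cell would be drawn with `SDL_RenderCopy(render, texture, nullptr, &dst)`, followed by a single `SDL_RenderPresent(render)` for the whole frame.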

Example

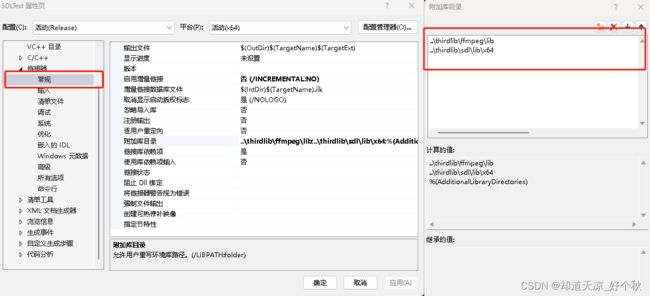

Project Configuration

1) Add the dependency include (header) directories

2) Add the dependency library directories

The libraries to link are:

- libavcodec.lib

- libavdevice.lib

- libavfilter.lib

- libavformat.lib

- libavutil.lib

- libswscale.lib

- postproc.lib

- swresample.lib

- swscale.lib

- SDL2.lib

- SDL2main.lib

Note: because the test was done on Windows, all libraries used are x64 Release builds.
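With Visual Studio, the libraries can also be requested from source code instead of through the project's linker input settings. This is a sketch that assumes MSVC and the import library names listed above. The library directories still have to be configured in the project, and the names should be adjusted to the `.lib` files your FFmpeg and SDL packages actually ship. Only the libraries used by the code example are listed.

```cpp
// MSVC only: ask the linker to pull in these import libraries.
// Library names assume the build described above; adjust as needed.
#if defined(_MSC_VER)
#pragma comment(lib, "libavcodec.lib")
#pragma comment(lib, "libavformat.lib")
#pragma comment(lib, "libavutil.lib")
#pragma comment(lib, "libswscale.lib")
#pragma comment(lib, "SDL2.lib")
// SDL2main.lib is only needed when SDL.h is allowed to rename main() to SDL_main.
#endif
```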

SDL download: https://github.com/libsdl-org/SDL/releases/tag/release-2.26.1

Code Example

```cpp
#include <atomic>
#include <cstdio>

// main() is our own entry point; SDL_MAIN_HANDLED stops SDL.h from
// renaming it to SDL_main (this replaces the "#undef main" trick).
#define SDL_MAIN_HANDLED
#include <SDL.h>

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavutil/avutil.h"
#include "libavutil/imgutils.h"
#include "libswscale/swscale.h"
}

#define VIDEO_FILE "D:\\test.ps"
#define EVENT_REFRESH (SDL_USEREVENT + 1)

// Shared by the refresh thread and the main thread, hence atomic.
// ("pause" is renamed because it clashes with POSIX pause().)
static std::atomic<bool> threadQuit{false};
static std::atomic<bool> isPaused{false};

// Refresh thread: pushes a refresh event every 50 ms (about 20 fps) unless paused.
static int threadFunc(void*) {
    while (!threadQuit) {
        if (!isPaused) {
            SDL_Event event;
            SDL_zero(event);
            event.type = EVENT_REFRESH;
            SDL_PushEvent(&event);
        }
        SDL_Delay(50);  // sleep while paused too, instead of busy-waiting
    }
    return 0;
}

// Convert one decoded frame to YUV420P and present it.
static void renderFrame(SwsContext* sws, AVFrame* src, AVFrame* dst,
                        SDL_Renderer* render, SDL_Texture* texture) {
    if (sws_scale(sws, src->data, src->linesize, 0, src->height,
                  dst->data, dst->linesize) < 0) {
        printf("sws_scale failed\n");
        return;
    }
    SDL_UpdateYUVTexture(texture, nullptr,
                         dst->data[0], dst->linesize[0],
                         dst->data[1], dst->linesize[1],
                         dst->data[2], dst->linesize[2]);
    SDL_RenderClear(render);
    SDL_RenderCopy(render, texture, nullptr, nullptr);  // stretch to the whole window
    SDL_RenderPresent(render);
}

int main()
{
    AVFormatContext* pFormatCtx = nullptr;
    AVCodecContext* pCodecCtx = nullptr;
    const AVCodec* pCodec = nullptr;          // const in FFmpeg 5+, also fine with 4.x
    AVCodecParameters* pCodecParm = nullptr;  // owned by the stream: never free it
    AVPacket* pPacket = nullptr;
    AVFrame* pFrame = nullptr;
    AVFrame* pFrameYuv = nullptr;
    SwsContext* pSwsCtx = nullptr;
    SDL_Window* window = nullptr;
    SDL_Renderer* render = nullptr;
    SDL_Texture* texture = nullptr;
    SDL_Thread* thread = nullptr;
    SDL_Event event;
    unsigned char* outBuffer = nullptr;
    int rst = 0, videoIndex = -1;
    int windowW = 0, windowH = 0;
    bool eof = false, userQuit = false;

    SDL_SetMainReady();
    if (SDL_Init(SDL_INIT_VIDEO) != 0) {
        printf("SDL_Init failed: %s\n", SDL_GetError());
        return -1;
    }

    if (avformat_open_input(&pFormatCtx, VIDEO_FILE, nullptr, nullptr) < 0) {
        printf("open input failed\n");
        goto cleanup;
    }
    if (avformat_find_stream_info(pFormatCtx, nullptr) < 0) {
        printf("find stream info failed\n");
        goto cleanup;
    }
    videoIndex = av_find_best_stream(pFormatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, nullptr, 0);
    if (videoIndex < 0) {
        printf("find best stream failed\n");
        goto cleanup;
    }

    pCodecParm = pFormatCtx->streams[videoIndex]->codecpar;
    pCodec = avcodec_find_decoder(pCodecParm->codec_id);
    if (nullptr == pCodec) {
        printf("find decoder failed\n");
        goto cleanup;
    }
    pCodecCtx = avcodec_alloc_context3(pCodec);
    if (nullptr == pCodecCtx) {
        printf("alloc avcodec failed\n");
        goto cleanup;
    }
    if (avcodec_parameters_to_context(pCodecCtx, pCodecParm) < 0) {
        printf("avcodec_parameters_to_context failed\n");
        goto cleanup;
    }
    if (avcodec_open2(pCodecCtx, pCodec, nullptr) < 0) {
        printf("avcodec_open2 failed\n");
        goto cleanup;
    }

    // av_packet_alloc() returns an initialized packet; av_init_packet() is not needed.
    pPacket = av_packet_alloc();
    pFrame = av_frame_alloc();
    pFrameYuv = av_frame_alloc();
    if (nullptr == pPacket || nullptr == pFrame || nullptr == pFrameYuv) {
        printf("alloc packet/frame failed\n");
        goto cleanup;
    }

    // Output size: the video size rounded down to a multiple of 16.
    windowW = (pCodecParm->width >> 4) << 4;
    windowH = (pCodecParm->height >> 4) << 4;

    outBuffer = static_cast<unsigned char*>(av_malloc(static_cast<size_t>(
        av_image_get_buffer_size(AV_PIX_FMT_YUV420P, windowW, windowH, 1))));
    if (nullptr == outBuffer) {
        av_log(nullptr, AV_LOG_ERROR, "malloc out buff failed\n");
        goto cleanup;
    }
    av_log(nullptr, AV_LOG_INFO, "malloc out buff for yuv frame successfully\n");

    // Point pFrameYuv's data/linesize at outBuffer (no copy is made).
    if (av_image_fill_arrays(pFrameYuv->data, pFrameYuv->linesize, outBuffer,
                             AV_PIX_FMT_YUV420P, windowW, windowH, 1) < 0) {
        printf("fill array failed\n");
        goto cleanup;
    }

    // sws_getContext() allocates and initializes the context in one call.
    pSwsCtx = sws_getContext(pCodecParm->width, pCodecParm->height, pCodecCtx->pix_fmt,
                             windowW, windowH, AV_PIX_FMT_YUV420P,
                             SWS_BICUBIC, nullptr, nullptr, nullptr);
    if (nullptr == pSwsCtx) {
        printf("get sws context failed\n");
        goto cleanup;
    }

    window = SDL_CreateWindow("SDL Test", 100, 100, windowW, windowH,
                              SDL_WINDOW_SHOWN | SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE);
    if (window)
        render = SDL_CreateRenderer(window, -1, 0);
    if (render)  // STREAMING access: the texture is rewritten every frame
        texture = SDL_CreateTexture(render, SDL_PIXELFORMAT_IYUV,
                                    SDL_TEXTUREACCESS_STREAMING, windowW, windowH);
    if (nullptr == texture) {
        printf("SDL setup failed: %s\n", SDL_GetError());
        goto cleanup;
    }
    SDL_SetRenderDrawColor(render, 255, 100, 100, 255);

    thread = SDL_CreateThread(threadFunc, "refresh", nullptr);

    while (!eof && !userQuit) {
        if (!SDL_WaitEvent(&event))
            break;
        if (event.type == EVENT_REFRESH) {
            // Read packets until one video packet has been sent to the decoder.
            for (;;) {
                if (av_read_frame(pFormatCtx, pPacket) < 0) {
                    eof = true;  // end of file (or read error)
                    break;
                }
                if (pPacket->stream_index != videoIndex) {
                    av_packet_unref(pPacket);  // skip audio and other streams
                    continue;
                }
                rst = avcodec_send_packet(pCodecCtx, pPacket);
                av_packet_unref(pPacket);  // the decoder references or copies what it needs
                if (rst >= 0)
                    break;
                printf("send packet failed\n");
            }
            // Show every frame the decoder can output now; any negative value
            // (AVERROR(EAGAIN), AVERROR_EOF or a real error) ends the loop.
            while (!eof && avcodec_receive_frame(pCodecCtx, pFrame) == 0)
                renderFrame(pSwsCtx, pFrame, pFrameYuv, render, texture);
        } else if (event.type == SDL_QUIT) {
            userQuit = true;
        } else if (event.type == SDL_KEYUP && event.key.keysym.sym == SDLK_SPACE) {
            isPaused = !isPaused;  // space toggles pause
        }
    }

    // At end of file, a null packet puts the decoder into draining mode so
    // the frames still buffered inside it can be shown.
    if (eof) {
        avcodec_send_packet(pCodecCtx, nullptr);
        while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {
            renderFrame(pSwsCtx, pFrame, pFrameYuv, render, texture);
            SDL_Delay(50);  // same pace as the refresh thread
        }
    }

cleanup:
    threadQuit = true;
    if (thread)
        SDL_WaitThread(thread, nullptr);
    // Destroy SDL objects in reverse order of creation.
    if (texture)
        SDL_DestroyTexture(texture);
    if (render)
        SDL_DestroyRenderer(render);
    if (window)
        SDL_DestroyWindow(window);
    // The FFmpeg free functions below all accept null pointers.
    sws_freeContext(pSwsCtx);
    av_free(outBuffer);
    av_frame_free(&pFrameYuv);
    av_frame_free(&pFrame);
    av_packet_free(&pPacket);
    avcodec_free_context(&pCodecCtx);   // also closes the decoder
    avformat_close_input(&pFormatCtx);  // also frees the streams' codecpar
    SDL_Quit();
    return 0;
}
```
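The refresh thread fires every 50 ms, so playback runs at roughly 20 fps regardless of the stream's actual frame rate. A possible refinement, sketched here, derives the interval from the frame rate instead. It assumes the rate comes from something like `av_guess_frame_rate(fmtCtx, stream, nullptr)`, which returns an `AVRational`. It also uses a hypothetical `Rational` stand-in so it builds without FFmpeg. The result is still only approximate pacing; a real player schedules each frame by its pts.

```cpp
// Hypothetical stand-in for FFmpeg's AVRational (frames per second as num/den).
struct Rational {
    int num;
    int den;
};

// Milliseconds between frames, rounded to the nearest integer.
// Falls back to fallbackMs (the original 50 ms) when the rate is unknown.
int frameIntervalMs(Rational fps, int fallbackMs = 50) {
    if (fps.num <= 0 || fps.den <= 0)
        return fallbackMs;
    // interval = 1000 * den / num, rounded by adding half the divisor first.
    const long long scaled = 1000LL * fps.den;
    return static_cast<int>((scaled + fps.num / 2) / fps.num);
}
```

For example, 25 fps gives 40 ms and NTSC's 30000/1001 gives 33 ms. The value could be passed to the refresh thread through the `data` argument of `SDL_CreateThread()` and used in `SDL_Delay()`.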

Result

Partly based on:

https://blog.csdn.net/longjiang321/article/details/103499785