From time to time I would read some ML/AI/DL papers just to keep up with what’s going on in the tech industry these days — and I thought it might be a good idea to collate some interesting ones and share them with you guys in an article (coupled with some key technical concepts). So here’s a few research projects that I (personally) really like and I hope you will too:

我会不时阅读一些ML / AI / DL论文,以跟上当今科技行业的发展-我认为整理一些有趣的文章并与您分享这些想法可能是个好主意。一篇文章(结合一些关键技术概念)。 因此,这是我(个人)非常喜欢的一些研究项目,希望您也能:

1.自律 (1. Toonify Yourself)

Justin Pinkney’s blog — Source from: Justin Pinkney的博客-来源: link 链接Authors: Doron Adler and Justin Pinkney

作者:多伦·阿德勒( Doron Adler)和贾斯汀·平克尼( Justin Pinkney)

Starting off with something light-hearted, this fun little project lets you upload your own image and transform yourself into a cartoon. Image processing and manipulation probably isn’t something very new by this point in time but it is still very interesting considering that this site even provides you with the Google Collab file that allows you to try to toonify yourself + allows you to follow along the steps so you can recreate it for yourself.

从一个轻松愉快的项目开始,这个有趣的小项目使您可以上传自己的图像并将自己变成卡通。 目前,图像处理和操作可能还不是什么新鲜事物,但是考虑到该站点甚至为您提供了Google Collab文件,使您可以尝试自我统一+允许您按照步骤进行操作,这仍然非常有趣。因此您可以自己重新创建它。

Some key technical ideas/concepts:

一些关键的技术构想/概念:

This project is a network blending/layer swapping project in StyleGAN and they’ve used pre-trained models to do transfer learning. Specifically, they utilized two models — a fine-tuned model (created from the base model) and a base model and swapped layers between the two to produce such results. High resolution layers are taken from the base model and low resolution layers are taken from the fine-tuned model. Next, a latent vector is produced from an original image that we want to “toonify” and this latent vector would act as the input to our blended model (this latent vector would look very similar to the original image). After inputting this latent vector into the blended model, the toonified version would be created.

该项目是StyleGAN中的网络混合/层交换项目,他们使用了预先训练的模型来进行迁移学习。 具体来说,他们利用了两个模型-一个经过微调的模型(从基本模型创建)和一个基本模型,并在两者之间交换了图层以产生这样的结果。 高分辨率层取自基本模型,低分辨率层取自微调模型。 接下来,从原始图像中生成一个潜在矢量,我们想要对其进行“ toonify”处理,并且该潜在矢量将作为我们混合模型的输入(此潜在矢量看上去与原始图像非常相似)。 在将该潜在矢量输入到混合模型中之后,将创建音化版本。

Read more about it here.

在此处了解更多信息。

2.手提电话 (2. Lamphone)

link 链接Authors: Nassi, Ben and Pirutin, Yaron and Shamir, Adi and Elovici, Yuval and Zadov, Boris

作者:Nassi,Ben和Pirutin,Yaron和Shamir,Adi和Elovici,Yuval和Zadov,Boris

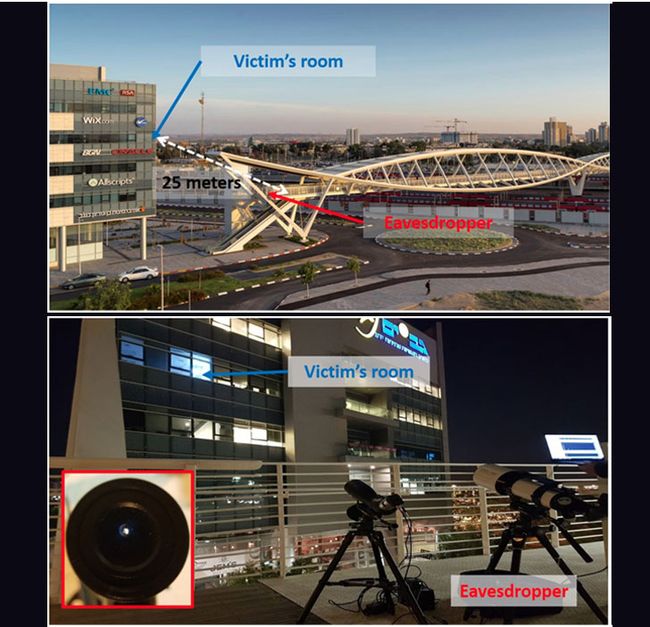

I find this to be slightly creepy — Lamphone is a novel side-channel attacking technique that allows eavesdroppers to recover speech and non-speech information by recovering sound from the optical measurements obtained from the vibrations of a light bulb and captured by the electro-optical sensor.

我发现这有点令人毛骨悚然-Lamphone是一种新颖的旁通道攻击技术,它允许窃听者通过从灯泡振动获得的光学测量结果中恢复声音并通过电光捕获来恢复语音和非语音信息。传感器。

Some key technical ideas/concepts:

一些关键的技术构想/概念:

The setup involves having a telescope with an electro-optical sensor mounted on it that can detect the vibration patterns of the hanging bulb from the victim’s room. Specifically, they’ve exploited the fluctuations in air pressure around the hanging bulb (this causes the hanging bulb to vibrate). For this particular experiment, they were capable of successfully recovering the song played in the room and a snippet of Trump’s speech recording from a distance of 25 meters when they used a $400 electro-optical sensor. It is said that this distance could be extended with more high-range equipment.

设置包括安装一个带有光电传感器的望远镜,该望远镜可以检测受害人房间悬挂灯泡的振动模式。 具体来说,他们利用了悬挂灯泡周围的气压波动(这会导致悬挂灯泡振动)。 对于这个特定的实验,当他们使用价格为400美元的光电传感器时,他们能够从25米的距离成功恢复房间里播放的歌曲和特朗普语音记录的片段。 据说,可以通过使用更多的高射程设备来扩大这个距离。

Read more about it here.

在此处了解更多信息。

3. GANPaint Studio (3. GANPaint Studio)

MIT-IBM Watson AI Lab — Source from: MIT-IBM Watson AI Lab的研究人员-来源: link 链接Authors: David Bau, Hendrik Strobelt, William Peebles, Jonas Wulff, Bolei Zhou, Jun-Yan Zhu, Antonio Torralba

作者:大卫·鲍(David Bau),亨德里克·斯特博尔特(Hendrik Strobelt),威廉·皮布尔斯(William Peebles),乔纳斯·沃尔夫(Jonas Wulff),周伯乐,朱俊彦,安东尼奥·托拉尔巴

This was the project that I got really excited about when I was collating this list. This is basically a tool that allows you to “blend” in an image object over the highlighted portion of the original image (you get to decide where to highlight as well). I have always been really excited about exploring the idea of allowing people without formal art training to easily create new images/artworks. I find the process of creating new artworks and images to be really enjoyable creative process and hopefully with more projects like these, the machine can play an assisting role that will encourage more people to exercise their creative muscle and create art. In this case, even though their tool is not meant to create art, I don’t think it is that far-fetched to have a variation of their tool that WILL assist users to create art instead.

当我整理这份清单时,我对这个项目感到非常兴奋。 基本上,这是一个工具,可让您将图像对象“融合”在原始图像的突出显示部分上(您也可以决定要突出显示的位置)。 我一直对探索允许未经正式艺术训练的人轻松创建新图像/艺术品的想法感到非常兴奋。 我发现创建新艺术品和图像的过程是非常有趣的创作过程,希望通过更多此类项目,机器可以起到辅助作用,鼓励更多的人锻炼自己的创造力和创造艺术。 在这种情况下,即使他们的工具不是用来创造艺术品的,我也不认为拥有各种各样的工具会帮助用户创造艺术品并不是一件容易的事。

Some key technical ideas/concepts:

一些关键的技术构想/概念:

The interesting thing about this project is that it synthesizes new content that follows both the user’s intention and the natural image statistics. Their image manipulation pipeline consists of a three step process — computing the latent vector of the original image, applying a semantic vector space operation in the latent space, then finally regenerating the image from the modified image from the previous step. Here’s what they’ve written in their original paper:

这个项目的有趣之处在于,它可以根据用户的意图和自然图像统计信息合成新内容。 他们的图像处理流程包括三个步骤:计算原始图像的潜在向量,在潜在空间中应用语义向量空间操作,然后最终从上一步的修改后的图像中重新生成图像。 这是他们在原始论文中写的:

Given a natural photograph as input, we first re-render the image using an image generator. More concretely, to precisely reconstruct the input image, our method not only optimizes the latent representation but also adapts the generator. The user then manipulates the photo using our interactive interface, such as by adding or removing specific objects or changing their appearances. Our method updates the latent representation according to each edit and renders the final result given the modified representation. Our results look both realistic and visually similar to the input natural photograph.

给定自然照片作为输入,我们首先使用图像生成器重新渲染图像。 更具体地,为了精确地重建输入图像,我们的方法不仅优化了潜在表示,而且使生成器适应。 然后,用户可以使用我们的交互界面来处理照片,例如添加或删除特定对象或更改其外观。 我们的方法根据每次编辑来更新潜在表示,并在修改后的表示下渲染最终结果。 与输入的自然照片相比,我们的结果看上去既逼真又视觉相似。

Read more about it or try out the demo here.

了解更多信息或在此处尝试演示。

4.点唱机 (4. Jukebox)

link 链接Authors: Prafulla Dhariwal, Heewoo Jun, Christine McLeavey Payne (EQUAL CONTRIBUTORS), Jong Wook Kim, Alec Radford, Ilya Sutskever

作者: Prafulla Dhariwal , Heewoo Jun , Christine McLeavey Payne (平等贡献者), Jong Wook Kim , Alec Radford , Ilya Sutskever

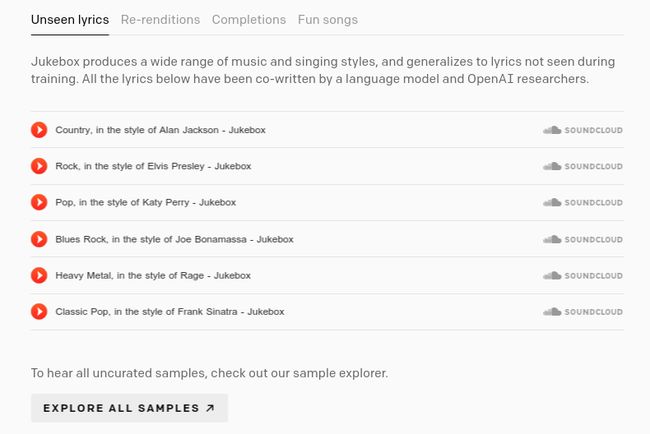

In a nutshell, this project utilizes neural networks to generate music. Given the genre, artist and lyrics as input, Jukebox would output new music sample produced from scratch.

简而言之,该项目利用神经网络生成音乐。 给定流派,艺术家和歌词作为输入,Jukebox将输出从头开始产生的新音乐样本。

link 链接Automated music generation is not exactly new technology — previously, approaches include symbolically generating music. However, for these generators, they often cannot capture essential musical elements like human voices, subtle timbres, dynamics, and expressiveness. I spent some time listening to some of the musical tracks listed in their “Jukebox Sample Explorer” page and personally felt like it’s still not at the level where listeners will be unable to differentiate between the real track VS their auto-generated track. However, I think it is definitely a exciting to see where projects like these will take us and what this means to our music industry in the near future.

自动音乐生成并非完全是新技术,以前的方法包括象征性地生成音乐。 但是,对于这些生成器,它们通常无法捕获基本的音乐元素,例如人声,微妙的音色,动态和表现力。 我花了一些时间听他们在“ Jukebox示例资源管理器”页面中列出的一些音乐曲目,个人觉得这还不能使听众无法区分真实曲目和自动生成的曲目。 但是,我认为看到这样的项目将带给我们什么,以及在不久的将来对我们的音乐行业意味着什么绝对令人兴奋。

Some key technical ideas/concepts:

一些关键的技术构想/概念:

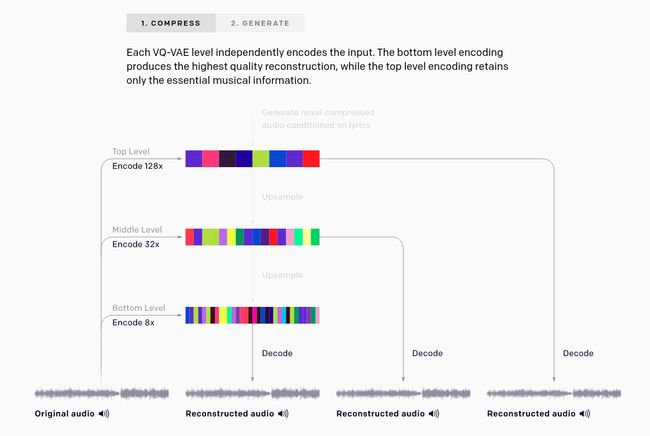

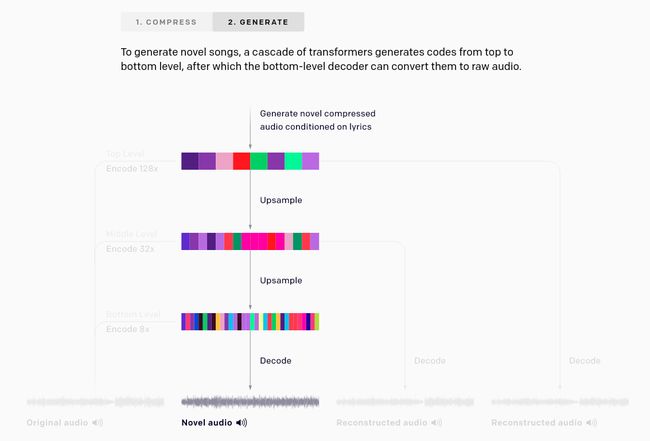

Their approach is a two-step process — the first step consists of compressing music to discrete codes and the second step involves generating codes using transformers. They have a really nice diagram explaining this process and I’ll include them here:

他们的方法分两步进行:第一步是将音乐压缩为离散代码,第二步是使用转换器生成代码。 他们有一个非常漂亮的图表来解释这个过程,我将在这里包括它们:

link) link的源) link) 连结来源)How the compression part works — they utilize a modified version of the Vector Quantised-Variational AutoEncoder (VQ-VAE-2) (generative model for discrete representation learning). With reference to Figure A above, their 44kHz raw audio is compressed 8x, 32x, and 128x, with a codebook size of 2048 for each level. If you go to their site and try clicking on the sound icon to hear how each reconstructed audio sounds like, the right-most one will sound the noisiest since it is compressed 128x and only the very essential features is retained (e.g. pitch, timbre, and volume).

压缩部分的工作原理-他们利用了矢量量化自动编码器(VQ-VAE-2)的改进版本(用于离散表示学习的生成模型)。 参考上面的图A,它们的44kHz原始音频被压缩为8x,32x和128x,每个级别的密码本大小为2048。 如果您访问他们的网站并尝试单击声音图标以听到每种重建的音频的声音,则最右边的声音将听起来最嘈杂,因为它被压缩128倍并且仅保留了非常重要的功能(例如音高,音色,和体积)。

How the generation part works — As mentioned in the VQ-VAE paper and the VQ-VAE-2 paper, a powerful autoregressive decoder is used (but in Jukebox’s algorithm, separate decoders are used and input from the codes of each level is independently reconstructed to maximize the use of the upper levels). This generative phase is essentially about (from their official site):

生成部分的工作原理-如VQ-VAE论文和VQ-VAE-2论文中所述,使用了功能强大的自回归解码器(但在Jukebox的算法中,使用了独立的解码器,并且独立重建了每个级别的代码的输入)最大限度地利用上层)。 这个生成阶段实质上是关于(从其官方站点开始):

(training) the prior models whose goal is to learn the distribution of music codes encoded by VQ-VAE and to generate music in this compressed discrete space. […] The top-level prior models the long-range structure of music, and samples decoded from this level have lower audio quality but capture high-level semantics like singing and melodies. The middle and bottom upsampling priors add local musical structures like timbre, significantly improving the audio quality. […] Once all of the priors are trained, we can generate codes from the top level, upsample them using the upsamplers, and decode them back to the raw audio space using the VQ-VAE decoder to sample novel songs.

(训练)现有模型,其目标是学习由VQ-VAE编码的音乐代码的分布并在此压缩离散空间中生成音乐。 […]最高级别的先验模型对音乐的远程结构进行了建模,从此级别解码的样本具有较低的音频质量,但捕获了诸如唱歌和旋律之类的高级语义。 中间和底部的升采样先验添加了诸如音色之类的本地音乐结构,从而大大改善了音频质量。 […]所有先验知识都经过培训后,我们可以从顶层生成代码,使用上采样器对其进行上采样,然后使用VQ-VAE解码器将其解码回原始音频空间,以对新颖歌曲进行采样。

Read more about it or try out the demo here.

了解更多信息或在此处尝试演示。

5.通过个性化图片字幕 (5. Engaging Image Captioning via Personality)

link 链接Authors: Kurt Shuster, Samuel Humeau, Hexiang Hu, Antoine Bordes, Jason Weston

作者:Kurt Shuster,Samuel Humeau,HuHexiang Hu,Antoine Bordes,Jason Weston

I came across this research paper when browsing through the research projects listed in Facebook AI’s conversational AI category. Traditionally, image captioning tasks are usually very factual and emotionless (e.g. labels like “This is a cat” or “Boy playing football with friends”). In this project, they aim to inject emotions or personalities into their captions to make their captions seem more humane and engaging. Citing from their paper, “ the goal is to be as engaging to humans as possible by incorporating controllable style and personality traits”.

浏览Facebook AI的对话式AI类别中列出的研究项目时,我遇到了这篇研究论文。 传统上,图像字幕任务通常非常真实,毫无感情(例如,“这是一只猫”或“男孩和朋友踢足球”之类的标签)。 在此项目中,他们旨在将情绪或个性注入字幕中,以使字幕看起来更人性化和更具吸引力。 从他们的论文中引用:“目标是通过整合可控的风格和个性特征,尽可能地与人类互动”。

Hopefully, this project will be used for good — the idea of machines/bots communicating like humans seems a little unsettling to me…Nonetheless, it is still a pretty interesting project.

希望这个项目会有用处-机器/机器人像人类一样进行交流的想法对我来说似乎有些不解……尽管如此,它仍然是一个非常有趣的项目。

Some key technical ideas/concepts:

一些关键的技术构想/概念:

link 链接From the paper:

从本文:

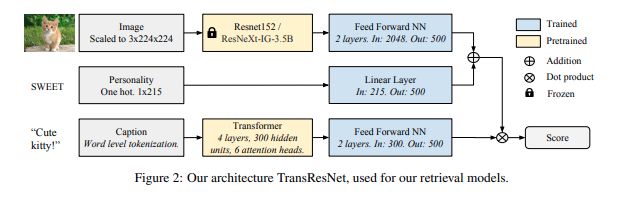

For image representations, we employ the work of [32] (D. Mahajan, R. B. Girshick, V. Ramanathan, K. He, M. Paluri, Y. Li, A. Bharambe, and L. van der Maaten. Exploring the limits of weakly supervised pretraining. CoRR, abs/1805.00932, 2018.) that uses a ResNeXt architecture trained on 3.5 billion social media images which we apply to both. For text, we use a Transformer sentence representation following [36] (P.-E. Mazare, S. Humeau, M. Raison, and A. Bordes. ´ Training Millions of Personalized Dialogue Agents. ArXiv e-prints, Sept. 2018.) trained on 1.7 billion dialogue examples. Our generative model gives a new state-of-the-art on COCO caption generation, and our retrieval architecture, TransResNet, yields the highest known R@1 score on the Flickr30k dataset.

对于图像表示,我们采用了[32]的工作(D. Mahajan,RB Girshick,V。Ramanathan,K。He,M。Paluri,Y。Li,A。Bharambe和L. van der Maaten。探索极限弱监督的预训练(CoRR,abs / 1805.00932,2018.),该方法使用ResNeXt架构对35亿个社交媒体图像进行了训练,我们将两者应用于这两个方面。 对于文本,我们使用[36]之后的Transformer句子表示形式(P.-E. Mazare,S。Humeau,M。Raison和A. Bordes。“培训数百万个性化对话代理。ArXiv电子版,2018年9月。)接受了17亿个对话示例的培训。 我们的生成模型提供了有关COCO字幕生成的最新技术,而我们的检索体系结构TransResNet在Flickr30k数据集上产生的已知R @ 1得分最高。

Read more about it here.

在此处了解更多信息。

Bonus: Three more interesting projects (I’ll just include links for now [because researching and writing this article took up way too much time rip]), but comment if you want technical explanations or summaries!) —

奖励:另外三个有趣的项目(我现在仅包括链接(因为研究和撰写本文占用了太多时间),但是如果您需要技术说明或摘要,请发表评论!)

6.聆听5秒钟后会克隆您的声音的AI:链接 (6. AI that clones your voice after listening for 5 seconds: link)

7.从您的照片创建真实场景的AI:链接(7. AI that creates real scenes from your photos: link)

8.恢复旧图像:链接(8. Restoring old images: link)

结束语(Concluding remarks)

When I was reading through the various deep tech related research papers, I really started to worry about how easily these techniques can potentially be misused and cause detrimental consequences to our modern society as a whole. Even though some of them may seem innocuous, the gap between an innocent toy project and a powerful manipulative tool is extremely narrow. I sincerely hope there would be some frameworks or standards soon so we acquire a controlled assistant rather than unleash a monster.

当我阅读各种与深度技术相关的研究论文时,我真的开始担心这些技术有多么容易被滥用并给整个现代社会带来不利影响。 即使其中一些看上去无害,但无辜的玩具项目与强大的操纵工具之间的差距却非常狭窄。 我衷心希望很快会有一些框架或标准,以便我们获得受控助手而不是释放怪物。

That’s all I have to share for now, if I get any of the concepts or ideas wrong I do sincerely apologize — if you spot any mistakes please feel free to reach out to me so I can correct it as soon as possible.

这就是我现在要分享的所有内容,如果我对任何概念或想法有误,我都深表歉意。如果您发现任何错误,请随时与我联系,以便我尽快予以纠正。

Thank you for reading this article.

感谢您阅读本文。

翻译自: https://medium.com/@m.fortitudo.fr/5-mind-blowing-machine-learning-deep-tech-projects-b33479318986