机器人手眼标定-python

因为科研需要,所以进行眼在手上的手眼标定,期间遇到了一些bug,特此记录

matlab算出的棋盘格姿态有问题,所以改用opencv进行,如果有读者也发现matlab有问题,建议改用opencv

手眼标定的原理请参考网上其他资料

准备工作

- 移动机器人拍摄多张棋盘格照片,如果没有现成的棋盘格,可以从这个网址上下载:棋盘格下载,并记录机器人末端的姿态。

- 使用opencv进行相机标定,并且输出棋盘格相对于相机的姿态。

- 采集机器人末端相对于基座的位姿。

- 图像的顺序与机器人末端位姿的顺序必须对应

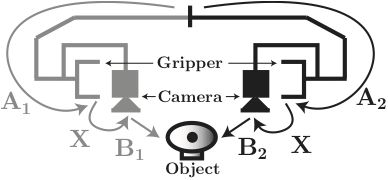

- 将棋盘格位姿“做差”( Δ T B = B 1 − 1 ⋅ B 2 \rm \Delta T_B=B_1^{-1}\cdot B_2 ΔTB=B1−1⋅B2),得到序列中相邻棋盘格位姿差矩阵。

- 将机器人末端的位姿“做差”( Δ T A = A 1 ⋅ A 2 − 1 \rm \Delta T_A=A_1\cdot A_2^{-1} ΔTA=A1⋅A2−1), 得到序列中相邻机器人末端位姿差矩阵。

有等式:

Δ T A ⋅ X = X ⋅ Δ T B \Delta T_A\cdot X=X\cdot \Delta T_B ΔTA⋅X=X⋅ΔTB

- 手眼标定问题就变成了 A X = X B AX=XB AX=XB的问题,再进行求解,得到手眼矩阵。

代码

部分代码以及示意图来自于以下网址:

代码参考1

代码参考2

-

先运行python代码,得到相机内参矩阵、畸变系数和棋盘格的姿态

python代码

# coding=utf-8

# copied by ysh in 2021/12/08

"""

用于相机标定和相机的手眼标定

A2^{-1}*A1*X=X*B2*B1^{−1}

"""

import os.path

import cv2

import numpy as np

np.set_printoptions(precision=8,suppress=True)

import glob

path = os.path.dirname(__file__)

# 角点的个数以及棋盘格间距

XX = 7

YY = 5

L = 0.008

# 设置寻找亚像素角点的参数,采用的停止准则是最大循环次数30和最大误差容限0.001

criteria = (cv2.TERM_CRITERIA_MAX_ITER | cv2.TERM_CRITERIA_EPS, 30, 0.001)

# 获取标定板角点的位置

objp = np.zeros((XX * YY, 3), np.float32)

objp[:, :2] = np.mgrid[0:XX, 0:YY].T.reshape(-1, 2) # 将世界坐标系建在标定板上,所有点的Z坐标全部为0,所以只需要赋值x和y

objp = L*objp

obj_points = [] # 存储3D点

img_points = [] # 存储2D点

images = glob.glob(f'{path}/figure/*.jpg')

i = 0

for fname in images:

img = cv2.imread(fname)

# cv2.imshow('img',img)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

size = gray.shape[::-1]

ret, corners = cv2.findChessboardCorners(gray, (XX, YY), None)

print(ret)

if ret:

obj_points.append(objp)

corners2 = cv2.cornerSubPix(gray, corners, (5, 5), (-1, -1), criteria) # 在原角点的基础上寻找亚像素角点

#print(corners2)

if [corners2]:

img_points.append(corners2)

else:

img_points.append(corners)

cv2.drawChessboardCorners(img, (XX, YY), corners, ret)

# 红色为第一个点,蓝色为最后一个点,先X轴再Y轴

cv2.imwrite(f'{path}/figure_save/{i}.jpg', img)

i = i+1

# cv2.imshow('img', img)

# cv2.waitKey(2000)

N = len(img_points)

print(f'图像个数:{N}')

# cv2.destroyAllWindows()

# 标定,得到图案在相机坐标系下的位姿

ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(obj_points, img_points, size, None, None)

# print("ret:", ret)

print("内参矩阵:\n", mtx) # 内参数矩阵

print("畸变系数:\n", dist) # 畸变系数 distortion cofficients = (k_1,k_2,p_1,p_2,k_3)

# print("rvecs:\n", rvecs) # 旋转向量 # 外参数

# print("tvecs:\n", tvecs ) # 平移向量 # 外参数

print("-----------------------------------------------------")

# img2 = cv2.imread(f'{path}/figure/*.jpg')

i = 0

for fname in images:

figure = cv2.imread(fname)

h, w = figure.shape[:2]

newcameramtx, roi=cv2.getOptimalNewCameraMatrix(mtx,dist,(w,h),0,(w,h)) # 自由比例参数

dst = cv2.undistort(figure, mtx, dist, None, newcameramtx)

cv2.imwrite(f'{path}/figure_undist/{i}.jpg', dst)

i = i + 1

# 机器人末端在基座标系下的位姿

tool_pose = np.loadtxt(f'{path}/RobotToolPose.csv',delimiter=',')

R_tool = []

t_tool = []

for i in range(int(N)):

R_tool.append(tool_pose[0:3,4*i:4*i+3])

t_tool.append(tool_pose[0:3,4*i+3])

R, t = cv2.calibrateHandEye(R_tool, t_tool, rvecs, tvecs, cv2.CALIB_HAND_EYE_TSAI)

T_tool_camera = np.hstack((R, t))

T_tool_camera = np.vstack((T_tool_camera, np.array([0,0,0,1])))

print(f'相机在机器人末端坐标系的位姿:\n{T_tool_camera}')

with open(f'{path}/camera.txt', 'w') as f:

f.write(f'{mtx}\n')

f.write(f'{dist}\n')

f.write(f'{T_tool_camera}')

部分代码从网上摘取,侵删!

备注

发现一个基于ROS的手眼标定程序,有“眼在手上(eye in hand)”和“眼在手外(eye to hand)”,没来得及仔细看代码,先把链接贴在这。

手眼标定ROS代码