MMA算法的推导及3D简支梁拓扑优化代码详解

文章目录

- MMA算法的推导及代码详解

-

- 问题描述

- 算法推导

- 结果展示

- 代码及注释

- 参考文献

MMA算法的推导及代码详解

对于变密度的参数化方法,设计变量x为材料相对密度,在已知材料的物性,包括弹性模型、密度以及给定载荷的条件下,我们希望简支梁的柔度最小,或者说使得结构势能最小(结构在力作用下的位移,该力做的功也就是势能)。那么当材料总体积保持为常数不变,给定载荷不变的情况下柔度最小可以理解为结构刚度最强。

问题描述

在结构力学相关设计中,通常会出现结构冗余的情况,例如受到一定载荷作用下的悬臂梁和简支梁其结构质量可以进一步减小。这一点在桥梁等设计中体现的更为明显。但是传统的桥梁设计方案是根据经验选择合适的桁架结构然后不断迭代设计方案。这种做法十分繁琐,目前我们可以通过拓扑优化的技术在概念设计阶段就提出最优方案,减少材料的浪费。

目前拓扑优化的参数化方法种类有很多,例如变密度法、水平集法、构型理论等。其中比较常见的是变密度法。密度法假设有一种材料密度可以在0-1之间变化。以这种参数为设计变量,密度为1代表材料存在,密度为0代表材料不存在。这样就在拓扑优化问题与材料分布问题之间建立了联系。

拓扑优化问题与传统最优化问题在基本原理上互通,其数学本质为求多元函数的极值问题。其基本形式可以进行如下抽象:

设 x = ( x 1 , x 2 , ⋅ ⋅ ⋅ x n ) T \mathbf{x} = \left( x_{1},x_{2}, \cdot \cdot \cdot x_{n} \right)^{T} x=(x1,x2,⋅⋅⋅xn)T为n维欧氏空间 R n \mathbf{R}^{n} Rn内一点, f ( x ) , g i ( x ) ( i = 1 , 2 , ⋅ ⋅ ⋅ , m ) f\left( \mathbf{x} \right),g_{i}\left( \mathbf{x} \right)\left( i = 1,2, \cdot \cdot \cdot ,m \right) f(x),gi(x)(i=1,2,⋅⋅⋅,m)和 h j ( x ) ( j = m + 1 , ⋅ ⋅ ⋅ , p ) h_{j}\left( \mathbf{x} \right)\left( j = m + 1, \cdot \cdot \cdot ,p \right) hj(x)(j=m+1,⋅⋅⋅,p)均为定的n元函数。最优化问题可表述为:在如下的约束条件下:

g i ( x ) ≤ 0 i = 1 , 2 ⋅ ⋅ ⋅ m g_{i}\left( \mathbf{x} \right) \leq 0~~~~i = 1,2 \cdot \cdot \cdot m gi(x)≤0 i=1,2⋅⋅⋅m

h j ( x ) = 0 j = m + 1 , ⋅ ⋅ ⋅ p h_{j}\left( \mathbf{x} \right) = 0~~~~j = m + 1, \cdot \cdot \cdot p hj(x)=0 j=m+1,⋅⋅⋅p

求 f ( x ) f(x) f(x)的最小值(或最大值)。通常称 f ( x ) f(x) f(x)为目标函数, g i ( x ) ≤ 0 g_{i}\left( \mathbf{x} \right) \leq 0 gi(x)≤0为不等式约束条件, h j ( x ) = 0 h_{j}\left( \mathbf{x} \right) = 0 hj(x)=0为等式约束条件, x x x为设计变量。通用的表达形式为:

{ min f ( x ) s . t . g i ( x ) ≤ 0 i = 1 , 2 , ⋅ ⋅ ⋅ m h j ( x ) = 0 j = m + 1 , ⋅ ⋅ ⋅ p x ∈ R n \left\{ \begin{matrix} {\min{~~~~f\left( \mathbf{x} \right)}} \\ {s.t.~~~~g_{i}\left( \mathbf{x} \right. )\leq 0~~~~i = 1,2, \cdot \cdot \cdot m~} \\ {h_{j}\left(\mathbf{x} \right.) = 0~~~~j = m + 1, \cdot \cdot \cdot p} \\ {x \in R^{n}} \\ \end{matrix} \right. ⎩⎪⎪⎨⎪⎪⎧min f(x)s.t. gi(x)≤0 i=1,2,⋅⋅⋅m hj(x)=0 j=m+1,⋅⋅⋅px∈Rn

上式又称优化列式,是待求解问题的表达式。

令 R = { x | g i ( x ) ≤ 0 , i = 1 , 2 , ⋅ ⋅ ⋅ m ; h j ( x ) = 0 , j = m + 1 , ⋅ ⋅ ⋅ p } R = \left\{ x \middle| g_{i}\left( x \right) \leq 0,i = 1,2, \cdot \cdot \cdot m;h_{j}\left( x \right) = 0,j = m + 1, \cdot \cdot \cdot p \right\} R={x∣gi(x)≤0,i=1,2,⋅⋅⋅m;hj(x)=0,j=m+1,⋅⋅⋅p}称 x ∈ R x \in R x∈R为上述问题的一个可行解集。

算法推导

基于OC算法并不适用于求解多约束条件的复杂问题,这时候学术界更多使用基于一阶泰勒展开的移动渐近线法[1]。OC算法的详细推导和2D拓扑优化可以看我的另一篇文章https://blog.csdn.net/qq_42183549/article/details/122369170

这样复杂的优化问题一般可以被描述为:

P : P: P:

{ m i n f 0 ( x ) f i ( x ) ≤ f i ^ f o r i = 1 , … , m x j m i n ≤ x j ≤ x j m a x \begin{cases} min~~~~~f_0(x)\\ f_i(x)\le\widehat{f_i}~~~~~for~i=1,\dots,m\\ x_jmin\le~x_j\le~x_jmax \end{cases} ⎩⎪⎨⎪⎧min f0(x)fi(x)≤fi for i=1,…,mxjmin≤ xj≤ xjmax

对该问题的简化分为四步:

- 选择合适的初始值

- 计算迭代后得到的 x ( k ) x^{(k)} x(k)以及每个约束函数的梯度

- 产生原问题严格凸子问题 P ( k ) P^{(k)} P(k)

- 利用迭代产生的结果求解子问题,并返回第二步

所以求解该问题的关键在于生成严格凸的子问题。

对于第k步迭代产生的变量 x ( k ) x^{(k)} x(k)

L j ( k ) < x j ( k ) < U j ( k ) L_j^{(k)}

对于第k次迭代的约束条件 f i ( k ) f_i^{(k)} fi(k)被定义为:

f i ( k ) = r i ( k ) + ∑ j = 1 n ( p i j ( k ) U j ( k ) − x j + q i j ( k ) x j − L j ( k ) ) f_i^{(k)}=r_i^{(k)}+\sum_{j=1}^{n}(\frac{p_{ij}^{(k)}}{U_j^{(k)}-x_j}+\frac{q_{ij}^{(k)}}{x_j-L_j^{(k)}}) fi(k)=ri(k)+j=1∑n(Uj(k)−xjpij(k)+xj−Lj(k)qij(k))

w h e r e p i j ( k ) = { ( U j ( k ) − x j ( k ) ) 2 ∂ f i ∂ x j , i f ∂ f i x j > 0 0 , i f ∂ f i x j ≤ 0 where~~~p_{ij}^{(k)}=\begin{cases} (U_{j}^{(k)}-x_j^{(k)})^2 \frac{\partial{f_i}}{\partial{x_j}},~~if\frac{\partial{f_i}}{x_j}>0\\ 0,~~~~~~~~~~~~~~~~~~~~~~~~~~~~~if\frac{\partial{f_i}}{x_j}\le0 \end{cases} where pij(k)={(Uj(k)−xj(k))2∂xj∂fi, ifxj∂fi>00, ifxj∂fi≤0

q i j ( k ) = { 0 , i f ∂ f i x j ≥ 0 − ( x j ( k ) − L j ( k ) ) 2 ∂ f i ∂ x j , i f ∂ f i x j < 0 q_{ij}^{(k)}=\begin{cases} 0,~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~if\frac{\partial{f_i}}{x_j}\geq0\\ -(x_{j}^{(k)}-L_j^{(k)})^2 \frac{\partial{f_i}}{\partial{x_j}},~~if\frac{\partial{f_i}}{x_j}<0\\ \end{cases} qij(k)={0, ifxj∂fi≥0−(xj(k)−Lj(k))2∂xj∂fi, ifxj∂fi<0

r i ( k ) = f i ( x ( k ) ) − ∑ j = 1 n ( p i j ( k ) U j ( k ) − x j ( k ) + q i j ( k ) x j ( k ) − L j ( k ) ) r_i^{(k)}=f_i(x^{(k)})-\sum_{j=1}^{n}(\frac{p_{ij}^{(k)}}{U_j^{(k)}-x_j^{(k)}}+\frac{q_{ij}^{(k)}}{x_j^{(k)}-L_j^{(k)}}) ri(k)=fi(x(k))−j=1∑n(Uj(k)−xj(k)pij(k)+xj(k)−Lj(k)qij(k))

上式中所有的偏导数 ∂ f i ∂ x j \frac{\partial{f_i}}{\partial{x_j}} ∂xj∂fi均为 x = x ( k ) x=x^{(k)} x=x(k)时取得。

可以看到当 U → + ∞ U\to{+\infty} U→+∞时,有:

f i ( k ) = f i ( x ( k ) ) + ∂ f i ∂ x ⋅ ( x − x k ) ⋅ ( U − x k ) ( U − x ) f_i^{(k)}=f_i(x^{(k)})+\frac{\partial{f_i}}{\partial{x}}\cdot{(x-x^{k})}\cdot\frac{(U-x^k)}{(U-x)} fi(k)=fi(x(k))+∂x∂fi⋅(x−xk)⋅(U−x)(U−xk)

= f i ( x ( k ) ) + ∂ f i ∂ x ⋅ ( x − x k ) =f_i(x^{(k)})+\frac{\partial{f_i}}{\partial{x}}\cdot{(x-x^{k})} =fi(x(k))+∂x∂fi⋅(x−xk)

这显然就是一阶泰勒展开的公式。所以有很多文献都说MMA方法是一种基于一阶泰勒展开的序列凸近似。事实上,大部分的凸近似都借鉴过一阶泰勒展开的思想。

当 L → − ∞ L\to{-\infty} L→−∞可以得到相似的结论。

但根据我们绝倒的高等数学的知识,一阶泰勒展开的代数精度很低,只有当近似函数的区间就在展开点附近时,一阶泰勒才近似成立,所以我们需要引入新的参数对这个展开的区间进行限制也就有了下面将要讲到的 α 和 β \alpha和\beta α和β,只限制区间或许还不够,我们希望近似函数不完全是线性的,这样我们就可以更容易获得严格凸的特性,于是 U 和 L U和L U和L就又起到了控制曲率的作用。

这样做的好处是每一个子问题 P ( k ) P^{(k)} P(k),其约束条件 f i ( k ) f_i^{(k)} fi(k):

∂ 2 f i ( k ) ∂ x j ( 2 ) = 2 p i j ( k ) ( U j ( k ) − x j ) 3 + 2 q i j ( k ) ( x j − L j ( k ) ) 3 \frac{\partial^2{f_i^{(k)}}}{\partial{x_j^{(2)}}}=\frac{2p_{ij^{(k)}}}{(U_j^{(k)}-x_j)^3}+\frac{2q_{ij}^{(k)}}{(x_j-L_j^{(k)})^{3}} ∂xj(2)∂2fi(k)=(Uj(k)−xj)32pij(k)+(xj−Lj(k))32qij(k)

∂ 2 f i ( k ) ∂ x j ∂ x i = 0 , i f i ≠ j \frac{\partial^2f_i^{(k)}}{\partial{x_j}\partial{x_i}}=0,if~i\neq{j} ∂xj∂xi∂2fi(k)=0,if i=j

又因为 p i j ( k ) ≥ 0 , q i j ( k ) ≥ 0 p_{ij}^{(k)}\geq0,q_{ij}^{(k)}\geq0 pij(k)≥0,qij(k)≥0, f i ( k ) f_i^{(k)} fi(k)是一个严格凸的函数,这样就完成了从非凸向凸的转化。

易得近似函数有一条渐近线 x j = U j x_j=U_j xj=Uj,通过调整 U j U_j Uj的值控制近似函数的边界和曲率。该种近似方法的本质是一阶泰勒展开,在 x − > x ( k ) x->x^{(k)} x−>x(k)时有较好的近似效果,所以我们提出变量 x j x_j xj更精确的取值范围,即下次一迭代结果 x ( k + 1 ) x^{(k+1)} x(k+1)不会离 x ( k ) x^{(k)} x(k)太远。

定义 L J ( k ) ≤ α j ( k ) ≤ x j ( k ) ≤ β j ( k ) ≤ U j ( k ) L_J^{(k)}\le\alpha_{j}^{(k)}\leq{x_j}^{(k)}\leq{\beta_j}^{(k)}\le{U_j}^{(k)} LJ(k)≤αj(k)≤xj(k)≤βj(k)≤Uj(k)其中 α j ( k ) = 0.9 ∗ L j ( k ) + 0.1 ∗ x j ( k ) \alpha_j^{(k)}=0.9*L_j^{(k)}+0.1*x_j^{(k)} αj(k)=0.9∗Lj(k)+0.1∗xj(k), β j ( k ) = 0.9 ∗ U j ( k ) + 0.1 ∗ x j ( k ) \beta_j^{(k)}=0.9*U_j^{(k)}+0.1*x_j^{(k)} βj(k)=0.9∗Uj(k)+0.1∗xj(k),在实际计算过程中U和L一般是通过物理条件确定的定值,可以不随迭代发生变化。但是在复杂问题中,如果可行区间更小则收敛性更好。这里提供一种迭代方法:

在迭代的前两步可以取:

L j ( k ) = x j ( k ) − ( x j m a x − x j m i n ) & U j ( k ) = x j ( k ) + ( x j m a x − x j m i n ) L^{(k)}_j=x_j^{(k)}-(x_jmax-x_jmin)~~\&~~U^{(k)}_j=x_j^{(k)}+(x_jmax-x_jmin) Lj(k)=xj(k)−(xjmax−xjmin) & Uj(k)=xj(k)+(xjmax−xjmin)

当K ≥ \ge ≥K+1:

L j ( k ) = x j ( k ) − s ( x j ( k − 1 ) − L j ( k − 1 ) ) & U j ( k ) = x j ( k ) + s ( x j ( k − 1 ) − U j ( k − 1 ) ) L^{(k)}_j=x_j^{(k)}-s(x_j^{(k-1)}-L_j^{(k-1)})~~\&~~U^{(k)}_j=x_j^{(k)}+s(x_j^{(k-1)}-U_j^{(k-1)}) Lj(k)=xj(k)−s(xj(k−1)−Lj(k−1)) & Uj(k)=xj(k)+s(xj(k−1)−Uj(k−1))

如此操作,我们可以得到一系列严格的凸子问题 P ( k ) P^{(k)} P(k):

m i n ∑ j = 1 n ( p 0 j U j − x j − q 0 j x j − L j ) + r 0 min~~~~~~~~~~~~~~~~~\sum_{j=1}^{n}(\frac{p_0j}{U_j-x_j}-\frac{q_0j}{x_j-L_j})+r_0 min j=1∑n(Uj−xjp0j−xj−Ljq0j)+r0

s . t . ∑ j = 1 n ( p i j U j − x j − q i j x j − L j ) ≤ b i s.t.\sum_{j=1}^{n}(\frac{p_{ij}}{U_j-x_j}-\frac{q_{ij}}{x_j-L_j})\le{b_i} s.t.j=1∑n(Uj−xjpij−xj−Ljqij)≤bi

α j ≤ x j ≤ β j \alpha_{j}\leq{x_j}\leq{\beta_j} αj≤xj≤βj

求解 p ( k ) p^{(k)} p(k)可列拉格朗日函数:

l ( x , y ) = f 0 ( k ) ( x ) + ∑ j = 1 n y i f i ( k ) ( x ) l(x,y)=f_0^{(k)}(x)+\sum_{j=1}^{n}y_if_i^{(k)}(x) l(x,y)=f0(k)(x)+j=1∑nyifi(k)(x)

写成分量的形式:

l ( x , y ) = p 0 j + y T p j U j − x j − q 0 j + y T q j x j − L j l(x,y)=\frac{p_{0j}+y^Tp_j}{U_j-x_j}-\frac{q_{0j}+y^Tq_j}{x_j-L_j} l(x,y)=Uj−xjp0j+yTpj−xj−Ljq0j+yTqj

其对偶函数 W ( y ) W(y) W(y)可以写为:

W ( y ) = m i n x { L ( x , y ) ; α j ≤ x j ≤ β j , f o r a l l j } W(y)=\underset{x}{min}\{{L(x,y);\alpha_{j}\leq{x_j}\leq{\beta_j}},for~all~j\} W(y)=xmin{L(x,y);αj≤xj≤βj,for all j}

根据凸优化[2]>的相关知识,求原问题的极小值等价于求对偶问题的极大值。

P ( K ) P^{(K)} P(K)可以转化为对偶问题:

m a x W ( y ) = r 0 − y T b + ∑ j = 1 n ( p 0 j + y T p j U j − x j − q 0 j + y T q j x j − L j ) max~~~~W(y)=r_0-y^Tb+\sum_{j=1}^{n}(\frac{p_{0j}+y^Tp_j}{U_j-x_j}-\frac{q_{0j}+y^Tq_j}{x_j-L_j}) max W(y)=r0−yTb+j=1∑n(Uj−xjp0j+yTpj−xj−Ljq0j+yTqj)

s . t . y ≥ 0 s.t.~~~~~~~y\geq{0} s.t. y≥0

在第一次迭代的过程中如果起始点选择不当可能会出现子问题 P ( K ) P^{(K)} P(K) 不可行,即没有任何可行解。为了解决这一问题我们引入变量 z i z_i zi于是子问题可以写为 P ~ ( k ) \tilde{P}^{(k)} P~(k):

m i n i m i z e ∑ j = 1 n ( p 0 j U j − x j − q 0 j x j − L j ) + ∑ i = 1 m ( d i z i + d i z i 2 ) + r 0 minimize~~~~~~~~~~\sum_{j=1}^{n}(\frac{p_{0j}}{U_j-x_j}-\frac{q_{0j}}{x_j-L_j})+\sum_{i=1}^{m}(d_iz_i+d_iz_i^2)+r_0 minimize j=1∑n(Uj−xjp0j−xj−Ljq0j)+i=1∑m(dizi+dizi2)+r0

s . t . ∑ j = 1 n ( p i j U j − x j − q i j x j − L j ) − z i ≤ b i , f o r i = 1 , . . . , m s.t. ~~~~~~~~~~~~~~~~~~~~\sum_{j=1}^{n}(\frac{p_{ij}}{U_j-x_j}-\frac{q_{ij}}{x_j-L_j})-z_i\leq{b_i},~for~i=1,...,m s.t. j=1∑n(Uj−xjpij−xj−Ljqij)−zi≤bi, for i=1,...,m

a n d α j ≤ x j ≤ β j , f o r j = 1 , . . . , n , z i ≥ 0 , f o r i = 1 , . . . , m and~~~~~~~~~~~~~~~~~~~~\alpha_{j}\leq{x_j}\leq{\beta_j},~for~j=1,...,n~,z_i\ge{0},~for~i=1,...,m and αj≤xj≤βj, for j=1,...,n ,zi≥0, for i=1,...,m

其中 p i j ≥ 0 , q i j ≥ 0 , L j ≤ α j ≤ β j ≤ U j , d i > 0 p_{ij}\ge0,q_{ij}\ge0, L_j\le\alpha_{j}\leq{\beta_j}\le{U_j},d_i>0 pij≥0,qij≥0,Lj≤αj≤βj≤Uj,di>0,每个 d i d_i di都应该是一个相对较大的数。很容易证明如果该系数足够大那么在子问题 P ( k ) P^{(k)} P(k)中自变量 z i z_i zi会自动变为0,另一方面,如果未修改的子问题 P ( k ) P^{(k)} P(k)不可行,则在 P ~ ( k ) \tilde{P}^{(k)} P~(k)中一些自变量 z i z_i zi应该是严格正的。在实践中 d i d_i di不必太大,应为过大的 d i d_i di会造成数值上的不稳定,可以取10或100。

结果展示

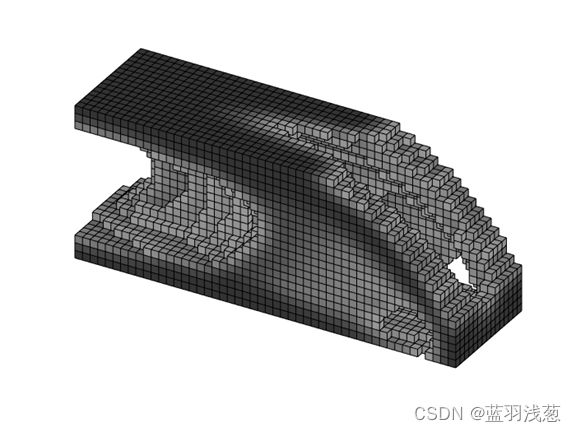

给定参数top3d(30,20,10,0.4,3,1.5),参数的意义分别为长、宽、高、网格尺度、罚函数、最小过滤半径。可以得到结果:

代码及注释

源代码来自kai liu[3],原文使用OC算法,作者完成了MMA算法的移植。

代码已上传至https://download.csdn.net/download/qq_42183549/74926750

top3d

%本文给定top3d(30,20,10,0.4,3,1.5)

function top3d(nelx,nely,nelz,volfrac,penal,rmin)

% 定义循环参数

maxloop = 200; % 最大迭代次数

tolx = 0.01; % 终止条件(残差)

displayflag = 0; % 显示结构表示

% 材料的属性

E0 = 1; % 固体区域的杨氏模量

Emin = 1e-9; % 非固体区域的杨氏模量,尽可能小但是为了避免奇异性一般不取0

nu = 0.3; % 泊松比

% USER-DEFINED LOAD DOFs

[il,jl,kl] = meshgrid(nelx, 0, 0:nelz); % Coordinates

loadnid = kl*(nelx+1)*(nely+1)+il*(nely+1)+(nely+1-jl); % Node IDs

loaddof = 3*loadnid(:) - 1; % DOFs

% USER-DEFINED SUPPORT FIXED DOFs

[iif,jf,kf] = meshgrid(0,0:nely,0:nelz); % Coordinates

fixednid = kf*(nelx+1)*(nely+1)+iif*(nely+1)+(nely+1-jf); % Node IDs

fixeddof = [3*fixednid(:); 3*fixednid(:)-1; 3*fixednid(:)-2]; % DOFs

% 有限元分析程序

nele = nelx*nely*nelz;

ndof = 3*(nelx+1)*(nely+1)*(nelz+1);

F = sparse(loaddof,1,-1,ndof,1);

U = zeros(ndof,1);

freedofs = setdiff(1:ndof,fixeddof);

KE = lk_H8(nu);

nodegrd = reshape(1:(nely+1)*(nelx+1),nely+1,nelx+1);

nodeids = reshape(nodegrd(1:end-1,1:end-1),nely*nelx,1);

nodeidz = 0:(nely+1)*(nelx+1):(nelz-1)*(nely+1)*(nelx+1);

nodeids = repmat(nodeids,size(nodeidz))+repmat(nodeidz,size(nodeids));

edofVec = 3*nodeids(:)+1;

edofMat = repmat(edofVec,1,24)+ ...

repmat([0 1 2 3*nely + [3 4 5 0 1 2] -3 -2 -1 ...

3*(nely+1)*(nelx+1)+[0 1 2 3*nely + [3 4 5 0 1 2] -3 -2 -1]],nele,1);

iK = reshape(kron(edofMat,ones(24,1))',24*24*nele,1);

jK = reshape(kron(edofMat,ones(1,24))',24*24*nele,1);

% 过滤器

iH = ones(nele*(2*(ceil(rmin)-1)+1)^2,1);

jH = ones(size(iH));

sH = zeros(size(iH));

k = 0;

for k1 = 1:nelz

for i1 = 1:nelx

for j1 = 1:nely

e1 = (k1-1)*nelx*nely + (i1-1)*nely+j1;

for k2 = max(k1-(ceil(rmin)-1),1):min(k1+(ceil(rmin)-1),nelz)

for i2 = max(i1-(ceil(rmin)-1),1):min(i1+(ceil(rmin)-1),nelx)

for j2 = max(j1-(ceil(rmin)-1),1):min(j1+(ceil(rmin)-1),nely)

e2 = (k2-1)*nelx*nely + (i2-1)*nely+j2;

k = k+1;

iH(k) = e1;

jH(k) = e2;

sH(k) = max(0,rmin-sqrt((i1-i2)^2+(j1-j2)^2+(k1-k2)^2));

end

end

end

end

end

end

H = sparse(iH,jH,sH);

Hs = sum(H,2);

% 迭代初始化

x = repmat(volfrac,[nely,nelx,nelz]);

xPhys = x;

loop = 0;

change = 1;

% 开始迭代

while change > tolx && loop < maxloop

loop = loop+1;

% 有限元分析

sK = reshape(KE(:)*(Emin+xPhys(:)'.^penal*(E0-Emin)),24*24*nele,1);

K = sparse(iK,jK,sK); K = (K+K')/2;

U(freedofs,:) = K(freedofs,freedofs)\F(freedofs,:);

% 定义目标函数与灵敏度分析

ce = reshape(sum((U(edofMat)*KE).*U(edofMat),2),[nely,nelx,nelz]);

c = sum(sum(sum((Emin+xPhys.^penal*(E0-Emin)).*ce)));

dc = -penal*(E0-Emin)*xPhys.^(penal-1).*ce;

dv = ones(nely,nelx,nelz);

% 对灵敏度进行过滤与投影,需要注意的是,并不能数学上证明对灵敏度进行过滤的

% 稳定性,如果出现不适定的情况可以尝试更改过滤为密度过滤

dc(:) = H*(dc(:)./Hs);

dv(:) = H*(dv(:)./Hs);

% 移动渐近线法求解

xval = x(:);

f0val = c;

df0dx = dc(:);

fval = sum(xPhys(:))/(volfrac*nele) - 1;

dfdx = dv(:)' / (volfrac*nele);

[xmma, ~, ~, ~, ~, ~, ~, ~, ~, low,upp] = ...

mmasub(m, n, loop, xval, xmin, xmax, xold1, xold2, ...

f0val,df0dx,fval,dfdx,low,upp,a0,a,c_MMA,d);

% 更新迭代变量

xnew = reshape(xmma,nely,nelx,nelz);

xPhys(:) = (H*xnew(:))./Hs;

xold2 = xold1(:);

xold1 = x(:);

change = max(abs(xnew(:)-x(:)));

x = xnew;

% 输出结果

fprintf(' It.:%5i Obj.:%11.4f Vol.:%7.3f ch.:%7.3f\n',loop,c,mean(xPhys(:)),change);

% 输出密度

if displayflag, clf; display_3D(xPhys); end %#ok

end

clf; display_3D(xPhys);

end

% === 生成单元刚度矩阵 ===

function [KE] = lk_H8(nu)

A = [32 6 -8 6 -6 4 3 -6 -10 3 -3 -3 -4 -8;

-48 0 0 -24 24 0 0 0 12 -12 0 12 12 12];

k = 1/144*A'*[1; nu];

K1 = [k(1) k(2) k(2) k(3) k(5) k(5);

k(2) k(1) k(2) k(4) k(6) k(7);

k(2) k(2) k(1) k(4) k(7) k(6);

k(3) k(4) k(4) k(1) k(8) k(8);

k(5) k(6) k(7) k(8) k(1) k(2);

k(5) k(7) k(6) k(8) k(2) k(1)];

K2 = [k(9) k(8) k(12) k(6) k(4) k(7);

k(8) k(9) k(12) k(5) k(3) k(5);

k(10) k(10) k(13) k(7) k(4) k(6);

k(6) k(5) k(11) k(9) k(2) k(10);

k(4) k(3) k(5) k(2) k(9) k(12)

k(11) k(4) k(6) k(12) k(10) k(13)];

K3 = [k(6) k(7) k(4) k(9) k(12) k(8);

k(7) k(6) k(4) k(10) k(13) k(10);

k(5) k(5) k(3) k(8) k(12) k(9);

k(9) k(10) k(2) k(6) k(11) k(5);

k(12) k(13) k(10) k(11) k(6) k(4);

k(2) k(12) k(9) k(4) k(5) k(3)];

K4 = [k(14) k(11) k(11) k(13) k(10) k(10);

k(11) k(14) k(11) k(12) k(9) k(8);

k(11) k(11) k(14) k(12) k(8) k(9);

k(13) k(12) k(12) k(14) k(7) k(7);

k(10) k(9) k(8) k(7) k(14) k(11);

k(10) k(8) k(9) k(7) k(11) k(14)];

K5 = [k(1) k(2) k(8) k(3) k(5) k(4);

k(2) k(1) k(8) k(4) k(6) k(11);

k(8) k(8) k(1) k(5) k(11) k(6);

k(3) k(4) k(5) k(1) k(8) k(2);

k(5) k(6) k(11) k(8) k(1) k(8);

k(4) k(11) k(6) k(2) k(8) k(1)];

K6 = [k(14) k(11) k(7) k(13) k(10) k(12);

k(11) k(14) k(7) k(12) k(9) k(2);

k(7) k(7) k(14) k(10) k(2) k(9);

k(13) k(12) k(10) k(14) k(7) k(11);

k(10) k(9) k(2) k(7) k(14) k(7);

k(12) k(2) k(9) k(11) k(7) k(14)];

KE = 1/((nu+1)*(1-2*nu))*...

[ K1 K2 K3 K4;

K2' K5 K6 K3';

K3' K6 K5' K2';

K4 K3 K2 K1'];

end

% === 展示3D效果图 (ISO-VIEW) ===

function display_3D(rho)

[nely,nelx,nelz] = size(rho);

hx = 1; hy = 1; hz = 1; % 定义效果图的单元大小

face = [1 2 3 4; 2 6 7 3; 4 3 7 8; 1 5 8 4; 1 2 6 5; 5 6 7 8];

set(gcf,'Name','ISO display','NumberTitle','off');

for k = 1:nelz

z = (k-1)*hz;

for i = 1:nelx

x = (i-1)*hx;

for j = 1:nely

y = nely*hy - (j-1)*hy;

if (rho(j,i,k) > 0.5) % 定义展示出的密度阈值

vert = [x y z; x y-hx z; x+hx y-hx z; x+hx y z; x y z+hx;x y-hx z+hx; x+hx y-hx z+hx;x+hx y z+hx];

vert(:,[2 3]) = vert(:,[3 2]); vert(:,2,:) = -vert(:,2,:);

patch('Faces',face,'Vertices',vert,'FaceColor',[0.2+0.8*(1-rho(j,i,k)),0.2+0.8*(1-rho(j,i,k)),0.2+0.8*(1-rho(j,i,k))]);

hold on;

end

end

end

end

axis equal; axis tight; axis off; box on; view([30,30]); pause(1e-6);

end

mma

%-------------------------------------------------------

% MMA求解程序 MMA.m

% 本代码将原本的非线性问题线性近似,非凸问题凸近似,得到一系列

% 线性的、凸的子问题,再通过subsolv.m迭代求解这些线性的、凸的子问题最终找到最优值点

function [xmma,ymma,zmma,lam,xsi,eta,mu,zet,s,low,upp] = ...

mmasub(m,n,iter,xval,xmin,xmax,xold1,xold2, ...

f0val,df0dx,fval,dfdx,low,upp,a0,a,c,d)

% This function mmasub performs one MMA-iteration, aimed at

% solving the nonlinear programming problem:

% 该函数进行MMA迭代,已解决符合以下形式的非线性(非凸)优化问题

% Minimize f_0(x) + a_0*z + sum( c_i*y_i + 0.5*d_i*(y_i)^2 )

% subject to f_i(x) - a_i*z - y_i <= 0, i = 1,...,m

% xmin_j <= x_j <= xmax_j, j = 1,...,n

% z >= 0, y_i >= 0, i = 1,...,m

% 输入参数有:

% m = 约束条件的数量

% n = 变量的个数

% iter = 迭代步数

% xval = 包含所有xj的向量

% xmin = 包含所有xj取值下限的向量

% xmax = 包含所有xj取值上限的向量

% xold1 = 上次迭代产生的x_val

% xold2 = 上上次迭代产生的的xval

% f0val = 目标函数的现有值

% df0dx = 包含目标函数对所有xj偏导数的列向量

% fval = 时约束函数fi值的列向量

% dfdx = x_j.fi对xj偏导数的矩阵

% low = 前一次迭代的渐近线下界

% upp = 前一次迭代的渐进线上界

% 引入下面四个量防止在初始值选择不恰当的形况下出现子问题不适定(没有结)的情况

% a0 = a_0*z中的常系数a_0

% a = 用来存储a_i*z中系数 a_i的列向量

% c = 用来存储c_i*y_i中系数c_i的列向量

% d =用来存储0.5*d_i*(y_i)^2中系数d_i的列向量,d_i应该是一个足够大的数,

% 可以证明当d_i足够大时y_i趋近于0

%输出的量:

% xmma = 子问题求解出的xj的列向量

% ymma = 子问题求解出的yi的列向量

% zmma = z的标量

% lam = m个约束对应的拉格朗日乘子

% xsi = n个α0对应的拉格朗日乘子

% s = m个约束条件对应的松弛因子

% low = 本次求解的子问题对应的渐近线的下界

% upp = 本次求解的子问题对应的渐近线上界

%

%epsimin = sqrt(m+n)*10^(-9);计算机精度的最小值

epsimin = 10^(-7);

raa0 = 0.00001;

move = 1.0;

albefa = 0.1;

asyinit = 0.5;

asyincr = 1.2;

asydecr = 0.7;

eeen = ones(n,1);

eeem = ones(m,1);

zeron = zeros(n,1);

% Calculation of the asymptotes low and upp :计算渐近线的上下界

if iter < 2.5

low = xval - asyinit*(xmax-xmin);

upp = xval + asyinit*(xmax-xmin);

else

zzz = (xval-xold1).*(xold1-xold2);

factor = eeen;

factor(find(zzz > 0)) = asyincr;

factor(find(zzz < 0)) = asydecr;

low = xval - factor.*(xold1 - low);

upp = xval + factor.*(upp - xold1);

lowmin = xval - 10*(xmax-xmin);

lowmax = xval - 0.01*(xmax-xmin);

uppmin = xval + 0.01*(xmax-xmin);

uppmax = xval + 10*(xmax-xmin);

low = max(low,lowmin);

low = min(low,lowmax);

upp = min(upp,uppmax);

upp = max(upp,uppmin);

end

% 计算设计变量的精确界限α和β

zzz1 = low + albefa*(xval-low);

zzz2 = xval - move*(xmax-xmin);

zzz = max(zzz1,zzz2);

alfa = max(zzz,xmin);

zzz1 = upp - albefa*(upp-xval);

zzz2 = xval + move*(xmax-xmin);

zzz = min(zzz1,zzz2);

beta = min(zzz,xmax);

% 计算 p0, q0, P, Q 和 b.

xmami = xmax-xmin;

xmamieps = 0.00001*eeen;

xmami = max(xmami,xmamieps);

xmamiinv = eeen./xmami;

ux1 = upp-xval;

ux2 = ux1.*ux1;

xl1 = xval-low;

xl2 = xl1.*xl1;

uxinv = eeen./ux1;

xlinv = eeen./xl1;

%

p0 = zeron;

q0 = zeron;

p0 = max(df0dx,0);

q0 = max(-df0dx,0);

%p0(find(df0dx > 0)) = df0dx(find(df0dx > 0));

%q0(find(df0dx < 0)) = -df0dx(find(df0dx < 0));

pq0 = 0.001*(p0 + q0) + raa0*xmamiinv;

p0 = p0 + pq0;

q0 = q0 + pq0;

p0 = p0.*ux2;

q0 = q0.*xl2;

%

P = sparse(m,n);

Q = sparse(m,n);

P = max(dfdx,0);

Q = max(-dfdx,0);

%P(find(dfdx > 0)) = dfdx(find(dfdx > 0));

%Q(find(dfdx < 0)) = -dfdx(find(dfdx < 0));

PQ = 0.001*(P + Q) + raa0*eeem*xmamiinv';

P = P + PQ;

Q = Q + PQ;

P = P * spdiags(ux2,0,n,n);

Q = Q * spdiags(xl2,0,n,n);

b = P*uxinv + Q*xlinv - fval ;

%

%%% 使用反演原对偶牛顿方法求解子问题

[xmma,ymma,zmma,lam,xsi,eta,mu,zet,s] = ...

subsolv(m,n,epsimin,low,upp,alfa,beta,p0,q0,P,Q,a0,a,b,c,d);

subsolve

% 子问题求解程序 subsolv.m

%

function [xmma,ymma,zmma,lamma,xsimma,etamma,mumma,zetmma,smma] = ...

subsolv(m,n,epsimin,low,upp,alfa,beta,p0,q0,P,Q,a0,a,b,c,d);

%

% 使用该函数求解MMA生成的子问题可以写成:

%

% minimize SUM[ p0j/(uppj-xj) + q0j/(xj-lowj) ] + a0*z +

% + SUM[ ci*yi + 0.5*di*(yi)^2 ],

%

% subject to SUM[ pij/(uppj-xj) + qij/(xj-lowj) ] - ai*z - yi <= bi,

% alfaj <= xj <= betaj, yi >= 0, z >= 0.

% 然后使用拉格朗日的方法求解子问题的对偶问题

% Input: m, n, low, upp, alfa, beta, p0, q0, P, Q, a0, a, b, c, d.

% Output: xmma,ymma,zmma, slack variables and Lagrange multiplers.

%

een = ones(n,1);

eem = ones(m,1);

epsi = 1;

epsvecn = epsi*een;

epsvecm = epsi*eem;

x = 0.5*(alfa+beta);

y = eem;

z = 1;

lam = eem;

xsi = een./(x-alfa);

xsi = max(xsi,een);

eta = een./(beta-x);

eta = max(eta,een);

mu = max(eem,0.5*c);

zet = 1;

s = eem;

itera = 0;

while epsi > epsimin

epsvecn = epsi*een;

epsvecm = epsi*eem;

ux1 = upp-x;

xl1 = x-low;

ux2 = ux1.*ux1;

xl2 = xl1.*xl1;

uxinv1 = een./ux1;

xlinv1 = een./xl1;

plam = p0 + P'*lam ;

qlam = q0 + Q'*lam ;

gvec = P*uxinv1 + Q*xlinv1;

dpsidx = plam./ux2 - qlam./xl2 ;

rex = dpsidx - xsi + eta;

rey = c + d.*y - mu - lam;

rez = a0 - zet - a'*lam;

relam = gvec - a*z - y + s - b;

rexsi = xsi.*(x-alfa) - epsvecn;

reeta = eta.*(beta-x) - epsvecn;

remu = mu.*y - epsvecm;

rezet = zet*z - epsi;

res = lam.*s - epsvecm;

residu1 = [rex' rey' rez]';

residu2 = [relam' rexsi' reeta' remu' rezet res']';

residu = [residu1' residu2']';

residunorm = sqrt(residu'*residu);

residumax = max(abs(residu));

ittt = 0;

while residumax > 0.9*epsi & ittt < 100

ittt=ittt + 1;

itera=itera + 1;

ux1 = upp-x;

xl1 = x-low;

ux2 = ux1.*ux1;

xl2 = xl1.*xl1;

ux3 = ux1.*ux2;

xl3 = xl1.*xl2;

uxinv1 = een./ux1;

xlinv1 = een./xl1;

uxinv2 = een./ux2;

xlinv2 = een./xl2;

plam = p0 + P'*lam ;

qlam = q0 + Q'*lam ;

gvec = P*uxinv1 + Q*xlinv1;

GG = P*spdiags(uxinv2,0,n,n) - Q*spdiags(xlinv2,0,n,n);

dpsidx = plam./ux2 - qlam./xl2 ;

delx = dpsidx - epsvecn./(x-alfa) + epsvecn./(beta-x);

dely = c + d.*y - lam - epsvecm./y;

delz = a0 - a'*lam - epsi/z;

dellam = gvec - a*z - y - b + epsvecm./lam;

diagx = plam./ux3 + qlam./xl3;

diagx = 2*diagx + xsi./(x-alfa) + eta./(beta-x);

diagxinv = een./diagx;

diagy = d + mu./y;

diagyinv = eem./diagy;

diaglam = s./lam;

diaglamyi = diaglam+diagyinv;

if m < n

blam = dellam + dely./diagy - GG*(delx./diagx);

bb = [blam' delz]';

Alam = spdiags(diaglamyi,0,m,m) + GG*spdiags(diagxinv,0,n,n)*GG';

AA = [Alam a

a' -zet/z ];

solut = AA\bb;

dlam = solut(1:m);

dz = solut(m+1);

dx = -delx./diagx - (GG'*dlam)./diagx;

else

diaglamyiinv = eem./diaglamyi;

dellamyi = dellam + dely./diagy;

Axx = spdiags(diagx,0,n,n) + GG'*spdiags(diaglamyiinv,0,m,m)*GG;

azz = zet/z + a'*(a./diaglamyi);

axz = -GG'*(a./diaglamyi);

bx = delx + GG'*(dellamyi./diaglamyi);

bz = delz - a'*(dellamyi./diaglamyi);

AA = [Axx axz

axz' azz ];

bb = [-bx' -bz]';

solut = AA\bb;

dx = solut(1:n);

dz = solut(n+1);

dlam = (GG*dx)./diaglamyi - dz*(a./diaglamyi) + dellamyi./diaglamyi;

end

dy = -dely./diagy + dlam./diagy;

dxsi = -xsi + epsvecn./(x-alfa) - (xsi.*dx)./(x-alfa);

deta = -eta + epsvecn./(beta-x) + (eta.*dx)./(beta-x);

dmu = -mu + epsvecm./y - (mu.*dy)./y;

dzet = -zet + epsi/z - zet*dz/z;

ds = -s + epsvecm./lam - (s.*dlam)./lam;

xx = [ y' z lam' xsi' eta' mu' zet s']';

dxx = [dy' dz dlam' dxsi' deta' dmu' dzet ds']';

stepxx = -1.01*dxx./xx;

stmxx = max(stepxx);

stepalfa = -1.01*dx./(x-alfa);

stmalfa = max(stepalfa);

stepbeta = 1.01*dx./(beta-x);

stmbeta = max(stepbeta);

stmalbe = max(stmalfa,stmbeta);

stmalbexx = max(stmalbe,stmxx);

stminv = max(stmalbexx,1);

steg = 1/stminv;

xold = x;

yold = y;

zold = z;

lamold = lam;

xsiold = xsi;

etaold = eta;

muold = mu;

zetold = zet;

sold = s;

itto = 0;

resinew = 2*residunorm;

while resinew > residunorm & itto < 50

itto = itto+1;

x = xold + steg*dx;

y = yold + steg*dy;

z = zold + steg*dz;

lam = lamold + steg*dlam;

xsi = xsiold + steg*dxsi;

eta = etaold + steg*deta;

mu = muold + steg*dmu;

zet = zetold + steg*dzet;

s = sold + steg*ds;

ux1 = upp-x;

xl1 = x-low;

ux2 = ux1.*ux1;

xl2 = xl1.*xl1;

uxinv1 = een./ux1;

xlinv1 = een./xl1;

plam = p0 + P'*lam ;

qlam = q0 + Q'*lam ;

gvec = P*uxinv1 + Q*xlinv1;

dpsidx = plam./ux2 - qlam./xl2 ;

rex = dpsidx - xsi + eta;

rey = c + d.*y - mu - lam;

rez = a0 - zet - a'*lam;

relam = gvec - a*z - y + s - b;

rexsi = xsi.*(x-alfa) - epsvecn;

reeta = eta.*(beta-x) - epsvecn;

remu = mu.*y - epsvecm;

rezet = zet*z - epsi;

res = lam.*s - epsvecm;

residu1 = [rex' rey' rez]';

residu2 = [relam' rexsi' reeta' remu' rezet res']';

residu = [residu1' residu2']';

resinew = sqrt(residu'*residu);

steg = steg/2;

end

residunorm=resinew;

residumax = max(abs(residu));

steg = 2*steg;

end

epsi = 0.1*epsi;

end

xmma = x;

ymma = y;

zmma = z;

lamma = lam;

xsimma = xsi;

etamma = eta;

mumma = mu;

zetmma = zet;

smma = s;

参考文献

[1]SVANBERG K. MMA and GCMMA – two methods for nonlinear optimization[J]. : 15.

[2]STEPHEN BOYD, LIEVEN VANDENBERGHE. 凸优化[M]. 王书宁, 译, 许鋆, 译, 黄晓霖, 译. 清华大学出版社.

[3]LIU K, TOVAR A. An efficient 3D topology optimization code written in Matlab[J]. Structural and Multidisciplinary Optimization, 2014, 50(6): 1175–1196.