Prometheus Operator (Part 3): Monitoring an etcd Cluster

Prometheus Operator: Monitoring an etcd Cluster

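Beyond the resource objects, node monitoring, and component monitoring that Prometheus Operator ships with, real-world workloads sometimes need custom monitoring targets.

This post customizes Prometheus Operator using etcd as the example. Because our etcd runs outside Kubernetes, monitoring it takes the following steps: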

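- Make sure metrics data is available

- Create a ServiceMonitor object, which adds the monitoring target to Prometheus

- Associate the ServiceMonitor with a Service object that exposes the metrics endpoint

- Make sure the Service can actually reach the metrics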

This walkthrough uses a Kubernetes cluster installed from binaries.

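Getting the etcd certificates

Our etcd cluster was set up with HTTPS certificate authentication, so Prometheus needs the certificates before it can read the etcd metrics.

We can use systemctl status etcd to locate the unit file, which holds the certificate paths.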

[root@k8s-01 ~]# systemctl status etcd
● etcd.service - Etcd Server
   Loaded: loaded (/etc/systemd/system/etcd.service; ## unit file path
   Active: active (running) since Mon 2020-03-09 09:47:45 CST; 8h ago
     Docs: https://github.com/coreos
 Main PID: 1055 (etcd)
    Tasks: 9
   Memory: 145.5M
   CGroup: /system.slice/etcd.service
           └─1055 /opt/k8s/bin/etcd --data-dir=/data/k8s/etcd/data --wal-dir=...
...

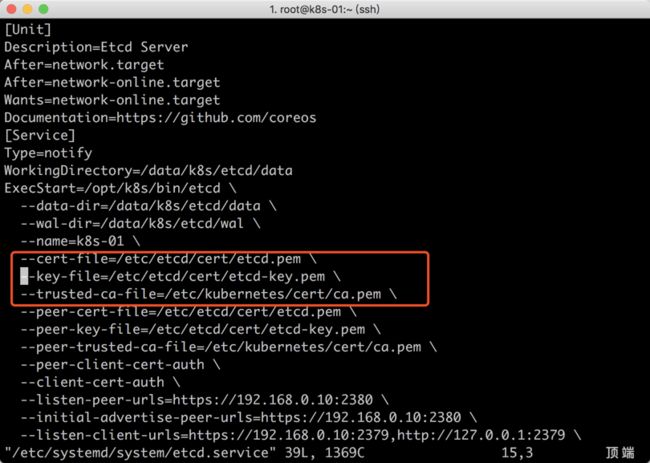

Next, open the unit file to find the etcd certificate paths.

[root@k8s-01 ~]# vim /etc/systemd/system/etcd.service
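Rather than reading the unit file by eye, the TLS flags can be pulled out with grep. This is a minimal sketch, assuming etcd receives its client certificates through the `--cert-file`, `--key-file`, and `--trusted-ca-file` flags written in `--flag=value` form; `etcd_cert_flags` is a hypothetical helper name, not part of etcd or systemd.

```shell
#!/bin/sh
# etcd_cert_flags: hypothetical helper that prints the client TLS flags
# found in an etcd systemd unit file (assumes the --flag=value form).
etcd_cert_flags() {
  # "--" stops grep from treating the pattern as an option.
  # Peer flags such as --peer-cert-file are intentionally not matched.
  grep -oE -- '--(cert-file|key-file|trusted-ca-file)=[^[:space:]\\]+' "$1"
}

# Usage on the etcd node:
#   etcd_cert_flags /etc/systemd/system/etcd.service
```

If etcd is configured through a config file or environment variables instead, this prints nothing and the paths have to be looked up there.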

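Most articles online already cover monitoring on kubeadm clusters, so I won't repeat that here. This post focuses on clusters installed from binaries.

Next, create a Secret for the Prometheus pods to mount.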

kubectl create secret generic etcd-ssl --from-file=/etc/kubernetes/cert/ca.pem --from-file=/etc/etcd/cert/etcd.pem --from-file=/etc/etcd/cert/etcd-key.pem -n monitoring

# Run this on the k8s-01 master node. ca.pem is the Kubernetes CA certificate; the other two files are the etcd certificate and its key.
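Before handing these files to Prometheus, it can help to confirm that the etcd endpoint serves metrics with them at all. The sketch below assumes the node address 192.168.0.10 and client port 2379 that appear later in this article, and that curl is installed; `count_etcd_metrics` is a hypothetical helper that counts the `etcd_` samples in Prometheus text output.

```shell
#!/bin/sh
# count_etcd_metrics: hypothetical helper that reads Prometheus text-format
# output on stdin and prints how many lines start with "etcd_".
count_etcd_metrics() {
  # grep -c exits non-zero when the count is 0; "|| true" keeps the
  # function's exit status clean while still printing the count.
  grep -c '^etcd_' || true
}

# Usage on a node that holds the certificates (paths from this article):
#   curl -s --cacert /etc/kubernetes/cert/ca.pem \
#        --cert /etc/etcd/cert/etcd.pem \
#        --key /etc/etcd/cert/etcd-key.pem \
#        https://192.168.0.10:2379/metrics | count_etcd_metrics
```

A count of 0 suggests the request failed or the certificates were rejected, which is worth fixing before moving on to the ServiceMonitor.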

After creating it, verify the result:

[root@k8s-01 ~]# kubectl describe secrets -n monitoring etcd-ssl
Name:         etcd-ssl
Namespace:    monitoring
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
ca.pem:        1371 bytes
etcd-key.pem:  1675 bytes
etcd.pem:      1444 bytes

Adding the etcd-ssl Secret to the Prometheus resource object

1. Either edit the Prometheus object directly with kubectl edit

kubectl edit prometheus k8s -n monitoring

2. Or modify the prometheus.yaml manifest

vim kube-prometheus-master/manifests/prometheus-prometheus.yaml

  nodeSelector:
    beta.kubernetes.io/os: linux
  replicas: 2
  secrets:
  - etcd-ssl  # add the Secret name here
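As a non-interactive alternative to the two options above, the same field can probably be set with a JSON merge patch. This is a sketch under assumptions: `prometheus_secrets_patch` is a hypothetical helper that only builds the patch body, and the object name `k8s` and namespace `monitoring` are the ones used in this article.

```shell
#!/bin/sh
# prometheus_secrets_patch: hypothetical helper that prints a JSON merge
# patch setting spec.secrets to the given Secret names.
prometheus_secrets_patch() {
  list=""
  for name in "$@"; do
    # Append each name as a quoted JSON string, comma-separated.
    list="${list:+$list,}\"$name\""
  done
  printf '{"spec":{"secrets":[%s]}}\n' "$list"
}

# Usage:
#   kubectl patch prometheus k8s -n monitoring --type merge \
#     -p "$(prometheus_secrets_patch etcd-ssl)"
```

A merge patch replaces the whole `secrets` list, so any Secrets already listed there need to be passed again alongside `etcd-ssl`.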

Once the update is applied, the corresponding directory shows up inside the Prometheus pod:

[root@k8s-01 ~]# kubectl exec -it -n monitoring prometheus-k8s-0 /bin/sh
Defaulting container name to prometheus.
Use 'kubectl describe pod/prometheus-k8s-0 -n monitoring' to see all of the containers in this pod.
/prometheus $ ls /etc/prometheus/secrets/etcd-ssl/
ca.pem  etcd-key.pem  etcd.pem

Creating the ServiceMonitor

Prometheus now has the etcd certificate files mounted, so the next step is to create the ServiceMonitor.

[root@k8s-01 prometheus]# cat etcd-servicemonitor.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: etcd-k8s
  namespace: monitoring
  labels:
    k8s-app: etcd-k8s
spec:
  jobLabel: k8s-app
  endpoints:
  - port: port
    interval: 30s
    scheme: https
    tlsConfig:
      caFile: /etc/prometheus/secrets/etcd-ssl/ca.pem  # certificate paths as seen inside the Prometheus pod
      certFile: /etc/prometheus/secrets/etcd-ssl/etcd.pem
      keyFile: /etc/prometheus/secrets/etcd-ssl/etcd-key.pem
      insecureSkipVerify: true
  selector:
    matchLabels:
      k8s-app: etcd
  namespaceSelector:
    matchNames:
    - kube-system

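This selects Services in the kube-system namespace that carry the label k8s-app=etcd. jobLabel names the Service label whose value is used as the job name. Because the serverName may not match the certificate issued for etcd, insecureSkipVerify: true is set, which turns off verification of the server certificate.

Now create the ServiceMonitor: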

kubectl apply -f etcd-servicemonitor.yaml

# Check the ServiceMonitor

[root@k8s-01 prometheus]# kubectl get servicemonitors -n monitoring |grep etcd

etcd-k8s 7h56m

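Creating the Service

The ServiceMonitor now exists, but no Service is associated with it yet, so we need to create one. Because etcd runs outside the cluster, the Service has no pod selector and the matching Endpoints object is defined by hand.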

[root@k8s-01 prometheus]# cat etcd-prometheus-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: port
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
subsets:
- addresses:
  - ip: 192.168.0.10  # etcd node IP
    nodeName: k8s-01  # node name as shown by kubectl get node
  - ip: 192.168.0.11
    nodeName: k8s-02
  - ip: 192.168.0.12
    nodeName: k8s-03
  ports:
  - name: port
    port: 2379
    protocol: TCP

Now create both objects:

kubectl apply -f etcd-prometheus-svc.yaml

Check the result:

[root@k8s-01 prometheus]# kubectl get svc -n kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
etcd-k8s   ClusterIP   None         <none>        2379/TCP   7h56m

You can also inspect the Service in detail to confirm it is bound to the expected IPs:

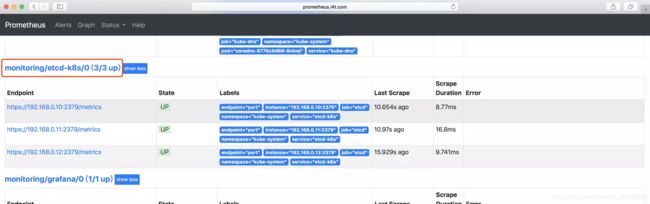
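The binding can also be checked from a script rather than by eye. A minimal sketch, assuming the three etcd addresses from the Endpoints manifest in this article; `missing_endpoint_ips` is a hypothetical helper that compares a space-separated list of actual IPs against the expected ones.

```shell
#!/bin/sh
# missing_endpoint_ips: hypothetical helper. $1 is a space-separated list of
# IPs actually present; remaining arguments are the IPs that are expected.
# Prints each expected IP that is absent, one per line.
missing_endpoint_ips() {
  actual=" $1 "   # pad with spaces so each IP is matched as a whole word
  shift
  for ip in "$@"; do
    case "$actual" in
      *" $ip "*) ;;                  # present, nothing to report
      *) printf '%s\n' "$ip" ;;      # absent
    esac
  done
}

# Usage:
#   actual=$(kubectl get endpoints etcd-k8s -n kube-system \
#     -o jsonpath='{.subsets[*].addresses[*].ip}')
#   missing_endpoint_ips "$actual" 192.168.0.10 192.168.0.11 192.168.0.12
```

Empty output means every expected etcd member is listed in the Endpoints object.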

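Once everything is created, wait a moment and open the targets page in the Prometheus UI; the etcd targets should appear.

If a target reports ip:2379 connection refused, first check that a Telnet connection to the port works locally, then check whether the etcd configuration listens on 0.0.0.0:2379.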

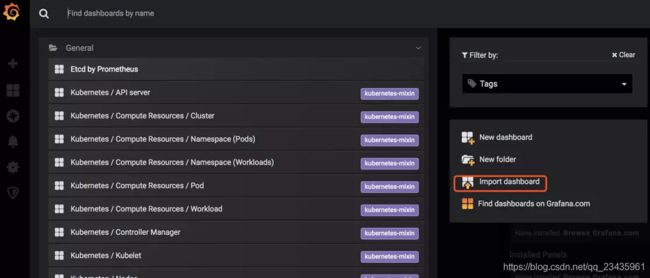
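For the connection refused case, the listen address can be read straight from the unit file. This sketch assumes etcd is started with a `--listen-client-urls=...` flag in `--flag=value` form; `etcd_listen_client_urls` is a hypothetical helper name.

```shell
#!/bin/sh
# etcd_listen_client_urls: hypothetical helper that prints the value of
# --listen-client-urls from an etcd systemd unit file.
etcd_listen_client_urls() {
  # "--" stops grep from treating the pattern as an option;
  # cut keeps everything after the first "=".
  grep -oE -- '--listen-client-urls=[^[:space:]\\]+' "$1" | cut -d= -f2-
}

# Usage on the etcd node:
#   etcd_listen_client_urls /etc/systemd/system/etcd.service
```

If the printed URLs only contain 127.0.0.1, Prometheus cannot reach etcd on the node IP, which would explain the refused connection.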

Once data is being scraped, import a dashboard into Grafana:

https://grafana.com/grafana/dashboards/3070

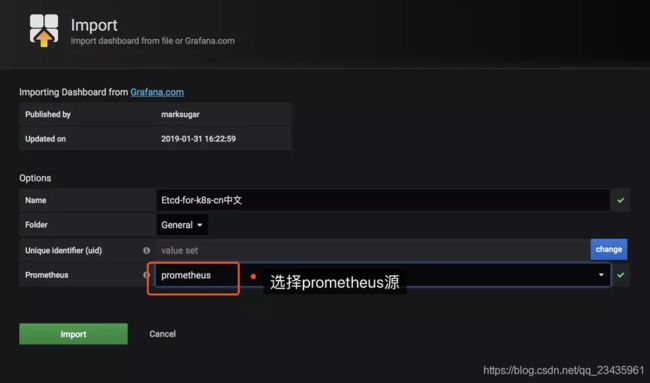

A Chinese-language etcd cluster dashboard is also available:

https://grafana.com/grafana/dashboards/9733

Click HOME -> Import dashboard

Either 3070 or 9733 can be imported here.

The 3070 dashboard looks like this:

The 9733 dashboard looks like this: