Hung-yi Lee Machine Learning Bootcamp, Homework 3: Food Image Classification

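Contents

- Project description
  - Dataset
  - Project requirements
- Data augmentation
- Reading the data
  - Collecting training-set paths and labels
  - Custom dataset reader
- Defining the model (VGG)
  - Modified VGG11
- Training
  - Retraining on all data
- Residual network
- Prediction
  - Inference with the trained VGG model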

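Project description

This homework is based on Paddle 2.0.

The goal is to train a simple convolutional neural network that classifies food images.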

Dataset

This project uses the food-11 dataset, which has 11 classes labeled 0 to 10:

Bread, Dairy product, Dessert, Egg, Fried food, Meat, Noodles/Pasta, Rice, Seafood, Soup, and Vegetable/Fruit.

Training set: 9,866 images

Validation set: 3,430 images

Testing set: 3,347 images

Data format

Unzipping the downloaded archive yields three folders: training, validation, and testing.

Images in training and validation are named [class]_[id].jpg; for example, 3_100.jpg is an image of class 3 (the id carries no meaning).
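The naming convention can be checked with a small parser. This is a minimal sketch that assumes file names follow the `[class]_[id].jpg` pattern exactly; `label_from_filename` and `LABEL_PATTERN` are illustrative names, not part of the homework code.

```python
import re

# Hypothetical helper; the pattern mirrors the "[class]_[id].jpg" convention.
LABEL_PATTERN = re.compile(r"^(\d+)_\d+\.jpg$")

def label_from_filename(filename):
    """Return the integer class encoded before the underscore, or None if the name does not match."""
    match = LABEL_PATTERN.match(filename)
    return int(match.group(1)) if match else None

print(label_from_filename("3_100.jpg"))      # 3
print(label_from_filename("10_25.jpg"))      # 10
print(label_from_filename("0_106.jpg.jpg"))  # None
```

Anchoring the pattern at both ends means names that do not fit the convention, such as unlabeled test images, come back as `None` instead of being mislabeled.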

Project requirements

- Build the model with a CNN

- Do not use any additional dataset

- Pre-trained models are not allowed (the CNN must be written by hand)

- Do not look up labels online

# Import the required libraries
import os
import random
import re

import matplotlib.pyplot as plt
import numpy as np
import PIL.Image as Image
import paddle
from paddle.io import Dataset
from paddle.vision.transforms import Compose, ColorJitter, Resize, Transpose, Normalize, RandomHorizontalFlip, RandomVerticalFlip, RandomRotation, RandomCrop

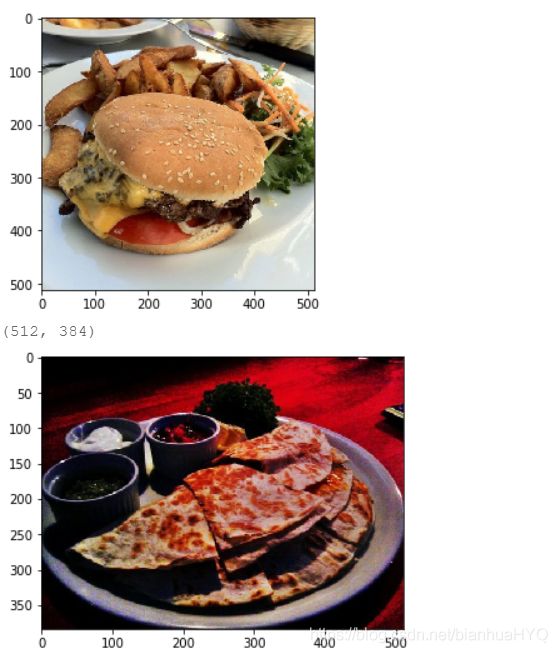

Inspecting the data

# Look at two food images from class 0
path1 = 'work/food-11/training/0_106.jpg'
path2 = 'work/food-11/training/0_170.jpg'

img1 = Image.open(path1)
print(img1.size)
plt.imshow(img1)  # draw the image
plt.show()

img2 = Image.open(path2)
print(img2.size)
plt.imshow(img2)  # draw the image
plt.show()  # display the figure

# Count the training images currently in the folder (including augmented copies)
fPath = 'work/food-11/training'
trainfood_list = os.listdir(fPath)
print(trainfood_list[0:2])
print(len(trainfood_list))

['4_315.jpg', '0_448.jpg']

15769

fPath = 'work/food-11/training'
trainfood_list = os.listdir(fPath)
for i in trainfood_list:
    if '.jpg.jpg' in i:
        os.remove(fPath+'/'+i)  # delete the augmented .jpg.jpg copies first
trainfood_list = os.listdir(fPath)
print(trainfood_list[0:2])
print(len(trainfood_list))

['4_767.jpg', '2_1205.jpg']

9866

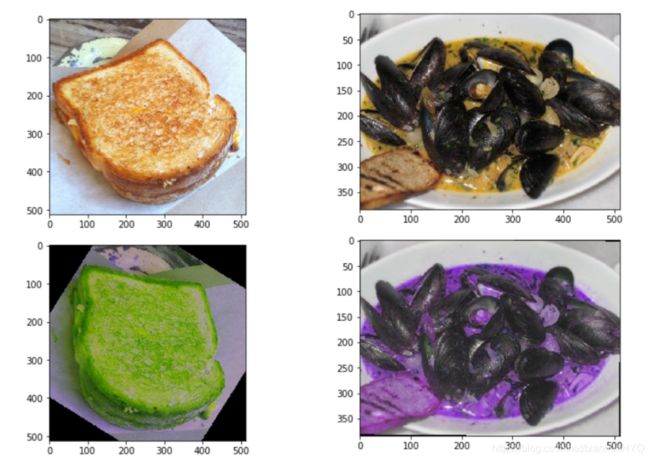

Data augmentation

Augmentation has to be reasonable; otherwise it produces unrealistic images like the ones shown below.

# Data augmentation function
def dataAugmentation(path_list):
    for each in path_list:
        if random.random() > 0.4:  # each image is augmented with probability 0.6
            img = Image.open(fPath+'/'+each)
            transform = Compose([ColorJitter(0.5, 0.07, 0.2, 0.05),  # jitter brightness, contrast, saturation and hue
                                 RandomHorizontalFlip(0.4)])
            img = transform(img)
            if random.random() > 0.5:  # rotate about half of the augmented images
                transform1 = RandomRotation(30)
                img = transform1(img)
            savePath = fPath+'/'+each+'.jpg'
            # e.g. work/food-11/training/0_106.jpg.jpg; the extra .jpg marks the augmented copy so it is easy to tell from the original
            img.save(savePath)

# First delete any augmented images left over from a previous run
trainfood_list = os.listdir(fPath)
for i in trainfood_list:
    if '.jpg.jpg' in i:
        os.remove(fPath+'/'+i)  # delete the .jpg.jpg copies
trainfood_list = os.listdir(fPath)
print(len(trainfood_list))

dataAugmentation(trainfood_list)
trainfood_list = os.listdir(fPath)
print(len(trainfood_list))  # total number of training images after augmentation

li = []
for i in trainfood_list:
    if '.jpg.jpg' in i:
        li.append(i)
print(li[0:2])
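As a rough sanity check on the threshold in `dataAugmentation`: `random.random() > 0.4` is true with probability 0.6, so about 60% of the 9,866 originals should gain an augmented copy. The sketch below compares that expectation with the folder counts printed earlier (15,769 files with augmented copies, 9,866 without). The variable names are illustrative, and the exact count changes from run to run.

```python
import math

n_original = 9866          # training images before augmentation
n_with_augmented = 15769   # folder count reported earlier, originals plus augmented copies
p_augment = 0.6            # probability that random.random() > 0.4

observed = n_with_augmented - n_original
expected = n_original * p_augment
# Standard deviation of a binomial(n, p) count
std = math.sqrt(n_original * p_augment * (1 - p_augment))

print(observed)                            # 5903
print(round(expected))                     # 5920
print(abs(observed - expected) < 2 * std)  # True
```

The observed number of augmented copies sits well within two standard deviations of the expected value, which is consistent with a 0.6 augmentation probability.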

A look at the augmented images

# Compare two originals with their augmented copies
path1 = 'work/food-11/training/4_186.jpg'
path2 = 'work/food-11/training/4_186.jpg.jpg'
path3 = 'work/food-11/training/5_306.jpg'
path4 = 'work/food-11/training/5_306.jpg.jpg'

img1 = Image.open(path1)
plt.imshow(img1)  # draw the image
plt.show()

img2 = Image.open(path2)
plt.imshow(img2)  # draw the image
plt.show()  # display the figure

img3 = Image.open(path3)
plt.imshow(img3)
plt.show()

img4 = Image.open(path4)
plt.imshow(img4)
plt.show()

Reading the data

Collecting training-set paths and labels

data_list = []  # holds [path, label] for every training sample
for each in trainfood_list:
    # the regular expression extracts the label in front of the underscore
    data_list.append([fPath+'/'+each, int(re.findall(r"(\d+)_", each)[0])])
print(len(data_list))
print(data_list[0:3])

# Reading in file order can leave samples of the same class in correlated runs,
# so random.shuffle is used to thoroughly shuffle the sample order.
random.shuffle(data_list)
print(len(data_list))
print(data_list[0:3])  # inspect the list after shuffling

data_vali_list = []  # holds [path, label] for every validation sample
vali_fPath = 'work/food-11/validation'
valifood_list = os.listdir(vali_fPath)
for each in valifood_list:
    # the regular expression extracts the label in front of the underscore
    data_vali_list.append([vali_fPath+'/'+each, int(re.findall(r"(\d+)_", each)[0])])
print(len(data_vali_list))
print(data_vali_list[0:3])

3430

[['work/food-11/validation/4_315.jpg', 4], ['work/food-11/validation/2_56.jpg', 2], ['work/food-11/validation/0_87.jpg', 0]]
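With the `[path, label]` pairs collected, a quick per-class count can reveal class imbalance before training. This is a minimal sketch that assumes the same list layout as `data_vali_list`; the three sample entries are the ones printed above, and `class_counts` is an illustrative helper name.

```python
from collections import Counter

def class_counts(samples):
    """Count how many [path, label] entries each label has."""
    return Counter(label for _path, label in samples)

sample_list = [
    ['work/food-11/validation/4_315.jpg', 4],
    ['work/food-11/validation/2_56.jpg', 2],
    ['work/food-11/validation/0_87.jpg', 0],
]
counts = class_counts(sample_list)
for label in sorted(counts):
    print(label, counts[label])
```

Passing the full `data_list` or `data_vali_list` instead of `sample_list` would give the distribution over all 11 classes.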

Custom dataset reader

# Image preprocessing function
def preprocess(img):
    img = img.resize((224, 224), Image.LANCZOS)  # LANCZOS is the current name of the former ANTIALIAS filter
    img = np.array(img).astype("float32")
    img = img.transpose((2, 0, 1))  # HWC -> CHW
    img = img / 255.0  # scale pixel values to [0, 1]
    return img

# Custom dataset reader
class Reader(Dataset):
    def __init__(self, datalist):
        super().__init__()
        self.samples = datalist

    def __getitem__(self, idx):
        # Image
        img_path = self.samples[idx][0]  # path of the sample
        img = Image.open(img_path)
        if img.mode != 'RGB':
            img = img.convert('RGB')
        img = preprocess(img)  # preprocess the image
        # Label
        label = self.samples[idx][1]  # label of the sample
        label = np.array([label], dtype="int64")  # cast the label to int64
        return img, label

    def __len__(self):
        # number of images per epoch
        return len(self.samples)

# Create the training dataset instance
train_dataset = Reader(data_list)
# Print one training sample
print(train_dataset[1136][0].shape)
print(train_dataset[1136][1])

# Create the validation dataset instance
vali_dataset = Reader(data_vali_list)
# Print one validation sample
print(vali_dataset[1136][0].shape)
print(vali_dataset[1136][1])

(3, 224, 224)

[8]

(3, 224, 224)

[3]

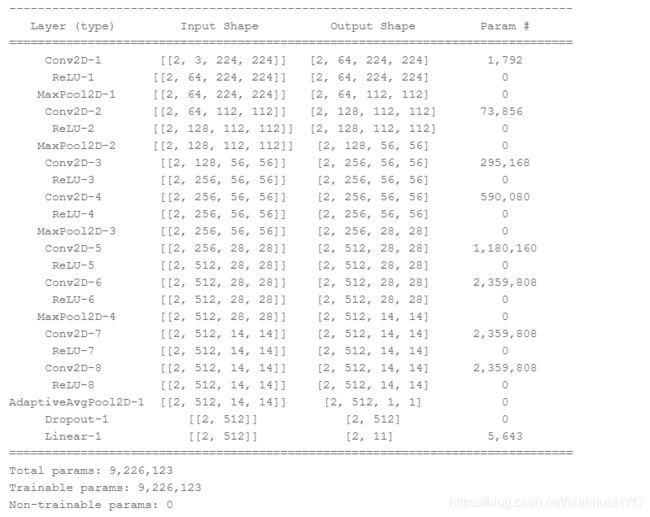

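Defining the model (VGG)

Modified VGG11

Training first used the VGG16 architecture, but validation accuracy only reached 55%. The architecture was therefore changed to VGG11, with the final MaxPool2D layer replaced by AdaptiveAvgPool2D and the three consecutive fully connected layers replaced by a single one.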
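To get a feel for how much the classifier head shrinks, the sketch below counts weights and biases for the standard three-layer VGG head (`512*7*7 -> 4096 -> 4096 -> num_classes`) and for a single `512 -> num_classes` layer placed after global average pooling. `linear_params` is an illustrative helper, not a Paddle API.

```python
def linear_params(in_features, out_features):
    """Number of weights plus biases in a fully connected layer."""
    return in_features * out_features + out_features

num_classes = 11

# Standard VGG head: three fully connected layers on a flattened 512x7x7 feature map
vgg_head = (linear_params(512 * 7 * 7, 4096)
            + linear_params(4096, 4096)
            + linear_params(4096, num_classes))

# Modified head: global average pooling leaves 512 features, followed by one layer
single_head = linear_params(512, num_classes)

print(vgg_head)     # 119590923
print(single_head)  # 5643
```

With fewer than 10,000 original training images, removing roughly 120 million head parameters plausibly reduces overfitting, although the notebook itself does not isolate which change was responsible for the improvement.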

import paddle.nn.functional as F

# Define the model
class My_vgg(paddle.nn.Layer):
    def __init__(self, num_classes):
        super().__init__()
        self.num_classes = num_classes
        net = []

        # block 1
        net.append(paddle.nn.Conv2D(3, 64, (3, 3), padding=1, stride=1))
        net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.Conv2D(64, 64, (3, 3), padding=1, stride=1))
        # net.append(paddle.nn.ReLU())
        net.append(paddle.nn.MaxPool2D(kernel_size=2, stride=2))

        # block 2
        net.append(paddle.nn.Conv2D(64, 128, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.Conv2D(128, 128, (3, 3), stride=1, padding=1))
        # net.append(paddle.nn.ReLU())
        net.append(paddle.nn.MaxPool2D(kernel_size=2, stride=2))

        # block 3
        net.append(paddle.nn.Conv2D(128, 256, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        net.append(paddle.nn.Conv2D(256, 256, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.Conv2D(256, 256, (3, 3), stride=1, padding=1))
        # net.append(paddle.nn.ReLU())
        net.append(paddle.nn.MaxPool2D(kernel_size=2, stride=2))

        # block 4
        net.append(paddle.nn.Conv2D(256, 512, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        net.append(paddle.nn.Conv2D(512, 512, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.Conv2D(512, 512, (3, 3), stride=1, padding=1))
        # net.append(paddle.nn.ReLU())
        net.append(paddle.nn.MaxPool2D(kernel_size=2, stride=2))

        # block 5
        net.append(paddle.nn.Conv2D(512, 512, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        net.append(paddle.nn.Conv2D(512, 512, (3, 3), stride=1, padding=1))
        net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.Conv2D(512, 512, (3, 3), stride=1, padding=1))
        # net.append(paddle.nn.ReLU())
        # net.append(paddle.nn.MaxPool2D(kernel_size=2, stride=2))
        net.append(paddle.nn.AdaptiveAvgPool2D(output_size=1))

        # Assemble the feature extractor.
        # Sequential container: sub-layers are added in the order they are passed to the constructor.
        self.conv = paddle.nn.Sequential(*net)

        classifier = []
        # classifier.append(paddle.nn.Linear(512*7*7, 4096))
        # classifier.append(paddle.nn.ReLU())
        # classifier.append(paddle.nn.Dropout(p=0.5))
        # classifier.append(paddle.nn.Linear(4096, 4096))
        # classifier.append(paddle.nn.ReLU())
        # classifier.append(paddle.nn.Dropout(p=0.5))
        # classifier.append(paddle.nn.Linear(4096, self.num_classes))
        classifier.append(paddle.nn.Dropout(p=0.5))
        classifier.append(paddle.nn.Linear(512, self.num_classes))

        # Assemble the classifier.
        # Sequential container: sub-layers are added in the order they are passed to the constructor.
        self.classifier = paddle.nn.Sequential(*classifier)

    def forward(self, x):
        features = self.conv(x)
        features = paddle.reshape(features, [features.shape[0], -1])  # flatten to [batch, 512]
        classify_result = self.classifier(features)
        return classify_result

network = My_vgg(11)  # instantiate the model
paddle.summary(network, (2, 3, 224, 224))

# Define the inputs
input_define = paddle.static.InputSpec(shape=[-1, 3, 224, 224], dtype="float32", name="img")
label_define = paddle.static.InputSpec(shape=[-1, 1], dtype="int64", name="label")

Training

Accuracy on the training set rose to about 71%.

# Instantiate the network and set up the optimizer and the rest of the training logic
model = My_vgg(num_classes=11)
model = paddle.Model(model, inputs=input_define, labels=label_define)  # wrap the network with paddle.Model
optimizer = paddle.optimizer.AdamW(learning_rate=0.0001, parameters=model.parameters(), weight_decay=0.01)
model.prepare(optimizer=optimizer,  # optimizer
              loss=paddle.nn.CrossEntropyLoss(),  # loss function
              metrics=paddle.metric.Accuracy())  # evaluation metric
model.fit(train_data=train_dataset,  # training dataset
          eval_data=vali_dataset,  # validation dataset
          batch_size=128,  # samples per batch
          epochs=50,  # number of epochs
          save_dir="/home/aistudio/pp",  # folder for the saved model and optimizer parameters
          save_freq=20,  # save model and optimizer parameters every this many epochs
          # log_freq=100,  # logging frequency
          verbose=1,  # log display mode
          shuffle=True  # whether to shuffle the dataset
          )
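When reading the training log, it helps to know what loss an untrained 11-class classifier should start from. The sketch below is a plain-Python softmax cross-entropy for a single sample, assuming the loss is applied to raw logits; `cross_entropy` is an illustrative function, not the Paddle implementation.

```python
import math

def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample: -log(softmax(logits)[label])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label]

num_classes = 11
uniform_logits = [0.0] * num_classes

# With no information, every class gets probability 1/11, so the loss is ln(11)
loss = cross_entropy(uniform_logits, label=3)
print(round(loss, 4))  # 2.3979
```

A first-epoch loss far above roughly 2.4 would suggest a problem with the labels or the learning rate rather than ordinary early training.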

Retraining on all data

Starting from the last saved parameters, training continues on all of the labeled data (training plus validation).

random.shuffle(data_vali_list)
data_list.extend(data_vali_list)

# Create the training dataset instance
train_dataset2 = Reader(data_list)
# Print one training sample
print(train_dataset2[1136][0].shape)
print(train_dataset2[1136][1])

(3, 224, 224)

[9]

Load the model and train again, for only 10 epochs

model = paddle.Model(My_vgg(num_classes=11), inputs=input_define, labels=label_define)
model.load('pp/final')  # load the saved parameters
optimizer = paddle.optimizer.AdamW(learning_rate=0.0001, parameters=model.parameters(), weight_decay=0.01)
model.prepare(optimizer=optimizer,  # optimizer
              loss=paddle.nn.CrossEntropyLoss(),  # loss function
              metrics=paddle.metric.Accuracy())  # evaluation metric
model.fit(train_data=train_dataset2,  # training dataset
          batch_size=128,  # samples per batch
          epochs=10,  # number of epochs
          save_dir="/home/aistudio/pp",  # folder for the saved model and optimizer parameters
          save_freq=10,  # save model and optimizer parameters every this many epochs
          # log_freq=100,  # logging frequency
          verbose=1,  # log display mode
          shuffle=True  # whether to shuffle the dataset
          )

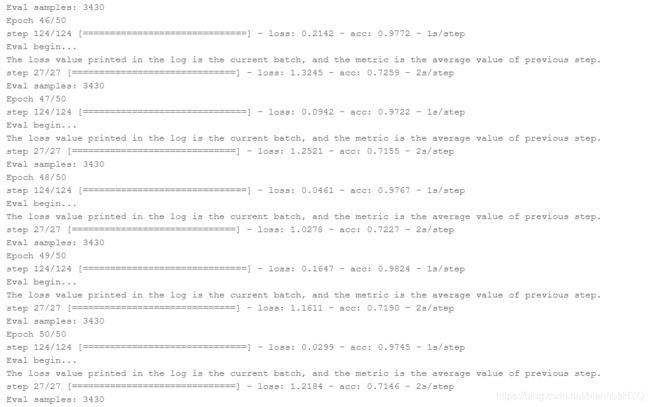

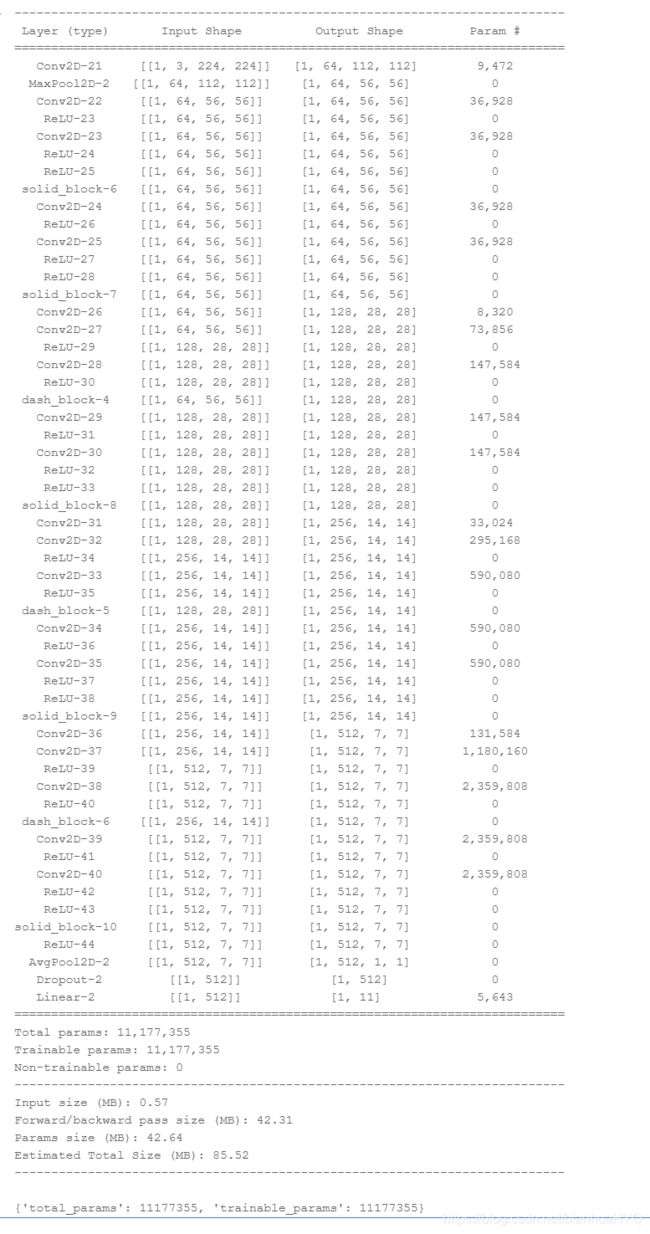

Residual network

With average pooling and dropout, validation accuracy only reached 60%.

class solid_block(paddle.nn.Layer):
    # Residual block with an identity shortcut (drawn as a solid line)
    def __init__(self, in_channels):
        super(solid_block, self).__init__()
        self.conv1 = paddle.nn.Conv2D(in_channels, in_channels, kernel_size=3, stride=1, padding=1)
        self.relu1 = paddle.nn.ReLU()
        self.conv2 = paddle.nn.Conv2D(in_channels, in_channels, kernel_size=3, stride=1, padding=1)
        self.relu2 = paddle.nn.ReLU()
        self.relu3 = paddle.nn.ReLU()

    def forward(self, x):
        y = self.conv1(x)
        y = self.relu1(y)  # apply ReLU to the conv1 output, not to x, so conv1 is actually used
        y = self.conv2(y)
        y = self.relu2(y)
        return self.relu3(x+y)

class dash_block(paddle.nn.Layer):
    # Residual block with a projection shortcut (drawn as a dashed line):
    # a 1x1 stride-2 convolution matches the shortcut to the new shape
    def __init__(self, in_channels, out_channels):
        super(dash_block, self).__init__()
        self.conv1 = paddle.nn.Conv2D(in_channels, out_channels, kernel_size=1, stride=2)
        self.conv2 = paddle.nn.Conv2D(in_channels, out_channels, kernel_size=3, stride=2, padding=1)
        self.relu1 = paddle.nn.ReLU()
        self.conv3 = paddle.nn.Conv2D(out_channels, out_channels, kernel_size=3, stride=1, padding=1)
        self.relu2 = paddle.nn.ReLU()

    def forward(self, x):
        z = self.conv1(x)  # shortcut branch
        y = self.conv2(x)  # main branch
        y = self.relu1(y)
        y = self.conv3(y)
        return self.relu2(y+z)

class my_resnst(paddle.nn.Layer):
    def __init__(self, num_classes=11):
        super(my_resnst, self).__init__()
        self.conv1 = paddle.nn.Conv2D(3, 64, kernel_size=7, stride=2, padding=3)
        self.maxp1 = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1)
        self.resnet1 = solid_block(64)
        self.resnet2 = solid_block(64)
        self.resnet3 = dash_block(64, 128)
        self.resnet4 = solid_block(128)
        self.resnet5 = dash_block(128, 256)
        self.resnet6 = solid_block(256)
        self.resnet7 = dash_block(256, 512)
        self.resnet8 = solid_block(512)
        self.relu = paddle.nn.ReLU()
        self.avgp1 = paddle.nn.AvgPool2D(7)  # average pooling
        self.dropout = paddle.nn.Dropout(p=0.5)
        self.fullc = paddle.nn.Linear(512, num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxp1(x)
        x = self.resnet1(x)
        x = self.resnet2(x)
        x = self.resnet3(x)
        x = self.resnet4(x)
        x = self.resnet5(x)
        x = self.resnet6(x)
        x = self.resnet7(x)
        x = self.resnet8(x)
        x = self.relu(x)
        x = self.avgp1(x)
        x = paddle.reshape(x, [x.shape[0], -1])  # flatten to [batch, 512]
        x = self.dropout(x)
        x = self.fullc(x)
        return x

network = my_resnst()  # instantiate the model
paddle.summary(network, (1, 3, 224, 224))
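`AvgPool2D(7)` only reduces the feature map to 1x1 if the map is 7x7 at that point, which ties this network to 224x224 inputs. The sketch below traces the spatial size through the layers above using the usual formula floor((n + 2p - k) / s) + 1. `conv_out` is an illustrative helper, and the layer list is transcribed by hand from `my_resnst`; the solid blocks are omitted because their 3x3, stride-1, padding-1 convolutions keep the size unchanged.

```python
def conv_out(n, kernel, stride, padding):
    """Output size of a convolution or pooling layer along one spatial dimension."""
    return (n + 2 * padding - kernel) // stride + 1

# (name, kernel, stride, padding) for every layer that changes the spatial size
layers = [
    ("conv1 7x7", 7, 2, 3),
    ("maxp1 3x3", 3, 2, 1),
    ("resnet3 (dash_block)", 3, 2, 1),
    ("resnet5 (dash_block)", 3, 2, 1),
    ("resnet7 (dash_block)", 3, 2, 1),
    ("avgp1 7x7", 7, 7, 0),
]

size = 224
sizes = []
for name, kernel, stride, padding in layers:
    size = conv_out(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name}: {size}x{size}")
```

The trace goes 112, 56, 28, 14, 7, 1, so the flattened output has exactly 512 features for the final `Linear(512, num_classes)`. The 1x1 stride-2 shortcut in each dash block yields the same size as its main branch, for example `conv_out(56, 1, 2, 0)` is 28.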

# Instantiate the network and set up the optimizer and the rest of the training logic
model = my_resnst()
model = paddle.Model(model, inputs=input_define, labels=label_define)  # wrap the network with paddle.Model
optimizer = paddle.optimizer.Adam(learning_rate=0.000125, parameters=model.parameters())
model.prepare(optimizer=optimizer,  # optimizer
              loss=paddle.nn.CrossEntropyLoss(),  # loss function
              metrics=paddle.metric.Accuracy())  # evaluation metric
model.fit(train_data=train_dataset,  # training dataset
          eval_data=vali_dataset,  # validation dataset
          batch_size=128,  # samples per batch
          epochs=50,  # number of epochs
          save_dir="/home/aistudio/respp",  # folder for the saved model and optimizer parameters
          save_freq=50,  # save model and optimizer parameters every this many epochs
          # log_freq=100,  # logging frequency
          verbose=1,  # log display mode
          shuffle=True  # whether to shuffle the dataset
          )

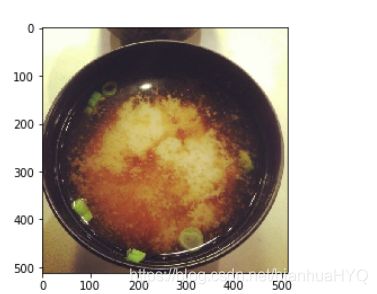

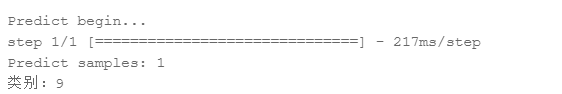

Prediction

Defining the test dataset reader

Inference with the trained VGG model

class TestDataset(Dataset):
    def __init__(self, img_path=None):
        super().__init__()
        if img_path:
            self.img_paths = [img_path]
        else:
            raise Exception("Please specify the path of the image to predict")

    def __getitem__(self, index):
        # Get the image path
        img_path = self.img_paths[index]
        img = Image.open(img_path)
        if img.mode != 'RGB':
            img = img.convert('RGB')
        img = preprocess(img)  # basic preprocessing only; no data augmentation is applied here
        return img

    def __len__(self):
        return len(self.img_paths)

model = paddle.Model(My_vgg(num_classes=11), inputs=input_define)
# Load the parameters that were just trained
model.load('pp/final')
# Prepare the model
model.prepare()

# Collect the path of every image in the test set
test_list = []
test_fPath = 'work/food-11/testing'
testfood_list = os.listdir(test_fPath)
for each in testfood_list:
    test_list.append(test_fPath+'/'+each)
print(len(test_list))
print(test_list[0:3])

3347

['work/food-11/testing/2760.jpg', 'work/food-11/testing/3215.jpg', 'work/food-11/testing/3011.jpg']

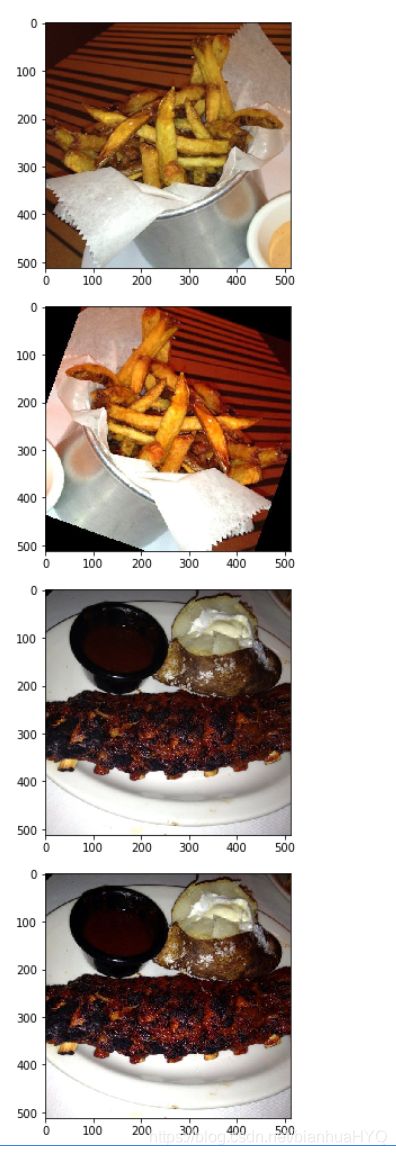

# Look at one test image
p = 'work/food-11/testing/3346.jpg'
img = Image.open(p)
plt.imshow(img)  # draw the image
plt.show()

testpre = TestDataset(p)
result = model.predict(test_data=testpre)[0]  # predict
result = paddle.to_tensor(result)
result = np.argmax(result.numpy())  # index of the largest score
print("Class: "+str(result))
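The printed index can be mapped back to a readable category with the class list from the dataset description. This is a minimal sketch, assuming the model output for one image is a sequence of 11 raw scores ordered by label and that labels 0 to 10 follow the order in which the categories are listed. `CLASS_NAMES` and `predict_class` are illustrative names, and the scores are made up.

```python
CLASS_NAMES = [
    "Bread", "Dairy product", "Dessert", "Egg", "Fried food", "Meat",
    "Noodles/Pasta", "Rice", "Seafood", "Soup", "Vegetable/Fruit",
]

def predict_class(scores):
    """Return (index, name) of the highest-scoring class."""
    index = max(range(len(scores)), key=lambda i: scores[i])
    return index, CLASS_NAMES[index]

# Made-up scores for one image; the largest value sits at index 9
example_scores = [0.1, -1.2, 0.3, 0.0, 0.5, -0.4, 0.2, -0.9, 1.1, 2.7, 0.6]
index, name = predict_class(example_scores)
print(index, name)  # 9 Soup
```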

Predicting the whole test set

Only the image file name, not the full path, is saved with each prediction.

# Run the trained model over every test image
results = []
for each in test_list:
    test2pre = TestDataset(each)
    result = model.predict(test_data=test2pre)[0]  # predict
    result = paddle.to_tensor(result)
    result = np.argmax(result.numpy())  # index of the largest score
    results.append([os.path.basename(each), result])  # store the file name and predicted label, e.g. [3364.jpg, 9]

# Save the results
with open("result.txt", "w") as f:
    for r in results:
        f.write("{}\n".format(r))
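Formatting each `[file name, label]` list directly writes lines that look like Python list literals, which are awkward to parse later. If a plain CSV is preferred, a sketch along these lines could be used instead. The `filename,label` header and the `result.csv` name are assumptions, not a format required by the homework, and `write_predictions` is an illustrative helper.

```python
import csv
import io

def write_predictions(rows, stream):
    """Write (file name, label) pairs as CSV with a header row."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["filename", "label"])
    for filename, label in rows:
        writer.writerow([filename, int(label)])  # int() also accepts NumPy integer labels

# Made-up predictions in the same [file name, label] layout as `results`
example_results = [["0.jpg", 9], ["1.jpg", 4]]

buffer = io.StringIO()  # stands in for open("result.csv", "w", newline="")
write_predictions(example_results, buffer)
print(buffer.getvalue())
```

Writing to a stream rather than a fixed path keeps the helper easy to test; in the notebook the same function could be called with a real file handle and the `results` list.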