使用Pytorch搭建CNN模型完成食物图片分类(李宏毅视频课2020作业3,附超详细代码讲解)

文章目录

- 0 前言

- 1 任务描述

-

- 1.1 数据描述

- 1.2 作业提交

- 1.3 数据下载

-

- 1.3.1 完整数据集

- 1.3.2 部分数据集

- 2 过程讲解

-

- 2.1 读取数据

- 2.2 数据预处理

- 2.3 模型搭建

- 2.4 模型训练

-

- 2.4.1 Training

- 2.4.2 Validating

- 2.5 模型测试

- 2.6 运行结果

- 3 完整代码

-

- 3.1 我的版本

- 3.2 原始版本(需要GPU且安装cuda)

0 前言

本文利用深度学习框架-Pytorch复现了李宏毅机器学习2020年作业3例程,我会及时回复评论区的问题,如果觉得本文有帮助欢迎点赞 。

1 任务描述

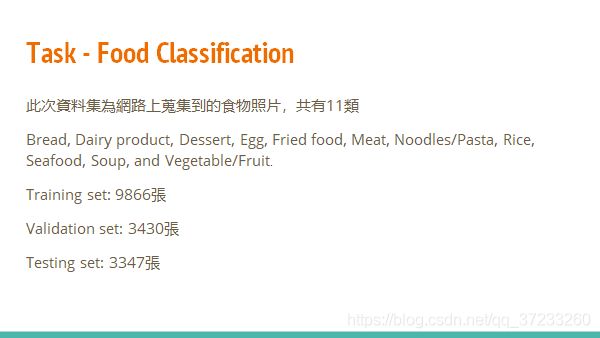

收集来的资料中均是食物的照片,共有11类,搭建CNN模型完成Food Classification。

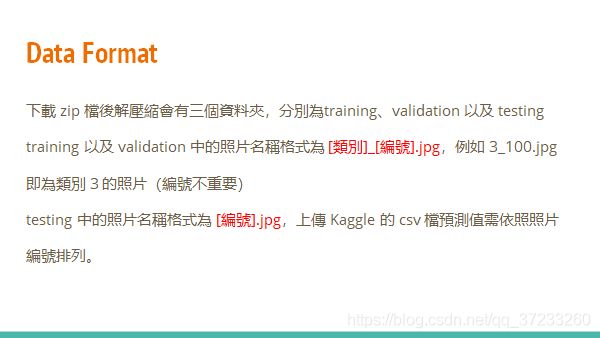

1.1 数据描述

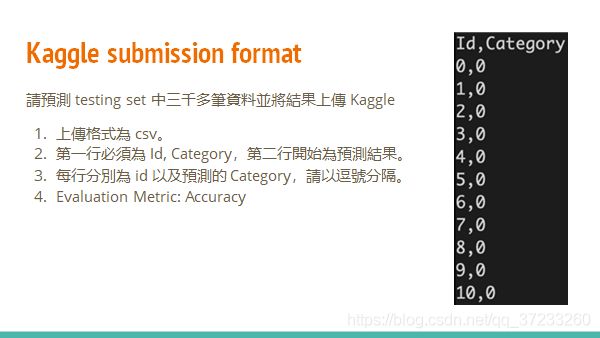

1.2 作业提交

1.3 数据下载

1.3.1 完整数据集

在kaggle竞赛网站上可以下载完整数据集:kaggle链接。界面如下,在这里提交作业和下载数据集(点击“Download All”即可):

1.3.2 部分数据集

由于完整数据集较大,笔者在代码测试时使用了一个微型数据集,已经上传到CSDN中Sub_data.zip,无需积分即可下载。

2 过程讲解

原始代码(后文附上)使用了GPU加速,本文整为使用CPU运算,可以直接运行。

2.1 读取数据

import os

import time

import cv2

import numpy as np

import torch

from torch import nn

from torch.utils.data import DataLoader,Dataset

from torch.optim import Adam

import torchvision.transforms as transforms

from torch.autograd import Variable

# 数据是图片格式,要自己写一个read函数

def readfile(path, label):

# label 是一个布尔变量,代表是否需要回传y,对于测试集是不需要的

# os.listdir() 方法用于返回指定的文件夹包含的文件或文件夹的名字的列表。

image_dir = sorted(os.listdir((path))) # 按类别排序

x = np.zeros((len(image_dir), 128, 128, 3), dtype=np.uint8) # 数量,长,宽,深度(彩图为3通道)

# print(x)

y = np.zeros((len(image_dir)), dtype=np.uint8) # label

for i, file in enumerate(image_dir):

# cv2.imread()在不加第二个参数的情况下默认将图片转换成了一个三维数组,最里面的一维代表的是一个像素的三个通道的灰度值,第二个维度代表的是第一行所有像素的灰度值,第三个维度,也就是最外面的一个维度代表的是这一张图片。

img = cv2.imread(os.path.join(path, file)) # 读取图片

x[i, :, :, :] = cv2.resize(img, (128,128)) # 将图片进行缩放,压缩为128*128*3

if label:

y[i] = int(file.split("_")[0]) # 数据前面是类别,后面是编号

if label:

return x, y

else:

return x

# 建立总的路径

# 数据要放在同一个文件夹下

workspace_dir = './food-11'

print("Reading data--------->")

# print(os.path.join(workspace_dir, "training"))

train_x, train_y = readfile(os.path.join(workspace_dir, "training1"), True)

# print(train_y)

print("Size of training data = {}".format(len(train_x)))

val_x, val_y = readfile(os.path.join(workspace_dir, "validation1"), True)

print(f'Size of validation data = {len(val_x)}')

test_x = readfile(os.path.join(workspace_dir, "testing1"), False)

print("Size of testing data = {}".format(len(test_x)))

2.2 数据预处理

-

在 Pytorch 中,我们可以利用 torch.utils.data 的 Dataset 及 DataLoader 來"包装" data,使得后续的 training 及 testing 更为方便。Dataset 需要 overload 两个函数:__len__ 及 __getitem__。__len__ 必须要回传 dataset 的大小,而 __getitem__ 则定义了当程序利用 [ ] 取值时,dataset 应该怎么回传资料。实际上我们并不会直接用到这两个函数,但是使用 DataLoader 在 enumerate Dataset 时会用到,没有做的话会出现 error。

-

pytorch中dataloader的大小将根据batch_size的大小自动调整。如果训练数据集有1000个样本,并且batch_size的大小为10,则dataloader的长度就是100。

## Dateset

# training 时做 data augmentation

# torchvision.transforms是pytorch中的图像预处理包。一般用Compose把多个步骤整合到一起

train_transform = transforms.Compose([

transforms.ToPILImage(), # 将tensor转成PIL的格式

transforms.RandomHorizontalFlip(), # 随机将图片水平翻转

transforms.RandomRotation(15), # 随机旋转图片

transforms.ToTensor() # 将图片转成 Tensor,并把数值normalization到[0,1]

])

# testing 时不需要做 data augmentation

test_transform = transforms.Compose([

transforms.ToPILImage(),

transforms.ToTensor(),

])

# 写一个将数据处理成DataLoader的类

class ImgDataset(Dataset):

def __init__(self, x, y=None, transform=None):

self.x = x

# label is required to be a LongTensor

self.y = y

if y is not None:

self.y = torch.LongTensor(y)

self.transform = transform

# 当我们集成了一个 Dataset类之后,我们需要重写 len 方法,该方法提供了dataset的大小;

# getitem 方法, 该方法支持从 0 到 len(self)的索引

def __len__(self):

return len(self.x)

def __getitem__(self, index):

X = self.x[index]

if self.transform is not None:

X = self.transform(X)

if self.y is not None:

Y = self.y[index]

return X, Y

else:

return X

batch_size = 4

train_set = ImgDataset(train_x, train_y, train_transform) # 将数据包装成Dataset类

val_set = ImgDataset(val_x, val_y, test_transform)

train_loader = DataLoader(train_set , batch_size= batch_size, shuffle=True) # 将数据处理成DataLoader

val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)

2.3 模型搭建

- super() 函数是用于调用父类(超类)的一个方法。super() 是用来解决多重继承问题的,直接用类名调用父类方法在使用单继承的时候没问题,但是如果使用多继承,会涉及到查找顺序(MRO)、重复调用(钻石继承)等种种问题。

- 构建网络需要继承nn.Module,并且调用nn.Module的构造函数。

## Model

class Classifier(nn.Module):

def __init__(self):

super(Classifier, self).__init__()

# torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)(输出通道就是指卷积核用了多少个)

# torch.nn.MaxPool2d(kernel_size, stride, padding)

# input 维度 [3, 128, 128]

self.cnn = nn.Sequential(

nn.Conv2d(3, 64, 3, 1, 1), # 卷积

nn.BatchNorm2d(64), # 归一化

nn.ReLU(), # 激活函数

nn.MaxPool2d(2,2,0), # 池化

nn.Conv2d(64, 128, 3, 1, 1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(128, 256, 3, 1, 1),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(256, 512, 3, 1, 1),

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(512, 512, 3, 1, 1),

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

)

# 定义全连接神经网络

self.fc = nn.Sequential(

nn.Linear(512* 4* 4, 1024),

nn.ReLU(),

nn.Linear(1024, 512),

nn.ReLU(),

nn.Linear(512,11)

)

def forward(self, x):

out = self.cnn(x)

out = out.view(out.size()[0], -1)

return self.fc(out)

2.4 模型训练

- model.train() 确保 model 是在 train model (开启 Dropout 等)。

- model.eval() 不启用 BatchNormalization 和 Dropout,保证BN和dropout不发生变化,测试阶段往往是单个图像的输入,不存在mini-batch的概念。所以将model改为eval模式后,BN的参数固定,并采用之前训练好的全局的mean和std。

2.4.1 Training

## Training

# model to cpu

model = Classifier() # 有显卡的可以使用cuda()

loss = nn.CrossEntropyLoss() # 因为是 classification task,所以 loss 使用 CrossEntropyLoss

optimizer = Adam(model.parameters(), lr=0.001) # optimizer 使用 Adam

num_epoch = 5

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

val_acc = 0.0

val_loss = 0.0

model.train() # 确保 model 是在 train model (开启 Dropout 等)

for i, data in enumerate(train_loader):

optimizer.zero_grad() # 用 optimizer 将 model 参数的 gradient 归零,准备下一次的更新

train_pred = model(data[0])

batch_loss = loss(train_pred, data[1])

batch_loss.backward() # 利用 back propagation 算出每个参数的 gradient

optimizer.step() # optimizer 用 gradient 更新参数值, 所以step()要放在后面

# 将数据从 train_loader 中读出来

inputs, labels = data

# 将这些数据转换成Variable类型

inputs, labels = Variable(inputs), Variable(labels)

# 打印

print("epoch=", epoch, "的第", i, "个inputs", inputs.data.size(), "labels", labels.data.size())

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1)==data[1].numpy())

train_loss += batch_loss.item()

model.eval()

with torch.no_grad():

for i, data in enumerate(val_loader):

val_pred = model(data[0])

batch_loss = loss(val_pred, data[1])

val_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())

val_loss += batch_loss.item()

# 打印结果

print('[%03d/%d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' % \

(epoch+1, num_epoch, time.time()-epoch_start_time, \

train_acc/train_set.__len__(), train_loss/train_set.__len__(), val_acc/val_set.__len__(), val_loss/ val_set.__len__())

)

2.4.2 Validating

# 得到好的参数后,我们使用Training Set和Validation Set一起训练(资料越多,效果越好)

train_val_x = np.concatenate((train_x, val_x), axis=0) # 按轴axis连接array组成一个新的array

train_val_y = np.concatenate((train_y, val_y), axis=0)

train_val_set = ImgDataset(train_val_x, train_val_y, train_transform)

train_val_loader = DataLoader(train_val_set, batch_size=batch_size, shuffle=True)

model_best = Classifier()

loss = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model_best.parameters(), lr=0.001 )

num_epoch = 5

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

model_best.train()

for i, data in enumerate(train_val_loader):

optimizer.zero_grad()

train_pred = model_best(data[0])

batch_loss = loss(train_pred, data[1])

batch_loss.backward()

optimizer.step()

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy()) # 取出train_pred中元素最大值所对应的索引(axis=1为横轴方向),转为numpy数组

train_loss += batch_loss.item()

print(f'train_pred={train_pred}')

print(f'train_pred(np)={train_pred.data.numpy()}')

print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f' % \

(epoch + 1, num_epoch, time.time()-epoch_start_time, \

train_acc/train_val_set.__len__(), train_loss/train_val_set.__len__()))

2.5 模型测试

# Testing

# 利用刚刚训练好的模型进行预测

test_set = ImgDataset(test_x, transform = test_transform)

test_loader = DataLoader(test_set, batch_size = batch_size, shuffle=False)

model_best.eval()

prediction = []

with torch.no_grad():

for i, data in enumerate(test_loader):

test_pred = model_best(data)

test_label = np.argmax(test_pred.cpu().data.numpy(), axis=1)

for y in test_label:

prediction.append(y)

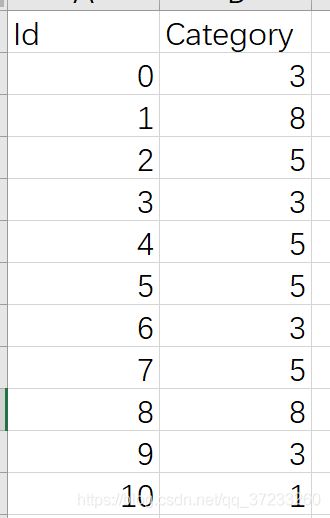

# 将结果写入 csv 文件

with open("predict.csv", 'w') as f:

f.write("Id,Category\n")

for i,y in enumerate(prediction):

f.write('{},{}\n'.format(i, y))

2.6 运行结果

3 完整代码

3.1 我的版本

import os

import time

import cv2

import numpy as np

import torch

from torch import nn

from torch.utils.data import DataLoader,Dataset

from torch.optim import Adam

import torchvision.transforms as transforms

from torch.autograd import Variable

# 定义超参数

# 数据是图片格式,要自己写一个read函数

def readfile(path, label):

# label 是一个布尔变量,代表是否需要回传y,对于测试集是不需要的

# os.listdir() 方法用于返回指定的文件夹包含的文件或文件夹的名字的列表。

image_dir = sorted(os.listdir((path))) # 按类别排序

x = np.zeros((len(image_dir), 128, 128, 3), dtype=np.uint8) # 数量,长,宽,深度(彩图为3通道)

# print(x)

y = np.zeros((len(image_dir)), dtype=np.uint8) # label

for i, file in enumerate(image_dir):

# cv2.imread()在不加第二个参数的情况下默认将图片转换成了一个三维数组,最里面的一维代表的是一个像素的三个通道的灰度值,第二个维度代表的是第一行所有像素的灰度值,第三个维度,也就是最外面的一个维度代表的是这一张图片。

img = cv2.imread(os.path.join(path, file)) # 读取图片

x[i, :, :, :] = cv2.resize(img, (128,128)) # 将图片进行缩放,压缩为128*128*3

if label:

y[i] = int(file.split("_")[0]) # 数据前面是类别,后面是编号

if label:

return x, y

else:

return x

# 建立总的路径

workspace_dir = './food-11'

print("Reading data--------->")

# print(os.path.join(workspace_dir, "training"))

train_x, train_y = readfile(os.path.join(workspace_dir, "training1"), True)

# print(train_y)

print("Size of training data = {}".format(len(train_x)))

val_x, val_y = readfile(os.path.join(workspace_dir, "validation1"), True)

print(f'Size of validation data = {len(val_x)}')

test_x = readfile(os.path.join(workspace_dir, "testing1"), False)

print("Size of testing data = {}".format(len(test_x)))

## Dateset

# training 时做 data augmentation

# torchvision.transforms是pytorch中的图像预处理包。一般用Compose把多个步骤整合到一起

train_transform = transforms.Compose([

transforms.ToPILImage(), # 将tensor转成PIL的格式

transforms.RandomHorizontalFlip(), # 随机将图片水平翻转

transforms.RandomRotation(15), # 随机旋转图片

transforms.ToTensor() # 将图片转成 Tensor,并把数值normalization到[0,1]

])

# testing 时不需要做 data augmentation

test_transform = transforms.Compose([

transforms.ToPILImage(),

transforms.ToTensor(),

])

# 写一个将数据处理成DataLoader的类

class ImgDataset(Dataset):

def __init__(self, x, y=None, transform=None):

self.x = x

# label is required to be a LongTensor

self.y = y

if y is not None:

self.y = torch.LongTensor(y)

self.transform = transform

# 当我们集成了一个 Dataset类之后,我们需要重写 len 方法,该方法提供了dataset的大小;

# getitem 方法, 该方法支持从 0 到 len(self)的索引

def __len__(self):

return len(self.x)

def __getitem__(self, index):

X = self.x[index]

if self.transform is not None:

X = self.transform(X)

if self.y is not None:

Y = self.y[index]

return X, Y

else:

return X

batch_size = 4 # pytorch中dataloader的大小将根据batch_size的大小自动调整。如果训练数据集有1000个样本,并且batch_size的大小为10,则dataloader的长度就是100。

train_set = ImgDataset(train_x, train_y, train_transform) # 将数据包装成Dataset类

val_set = ImgDataset(val_x, val_y, test_transform)

train_loader = DataLoader(train_set , batch_size= batch_size, shuffle=True) # 将数据处理成DataLoader

val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)

## Model

class Classifier(nn.Module):

def __init__(self):

# super() 函数是用于调用父类(超类)的一个方法。

# super() 是用来解决多重继承问题的,直接用类名调用父类方法在使用单继承的时候没问题,但是如果使用多继承,会涉及到查找顺序(MRO)、重复调用(钻石继承)等种种问题。

super(Classifier, self).__init__()

# torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)(输出通道就是指卷积核用了多少个)

# torch.nn.MaxPool2d(kernel_size, stride, padding)

# input 维度 [3, 128, 128]

self.cnn = nn.Sequential(

nn.Conv2d(3, 64, 3, 1, 1), # 卷积

nn.BatchNorm2d(64), # 归一化

nn.ReLU(), # 激活函数

nn.MaxPool2d(2,2,0), # 池化

nn.Conv2d(64, 128, 3, 1, 1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(128, 256, 3, 1, 1),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(256, 512, 3, 1, 1),

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

nn.Conv2d(512, 512, 3, 1, 1),

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0),

)

# 定义全连接神经网络

self.fc = nn.Sequential(

nn.Linear(512* 4* 4, 1024),

nn.ReLU(),

nn.Linear(1024, 512),

nn.ReLU(),

nn.Linear(512,11)

)

def forward(self, x):

out = self.cnn(x)

out = out.view(out.size()[0], -1)

return self.fc(out)

## Training

# model to cpu

model = Classifier() # 有显卡的可以使用cuda()

loss = nn.CrossEntropyLoss() # 因为是 classification task,所以 loss 使用 CrossEntropyLoss

optimizer = Adam(model.parameters(), lr=0.001) # optimizer 使用 Adam

num_epoch = 5

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

val_acc = 0.0

val_loss = 0.0

model.train() # 确保 model 是在 train model (开启 Dropout 等)

for i, data in enumerate(train_loader):

optimizer.zero_grad() # 用 optimizer 将 model 参数的 gradient 归零,准备下一次的更新

train_pred = model(data[0])

batch_loss = loss(train_pred, data[1])

batch_loss.backward() # 利用 back propagation 算出每个参数的 gradient

optimizer.step() # optimizer 用 gradient 更新参数值, 所以step()要放在后面

# 将数据从 train_loader 中读出来

inputs, labels = data

# 将这些数据转换成Variable类型

inputs, labels = Variable(inputs), Variable(labels)

# 接下来就是跑模型的环节了,我们这里使用print来代替

print("epoch=", epoch, "的第", i, "个inputs", inputs.data.size(), "labels", labels.data.size())

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1)==data[1].numpy())

train_loss += batch_loss.item()

model.eval() # 不启用 BatchNormalization 和 Dropout,保证BN和dropout不发生变化,测试阶段往往是单个图像的输入,不存在mini-batch的概念。所以将model改为eval模式后,BN的参数固定,并采用之前训练好的全局的mean和std;

with torch.no_grad():

for i, data in enumerate(val_loader):

val_pred = model(data[0])

batch_loss = loss(val_pred, data[1])

val_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())

val_loss += batch_loss.item()

# 打印结果

print('[%03d/%d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' % \

(epoch+1, num_epoch, time.time()-epoch_start_time, \

train_acc/train_set.__len__(), train_loss/train_set.__len__(), val_acc/val_set.__len__(), val_loss/ val_set.__len__())

)

# 得到好的参数后,我们使用Training Set和Validation Set一起训练(资料越多,效果越好)

train_val_x = np.concatenate((train_x, val_x), axis=0) # 按轴axis连接array组成一个新的array

train_val_y = np.concatenate((train_y, val_y), axis=0)

train_val_set = ImgDataset(train_val_x, train_val_y, train_transform)

train_val_loader = DataLoader(train_val_set, batch_size=batch_size, shuffle=True)

model_best = Classifier()

loss = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model_best.parameters(), lr=0.001 )

num_epoch = 5

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

model_best.train()

for i, data in enumerate(train_val_loader):

optimizer.zero_grad()

train_pred = model_best(data[0])

batch_loss = loss(train_pred, data[1])

batch_loss.backward()

optimizer.step()

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy()) # 取出train_pred中元素最大值所对应的索引(axis=1为横轴方向),转为numpy数组

train_loss += batch_loss.item()

# print(f'train_pred={train_pred}')

# print(f'train_pred(np)={train_pred.data.numpy()}')

print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f' % \

(epoch + 1, num_epoch, time.time()-epoch_start_time, \

train_acc/train_val_set.__len__(), train_loss/train_val_set.__len__()))

# Testing

# 利用刚刚训练好的模型进行预测

test_set = ImgDataset(test_x, transform = test_transform)

test_loader = DataLoader(test_set, batch_size = batch_size, shuffle=False)

model_best.eval()

prediction = []

with torch.no_grad():

for i, data in enumerate(test_loader):

test_pred = model_best(data)

test_label = np.argmax(test_pred.cpu().data.numpy(), axis=1)

for y in test_label:

prediction.append(y)

# 将结果写入 csv 文件

with open("predict.csv", 'w') as f:

f.write("Id,Category\n")

for i,y in enumerate(prediction):

f.write('{},{}\n'.format(i, y))

3.2 原始版本(需要GPU且安装cuda)

# Import需要的套件

import os

import numpy as np

import cv2

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import pandas as pd

from torch.utils.data import DataLoader, Dataset

import time

#Read image 利用 OpenCV (cv2) 讀入照片並存放在 numpy array 中

def readfile(path, label):

# label 是一個 boolean variable,代表需不需要回傳 y 值

image_dir = sorted(os.listdir(path))

x = np.zeros((len(image_dir), 128, 128, 3), dtype=np.uint8)

y = np.zeros((len(image_dir)), dtype=np.uint8)

for i, file in enumerate(image_dir):

img = cv2.imread(os.path.join(path, file))

x[i, :, :] = cv2.resize(img,(128, 128))

if label:

y[i] = int(file.split("_")[0])

if label:

return x, y

else:

return x

# 分別將 training set、validation set、testing set 用 readfile 函式讀進來

workspace_dir = './food-11'

print("Reading data")

train_x, train_y = readfile(os.path.join(workspace_dir, "training"), True)

print("Size of training data = {}".format(len(train_x)))

val_x, val_y = readfile(os.path.join(workspace_dir, "validation"), True)

print("Size of validation data = {}".format(len(val_x)))

test_x = readfile(os.path.join(workspace_dir, "testing"), False)

print("Size of Testing data = {}".format(len(test_x)))

# training 時做 data augmentation

train_transform = transforms.Compose([

transforms.ToPILImage(),

transforms.RandomHorizontalFlip(), # 隨機將圖片水平翻轉

transforms.RandomRotation(15), # 隨機旋轉圖片

transforms.ToTensor(), # 將圖片轉成 Tensor,並把數值 normalize 到 [0,1] (data normalization)

])

# testing 時不需做 data augmentation

test_transform = transforms.Compose([

transforms.ToPILImage(),

transforms.ToTensor(),

])

class ImgDataset(Dataset):

def __init__(self, x, y=None, transform=None):

self.x = x

# label is required to be a LongTensor

self.y = y

if y is not None:

self.y = torch.LongTensor(y)

self.transform = transform

def __len__(self):

return len(self.x)

def __getitem__(self, index):

X = self.x[index]

if self.transform is not None:

X = self.transform(X)

if self.y is not None:

Y = self.y[index]

return X, Y

else:

return X

batch_size = 64

train_set = ImgDataset(train_x, train_y, train_transform)

val_set = ImgDataset(val_x, val_y, test_transform)

train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)

val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)

class Classifier(nn.Module):

def __init__(self):

super(Classifier, self).__init__()

# torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)

# torch.nn.MaxPool2d(kernel_size, stride, padding)

# input 維度 [3, 128, 128]

self.cnn = nn.Sequential(

nn.Conv2d(3, 64, 3, 1, 1), # [64, 128, 128]

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0), # [64, 64, 64]

nn.Conv2d(64, 128, 3, 1, 1), # [128, 64, 64]

nn.BatchNorm2d(128),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0), # [128, 32, 32]

nn.Conv2d(128, 256, 3, 1, 1), # [256, 32, 32]

nn.BatchNorm2d(256),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0), # [256, 16, 16]

nn.Conv2d(256, 512, 3, 1, 1), # [512, 16, 16]

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0), # [512, 8, 8]

nn.Conv2d(512, 512, 3, 1, 1), # [512, 8, 8]

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2, 0), # [512, 4, 4]

)

self.fc = nn.Sequential(

nn.Linear(512 * 4 * 4, 1024),

nn.ReLU(),

nn.Linear(1024, 512),

nn.ReLU(),

nn.Linear(512, 11)

)

def forward(self, x):

out = self.cnn(x)

out = out.view(out.size()[0], -1)

return self.fc(out)

model = Classifier().cuda()

loss = nn.CrossEntropyLoss() # 因為是 classification task,所以 loss 使用 CrossEntropyLoss

optimizer = torch.optim.Adam(model.parameters(), lr=0.001) # optimizer 使用 Adam

num_epoch = 30

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

val_acc = 0.0

val_loss = 0.0

model.train() # 確保 model 是在 train model (開啟 Dropout 等...)

for i, data in enumerate(train_loader):

optimizer.zero_grad() # 用 optimizer 將 model 參數的 gradient 歸零

train_pred = model(data[0].cuda()) # 利用 model 得到預測的機率分佈 這邊實際上就是去呼叫 model 的 forward 函數

batch_loss = loss(train_pred, data[1].cuda()) # 計算 loss (注意 prediction 跟 label 必須同時在 CPU 或是 GPU 上)

batch_loss.backward() # 利用 back propagation 算出每個參數的 gradient

optimizer.step() # 以 optimizer 用 gradient 更新參數值

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())

train_loss += batch_loss.item()

model.eval()

with torch.no_grad():

for i, data in enumerate(val_loader):

val_pred = model(data[0].cuda())

batch_loss = loss(val_pred, data[1].cuda())

val_acc += np.sum(np.argmax(val_pred.cpu().data.numpy(), axis=1) == data[1].numpy())

val_loss += batch_loss.item()

# 將結果 print 出來

print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' % \

(epoch + 1, num_epoch, time.time() - epoch_start_time, \

train_acc / train_set.__len__(), train_loss / train_set.__len__(), val_acc / val_set.__len__(),

val_loss / val_set.__len__()))

train_val_x = np.concatenate((train_x, val_x), axis=0)

train_val_y = np.concatenate((train_y, val_y), axis=0)

train_val_set = ImgDataset(train_val_x, train_val_y, train_transform)

train_val_loader = DataLoader(train_val_set, batch_size=batch_size, shuffle=True)

model_best = Classifier().cuda()

loss = nn.CrossEntropyLoss() # 因為是 classification task,所以 loss 使用 CrossEntropyLoss

optimizer = torch.optim.Adam(model_best.parameters(), lr=0.001) # optimizer 使用 Adam

num_epoch = 30

for epoch in range(num_epoch):

epoch_start_time = time.time()

train_acc = 0.0

train_loss = 0.0

model_best.train()

for i, data in enumerate(train_val_loader):

optimizer.zero_grad()

train_pred = model_best(data[0].cuda())

batch_loss = loss(train_pred, data[1].cuda())

batch_loss.backward()

optimizer.step()

train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())

train_loss += batch_loss.item()

#將結果 print 出來

print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f' % \

(epoch + 1, num_epoch, time.time()-epoch_start_time, \

train_acc/train_val_set.__len__(), train_loss/train_val_set.__len__()))

test_set = ImgDataset(test_x, transform=test_transform)

test_loader = DataLoader(test_set, batch_size=batch_size, shuffle=False)

model_best.eval()

prediction = []

with torch.no_grad():

for i, data in enumerate(test_loader):

test_pred = model_best(data.cuda())

test_label = np.argmax(test_pred.cpu().data.numpy(), axis=1)

for y in test_label:

prediction.append(y)

#將結果寫入 csv 檔

with open("predict.csv", 'w') as f:

f.write('Id,Category\n')

for i, y in enumerate(prediction):

f.write('{},{}\n'.format(i, y))