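Kubernetes Ingress: Layer-7 Proxying

Introduction to Ingress

The official Kubernetes documentation defines Ingress as an object that forwards requests entering the cluster to Services inside it, which exposes those Services outside the cluster. An Ingress can give in-cluster Services externally reachable URLs, load-balance traffic, and provide name-based virtual hosting.

Put simply: you used to edit the Nginx configuration by hand to map each domain to a Service. That step is now abstracted into an Ingress object that you create from YAML. Instead of touching Nginx, you edit the YAML and create or update the object. That raises the question: what happens to Nginx itself?

The Ingress Controller answers that question. It talks to the Kubernetes API, watches for changes to Ingress rules in the cluster, reads them, renders an Nginx configuration from its own template, writes it into the Nginx Pod, and finally reloads Nginx.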
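The "read the rule, render a config from a template" step can be pictured with a minimal sketch. The template text, the `render_server` function and the dict layout are all hypothetical and heavily simplified; the real controller uses a much larger template and does not proxy to a Service name this way.

```python
from string import Template

# Hypothetical, simplified templates (not the controller's real template).
SERVER_TEMPLATE = Template(
    "server {\n"
    "    listen 80;\n"
    "    server_name $host;\n"
    "$locations"
    "}\n"
)
LOCATION_TEMPLATE = Template(
    "    location $path {\n"
    "        proxy_pass http://$service:$port;\n"
    "    }\n"
)


def render_server(rule):
    """Render one Ingress-like rule (a plain dict) into an nginx server block."""
    locations = "".join(
        LOCATION_TEMPLATE.substitute(
            path=entry["path"], service=entry["service"], port=entry["port"]
        )
        for entry in rule["paths"]
    )
    return SERVER_TEMPLATE.substitute(host=rule["host"], locations=locations)


# An Ingress rule reduced to the fields this sketch needs.
rule = {
    "host": "tomcat.test.com",
    "paths": [{"path": "/", "service": "tomcat", "port": 8080}],
}
config = render_server(rule)
print(config)
```

Whenever a rule changes, a controller built this way would render the text again and reload Nginx.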

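Introduction to the Ingress Controller

An Ingress Controller is a layer-7 load balancer. Client requests reach it first, and it reverse-proxies them to the backend Pods. Common layer-7 load balancers include nginx and traefik. Take the familiar nginx as an example: a request that reaches nginx is reverse-proxied to the backend Pods through an upstream. Pod IP addresses change all the time, though, so a Service is placed in front of the Pods. The Service only groups the Pods, and the upstream then needs nothing but the Service address.

Summary

The Ingress Controller can be thought of as a controller: it constantly talks to the Kubernetes API to pick up changes to backend Services and Pods (additions, deletions and so on) in real time, combines them with the rules defined by Ingress objects to generate configuration, then dynamically updates the Nginx or traefik load balancer described above and reloads it so that the configuration takes effect. This is how service discovery becomes automatic.

An Ingress defines the rules: requests for a given domain are forwarded to a specified Service in the cluster. It is defined in a YAML file, and one or more Ingress rules can be defined for one or more Services.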
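As a rough illustration of what "a rule maps a domain to a Service" means at request time, the sketch below picks a Service from a host and a path. It assumes Prefix-style matching on whole path segments and "longest path wins"; the `route` helper, the dict layout and the `tomcat-api` Service are made up for the example.

```python
def prefix_matches(rule_path, request_path):
    """Match whole path segments, so /api matches /api/v1 but not /apiv1."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[:len(rule_parts)] == rule_parts


def route(rules, host, path):
    """Return the Service of the longest matching path for this host, or None."""
    best = None
    for rule in rules:
        if rule["host"] != host:
            continue
        for entry in rule["paths"]:
            if prefix_matches(entry["path"], path):
                if best is None or len(entry["path"]) > len(best["path"]):
                    best = entry
    return best["service"] if best else None


rules = [
    {
        "host": "tomcat.test.com",
        "paths": [
            {"path": "/", "service": "tomcat"},
            {"path": "/api", "service": "tomcat-api"},  # hypothetical second Service
        ],
    },
]
print(route(rules, "tomcat.test.com", "/api/v1"))
print(route(rules, "tomcat.test.com", "/apiv1"))
print(route(rules, "other.test.com", "/"))
```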

Workflow for proxying in-cluster applications with an Ingress Controller

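- (1) Deploy the Ingress Controller; this guide uses nginx as the ingress controller

- (2) Create the application Pods; they can be created through a controller

- (3) Create a Service to group the Pods

- (4) Create an HTTP Ingress and test access to the application over HTTP

- (5) Create an HTTPS Ingress and test access to the application over HTTPS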

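How clients reach backend Pods through the layer-7 load balancer

When a client accesses an application inside the Kubernetes cluster through the layer-7 load balancer (the ingress controller), the packets travel from the client to the ingress controller and from there to the backend Pods grouped by the Service.

High availability for the Ingress Controller

Reference: https://github.com/kubernetes/ingress-nginx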

https://github.com/kubernetes/ingress-nginx/tree/main/deploy/static/provider/baremetal

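The Ingress Controller is the entry layer for cluster traffic, so making it highly available matters a great deal. One option is to build high availability for nginx-ingress-controller on keepalived, as follows:

The ingress controller is deployed as a Deployment with a nodeSelector and Pod anti-affinity onto two designated worker nodes. The nginx-ingress-controller Pods share the host's IP, and keepalived plus nginx then provides high availability for nginx-ingress-controller.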
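The effect of combining a nodeSelector with Pod anti-affinity can be sketched as a small placement simulation. This is only a toy model: it assumes the anti-affinity is a hard ("required") rule, and the function names and the `ingress-nginx` label value are invented for the example.

```python
def schedulable_nodes(nodes, selector, placed, app_label):
    """Nodes that carry every selector label and do not yet run a pod with app_label."""
    result = []
    for name, labels in nodes.items():
        has_labels = all(labels.get(key) == value for key, value in selector.items())
        already_hosts = app_label in placed.get(name, [])
        if has_labels and not already_hosts:
            result.append(name)
    return result


def place_replicas(nodes, selector, app_label, replicas):
    """Place replicas one by one; a replica with no candidate node stays unplaced."""
    placed = {}
    for _ in range(replicas):
        candidates = schedulable_nodes(nodes, selector, placed, app_label)
        if not candidates:
            break  # in a real cluster this replica would stay Pending
        placed.setdefault(sorted(candidates)[0], []).append(app_label)
    return placed


nodes = {
    "master1": {},
    "node1": {"kubernetes.io/ingress": "nginx"},
    "node2": {"kubernetes.io/ingress": "nginx"},
}
selector = {"kubernetes.io/ingress": "nginx"}
print(place_replicas(nodes, selector, "ingress-nginx", 2))
```

With two labeled nodes and two replicas, each node ends up with exactly one controller Pod, which is the layout keepalived relies on.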

[root@master1 ~]# kubectl label node node1 kubernetes.io/ingress=nginx

node/node1 labeled

[root@master1 ~]# kubectl label node node2 kubernetes.io/ingress=nginx

node/node2 labeled

[root@node1 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0

[root@node1 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1


[root@node2 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0

[root@node2 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

[root@master1 5555]# kubectl apply -f ingress-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created


[root@master1 5555]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-f7wxh 0/1 Completed 0 35s 10.1.166.182 node1 <none> <none>

ingress-nginx-admission-patch-stn7t 0/1 Completed 1 35s 10.1.104.34 node2 <none> <none>

ingress-nginx-controller-6c8ffbbfcf-h767r 1/1 Running 0 35s 192.168.0.183 node1 <none> <none>

ingress-nginx-controller-6c8ffbbfcf-vczrv 1/1 Running 0 35s 192.168.0.184 node2 <none> <none>

High availability for nginx-ingress-controller with keepalived + nginx

- Install nginx on the primary and the backup

[root@node1 ~]# yum install nginx keepalived -y

[root@node1 ~]# yum install nginx-mod-stream -y

[root@node2 ~]# yum install nginx keepalived -y

[root@node2 ~]# yum install nginx-mod-stream -y

- Edit the nginx configuration file (identical on the primary and the backup)

[root@node1 ~]# cat /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing across the ingress controllers on the two worker nodes

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.0.183:80; # ingress controller on node1, IP:PORT

server 192.168.0.184:80; # ingress controller on node2, IP:PORT

}

server {

listen 30080;

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

}

[root@node1 ~]# scp /etc/nginx/nginx.conf node2:/etc/nginx/nginx.conf

nginx.conf 100% 1167 1.8MB/s 00:00

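Note: the ingress controller already occupies ports 80 and 443 on the host, so this nginx must listen on a different port. This guide uses a port above 30000, here 30080, and the site is then reached as domain:30080.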
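The upstream block in the configuration above lists two backends without weights, which nginx serves in rotation. A minimal sketch of that rotation follows; `pick_backends` and the `node1:80` / `node2:80` addresses are placeholders, and real nginx also applies weights and failure timeouts that are left out here.

```python
from itertools import cycle


def pick_backends(backends, down, count):
    """Return `count` picks in round-robin order, skipping backends marked as down."""
    alive = [backend for backend in backends if backend not in down]
    if not alive:
        return []
    rotation = cycle(alive)
    return [next(rotation) for _ in range(count)]


backends = ["node1:80", "node2:80"]  # placeholder addresses
print(pick_backends(backends, down=set(), count=4))
print(pick_backends(backends, down={"node1:80"}, count=2))
```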

- keepalived configuration

Primary keepalived


[root@node1 ~]# vim /etc/keepalived/keepalived.conf


[root@node1 ~]# cp /etc/keepalived/keepalived.conf ./

[root@node1 ~]# vim /etc/keepalived/keepalived.conf


[root@node1 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

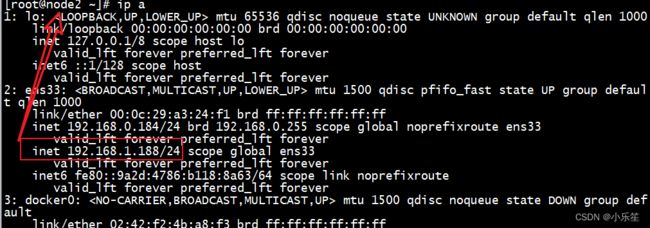

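interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID, unique to each instance

priority 100 # priority; set 90 on the backup server

advert_int 1 # interval between VRRP heartbeat advertisements, 1 second by default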

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

192.168.0.188/24

}

track_script {

check_nginx

}

}

# vrrp_script: the script that checks whether nginx is working (failover is decided from nginx's state)

# virtual_ipaddress: the virtual IP (VIP)
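How the priority values decide which node holds the VIP can be sketched as a tiny election. This is a simplified model that assumes preemption is enabled and treats "keepalived is running" as a boolean; `elect_master` and the node dicts are made up for the illustration.

```python
def elect_master(nodes):
    """Return the name of the healthy node with the highest priority, or None."""
    healthy = [node for node in nodes if node["healthy"]]
    if not healthy:
        return None
    return max(healthy, key=lambda node: node["priority"])["name"]


nodes = [
    {"name": "node1", "priority": 100, "healthy": True},
    {"name": "node2", "priority": 90, "healthy": True},
]
print(elect_master(nodes))   # node1 holds the VIP

nodes[0]["healthy"] = False  # keepalived stopped on node1
print(elect_master(nodes))   # the VIP moves to node2

nodes[0]["healthy"] = True   # keepalived started again on node1
print(elect_master(nodes))   # node1 takes the VIP back
```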

[root@node1 ~]# vim /etc/keepalived/check_nginx.sh

[root@node1 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@node1 ~]# cat /etc/keepalived/check_nginx.sh

#!/bin/bash
# 1. Check whether Nginx is alive
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    # 2. If it is not, try to start Nginx
    service nginx start
    sleep 2
    # 3. After waiting 2 seconds, check the Nginx state again
    counter=$(ps -C nginx --no-header | wc -l)
    # 4. If Nginx is still down, stop keepalived so that the VIP fails over
    if [ "$counter" -eq 0 ]; then
        service keepalived stop
    fi
fi
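The decision logic of the check script is easier to test when the side effects are passed in as functions. The sketch below mirrors the same three outcomes; `check_nginx` and its callback parameters are hypothetical names, and the demo replaces the real process checks with fakes.

```python
import time


def check_nginx(is_running, start_nginx, stop_keepalived, wait=time.sleep):
    """Restart nginx once if it is down; give up the VIP if the restart fails."""
    if is_running():
        return "ok"
    start_nginx()
    wait(2)
    if is_running():
        return "restarted"
    stop_keepalived()  # lets the backup node take over the VIP
    return "failed-over"


# Demo with fakes: nginx is down and the restart attempt does not bring it back.
calls = []
result = check_nginx(
    is_running=lambda: False,
    start_nginx=lambda: calls.append("start nginx"),
    stop_keepalived=lambda: calls.append("stop keepalived"),
    wait=lambda seconds: None,  # skip the real 2-second pause in the demo
)
print(result, calls)
```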

Backup keepalived

[root@node2 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51 # VRRP router ID, unique to each instance

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.0.188/24

}

track_script {

check_nginx

}

}

[root@node2 ~]# vim /etc/keepalived/check_nginx.sh


[root@node2 ~]# cat /etc/keepalived/check_nginx.sh

#!/bin/bash
# 1. Check whether Nginx is alive
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    # 2. If it is not, try to start Nginx
    service nginx start
    sleep 2
    # 3. After waiting 2 seconds, check the Nginx state again
    counter=$(ps -C nginx --no-header | wc -l)
    # 4. If Nginx is still down, stop keepalived so that the VIP fails over
    if [ "$counter" -eq 0 ]; then
        service keepalived stop
    fi
fi

[root@node2 ~]# chmod +x /etc/keepalived/check_nginx.sh

- Start the services

[root@node1 ~]# systemctl daemon-reload


[root@node1 ~]# systemctl enable nginx keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@node1 ~]# systemctl start nginx

[root@node1 ~]# systemctl start keepalived


[root@node2 ~]# systemctl daemon-reload


[root@node2 ~]# systemctl enable nginx keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@node2 ~]# systemctl start nginx

[root@node2 ~]# systemctl start keepalived

[root@node1 ~]# systemctl stop keepalived

Stopping keepalived on node1, as above, hands the VIP to node2. Start keepalived on node1 again and the VIP floats back to node1

[root@node1 ~]# systemctl start keepalived

Testing Ingress as an HTTP proxy for an in-cluster site

Deploy the backend tomcat service

[root@master1 ingress]# cat ingress-demo.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
  namespace: default
spec:
  selector:
    app: tomcat
    release: canary
  ports:
  - name: scport
    targetPort: 8080
    port: 8080 # must be the port that the Ingress backend references
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat
      release: canary
  template:
    metadata:
      labels:
        app: tomcat
        release: canary
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5.34-jre8-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: containerport
          containerPort: 8080
        - name: ajp
          containerPort: 8009

- Check that the Pods were deployed successfully

[root@master1 ingress]# kubectl apply -f ingress-demo.yaml

service/tomcat created

deployment.apps/tomcat-deploy created

[root@master1 ingress]# kubectl get pods -l app=tomcat

NAME READY STATUS RESTARTS AGE

tomcat-deploy-66b67fcf7b-njpm9 1/1 Running 0 47s

tomcat-deploy-66b67fcf7b-wp6rd 1/1 Running 0 47s

Write the Ingress rule

[root@master1 ingress]# cat ingress-myapp.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-myapp
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules: # forwarding rules for the backends
  - host: tomcat.test.com # route by domain name
    http:
      paths:
      - path: / # request path; change it to route by URL. An empty value defaults to "/"
        pathType: Prefix
        backend: # backend service
          service:
            name: tomcat # the Service this rule points to
            port:
              number: 8080 # port exposed by the Service

- View the details of ingress-myapp

[root@master1 ingress]# kubectl apply -f ingress-myapp.yaml

ingress.networking.k8s.io/ingress-myapp created

[root@master1 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-myapp <none> tomcat.test.com 192.168.0.183,192.168.0.184 80 46s

[root@master1 ingress]# kubectl describe ingress ingress-myapp

Name: ingress-myapp

Namespace: default

Address: 192.168.0.183,192.168.0.184

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

tomcat.test.com

/ tomcat:8080 ()

Annotations: kubernetes.io/ingress.class: nginx

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 40s (x2 over 74s) nginx-ingress-controller Scheduled for sync

Normal Sync 40s (x2 over 74s) nginx-ingress-controller Scheduled for sync

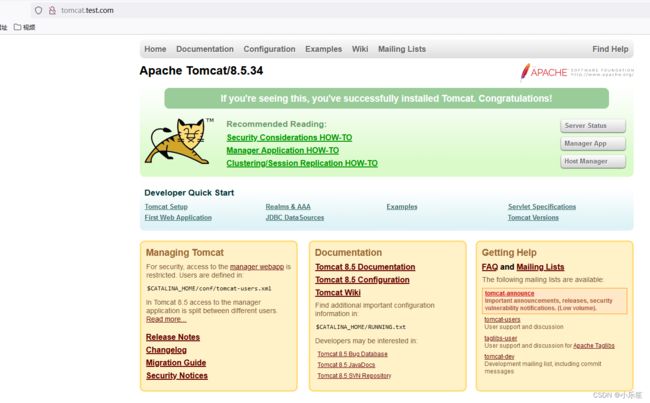

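Edit the hosts file on your local machine and add the following line. The IP below is the keepalived VIP

192.168.0.188 tomcat.test.com

- Open tomcat.test.com in a browser

Summary:

A Deployment with nodeSelector and Pod anti-affinity schedules the ingress controller onto node1 and node2

keepalived + nginx makes the ingress controller highly available

Test whether Ingress layer-7 proxying works as expected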

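Canary releases with ingress-nginx

Scenario 1: release the new version to a subset of users

Suppose Service A is running in production and serving layer-7 traffic. A new version, Service A', has been developed and is ready to go live, but you do not want to replace Service A outright. Instead you want to expose the new version to a small group of users first, roll it out fully once it has run long enough to prove stable, and finally retire the old version smoothly. Nginx Ingress supports this with traffic splitting based on a Header or a Cookie: the application marks different classes of users with a Header or Cookie, and the Ingress is configured so that requests carrying the specified Header or Cookie go to the new version while all others still go to the old one. The new version is thus released to a subset of users: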

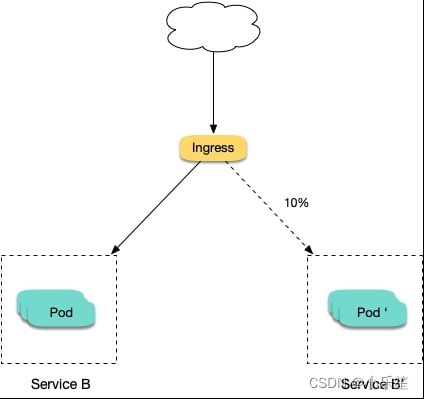

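Scenario 2: shift a percentage of traffic to the new version

Suppose Service B is running in production and serving layer-7 traffic. Some problems have been fixed and a new version, Service B', needs a canary rollout, but you do not want to replace Service B outright. Instead you shift 10% of the traffic to the new version, watch it for a while, then gradually raise the share until the new version has fully replaced the old one, and finally retire the old version smoothly. A percentage of traffic is thus shifted to the new version.

Assume two versions of a service are deployed: the old version and the canary version.

Ingress-Nginx is a Kubernetes ingress tool that supports canary releases and testing in different scenarios through Ingress annotations. The Nginx annotations support the following canary rules:

nginx.ingress.kubernetes.io/canary-by-header: traffic splitting based on a request header, suited to canary releases and A/B testing. When the request header is set to always, the request is always sent to the canary version; when it is set to never, the request is never sent to the canary.

nginx.ingress.kubernetes.io/canary-by-header-value: the request header value to match, which tells the Ingress to route the request to the Service specified in the canary Ingress. When the request header is set to this value, the request is routed to the canary.

nginx.ingress.kubernetes.io/canary-weight: traffic splitting based on weight, suited to blue-green deployments. The weight ranges from 0 to 100 and routes that percentage of requests to the Service specified in the canary Ingress. A weight of 0 means the canary rule sends no requests to the canary Service, a weight of 60 sends 60% of the traffic to the canary, and a weight of 100 sends all requests to the canary.

nginx.ingress.kubernetes.io/canary-by-cookie: traffic splitting based on a cookie, suited to canary releases and A/B testing. It names the cookie that tells the Ingress to route the request to the Service specified in the canary Ingress. When the cookie value is always, the request is routed to the canary; when it is never, the request is not sent to the canary.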
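When several of these annotations are set on the same canary Ingress, they have to be evaluated in some order. The sketch below assumes the order header, then cookie, then weight, and that an unrecognised header or cookie value falls through to the next rule. `routes_to_canary` is a hypothetical helper written for this illustration, not the controller's code.

```python
import random

PREFIX = "nginx.ingress.kubernetes.io/"


def routes_to_canary(headers, cookies, annotations, rng=random.random):
    """Decide whether one request goes to the canary backend."""
    header_name = annotations.get(PREFIX + "canary-by-header")
    if header_name is not None and header_name in headers:
        value = headers[header_name]
        expected = annotations.get(PREFIX + "canary-by-header-value")
        if expected is not None:
            if value == expected:
                return True
        elif value == "always":
            return True
        elif value == "never":
            return False
        # Any other value: fall through to the next rule.

    cookie_name = annotations.get(PREFIX + "canary-by-cookie")
    if cookie_name is not None:
        value = cookies.get(cookie_name)
        if value == "always":
            return True
        if value == "never":
            return False

    weight = int(annotations.get(PREFIX + "canary-weight", "0"))
    return rng() * 100 < weight


annotations = {
    PREFIX + "canary-by-cookie": "user_from_cd",
    PREFIX + "canary-weight": "10",
}
print(routes_to_canary({}, {"user_from_cd": "always"}, annotations))  # True
print(routes_to_canary({}, {"user_from_cd": "never"}, annotations))   # False
```

A request without the cookie falls through to the weight rule, so it reaches the canary about one time in ten.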

- Deploy the two versions of the service

[root@master1 ingress]# cat v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v1
  template:
    metadata:
      labels:
        app: nginx
        version: v1
    spec:
      containers:
      - name: nginx
        image: openresty/openresty:centos
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v1
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v1
  name: nginx-v1
data:
  nginx.conf: |-
    worker_processes 1;
    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections 1024;
    }
    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v1")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v1
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v1

v2

[root@master1 ingress]# cat v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v2
  template:
    metadata:
      labels:
        app: nginx
        version: v2
    spec:
      containers:
      - name: nginx
        image: openresty/openresty:centos
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v2
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v2
  name: nginx-v2
data:
  nginx.conf: |-
    worker_processes 1;
    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections 1024;
    }
    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v2")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v2
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v2

[root@master1 ingress]# kubectl apply -f v1.yaml

deployment.apps/nginx-v1 created

configmap/nginx-v1 created

service/nginx-v1 created

[root@master1 ingress]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-provisioner-75c9ccfb8f-96b22 1/1 Running 7 3d21h

nginx-v1-79bc94ff97-pnzq7 1/1 Running 0 4s

[root@master1 ingress]# kubectl apply -f v2.yaml

deployment.apps/nginx-v2 created

configmap/nginx-v2 created

service/nginx-v2 created

[root@master1 ingress]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-provisioner-75c9ccfb8f-96b22 1/1 Running 7 3d21h

nginx-v1-79bc94ff97-pnzq7 1/1 Running 0 10s

nginx-v2-5f885975d5-lm548 1/1 Running 0 1s

Create an Ingress that exposes the service and points to the v1 version

[root@master1 ingress]# cat v1-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: / # request path; change it to route by URL. An empty value defaults to "/"
        pathType: Prefix
        backend: # backend service
          service:
            name: nginx-v1
            port:
              number: 80

[root@master1 ingress]# kubectl apply -f v1-ingress.yaml

ingress.networking.k8s.io/nginx created

- Verify access

curl -H "Host: canary.example.com" http://EXTERNAL-IP # replace EXTERNAL-IP with the IP that the Nginx Ingress itself exposes

[root@master1 ingress]# curl -H "Host: canary.example.com" http://192.168.0.188

nginx-v1
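The same check can be scripted. The sketch below only builds the request, so it runs without a cluster; `build_request` is a hypothetical helper, and the IP is the VIP used in this guide.

```python
import urllib.request


def build_request(external_ip, host, extra_headers=None):
    """Build (but do not send) a GET request aimed at the ingress IP with a chosen Host header."""
    request = urllib.request.Request(f"http://{external_ip}/")
    request.add_header("Host", host)
    for name, value in (extra_headers or {}).items():
        request.add_header(name, value)
    return request


request = build_request("192.168.0.188", "canary.example.com")
print(request.full_url, request.get_header("Host"))

# To actually send it against a live ingress:
# body = urllib.request.urlopen(request, timeout=5).read().decode()
```

Passing `extra_headers={"Region": "cd"}` would correspond to adding `-H "Region: cd"` to the curl command.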

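Header-based traffic splitting

Create a canary Ingress that specifies the v2 backend Service and carries annotations so that only requests with a header named Region whose value is cd or sz are forwarded to this canary Ingress. This simulates releasing the new version to users in the Chengdu and Shenzhen regions: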

[root@master1 ingress]# cat v2-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "Region"
    nginx.ingress.kubernetes.io/canary-by-header-pattern: "cd|sz"
  name: nginx-canary
spec:
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: / # request path; change it to route by URL. An empty value defaults to "/"
        pathType: Prefix
        backend: # backend service
          service:
            name: nginx-v2
            port:
              number: 80

- Test access:

curl -H "Host: canary.example.com" -H "Region: cd" http://EXTERNAL-IP # replace EXTERNAL-IP with the IP that the Nginx Ingress itself exposes

[root@master1 ingress]# kubectl apply -f v2-ingress.yaml

ingress.networking.k8s.io/nginx-canary created

[root@master1 ingress]# curl -H "Host: canary.example.com" -H "Region: cd" http://192.168.0.188

nginx-v2

[root@master1 ingress]# curl -H "Host: canary.example.com" -H "Region: sz" http://192.168.0.188

nginx-v2

[root@master1 ingress]# curl -H "Host: canary.example.com" -H "Region: sh" http://192.168.0.188

nginx-v1

The tests above show that only requests whose Region header is cd or sz are answered by the v2 Service; all others are answered by v1
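The pattern `cd|sz` is a regular expression with two alternatives. A small sketch reproduces the three results seen in the test. It uses Python's `re` module with an unanchored search, which is an assumption about how the controller applies the pattern; `header_matches` is a made-up name.

```python
import re


def header_matches(pattern, value):
    """True if the pattern matches anywhere in the header value (unanchored; an assumption)."""
    return re.search(pattern, value) is not None


for region in ("cd", "sz", "sh"):
    backend = "nginx-v2" if header_matches("cd|sz", region) else "nginx-v1"
    print(region, "->", backend)
```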

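Cookie-based traffic splitting:

This works like the header-based approach, except that with a cookie you cannot define a custom value. The example simulates a canary release to users in the Chengdu region: only requests carrying a cookie named user_from_cd set to always are forwarded to this canary Ingress. First delete the header-based canary Ingress created above, then create the new canary Ingress below: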

[root@master1 ingress]# kubectl delete -f v2-ingress.yaml

ingress.networking.k8s.io "nginx-canary" deleted

[root@master1 ingress]# cat v1-cookie.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-cookie: "user_from_cd"
  name: nginx-canary
spec:
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: / # request path; change it to route by URL. An empty value defaults to "/"
        pathType: Prefix
        backend: # backend service
          service:
            name: nginx-v2
            port:
              number: 80

- Test access:

curl -s -H "Host: canary.example.com" --cookie "user_from_cd=always" http://EXTERNAL-IP # replace EXTERNAL-IP with the IP that the Nginx Ingress itself exposes

[root@master1 ingress]# kubectl apply -f v1-cookie.yaml

ingress.networking.k8s.io/nginx-canary configured

[root@master1 ingress]# curl -s -H "Host: canary.example.com" --cookie "user_from_cd=always" http://192.168.0.188

nginx-v2

[root@master1 ingress]# curl -s -H "Host: canary.example.com" --cookie "user_from_bj=always" http://192.168.0.188

nginx-v1

[root@master1 ingress]# curl -s -H "Host: canary.example.com" http://192.168.0.188

nginx-v1

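The tests above show that only requests whose user_from_cd cookie is set to always are answered by the v2 Service

Weight-based traffic splitting

A weight-based canary Ingress is simpler: you state the share of traffic to send to the canary directly. The example sends 10% of the traffic to v2 (delete any earlier canary Ingress first):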

[root@master1 ingress]# kubectl delete -f v1-cookie.yaml

ingress.networking.k8s.io "nginx-canary" deleted

[root@master1 ingress]# cat v1-weight.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"
  name: nginx-canary
spec:
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: / # request path; change it to route by URL. An empty value defaults to "/"
        pathType: Prefix
        backend: # backend service
          service:
            name: nginx-v2
            port:
              number: 80

- Test access

for i in {1..10}; do curl -H "Host: canary.example.com" http://EXTERNAL-IP; done;

[root@master1 ingress]# for i in {1..10}; do curl -H "Host: canary.example.com" http://192.168.0.188; done;

nginx-v2

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v2

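In this run 2 of the 10 requests were answered by v2. With so few requests the share fluctuates, but over many requests roughly one in ten is answered by the v2 Service, in line with the 10% weight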
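Ten requests are too few to see the percentage clearly. A quick simulation shows how the share settles near the configured weight as the number of requests grows. It assumes each request is sent to the canary independently with probability weight/100; `simulate` is a hypothetical helper, not part of ingress-nginx.

```python
import random


def simulate(weight, requests, seed=0):
    """Return the fraction of simulated requests that land on the canary."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    canary_hits = sum(1 for _ in range(requests) if rng.random() * 100 < weight)
    return canary_hits / requests


for count in (10, 100, 10_000):
    print(count, f"{simulate(10, count):.1%}")
```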